Author: Denis Avetisyan

A new approach leverages the power of artificial intelligence to proactively identify faults and anomalies in electrical distribution systems.

This review details the application of convolutional neural network-based autoencoders for fault detection in power grids using time-series current data.

Despite advancements in power system protection, reliable and accurate fault detection remains a significant challenge due to the inherent complexity and probabilistic nature of electrical disturbances. This paper, ‘Fault Detection in Electrical Distribution System using Autoencoders’, introduces an anomaly-based approach that uses deep convolutional autoencoders to identify faults in electrical distribution systems by learning normal operating conditions from time-series current data. With reported accuracy of up to 99.92% on benchmark datasets, the method offers a promising alternative to traditional techniques. Could this approach facilitate more resilient and intelligent power grid management in the face of increasing system complexity and demand?

The Inevitable Cascade: Detecting Faults in Complex Systems

Conventional fault detection techniques, designed for simpler, more predictable power grids, are increasingly challenged by the intricacies of modern systems. These grids, characterized by distributed generation from renewable sources, bi-directional power flow, and the integration of smart technologies, present a dynamic and non-linear operating environment. Traditional methods, often reliant on sequential data analysis and pre-defined fault signatures, struggle to accurately identify and isolate faults amidst this operational complexity. The proliferation of distributed energy resources introduces intermittent behavior and fluctuating power injections, masking fault signatures and increasing the likelihood of misdiagnosis. Consequently, these limitations hinder rapid response and contribute to potential instability, necessitating the development of more robust and adaptive fault detection strategies capable of navigating the evolving landscape of power system operation.

The integrity of any power system hinges on the swift and precise pinpointing of faults. A delay, even of milliseconds, in identifying a short circuit or ground fault can initiate a cascade of events, potentially leading to widespread blackouts and significant infrastructure damage. This is because faults disrupt the delicate balance between power generation and demand, causing voltage and frequency deviations that, if unchecked, propagate through the network. Rapid fault identification allows protective devices – such as circuit breakers and relays – to isolate the faulted section, preventing further disturbance and maintaining the stability of the remaining grid. Consequently, research and development continually focus on enhancing fault detection speed and accuracy, employing advanced technologies to minimize the risk of cascading failures and ensure a reliable power supply.

Current fault detection methodologies in power systems frequently encounter limitations when applied to increasingly complex grid architectures. Many established techniques rely on extensive computational resources, hindering their real-time applicability, particularly as grid size and data volume expand. Moreover, these methods often demonstrate poor generalization capabilities, meaning a system trained on a specific set of fault scenarios struggles to accurately identify unforeseen disturbances. This lack of adaptability stems from an over-reliance on pre-defined fault signatures and a limited capacity to learn from novel events. Consequently, a reliance on computationally intensive, yet inflexible, approaches poses a significant risk, potentially leading to delayed or inaccurate fault identification and increasing the susceptibility of the power grid to instability and widespread outages.

Learning the System’s Baseline: A Convolutional Approach

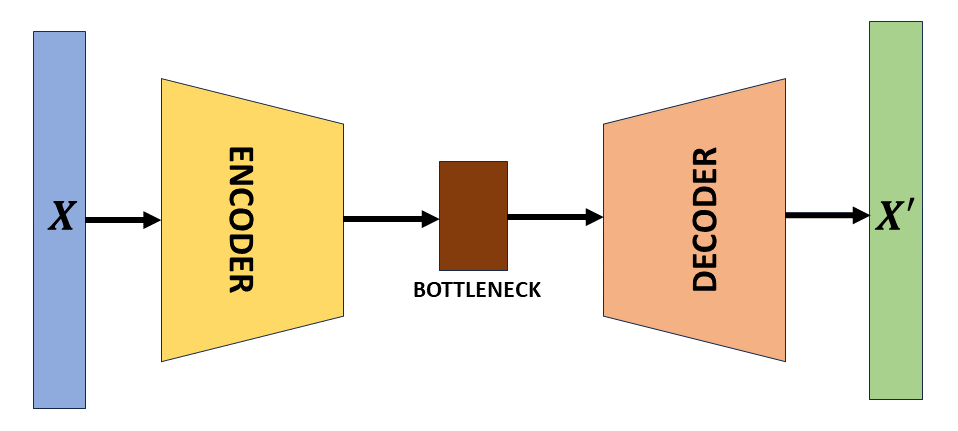

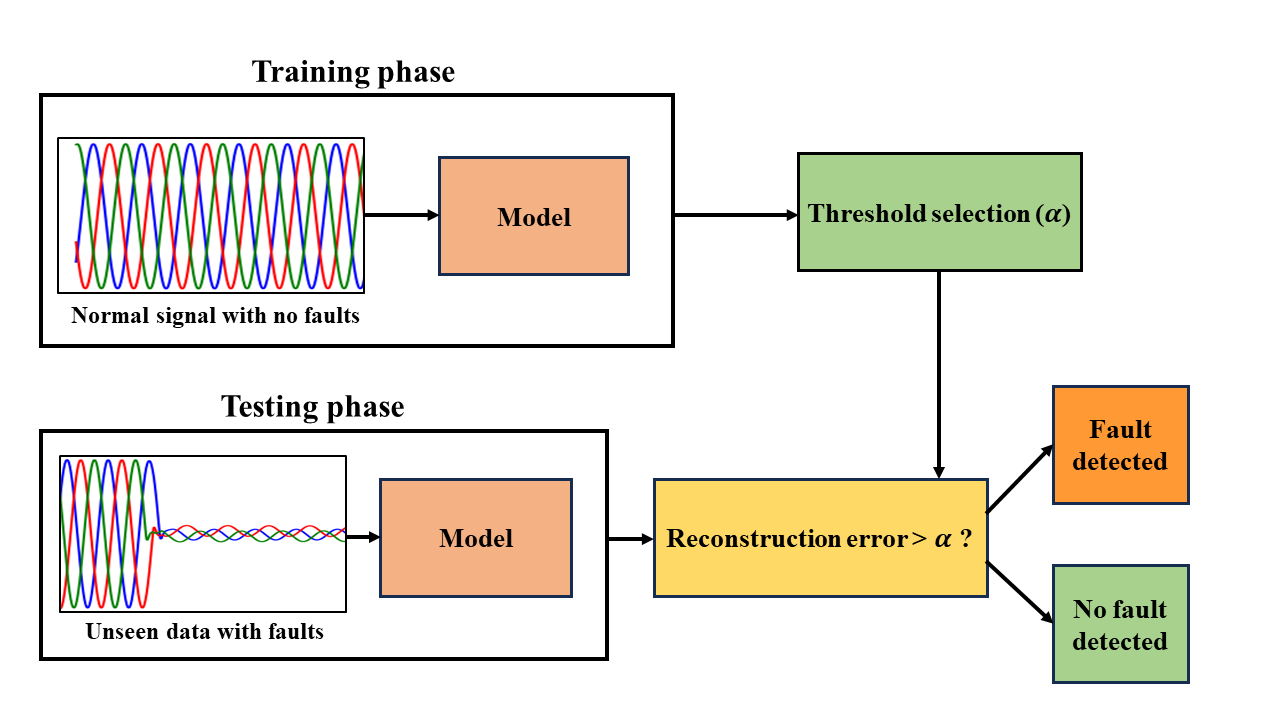

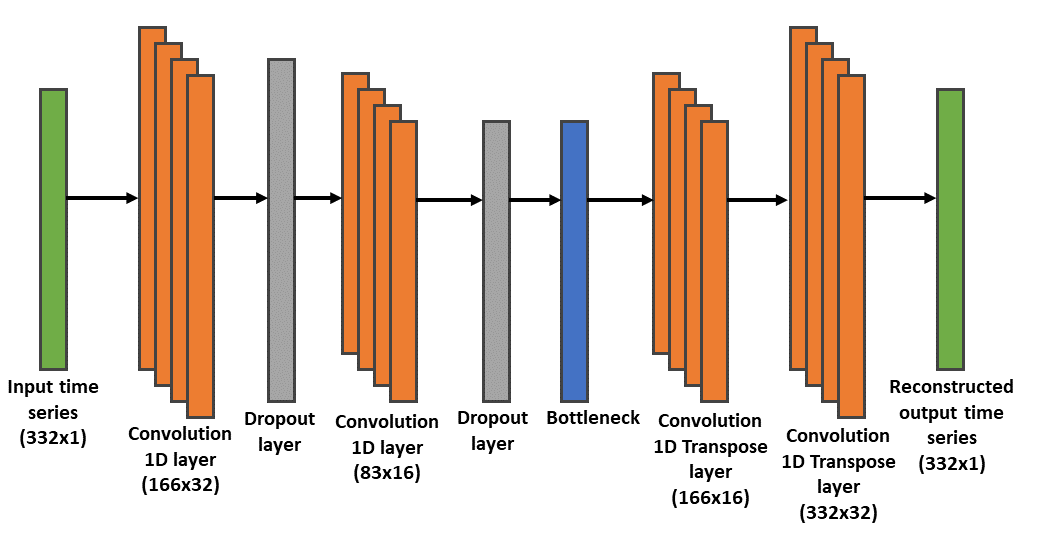

A convolutional autoencoder, that is, an autoencoder built from convolutional neural network (CNN) layers, is used to establish a baseline of normal operating characteristics from time-series data. The network is trained exclusively on data representing typical system behavior and learns a compressed, latent representation of the input. It then attempts to reconstruct the original input from this compressed representation; a close reconstruction indicates data consistent with the learned normal behavior. The trained model serves as a reference point for anomaly detection: deviations between the input and its reconstruction are flagged as potential indicators of abnormal system states. The convolutional layers extract the local features and temporal dependencies present in the time series, improving the model’s ability to represent normal operation and, in turn, to identify deviations from it.
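The encode-then-reconstruct idea described above can be written compactly. The notation below is an illustrative assumption rather than the paper's own: $f_\theta$ is the convolutional encoder, $g_\phi$ the decoder, $x^{(i)}$ the $i$-th fault-free training window of $T$ samples, and $N$ the number of training windows. The summary does not name the training loss, so mean squared error is assumed here as a common choice.

```latex
\hat{x}^{(i)} = g_\phi\!\bigl(f_\theta(x^{(i)})\bigr),
\qquad
\mathcal{L}(\theta,\phi) = \frac{1}{N}\sum_{i=1}^{N}\frac{1}{T}\sum_{t=1}^{T}
\bigl(x^{(i)}_t - \hat{x}^{(i)}_t\bigr)^2
```

Because only normal windows enter the sum, minimizing $\mathcal{L}$ gives the model no incentive to reconstruct fault patterns well.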

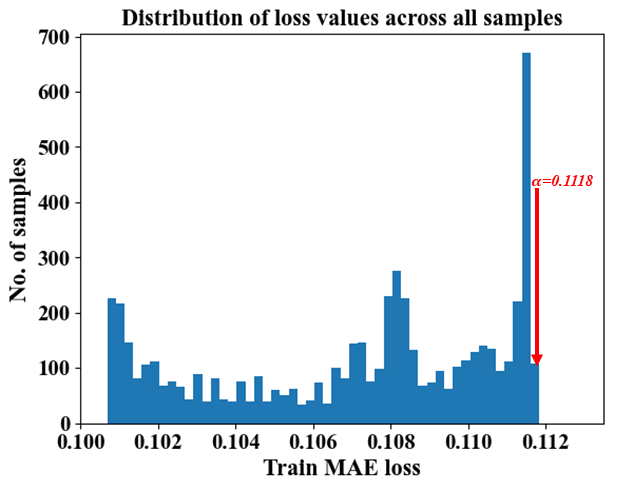

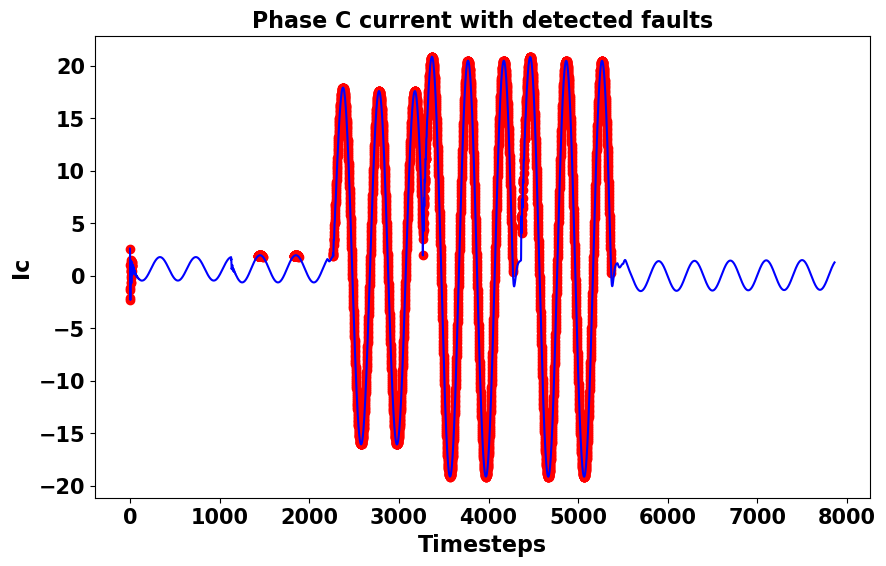

The core principle of fault detection using this autoencoder lies in its ability to learn a compressed representation of normal system operating conditions. During inference, the autoencoder attempts to reconstruct the input time series data. Significant discrepancies between the original input and the reconstructed output – quantified by a reconstruction error metric – indicate a deviation from learned normal behavior. These deviations are flagged as potential faults, as the model has not been trained to accurately reproduce anomalous data patterns; a higher reconstruction error thus correlates with a greater probability of a system malfunction.
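The decision rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the error metric (mean squared error), the threshold rule (mean plus `k` standard deviations of the errors seen on normal data), and all numeric values are hypothetical choices, since the summary does not specify them.

```python
from statistics import mean, stdev

def reconstruction_error(window, reconstruction):
    """Mean squared error between an input window and its reconstruction."""
    if len(window) != len(reconstruction):
        raise ValueError("window and reconstruction must have equal length")
    return sum((x - y) ** 2 for x, y in zip(window, reconstruction)) / len(window)

def fit_threshold(normal_errors, k=3.0):
    """Assumed rule: mean + k * sample standard deviation of normal-data errors."""
    return mean(normal_errors) + k * stdev(normal_errors)

def is_fault(window, reconstruction, threshold):
    """Flag a window whose reconstruction error exceeds the threshold."""
    return reconstruction_error(window, reconstruction) > threshold

# Hypothetical errors measured on fault-free validation windows.
normal_errors = [0.010, 0.012, 0.009, 0.011, 0.013]
threshold = fit_threshold(normal_errors)

# A window the model reproduces closely, and one it reproduces poorly.
well_reconstructed = is_fault([1.0, 0.5, -0.5], [0.98, 0.52, -0.49], threshold)
poorly_reconstructed = is_fault([1.0, 4.0, -3.0], [0.9, 0.6, -0.4], threshold)
```

The choice of `k` trades false alarms against missed faults; in practice it would be tuned on held-out data rather than fixed in advance.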

Convolutional Neural Networks (CNNs) are particularly suited for analyzing time series data due to their ability to automatically learn hierarchical representations of patterns. In the context of power system data, CNNs utilize convolutional layers to identify local correlations within sequential data points, effectively capturing temporal dependencies. These layers employ filters that slide across the time series, detecting repeating motifs and features indicative of normal system behavior. Subsequent pooling layers reduce dimensionality while retaining crucial information, enabling the model to generalize across varying time scales and input lengths. This process allows the CNN to extract robust features that represent the underlying dynamics of the power system, surpassing the limitations of traditional methods reliant on manual feature engineering or statistical analysis of individual data points.
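To make the sliding-filter and pooling steps concrete, here is a small pure-Python sketch. The signal values and the hand-picked kernel are hypothetical; in a trained network the kernel weights are learned, not chosen by hand.

```python
def conv1d(signal, kernel):
    """Valid-mode sliding dot product (cross-correlation, as used in most CNN libraries)."""
    n, k = len(signal), len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) for i in range(n - k + 1)]

def max_pool1d(features, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(features[i:i + size]) for i in range(0, len(features) - size + 1, size)]

# Hypothetical current samples with a sudden step at index 4.
current = [1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0]
edge_kernel = [-1.0, 1.0]  # responds to sample-to-sample change

feature_map = conv1d(current, edge_kernel)   # [0.0, 0.0, 0.0, 4.0, 0.0, 0.0, 0.0]
pooled = max_pool1d(feature_map, size=2)     # [0.0, 4.0, 0.0]
```

The filter produces a non-zero response only where the signal changes, and pooling halves the length of the feature map while preserving that response.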

Simulating Reality: Data Generation and Model Training

The convolutional autoencoder’s performance is fundamentally dependent on the quality and quantity of normal, fault-free operational data used during the training process. This data establishes the baseline representation of expected system behavior, allowing the autoencoder to accurately learn the characteristics of normal operation and subsequently identify deviations indicative of faults. Insufficient or inaccurate normal data will result in a poorly trained model, leading to increased false positive and false negative rates when deployed for fault detection. The model learns to compress and reconstruct the input data; therefore, a robust understanding of normal operation, derived from a comprehensive dataset, is essential for effective anomaly identification.

The training dataset for the convolutional autoencoder was developed using simulated data generated within the MATLAB/SIMULINK environment. This approach offers precise control over data parameters, allowing for the creation of a diverse and labeled dataset representing normal system operation. Utilizing a simulation environment enables the generation of data without the limitations or costs associated with physical testing, and facilitates the systematic variation of parameters to cover a broad range of operational conditions. The simulated data includes parameters representative of sensor readings and system states, providing a foundational dataset for the autoencoder to learn patterns of fault-free behavior.
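As a rough stand-in for the simulation step, the sketch below generates fault-free three-phase current samples and cuts them into fixed-length training windows. It is not the MATLAB/SIMULINK model from the paper: the balanced sinusoidal load, the sampling rate `fs`, the grid frequency `f0`, the amplitude, the noise level, and the window sizes are all hypothetical values chosen for illustration.

```python
import math
import random

def three_phase_currents(n_samples, fs=3200.0, f0=50.0, amplitude=10.0,
                         noise_std=0.05, seed=0):
    """Return n_samples rows of (ia, ib, ic) for a balanced, fault-free load."""
    rng = random.Random(seed)  # seeded so the data is reproducible
    rows = []
    for n in range(n_samples):
        t = n / fs
        row = tuple(
            amplitude * math.sin(2 * math.pi * f0 * t - k * 2 * math.pi / 3)
            + rng.gauss(0.0, noise_std)  # small measurement noise
            for k in range(3)            # phases shifted by 120 degrees
        )
        rows.append(row)
    return rows

def sliding_windows(rows, length, stride):
    """Cut the record into fixed-length, possibly overlapping windows."""
    return [rows[i:i + length] for i in range(0, len(rows) - length + 1, stride)]

samples = three_phase_currents(n_samples=640)
windows = sliding_windows(samples, length=64, stride=32)
```

With these assumed settings each window spans one 50 Hz cycle (64 samples at 3200 Hz), and consecutive windows overlap by half.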

Evaluation of the convolutional autoencoder on the simulated dataset yielded an accuracy of 97.62%. Accuracy here is the share of test samples the model classifies correctly, whether as normal or as faulty, under the controlled parameters of the simulation. The result suggests that reconstructions closely match normal operational data while diverging for faulty data, which supports the model’s ability to separate normal from anomalous conditions in this setting. This performance is specific to the simulated dataset and may differ on real-world data.

Beyond the Simulation: Validation and Real-World Integration

The fault detection system was evaluated with standard metrics that capture not only the accuracy of fault identification but also how efficiently the system processes data and how well it limits false positives. The results show consistently high precision in pinpointing anomalies, indicating that the model reliably distinguishes normal operating states from genuine faults. This assessment supports the system’s practical utility for real-world deployment as a means of maintaining operational integrity and preventing costly downtime.
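The summary does not list which metrics were used, so the sketch below assumes a common set for binary fault detection: accuracy, precision, recall, and false positive rate, all derived from confusion-matrix counts. The labels are hypothetical and are not results from the paper.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN with 1 = fault and 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn

def detection_metrics(y_true, y_pred):
    """Return accuracy, precision, recall and false positive rate."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("no samples to evaluate")
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

# Hypothetical ground truth and predictions for ten windows.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
metrics = detection_metrics(y_true, y_pred)
```

Reporting precision and false positive rate alongside accuracy matters when faults are rare, because a detector that never raises an alarm can still score high accuracy.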

Evaluation extended beyond the simulated data to a publicly available dataset from Kaggle, on which the model achieved 99.92% accuracy. This result matters because it was obtained on external data rather than on a controlled, internal dataset: it indicates that the model can generalize to previously unseen data and is robust to variation it did not encounter in the simulation. That robustness is crucial for practical deployment, where the convolutional autoencoder must identify faults reliably across diverse operating conditions, and it strengthens confidence in the system’s potential for adoption in complex industrial settings.

The pursuit of identifying anomalous behavior within complex systems, as demonstrated by the autoencoder approach to fault detection, echoes a fundamental truth about all engineered creations. Systems, even those designed for stability like electrical distribution networks, are ultimately subject to the arrow of time and eventual degradation. Marie Curie observed, “Nothing in life is to be feared, it is only to be understood.” This understanding, applied to the analysis of time-series data, allows for the proactive identification of faults – a form of ‘memory’ built into the system itself, anticipating and mitigating failures before they cascade. The autoencoder, therefore, doesn’t merely detect anomalies; it extends the system’s operational lifespan by acknowledging its inherent temporality.

What Lies Ahead?

The presented work, while demonstrating a functional approach to fault detection, merely marks a point on the timeline of system health monitoring. The autoencoder, as a reconstructive model, inherently relies on the premise that ‘normal’ operation can be adequately defined. But the electrical grid is not static; it evolves, absorbing new loads, integrating distributed generation, and experiencing gradual degradation. The system’s chronicle, logged in time-series data, will inevitably contain shifts that challenge the boundaries of ‘normal,’ necessitating continuous model adaptation or, more radically, a re-evaluation of what constitutes a deviation.

A critical juncture arrives with the question of generalization. This implementation relies on electrical current measurements alone; a truly robust system, however, demands multi-modal analysis. Incorporating voltage, frequency, and environmental factors expands the observable state space, but also introduces the complexities of correlation and causality. The pursuit of a universally applicable autoencoder is, perhaps, a fool’s errand; the system’s architecture will likely require specialization, reflecting the inherent heterogeneity of the electrical distribution network.

Ultimately, the challenge is not simply identifying faults, but predicting them. The current methodology functions as a diagnostic tool; future iterations should aim for prognostics. The deployment of such a system is not an endpoint, but rather a moment in an ongoing cycle of observation, adaptation, and, inevitably, decay. The question isn’t whether the system will fail, but when, and how gracefully it will age.

Original article: https://arxiv.org/pdf/2602.14939.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-17 19:49