Author: Denis Avetisyan

A new framework enables neural networks to accurately process images and data undergoing complex transformations without the need for extensive training data.

This review details DiffeoNN, a method for achieving diffeomorphism equivariance in neural networks through energy canonicalisation, enabling robust geometric deep learning.

Modern deep learning often demands extensive datasets and struggles with generalisation to unseen transformations, yet incorporating inherent symmetries can mitigate these limitations. This is the central challenge addressed in ‘Diffeomorphism-Equivariant Neural Networks’, which introduces a novel framework, DiffeoNN, for inducing equivariance to diffeomorphic transformations in pre-trained neural networks via energy-based canonicalisation. By formulating equivariance as an optimisation problem and leveraging established image registration techniques, the authors demonstrate approximate equivariance without requiring data augmentation or retraining. Could this approach unlock more robust and data-efficient geometric deep learning models capable of handling complex, non-rigid deformations?

The Illusion of Geometric Understanding

Image analysis frequently demands that computational models grasp how objects change under various transformations – rotation, scaling, translation, and more. However, standard convolutional neural networks, while remarkably successful in many visual tasks, fundamentally lack an inherent understanding of these geometric principles. These networks learn patterns through the detection of features, but without explicit mechanisms to account for changes in viewpoint or orientation, they treat a rotated or scaled object as entirely different from its original form. This limitation necessitates extensive training data covering all possible transformations, and even then, generalization to unseen variations remains a significant challenge. Consequently, a core area of research focuses on equipping these models with the ability to reason geometrically, moving beyond simple pattern recognition towards true visual understanding.

While increasing the size of a training dataset through simple data augmentation – rotating, scaling, or shifting images, for example – can improve a model’s performance on seen transformations, this approach offers only a limited solution to achieving true equivariance. These techniques essentially teach the model to recognize transformed instances as new, separate examples, rather than understanding the underlying geometric principles. Consequently, performance often falters when confronted with transformations not present in the training set, highlighting a critical gap between superficial pattern matching and genuine geometric reasoning. The model learns to memorize transformed appearances, instead of developing an intrinsic understanding of how objects remain consistent despite changes in perspective or orientation – a limitation that hinders reliable generalization to novel scenarios and necessitates more robust approaches to geometric understanding in image analysis.

Diffeomorphism: The Prophecy of Transformation

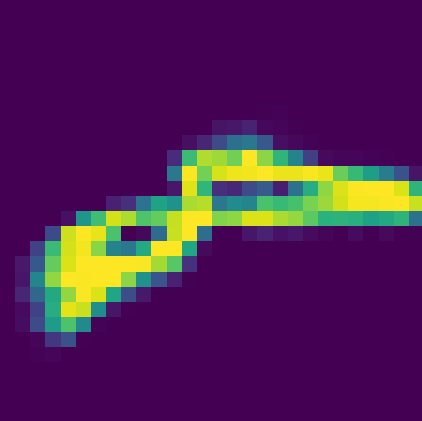

DiffeoNN achieves diffeomorphism equivariance by representing transformations as Stationary Velocity Fields (SVFs). An SVF, denoted v(x), assigns a velocity vector to every point x of the input domain; the transformation itself is the flow obtained by integrating this time-independent field over unit time, which yields a smooth, invertible map whenever the field is sufficiently smooth. The framework models these transformations explicitly, allowing inputs to be processed consistently regardless of their initial configuration. By operating directly on the velocity fields, DiffeoNN aims for any transformation applied to the input to produce a corresponding, predictable transformation of the output, which is the equivariance property (satisfied approximately in practice). This differs from approaches that learn transformations implicitly, and it provides greater control and interpretability.
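
To make the step from a velocity field to a deformation concrete, here is a minimal sketch of scaling and squaring, a standard way to integrate an SVF in image registration. It is a one-dimensional illustration only, not the authors' implementation; the function name `integrate_svf_1d`, the grid, the step count, and the example field are all assumptions chosen for readability.

```python
import numpy as np

def integrate_svf_1d(v, x, n_steps=6):
    """Approximate the displacement u of the flow phi(x) = x + u(x)
    generated by a stationary velocity field v sampled on the grid x."""
    # Scaling: for a very short time, the flow is roughly x + v / 2**n_steps.
    u = v / (2 ** n_steps)
    for _ in range(n_steps):
        # Squaring: compose the map with itself,
        # (phi o phi)(x) = x + u(x) + u(x + u(x)), using linear interpolation.
        u = u + np.interp(x + u, x, u)
    return u

# Hypothetical example: a smooth velocity field that vanishes at the boundaries.
x = np.linspace(0.0, 1.0, 201)
v = 0.1 * np.sin(np.pi * x)
u = integrate_svf_1d(v, x)
phi = x + u

# In 1D, an invertible, orientation-preserving map is strictly increasing.
print("monotone:", bool(np.all(np.diff(phi) > 0)))
```

Integrating the negated field `-v` in the same way gives an approximation of the inverse map, which is one reason the SVF parameterisation is convenient when a transformation has to be undone after processing.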

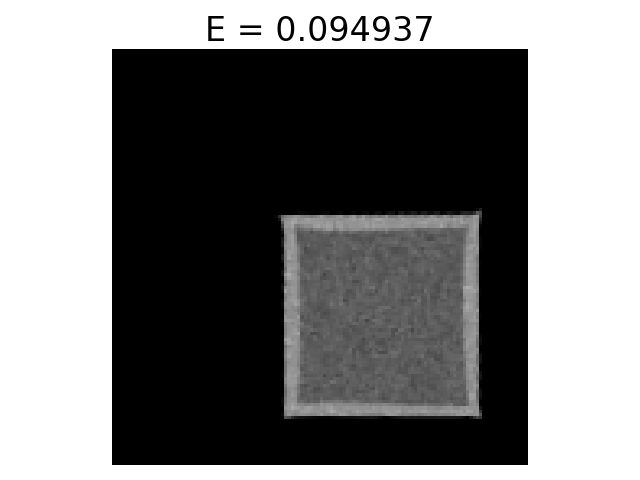

Energy Canonicalization is a process within the DiffeoNN framework designed to establish a consistent representation of input data irrespective of applied transformations. This technique operates by mapping variable inputs to a fixed, canonical space where comparisons and analyses become transformation-invariant. Specifically, it utilizes a learned mapping – implemented via a Variational Autoencoder (VAE) – to project inputs into this canonical representation. The VAE is trained to reconstruct the original input from the canonical form, thereby enforcing similarity of transformed inputs within the canonical space and ensuring that transformations do not alter the underlying representation used for downstream tasks. This canonicalization effectively decouples the data’s intrinsic properties from its specific geometric realization, enabling diffeomorphism equivariance.

Within this scheme, the VAE’s role is to enforce consistency in the canonical space. It is trained to reconstruct input data from its canonical representation, and the reconstruction loss acts as a regularization term: it encourages the encoder to map similar inputs to nearby points, reducing the distance between transformed versions of the same input. A low reconstruction error indicates that the canonical representation retains the essential features of the input under diffeomorphic transformations.
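
The general idea of canonicalisation as an optimisation problem can be written down compactly. The notation below is an assumption made for this sketch and is not taken from the paper: f is the pre-trained network, G the set of admissible transformations, and E an energy that is low for inputs in canonical pose (for example, a score derived from the VAE described above).

```latex
% Equivariance of a map f under transformations g
f(g \cdot x) = g \cdot f(x) \quad \text{for all } g \in G

% Canonicalising transformation: minimise the energy over G
g_x \in \arg\min_{g \in G} E\!\left(g^{-1} \cdot x\right)

% Canonicalised model built around the fixed, pre-trained f
\tilde{f}(x) = g_x \cdot f\!\left(g_x^{-1} \cdot x\right)

% If x' = h \cdot x and the minimiser is unique, then g_{x'} = h\, g_x, hence
\tilde{f}(h \cdot x) = h\, g_x \cdot f\!\left(g_x^{-1} h^{-1} h \cdot x\right) = h \cdot \tilde{f}(x)
```

The last line holds exactly only when G is closed under composition and the minimiser is unique and found exactly. With transformations parameterised by velocity fields and found by numerical optimisation, neither is guaranteed, which is consistent with the equivariance being described as approximate.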

Constraining Chaos: Regularization and Stability

The Energy Canonicalization process employs Jacobian Determinant Loss and Gradient Loss as regularization terms during training to constrain the learned transformations. Jacobian Determinant Loss, calculated as the mean absolute error of the determinant of the transformation’s Jacobian matrix, penalizes transformations that collapse or expand volume, ensuring local invertibility and preserving orientation. Simultaneously, Gradient Loss minimizes the magnitude of the transformation’s gradient, preventing high-frequency distortions and promoting smoother, more stable spatial mappings. These losses are combined with a reconstruction loss to yield a total energy function, driving the model towards transformations that are both geometrically valid and visually coherent.

Jacobian Determinant Loss penalizes configurations where the determinant of the Jacobian matrix deviates from unity. A determinant between zero and one indicates local compression and a value greater than one indicates local expansion, while a zero or negative determinant means the mapping has folded over itself and is no longer locally invertible or orientation-preserving. Simultaneously, Gradient Loss addresses spatial discontinuities by minimizing the magnitude of the gradient of the transformation field; this regularization term discourages sharp, unrealistic deformations by encouraging smoother transitions between neighbouring points. Both losses are critical for maintaining topological consistency and for keeping transformations stable and physically plausible.
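
The two regularisers can be illustrated with a short NumPy sketch for a 2D displacement field. The exact formulas and weights used in the paper are not given here, so the choices below are assumptions: the transformation is written as phi(x) = x + u(x) in pixel units, the Jacobian term is the mean absolute deviation of the determinant from one, and the gradient term is the mean squared magnitude of the displacement gradient. The function name `regularisation_losses` is hypothetical.

```python
import numpy as np

def regularisation_losses(disp):
    """disp has shape (2, H, W): displacement u of the map phi(x) = x + u(x).

    Returns (jacobian_loss, gradient_loss, folded_fraction)."""
    # Finite-difference derivatives of each displacement component.
    du0_d0, du0_d1 = np.gradient(disp[0])
    du1_d0, du1_d1 = np.gradient(disp[1])
    # Jacobian of phi is I + grad(u); take its 2x2 determinant per pixel.
    det = (1.0 + du0_d0) * (1.0 + du1_d1) - du0_d1 * du1_d0
    # Penalise deviation from volume preservation (det = 1).
    jacobian_loss = np.mean(np.abs(det - 1.0))
    # Penalise rapidly varying displacements.
    gradient_loss = np.mean(du0_d0**2 + du0_d1**2 + du1_d0**2 + du1_d1**2)
    # Fraction of pixels where the map folds (non-positive determinant).
    folded_fraction = float(np.mean(det <= 0.0))
    return jacobian_loss, gradient_loss, folded_fraction

# Hypothetical example: a uniform stretch by a factor of 1.5 in both directions.
rows, cols = np.meshgrid(np.arange(8.0), np.arange(8.0), indexing="ij")
stretch = np.stack([0.5 * rows, 0.5 * cols])
print(regularisation_losses(stretch))
```

The folded fraction is not a loss term; it is included as a diagnostic, since a non-positive determinant is the condition under which a map stops being locally invertible.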

An adversarial discriminator network is integrated into the transformation learning process to improve the quality and stability of generated images. This discriminator is trained to differentiate between images from the original dataset and images produced by the transformation network. The gradients from the discriminator are then backpropagated through the transformation network, providing a refinement signal that encourages the generation of more realistic outputs. This adversarial training process effectively minimizes the perceptual distance between real and transformed images, resulting in transformations that are less prone to artifacts and more visually consistent with the training data. The discriminator’s role is not to classify the content of the images, but to assess the realism of the transformation itself, thereby stabilizing the learning process and enhancing the overall fidelity of the generated results.

Beyond Segmentation: Topology and the Map of Structure

Lung segmentation, the accurate identification of lung structures within medical images, is paramount for diagnosis and treatment planning, and DiffeoNN, when integrated with a U-Net convolutional neural network, offers a compelling advancement in this field. This combination leverages the strengths of both approaches – DiffeoNN’s capacity to learn smooth, invertible transformations and U-Net’s efficient feature extraction – resulting in highly precise segmentation maps. The framework excels at delineating lung boundaries even in the presence of anatomical variations or image noise, a frequent challenge in clinical settings. Performance benchmarks demonstrate that this DiffeoNN-enhanced U-Net achieves a Dice coefficient – a measure of overlap between predicted and actual segmentation – comparable to that of heavily augmented U-Net models, indicating a robust and competitive approach to automated lung analysis.
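
For readers unfamiliar with the metric, the Dice coefficient of two binary masks is twice the size of their intersection divided by the sum of their sizes. The sketch below is a generic NumPy version; the function name `dice` and the convention of returning 1.0 for two empty masks are assumptions, not details from the paper.

```python
import numpy as np

def dice(pred, target):
    """Dice overlap between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Both masks are empty: treat as perfect agreement (a convention).
        return 1.0
    return 2.0 * intersection / total

# Hypothetical toy masks: one shared pixel, three foreground pixels in total.
pred = [[1, 1], [0, 0]]
target = [[1, 0], [0, 0]]
print(dice(pred, target))
```

A value of 1 means the masks coincide and 0 means they do not overlap at all.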

Beyond simply identifying structures within an image, this framework facilitates a deeper understanding through robust homology classification. This process moves beyond shape recognition to analyze the connectivity of image features – essentially, it maps and categorizes holes, loops, and connected components. By quantifying these topological characteristics, the system can differentiate between structures that appear similar visually but possess fundamentally different arrangements – a crucial distinction in medical imaging where subtle changes in connectivity can indicate disease progression. This capability extends beyond traditional image segmentation, offering a new dimension for characterizing and classifying complex biological structures and patterns with a level of detail previously unattainable.
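
As a rough illustration of what "connected components" and "holes" mean for a binary image, the sketch below counts both with a simple flood fill. It is not the classifier evaluated in the paper, only a way to compute the topological quantities such a task refers to. The helper names and the connectivity convention (8-connected foreground, 4-connected background) are assumptions.

```python
from collections import deque
import numpy as np

N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
N8 = N4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]

def count_components(mask, neighbours):
    """Count connected regions of True pixels using breadth-first flood fill."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros(mask.shape, dtype=bool)
    h, w = mask.shape
    count = 0
    for i in range(h):
        for j in range(w):
            if mask[i, j] and not seen[i, j]:
                count += 1
                seen[i, j] = True
                queue = deque([(i, j)])
                while queue:
                    a, b = queue.popleft()
                    for da, db in neighbours:
                        p, q = a + da, b + db
                        if 0 <= p < h and 0 <= q < w and mask[p, q] and not seen[p, q]:
                            seen[p, q] = True
                            queue.append((p, q))
    return count

def betti_numbers(image):
    """Return (components, holes) of the foreground of a 2D binary image."""
    fg = np.asarray(image, dtype=bool)
    components = count_components(fg, N8)
    # Pad the background so everything outside the shape forms one region;
    # every additional background region is a hole enclosed by the foreground.
    bg = np.pad(~fg, 1, constant_values=True)
    holes = count_components(bg, N4) - 1
    return components, holes

# Hypothetical example: a ring has one component and one hole.
ring = [[1, 1, 1],
        [1, 0, 1],
        [1, 1, 1]]
print(betti_numbers(ring))
```

Smooth, invertible deformations change the shape of a ring but not these counts, which is why such a task is a natural test of diffeomorphism equivariance.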

Evaluations support the efficacy of the framework across these tasks. On both artificially generated and clinically acquired lung imaging datasets, the approach achieves a Dice coefficient that is statistically comparable to that of traditional U-Net architectures trained with data augmentation. Performance extends beyond segmentation: the methodology also matches the accuracy of augmented baselines on the MNIST homology classification task, which involves identifying topological features within images. These results suggest the framework is a competitive and potentially more efficient alternative to existing methods, one that does not rely heavily on extensive data augmentation.

The pursuit of diffeomorphism equivariance, as demonstrated by DiffeoNN, reveals a deeper truth about system design. It’s not about imposing rigid control, but about fostering an inherent adaptability. As Barbara Liskov observed, “Programs must be right first before they are fast.” This framework prioritizes correctness – the preservation of geometric relationships – allowing the network to generalize beyond the confines of specific training data. Like tending a garden, the researchers didn’t build a solution; they cultivated an environment where the network could learn robust representations, forgiving minor variations in input while maintaining essential structural integrity. The emphasis on canonicalisation is akin to establishing healthy root systems – a foundation for resilience and growth.

What Lies Ahead?

The pursuit of diffeomorphism equivariance, as exemplified by DiffeoNN, is not merely a technical refinement, but an admission. It acknowledges the inherent instability of representation, the fact that any mapping of data to feature space is predicated on arbitrary coordinate choices. The framework doesn’t solve the problem of geometric variation; it merely shifts the burden, internalizing the canonicalization as a learned constraint. Monitoring this constraint, tracing its failures, will become the art of fearing consciously.

Future work will inevitably confront the limits of this internalisation. The stationary velocity field, while elegant, is itself a simplification, a prophecy of failure when confronted with truly complex deformations. True resilience begins where certainty ends, and the field must move beyond enforcing equivariance as a rigid constraint, towards systems that anticipate and adapt to unexpected geometric transformations.

The ultimate challenge isn’t building models that are invariant or equivariant, but constructing ecosystems that reveal their own blind spots. Each incident, each failure to generalize, is not a bug – it’s a revelation, a pointer towards the next layer of complexity that must be embraced, not evaded. The goal, then, is not perfect representation, but perfect observability of imperfection.

Original article: https://arxiv.org/pdf/2602.06695.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-09 23:20