Author: Denis Avetisyan

A new framework clarifies the meaning of alpha-mutual information by connecting it to the fundamental concept of information leakage.

This work establishes a generalized gg-leakage representation of alpha-mutual information, leveraging the Kolmogorov-Nagumo mean to define conditional vulnerability and refine quantitative information flow analysis.

Despite its widespread use, a unifying interpretation of α-mutual information-and its connection to information-theoretic security-has remained elusive. This paper, ‘A Generalized Leakage Interpretation of Alpha-Mutual Information’, resolves this by establishing a novel framework representing α-MI as a generalized gg-leakage, defined through adversarial decision problems and utilizing the Kolmogorov-Nagumo mean. Crucially, this allows for interpreting the parameter α as a quantifiable measure of an adversary’s risk aversion. Does this framework offer a path towards more robust and interpretable measures of information leakage in complex systems?

Beyond Simple Metrics: Defining Leakage in Complex Systems

Conventional information-theoretic metrics, such as mutual information and conditional entropy, frequently struggle to fully characterize information leakage in increasingly complex systems. These measures often assume a simplified model of the adversary, typically focusing on an idealized attacker with unlimited computational resources and a singular goal of maximizing information gain. However, real-world leakage scenarios often involve nuanced data correlations, side channels, and adversaries with limited capabilities or specific objectives. Consequently, subtle leakage patterns – those not resulting in a dramatic reduction in entropy but still revealing sensitive information over time or through statistical inference – can remain undetected. This limitation is particularly acute in modern systems involving machine learning models, networked devices, and intricate software stacks, where leakage can manifest through seemingly innocuous data interactions and require more sensitive and adaptable quantification techniques to ensure robust security and privacy.

The pervasive integration of data-driven technologies necessitates a rigorous understanding of information leakage, making a robust quantification framework essential for safeguarding security and privacy across numerous applications. From healthcare records and financial transactions to personal communications and critical infrastructure, sensitive data is constantly at risk of unintended disclosure. Current security protocols often rely on simplistic metrics that fail to capture the nuanced ways information can seep from systems, leaving vulnerabilities unaddressed. A generalized framework moves beyond basic binary classifications of “secure” or “compromised” by providing a continuous scale of leakage risk, enabling proactive mitigation strategies tailored to specific threats and data sensitivities. This allows developers and security professionals to not only detect breaches but also to assess the degree of compromise, facilitating more informed decision-making and bolstering defenses against increasingly sophisticated adversaries. Ultimately, a comprehensive quantification of leakage is no longer merely a technical refinement, but a fundamental requirement for building trustworthy and resilient systems in the digital age.

Current methods for assessing information leakage often presume a uniform level of risk acceptance amongst potential adversaries, a simplification that undermines their practical utility. An attacker’s willingness to expend resources – and therefore accept risk – varies significantly; a highly motivated adversary might pursue even low-probability information gains, while a more cautious one will focus solely on high-confidence extractions. Existing leakage metrics fail to capture this nuance, treating all revealed information as equally valuable regardless of the attacker’s profile. Consequently, a measurement indicating ‘low leakage’ based on a standard metric might still be exploited by a risk-tolerant adversary, while the same measurement could accurately reflect security for a more conservative attacker. Developing metrics that incorporate adjustable parameters for risk aversion-effectively allowing quantification of leakage relative to an adversary’s cost-benefit analysis-is therefore critical for building truly robust and adaptable privacy safeguards.

Alpha Mutual Information: A Unified Approach to Quantifying Leakage

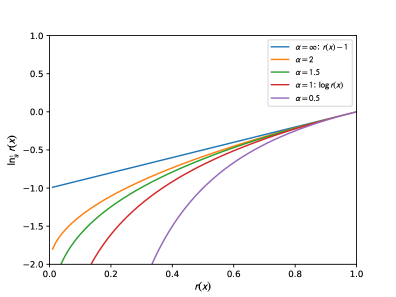

Alpha Mutual Information (AMI) extends the concept of standard mutual information I(X;Y) by introducing a parameter, α, which allows for a continuous relaxation between different measures of dependence. Standard mutual information quantifies the reduction in uncertainty about one random variable given knowledge of another; AMI generalizes this by weighting the contributions of different levels of overlap between the probability distributions of the variables. When α approaches zero, AMI converges towards a measure sensitive to overlapping supports, while as α approaches infinity, it approaches the standard mutual information. This parameterization allows AMI to capture a wider range of relationships than traditional mutual information, effectively providing a family of dependence measures within a single framework.

Alpha Mutual Information (AMI) provides a generalized framework for quantifying information leakage that unifies several previously established measures. Specifically, the Sibson Mutual Information, Arimoto Mutual Information, Hayashi Mutual Information, Lapidoth-Pfister Mutual Information, and Augustin-Csiszár Mutual Information can all be expressed as special cases of AMI by adjusting the alpha parameter. This unification is achieved through a consistent mathematical formulation, allowing for direct comparison and analysis of these different leakage measures within a single, coherent system, as demonstrated by the paper’s derived representations. The ability to express these diverse metrics using a common foundation simplifies analysis and facilitates the development of generalized leakage detection and mitigation strategies.

Alpha Mutual Information (AMI) offers a tunable approach to quantifying information leakage by varying the alpha parameter. Different values of alpha prioritize different aspects of the relationship between random variables; lower values emphasize strong dependencies while higher values focus on weaker, more nuanced relationships. This parameterization allows for the creation of α-MI variants that can be directly interpreted as measures of vulnerability and risk aversion in information-theoretic security contexts. Specifically, lower alpha values correspond to more conservative, risk-averse leakage assessments, while higher values allow for a more relaxed analysis potentially accepting a greater degree of information disclosure. This adaptability allows researchers to tailor the leakage quantification to the specific security requirements of a given scenario, providing a flexible tool beyond the constraints of fixed mutual information measures like I(X;Y).

Foundational Principles: Rényi Entropy and Divergence for Leakage Measurement

Adversarial Machine Learning (AMI) leverages Rényi Entropy and Rényi Divergence to quantify information leakage and assess the robustness of machine learning models. Traditional information theory often relies on Shannon Entropy, which has limitations when dealing with high-dimensional data or complex distributions. Rényi Entropy, defined as H_{\alpha}(P) = \frac{1}{1-\alpha} \log \sum_{x} P(x)^{\alpha} where \alpha \ge 0 and \alpha \ne 1, generalizes Shannon Entropy and allows for tunable sensitivity to different parts of the probability distribution. Rényi Divergence, a corresponding measure of distance between probability distributions, extends Kullback-Leibler divergence and provides a more flexible tool for quantifying the difference between the original and perturbed data distributions encountered in adversarial scenarios. By utilizing these generalized measures, AMI gains a more nuanced and comprehensive understanding of uncertainty and information distance, facilitating more effective adversarial attack and defense strategies.

Rényi entropy and divergence provide quantifiable metrics for assessing information leakage by characterizing the statistical distance between probability distributions. Information leakage is formally defined as the reduction in uncertainty about a sensitive variable, and Rényi divergence D_{\alpha}(P||Q) = \frac{1}{\alpha-1} \log_2 \sum_{x} \frac{P(x)^{\alpha}}{Q(x)^{\alpha}} measures the dissimilarity between the prior distribution P and the posterior distribution Q given observed data. Different values of α in Rényi divergence emphasize varying degrees of sensitivity to differences in the tails of the distributions, allowing for a nuanced analysis of leakage. By applying these concepts, complex systems can be modeled and analyzed to determine the amount of information revealed through observation, enabling the development of privacy-preserving mechanisms and leakage mitigation strategies.

The application of Rényi Entropy and Rényi Divergence as foundational elements within the AMI framework provides a level of mathematical rigor that enables formal verification of its properties. Specifically, this reliance facilitates the derivation of bounds on information leakage, allowing for quantifiable assessments of privacy loss under various conditions. The consistent mathematical structure afforded by these concepts ensures that performance guarantees, such as the accuracy of leakage estimation or the effectiveness of privacy mechanisms, are not based on heuristics but on provable properties derived from the underlying definitions of α-divergence and related quantities. This allows for robust and reliable operation of the AMI framework, especially in security-critical applications where predictable behavior is paramount.

Practical Implementation: Quantifying Leakage with gg-Leakage

gg-Leakage provides a practical implementation of a generalized vulnerability framework by employing Arimoto Mutual Information (AMI) as its core metric. AMI, defined as I_{\alpha}(X;Y) = \max_{\alpha} E[\max(0, \log_{\alpha} \frac{p(x,y)}{p(x)p(y)})], is utilized for quantifying information leakage, and the framework incorporates the Kolmogorov-Nagumo Mean to facilitate a more robust and stable evaluation of leakage across various data distributions. This mean provides a method for averaging the AMI values, mitigating the impact of extreme values and enhancing the reliability of the leakage quantification process. The concrete implementation allows for practical application of the generalized vulnerability concepts to real-world systems and data.

gg-Leakage quantifies information leakage using the Power Score, a scoring function designed to provide a numerical assessment of vulnerability. The Power Score is calculated as \mathbb{E}[ \sqrt{ \frac{P(X|Y)}{P(X)} } ] , where P(X|Y) represents the conditional probability of random variable X given Y, and P(X) is the prior probability of X. This score ranges from 0 to 1, with higher values indicating a greater degree of information leakage. A score of 0 indicates no leakage, while a score approaching 1 signifies a complete loss of privacy. The Power Score allows for a comparative analysis of different leakage scenarios and provides a standardized metric for evaluating the effectiveness of privacy-enhancing technologies.

gg-Leakage utilizes Gibbs’ Inequality to provide provable bounds on leakage quantification, ensuring the analytical validity of the results. The framework employs conditional entropy to enable a precise evaluation of information leakage between random variables. Significantly, gg-Leakage generalizes existing information measures; it demonstrates that established metrics such as Shannon Mutual Information I(X;Y), Arimoto Mutual Information IαA(X;Y), Hayashi Mutual Information IαH(X;Y), and Lapidoth-Pfister Mutual Information IαLP(X;Y) are all specific instances within the broader gg-Leakage formulation, effectively unifying them under a single, consistent framework.

The pursuit of quantifying information leakage, as detailed in this exploration of α-mutual information, mirrors a sculpting process. Unnecessary complexities obscure the core understanding; the essence lies in what remains after rigorous reduction. Alan Turing observed, “Sometimes people who are unhappy tend to look at the world as if there were nothing else.” This resonates with the paper’s core idea – establishing a unified framework for α-mutual information through the Kolmogorov-Nagumo mean. By stripping away extraneous variables and focusing on the fundamental relationship between variables, the research clarifies the concept of generalized vulnerability and its implications for information-theoretic security. It isn’t about adding layers of complexity, but about revealing the underlying structure with clarity.

What Remains?

The present work clarifies α-mutual information as a specific instance of generalized leakage. This, however, does not resolve the fundamental unease with quantifying information flow. The Kolmogorov-Nagumo mean offers a tractable, if imperfect, bridge between abstract theory and concrete calculation. But reliance on any mean-any averaging-obscures the critical details. The devil, as always, resides in the specific instance, and a single number, no matter how elegantly derived, will never capture the nuance of a complex system. The pursuit of a ‘universal’ measure feels increasingly like tilting at windmills.

Future effort should resist the siren song of abstraction. A more fruitful path lies in understanding when these generalized vulnerabilities matter. Decision-theoretic consequences, the actual costs of information leakage in specific contexts, remain largely unexplored. To fixate on the quantity of leaked information without considering its qualitative impact is a category error. Intuition suggests that the most damaging leaks are not necessarily the largest, but those that exploit existing vulnerabilities.

Ultimately, the goal is not to achieve perfect quantification, but to develop tools that are sufficient for the task at hand. Code should be as self-evident as gravity. Perhaps the most valuable outcome of this line of inquiry will be the realization that simplicity, not sophistication, is the ultimate virtue. The best measure is often the one that is discarded in favor of a clear understanding of the underlying mechanisms.

Original article: https://arxiv.org/pdf/2601.09406.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Gold Rate Forecast

- 2025 Crypto Wallets: Secure, Smart, and Surprisingly Simple!

- HSR 3.7 story ending explained: What happened to the Chrysos Heirs?

- The 10 Most Beautiful Women in the World for 2026, According to the Golden Ratio

- ETH PREDICTION. ETH cryptocurrency

- The Labyrinth of Leveraged ETFs: A Direxion Dilemma

- ‘Zootopia+’ Tops Disney+’s Top 10 Most-Watched Shows List of the Week

- The Best Actors Who Have Played Hamlet, Ranked

- Here Are the Best TV Shows to Stream this Weekend on Paramount+, Including ‘48 Hours’

- Uncovering Hidden Groups: A New Approach to Social Network Analysis

2026-01-15 23:52