Author: Denis Avetisyan

A new framework uses the mathematics of spectral geometry and random matrices to simultaneously enhance the reliability and efficiency of deep neural networks.

This review explores how spectral geometry and random matrix theory enable robust hallucination detection and effective model compression in deep learning systems.

Despite advances in deep learning, concerns remain about model reliability and computational cost. The paper ‘Spectral Geometry for Deep Learning: Compression and Hallucination Detection via Random Matrix Theory’ introduces a framework that uses spectral geometry and random matrix theory to address both problems by analyzing the eigenvalue structure of hidden activations. The core finding is that spectral statistics provide interpretable signals both for detecting out-of-distribution behavior and for guiding model compression via iterative knowledge distillation, improving efficiency with only a small loss in accuracy. Could this approach unlock more robust and scalable deep learning systems capable of both trustworthy prediction and efficient deployment?

The Illusion of Intelligence: Peering Inside the Black Box

Contemporary neural networks excel at complex tasks, yet their internal workings remain largely opaque, a phenomenon often described as the ‘black box’ problem. These models, built upon layers of interconnected nodes, process information in ways that are difficult for humans to interpret, even for the engineers who designed them. While a network might accurately predict an outcome, understanding why it arrived at that conclusion can be elusive. This lack of transparency isn’t merely an academic concern; it hinders debugging, limits the ability to refine performance, and, crucially, erodes trust in systems deployed in sensitive applications like healthcare or autonomous driving, where accountability and explainability are paramount. The intricate, high-dimensional representations learned by these networks make tracing the causal pathways of decision-making a formidable challenge, necessitating new approaches to internal monitoring and interpretability.

The inherent opacity of modern neural networks presents substantial reliability challenges, particularly when deployed in critical applications demanding both trust and explainability. Unlike traditional algorithms where reasoning is transparent, these complex systems often arrive at conclusions through intricate, non-human-interpretable processes. This ‘black box’ nature is especially problematic in fields like healthcare, finance, and autonomous vehicles, where incorrect predictions can have severe consequences. Establishing confidence in these systems necessitates understanding why a decision was made, not just what the decision is. Consequently, research is increasingly focused on developing methods to illuminate the internal workings of these networks, fostering greater accountability and enabling effective error correction, a crucial step toward widespread adoption in high-stakes environments.

A significant hurdle in deploying advanced artificial intelligence lies in its propensity to ‘hallucinate’ – confidently generating incorrect or nonsensical outputs. Identifying these instances of confident incorrectness is not merely an academic exercise, but a fundamental requirement for building AI systems worthy of trust, particularly in high-stakes applications. Existing hallucination detectors leave room for improvement: the baselines cited later in this article, Energy Score and Cosine Distance, score roughly 89 to 92 on the reported detection metric. The remaining margin of error is a real vulnerability, because confidently incorrect outputs that slip past the detector can feed into flawed decisions and undermine the reliability of the entire system. Improved detection techniques are therefore needed to close this gap in sensitive domains.

Spectral Signatures: A New Way to Look Inside

The internal state of a neural network, at any given layer, is fundamentally determined by the activations of its neurons. These activations, when organized into matrices – typically representing the outputs of a layer for a given input batch – exhibit spectral properties that characterize the learned representations. The spectrum of an activation matrix, in practice the eigenvalues and eigenvectors of its covariance (or Gram) matrix, characterizes the effective dimensionality, stability, and distribution of the information the matrix encodes. Specifically, each eigenvalue gives the variance of the activations along the corresponding eigenvector direction, with larger eigenvalues marking the more significant dimensions of the representational space. Analysis of these spectra allows for quantification of the effective rank of the activations, identification of dominant features, and detection of potential redundancy or bottlenecks in information flow within the network. These spectral characteristics are not simply descriptive; they bear on the network’s capacity to generalize, its robustness to noise, and its susceptibility to adversarial attacks.

Spectral geometry offers a mathematical formalism for characterizing matrices – in this context, activation matrices within neural networks – through the analysis of their eigenvalues and eigenvectors. Eigenvalues represent the magnitude of variation along specific directions, or principal components, defined by the corresponding eigenvectors. These spectral properties directly relate to the matrix’s capacity to represent and transform data; a matrix with a diverse range of eigenvalues can capture more complex relationships than one with a limited spectrum. By decomposing activation matrices into their spectral components, researchers can quantify the dimensionality and stability of the network’s internal representations, and identify dominant modes of information flow. This approach moves beyond simply observing activations to understanding the underlying geometric structure defining the network’s state.

Analysis of the geometric properties of activation spectra provides a means to interpret internal network states as representations of input data. Specifically, the distribution of eigenvalues and corresponding eigenvectors within activation matrices – representing the network’s response to stimuli – reveal information about the dimensionality and structure of learned features. Deviations from expected spectral properties, such as unusually large or small eigenvalues, or eigenvectors concentrated in specific subspaces, can indicate anomalies like overfitting, dead neurons, or adversarial perturbations. Quantifying these spectral characteristics – including spectral radius, condition number, and eigenvalue distribution – allows for the development of metrics to assess network health and interpret its decision-making process without direct access to training data or model parameters.
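
The quantities named above can be made concrete with a small sketch. The function name `spectral_summary`, the choice of an entropy-based effective rank, and the synthetic inputs are all assumptions made for illustration; the source does not specify how the paper computes these statistics.

```python
import numpy as np

def spectral_summary(activations: np.ndarray) -> dict:
    """Summarize the covariance spectrum of a (batch, features) activation matrix.

    Hypothetical helper for illustration, not the paper's implementation.
    """
    # Center each feature so eigenvalues measure variance, not mean offset.
    centered = activations - activations.mean(axis=0, keepdims=True)
    # Sample covariance of the features: shape (features, features).
    cov = centered.T @ centered / (activations.shape[0] - 1)
    # eigvalsh handles symmetric matrices and returns ascending eigenvalues.
    eigvals = np.clip(np.linalg.eigvalsh(cov), 0.0, None)
    total = max(eigvals.sum(), 1e-12)
    share = eigvals / total
    nonzero = share[share > 0]
    entropy = -(nonzero * np.log(nonzero)).sum()
    return {
        "spectral_radius": float(eigvals[-1]),
        "condition_number": float(eigvals[-1] / max(eigvals[0], 1e-12)),
        # exp(entropy) of the normalized spectrum: one common effective-rank definition.
        "effective_rank": float(np.exp(entropy)),
        "top_variance_share": float(share[-1]),
    }

rng = np.random.default_rng(0)
# Isotropic activations: variance spread over all 32 features.
isotropic = rng.normal(size=(512, 32))
# Redundant activations: 32 features driven by 2 latent directions plus small noise.
low_rank = (rng.normal(size=(512, 2)) @ rng.normal(size=(2, 32))
            + 0.01 * rng.normal(size=(512, 32)))

s_iso = spectral_summary(isotropic)
s_low = spectral_summary(low_rank)
print(s_iso["effective_rank"], s_low["effective_rank"])
```

In this toy setup the redundant layer shows a much lower effective rank and a much larger condition number than the isotropic one, which is the kind of signal the paragraph above describes.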

EigenTrack: Shining a Light in the Darkness

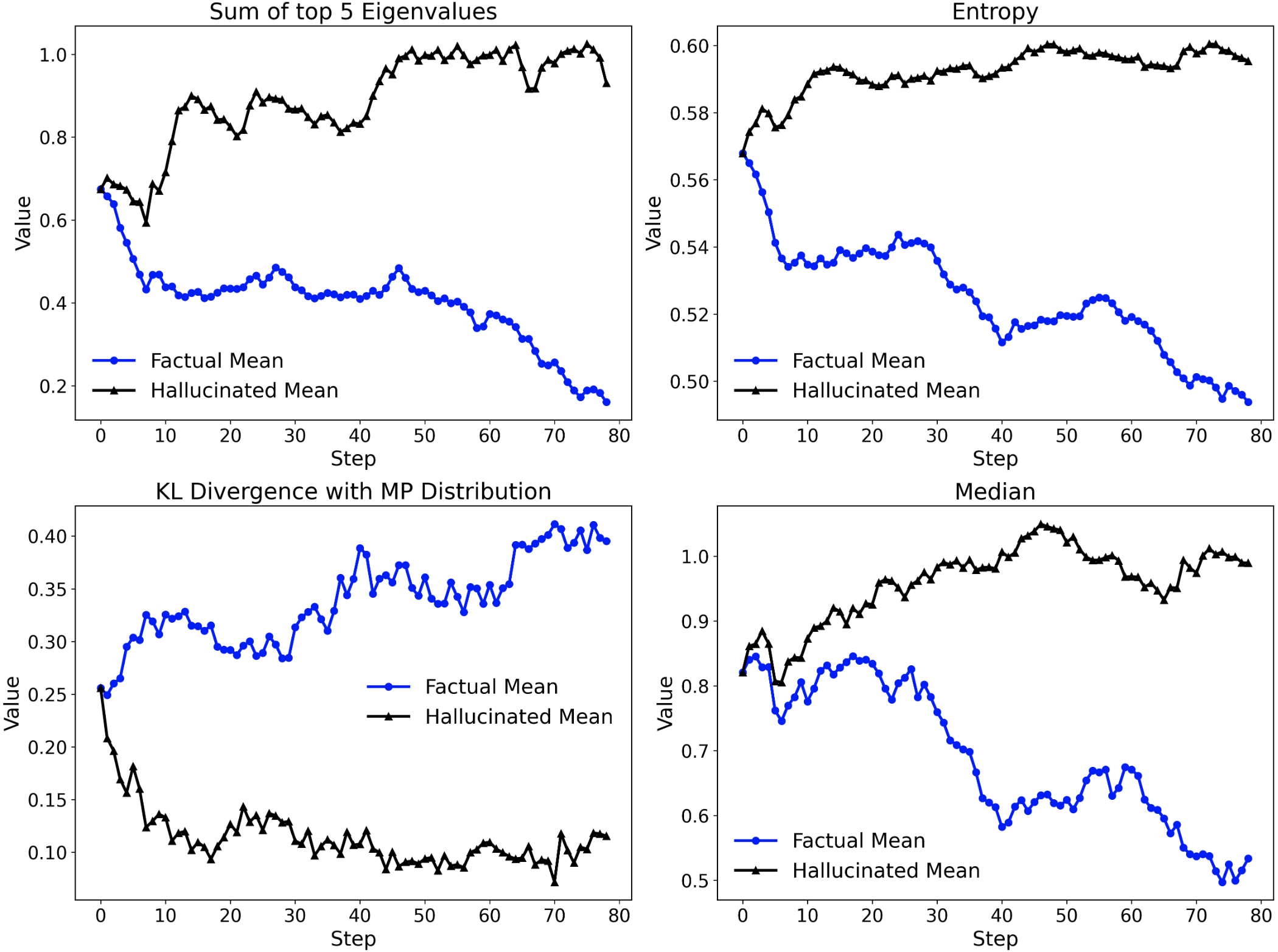

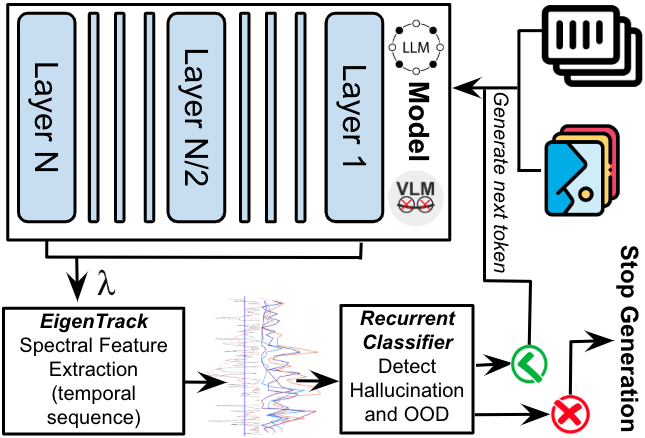

EigenTrack is a real-time anomaly detection framework utilizing spectral geometry to analyze the activation patterns within neural networks. The method focuses on monitoring the evolution of activation spectra – the distribution of eigenvalues derived from the activation matrices of network layers – over time. By representing these activations as points in a spectral space, EigenTrack tracks changes in their geometric properties. This allows the framework to characterize normal network behavior and identify deviations indicative of either internal hallucinations, where the network generates nonsensical outputs, or the processing of out-of-distribution (OOD) inputs that differ significantly from the training data. The system operates by continuously observing these spectral changes, offering a proactive approach to detecting anomalies as they emerge.

EigenTrack uses eigenvalue analysis of activation spectra to identify anomalous inputs. The distribution of eigenvalues, representing the variance in neural activations, establishes a baseline of expected behavior. Deviations from this baseline, quantified through statistical measures, signal either a hallucination – an internally generated, nonsensical output – or an out-of-distribution (OOD) input, meaning the input differs significantly from the data the model was trained on. This eigenvalue-based anomaly detection is reported to reach up to 96% accuracy (given later in this article as an AUROC of up to 0.96), which makes it a candidate for real-time monitoring of model reliability.

EigenTrack utilizes Recurrent Neural Networks (RNNs) to capture the changing patterns of activation spectra over time. These RNNs are trained on sequences of spectral features – specifically, the distribution of eigenvalues derived from neural network activations – to establish a baseline of expected temporal behavior. By modeling these temporal dynamics, the framework can predict future spectral states and identify deviations from this predicted trajectory. Anomalous behavior, indicative of hallucinations or out-of-distribution (OOD) inputs, is flagged when observed spectral features significantly diverge from the RNN’s predictions, allowing for proactive detection before the anomaly manifests as a discernible error in the system’s output.
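
A minimal sketch of the temporal idea follows. It is not EigenTrack: the RNN is replaced by a plain z-score against a calibration run to keep the example short, and the names `window_features` and `anomaly_scores`, the window sizes, and the synthetic hidden states are all assumptions.

```python
import numpy as np

def window_features(hidden: np.ndarray, window: int = 50, stride: int = 25) -> np.ndarray:
    """One row of spectral features per sliding window over (steps, features) states."""
    rows = []
    for start in range(0, hidden.shape[0] - window + 1, stride):
        block = hidden[start:start + window]
        centered = block - block.mean(axis=0, keepdims=True)
        # Squared singular values of the centered window give covariance eigenvalues.
        sv = np.linalg.svd(centered, compute_uv=False)  # descending order
        eig = sv ** 2 / (window - 1)
        share = eig / eig.sum()
        nonzero = share[share > 0]
        # Features: top eigenvalue, its variance share, and spectral entropy.
        rows.append([eig[0], share[0], -(nonzero * np.log(nonzero)).sum()])
    return np.asarray(rows)

def anomaly_scores(features: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """Largest absolute z-score across features, one score per window."""
    return np.abs((features - mean) / std).max(axis=1)

rng = np.random.default_rng(1)
calibration = rng.normal(size=(400, 16))   # stands in for in-distribution behavior
stream = rng.normal(size=(400, 16))
stream[200:, 0] *= 5.0                     # synthetic drift in the second half

ref = window_features(calibration)
mean, std = ref.mean(axis=0), ref.std(axis=0) + 1e-12
scores = anomaly_scores(window_features(stream), mean, std)
print(np.round(scores, 1))
```

A learned sequence model, as the text describes, would replace the fixed mean and standard deviation with a prediction of the next spectral state, so the threshold could adapt to normal temporal variation.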

Random Matrices and the Noise Floor

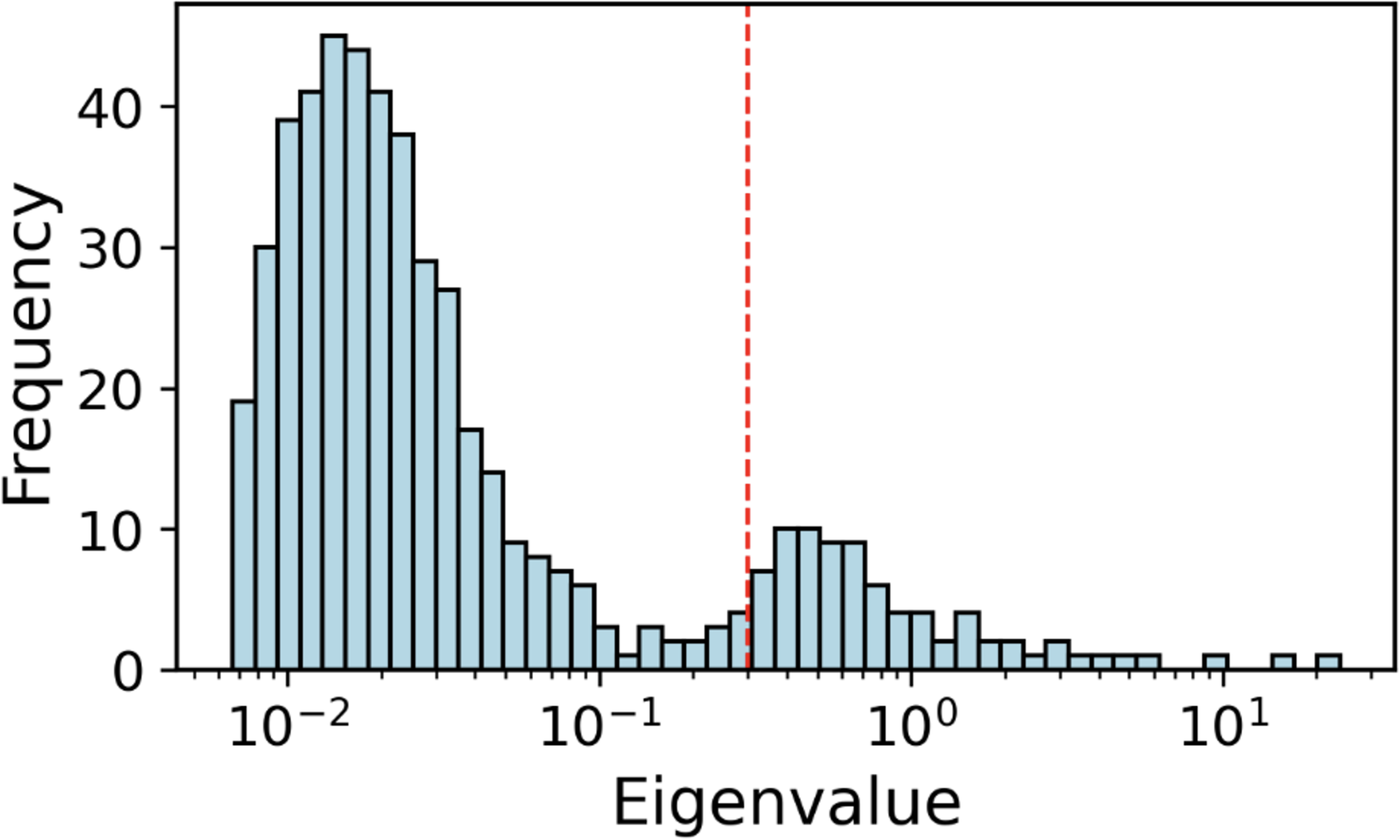

The behavior of complex systems, from nuclear physics to neural networks, often manifests as a spectrum of eigenvalues reflecting the system’s internal states. Random Matrix Theory (RMT) offers a powerful framework for interpreting these activation spectra, providing statistical predictions for what constitutes “noise” in the absence of any underlying signal. Unlike traditional signal processing which assumes a specific signal structure, RMT characterizes the spectral properties of random matrices, establishing a baseline distribution against which observed spectra can be compared. Deviations from this expected random behavior, namely eigenvalues significantly outside the predicted range, then become indicators of meaningful information, suggesting the presence of structured activity rather than mere statistical fluctuation. This allows researchers to effectively filter noise and isolate the signals driving a system’s function, revealing hidden patterns within seemingly chaotic data and offering insights into the underlying mechanisms at play.

The spectral properties of large, random matrices reveal crucial information about the underlying data, and understanding these properties relies heavily on foundational distributions like the Marchenko-Pastur Law and the Tracy-Widom Distribution. The Marchenko-Pastur Law predicts the distribution of eigenvalues for a random matrix, establishing a baseline expectation for what constitutes ‘noise’ in the eigenvalue spectrum – essentially, the typical distribution when no meaningful signal is present. Deviations from this expected distribution, specifically the appearance of outlier eigenvalues significantly larger or smaller than predicted, then signal the presence of meaningful information. The Tracy-Widom Distribution further refines this analysis by precisely describing the distribution of the largest eigenvalue, allowing researchers to statistically determine whether an observed large eigenvalue is genuinely significant or simply a random fluctuation. By comparing observed eigenvalue spectra to these theoretical baselines, it becomes possible to effectively distinguish informative signals from inherent noise in complex datasets.
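
As a rough illustration of the baseline comparison, the sketch below computes the Marchenko-Pastur bulk edges and counts eigenvalues above the upper edge. For an n-by-p matrix of independent entries with variance sigma2, the eigenvalues of the sample covariance concentrate in [sigma2(1 - sqrt(p/n))^2, sigma2(1 + sqrt(p/n))^2] as n and p grow. The helper names, the 10% safety margin (a crude substitute for a proper Tracy-Widom test), and the planted signal are assumptions.

```python
import numpy as np

def mp_edges(n_samples: int, n_features: int, sigma2: float = 1.0) -> tuple:
    """Marchenko-Pastur bulk edges for aspect ratio gamma = p / n (gamma <= 1)."""
    gamma = n_features / n_samples
    lower = sigma2 * (1.0 - np.sqrt(gamma)) ** 2
    upper = sigma2 * (1.0 + np.sqrt(gamma)) ** 2
    return lower, upper

def outlier_eigenvalues(X: np.ndarray, sigma2: float = 1.0, margin: float = 0.1) -> np.ndarray:
    """Eigenvalues of X^T X / n that exceed the upper MP edge by a relative margin."""
    n, p = X.shape
    eig = np.linalg.eigvalsh(X.T @ X / n)
    _, upper = mp_edges(n, p, sigma2)
    # The margin absorbs finite-size fluctuations of the largest noise eigenvalue.
    return eig[eig > upper * (1.0 + margin)]

rng = np.random.default_rng(2)
n, p = 2000, 100
noise = rng.normal(size=(n, p))
# Plant 3 orthonormal signal directions with strength 2 on top of the noise.
directions, _ = np.linalg.qr(rng.normal(size=(p, 3)))
signal = noise + 2.0 * rng.normal(size=(n, 3)) @ directions.T

noise_outliers = outlier_eigenvalues(noise)
signal_outliers = outlier_eigenvalues(signal)
print(mp_edges(n, p), len(noise_outliers), len(signal_outliers))
```

Pure noise produces no eigenvalues beyond the edge, while each planted direction shows up as one outlier, which is the separation between signal and noise that the paragraph describes.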

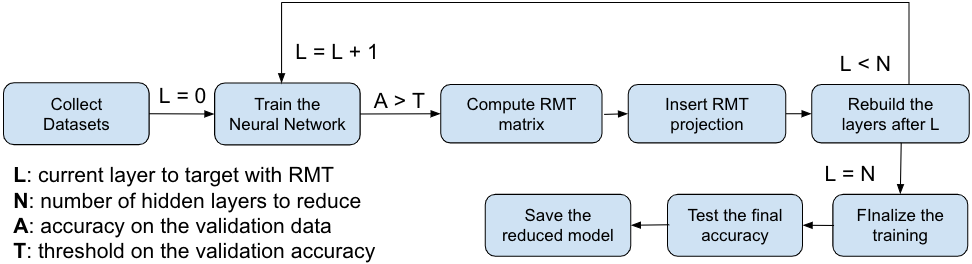

Rooted in the principles of Random Matrix Theory, RMT-KD represents a sophisticated compression technique designed to significantly reduce the computational burden of large neural networks. This method leverages the identification of outlier eigenvalues – those spectral values indicating meaningful information within the activation matrices – to project activations onto lower-dimensional subspaces. By focusing computational resources on these key components, RMT-KD achieves a remarkable 60-80% reduction in model size. Crucially, this compression doesn’t come at a substantial cost to performance, with observed accuracy losses typically remaining within the 1-2% range, offering a compelling pathway towards more efficient and deployable machine learning models.
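
The projection step can be sketched as follows. This is only the subspace-selection part under simplifying assumptions: the noise variance `sigma2` is taken as known, the function name `rmt_projection` and the margin are hypothetical, and the knowledge-distillation stage that gives RMT-KD its name is omitted entirely.

```python
import numpy as np

def rmt_projection(acts: np.ndarray, sigma2: float, margin: float = 0.1) -> np.ndarray:
    """Return a (features, k) basis of eigenvectors whose eigenvalues exceed the MP edge."""
    n, p = acts.shape
    centered = acts - acts.mean(axis=0, keepdims=True)
    eigvals, eigvecs = np.linalg.eigh(centered.T @ centered / n)
    # Upper Marchenko-Pastur edge, widened by a relative margin for finite-size effects.
    upper = sigma2 * (1.0 + np.sqrt(p / n)) ** 2 * (1.0 + margin)
    return eigvecs[:, eigvals > upper]

rng = np.random.default_rng(3)
n, p, k_true = 4000, 64, 8
# Synthetic activations: 8 strong latent directions embedded in 64 noisy features.
basis, _ = np.linalg.qr(rng.normal(size=(p, k_true)))
acts = 3.0 * rng.normal(size=(n, k_true)) @ basis.T + rng.normal(size=(n, p))

projection = rmt_projection(acts, sigma2=1.0)
reduced = acts @ projection  # lower-dimensional activations, shape (n, k)

centered = acts - acts.mean(axis=0, keepdims=True)
retained = ((centered @ projection) ** 2).sum() / (centered ** 2).sum()
print(projection.shape, reduced.shape, round(float(retained), 3))
```

In a real network the retained basis would define a narrower layer, and distillation would then train the smaller model to match the original; the width reduction in this toy example says nothing about the 60-80% figure quoted above.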

Towards Reliable AI: A Spectral Future

EigenTrack marks a considerable advancement in the pursuit of dependable artificial intelligence, offering a novel framework designed to illuminate the often-opaque inner workings of neural networks. This system doesn’t simply assess what an AI decides, but delves into how it arrives at that conclusion, tracking the flow of information through the network’s layers via its spectral properties. By revealing these internal dynamics, EigenTrack facilitates a proactive approach to error detection and mitigation, allowing developers to pinpoint potential vulnerabilities and biases before they impact performance or, crucially, erode user trust. The system’s ability to provide real-time insights into the network’s ‘reasoning’ process represents a fundamental shift towards more transparent and reliable AI, paving the way for broader adoption in sensitive applications where accountability is paramount.

The framework offers developers an unprecedented ability to peer inside the ‘black box’ of neural networks, providing real-time monitoring of internal states during operation. This proactive insight allows for the early detection of anomalies – subtle shifts in activation patterns that might otherwise signal emerging problems, such as the generation of inaccurate outputs or responses to out-of-distribution inputs. Rather than reacting to errors after they occur, developers can now identify and mitigate these issues before they manifest as failures, leading to more robust and reliable AI systems. This preemptive approach significantly enhances trust in AI, particularly in applications where safety and dependability are paramount, and enables a shift from reactive debugging to proactive system maintenance.

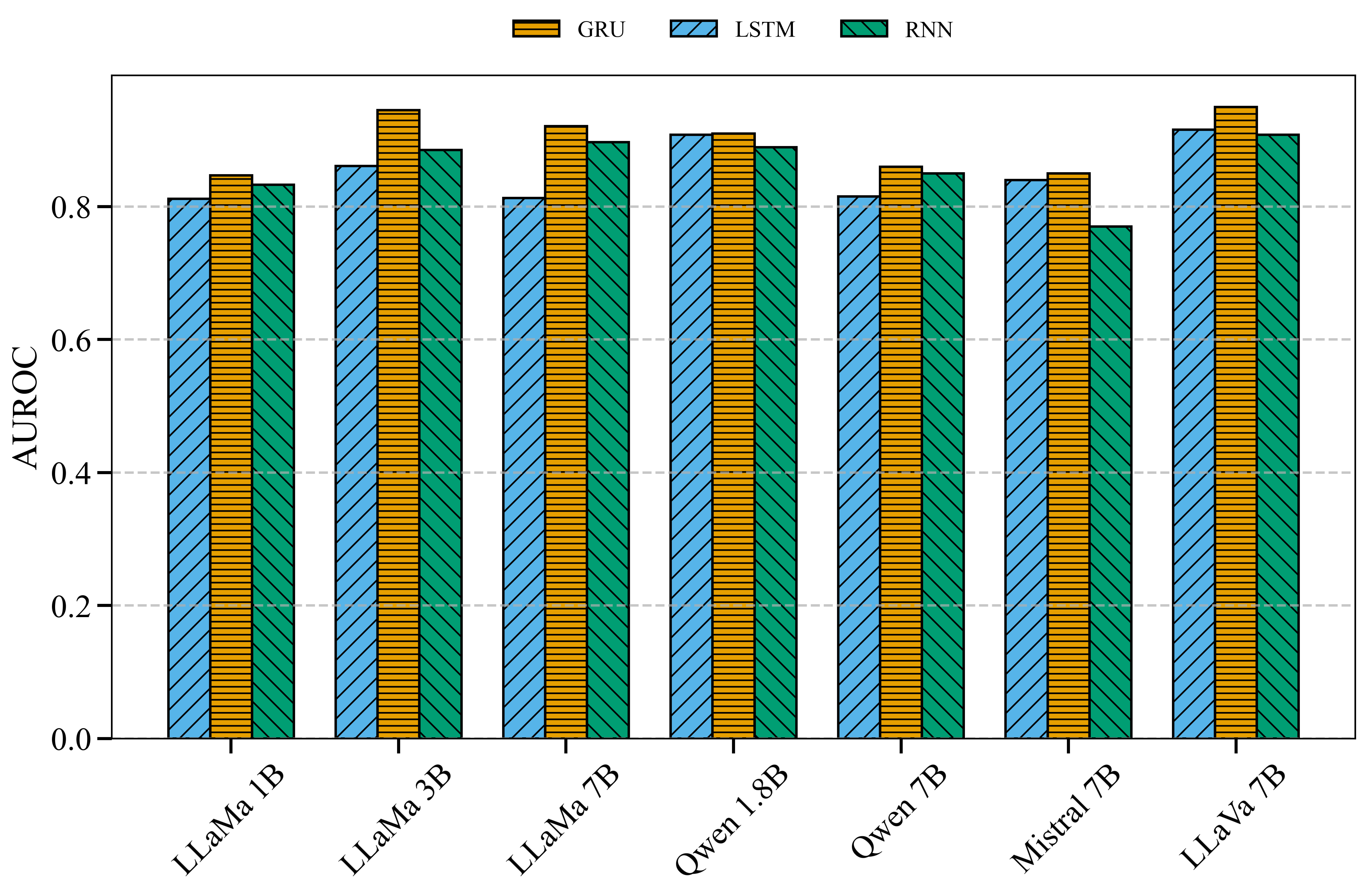

The EigenTrack framework is reported to achieve AUROC values of 0.82-0.96 in detecting both hallucination and out-of-distribution (OOD) inputs, against baseline figures of 92.0 for Cosine Distance and 89.0 for Energy Score (apparently on a 0-100 scale, that is, 0.92 and 0.89). At the top of its range EigenTrack exceeds both baselines, which suggests a pathway toward more robust AI systems. Ongoing research is directed at scaling this approach to accommodate the intricacies of more advanced neural network architectures. This expansion holds considerable promise not only for enhancing the reliability of AI in general applications, but also for unlocking its potential in high-stakes domains like safety-critical infrastructure and accelerating discovery in scientific fields where predictive accuracy and trustworthy outputs are paramount.

The pursuit of elegant compression, as outlined in the paper’s exploration of spectral geometry, invariably courts the realities of production. It’s a dance with diminishing returns, a constant recalibration of theory against the relentless entropy of real-world data. One finds echoes of this in Brian Kernighan’s observation: “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” The paper’s method, relying on eigenvalue decomposition to identify and prune redundant parameters, feels less like a perfect solution and more like a sophisticated delaying action – a momentary stay against the inevitable accrual of technical debt. It’s a valiant effort to wrestle order from chaos, knowing full well that chaos will, eventually, have its way.

What’s Next?

The application of random matrix theory to neural network analysis feels, predictably, like trading one set of unknowns for another. This work demonstrates a path to characterizing network behavior through spectral properties – a neat trick, if it holds. However, the leap from theoretical eigenvalue distributions to robust, production-ready hallucination detection remains substantial. Tests, as always, are a form of faith, not certainty; a model that performs beautifully on curated out-of-distribution samples will inevitably find novel ways to fail when exposed to actual, adversarial data.

The compression aspect, while promising, skirts the central issue of what information is truly redundant. Simply pruning weights based on spectral characteristics assumes a uniformity of importance that rarely exists. One suspects that the ‘compressed’ networks will reveal unforeseen fragility when subjected to distribution shifts. Further work will likely focus on adaptive pruning strategies, acknowledging that the cost of retaining a few seemingly unnecessary parameters is often lower than the cost of a Monday morning outage.

Ultimately, the enduring challenge isn’t finding elegant mathematical descriptions of neural networks, but building systems that degrade gracefully. The beauty isn’t in code cleanliness, but in resilience. The field will likely move toward hybrid approaches, combining spectral analysis with more pragmatic, data-driven robustness techniques – accepting that perfect theory always collides with the messy reality of production.

Original article: https://arxiv.org/pdf/2601.17357.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-28 03:35