Author: Denis Avetisyan

A new approach allows models to refine their problem-solving skills during testing, unlocking significant performance gains across diverse scientific fields.

Researchers demonstrate a reinforcement learning-based test-time training method for continual learning and algorithm discovery, achieving state-of-the-art results in areas like mathematics, single-cell analysis, and GPU kernel optimization.

Achieving state-of-the-art performance often requires extensive pre-training and fine-tuning, yet adapting to novel problems remains a challenge. This paper, ‘Learning to Discover at Test Time’, introduces a method for continual reinforcement learning during test time, enabling a language model to refine solutions specifically for the problem at hand. We demonstrate that this “Test-Time Training to Discover” (TTT-Discover) approach yields superior results across diverse domains-from resolving mathematical problems and optimizing GPU kernels to excelling in algorithm competitions and advancing single-cell analysis-often surpassing results achieved with closed-source models. Could this paradigm shift test-time adaptation from mere inference to active discovery and optimization?

Deconstructing the Problem: Why LLMs Stumble

Despite remarkable advancements, Large Language Models frequently falter when confronted with problems requiring extended, multi-step reasoning-a limitation particularly evident in the domain of competitive programming. These models demonstrate proficiency in tasks involving pattern recognition and information retrieval, yet struggle to devise and execute complex algorithms that demand careful planning, hypothesis testing, and iterative refinement. Competitive programming, with its emphasis on crafting efficient solutions under time constraints, exposes this weakness; successful contestants must not only know algorithms but also apply them creatively, debug effectively, and adapt strategies based on real-time feedback-cognitive processes that currently exceed the capabilities of most LLMs. The challenge lies not merely in possessing knowledge, but in the ability to orchestrate that knowledge through a sustained, deliberate problem-solving process.

Conventional machine learning strategies, such as pre-training on massive datasets followed by fine-tuning for specific tasks, frequently fall short when confronted with the dynamic demands of competitive programming and other complex problem-solving arenas. These methods typically assume a degree of similarity between training and testing conditions, an assumption that breaks down when facing entirely novel challenges. The rigidity inherent in pre-defined weights and biases struggles to accommodate the creative, adaptive reasoning required to devise solutions on the fly, particularly in real-time contests where immediate responses are crucial. Consequently, models trained through these traditional avenues often exhibit limited generalization ability and an inability to effectively navigate the unpredictable landscape of complex, unseen problems, highlighting the need for more flexible and adaptable approaches to artificial intelligence.

Dynamic Adaptation: The Power of Test-Time Training

Test-Time Training (TTT) deviates from traditional machine learning paradigms by performing parameter updates during inference. Rather than maintaining a static model after the training phase, TTT leverages each input instance to refine the model’s weights through gradient descent or similar optimization techniques. This allows the model to adapt to the specific characteristics of the encountered test data, potentially improving performance on previously unseen examples. The updated parameters are typically discarded after processing each instance, ensuring the model remains adaptable to subsequent inputs and avoids catastrophic forgetting. This dynamic adaptation is particularly beneficial in scenarios where the test distribution differs from the training distribution or where individual instances exhibit unique properties.

Static models, deployed with fixed parameters, lack the ability to adjust to variations in input data. In contrast, test-time training enables a dynamic response by iteratively updating model weights during inference. This is particularly relevant in competitive programming, where the distribution of problems changes frequently; a model trained on past competitions may perform suboptimally on novel problem sets. The ability to adapt to these shifting distributions-by, for example, refining parameters based on the specific characteristics of encountered test cases-allows for improved generalization and sustained performance in a non-stationary environment.

Integrating Test-Time Training (TTT) with established search algorithms addresses a key limitation of both approaches: static models lack adaptability to novel instances, while pure search can be computationally expensive. TTT refines model parameters based on the current input during evaluation, providing adaptability. However, relying solely on parameter updates can lead to suboptimal solutions. Combining TTT with search algorithms – such as beam search or A* – allows the search process to be guided by a model that is dynamically adapting to the specific problem instance. This synergy enables more informed exploration of the solution space, leveraging the adaptability of learning with the efficiency of targeted search, and ultimately improving solution quality and reducing computational cost compared to either approach used in isolation.

TTT-Discover: Reinforcement Learning for Algorithmic Evolution

TTT-Discover extends the TTT methodology by incorporating Reinforcement Learning (RL) techniques to enhance its problem-solving capabilities. Specifically, the Entropic Policy Gradient is utilized as a key component of the RL agent’s learning process. This gradient introduces an entropy bonus to the reward function, incentivizing the agent to explore a wider range of potential solutions rather than converging prematurely on a suboptimal strategy. By maximizing both reward and entropy, TTT-Discover mitigates the risk of getting stuck in local optima and promotes a more robust and comprehensive search for optimal solutions across diverse problem spaces.

The TTT-Discover method employs a carefully constructed reward function to direct the Reinforcement Learning agent’s search for effective problem-solving strategies. This function assigns numerical values to the agent’s actions based on their contribution to solving the target problem, incentivizing behaviors that lead to successful outcomes. Through iterative trial and error, the agent learns to maximize cumulative reward, effectively discovering optimal or near-optimal solutions without explicit programming. The reward function’s design is critical; it must accurately reflect the problem’s requirements and provide sufficient signal for the agent to differentiate between effective and ineffective strategies, guiding the learning process toward desirable solutions.

KL Divergence is employed as a regularization term during policy updates within the TTT-Discover reinforcement learning framework to balance exploration and exploitation. Specifically, it measures the difference between the current policy and a reference policy – typically the initial policy or an average of past policies – and adds a penalty proportional to this divergence to the reward function. This discourages excessively large policy updates, preventing the agent from prematurely converging on suboptimal solutions and encouraging continued exploration of the solution space. By controlling the magnitude of policy changes, KL Divergence promotes stability during training and helps the agent refine its strategy more effectively, leading to improved performance and generalization capabilities.

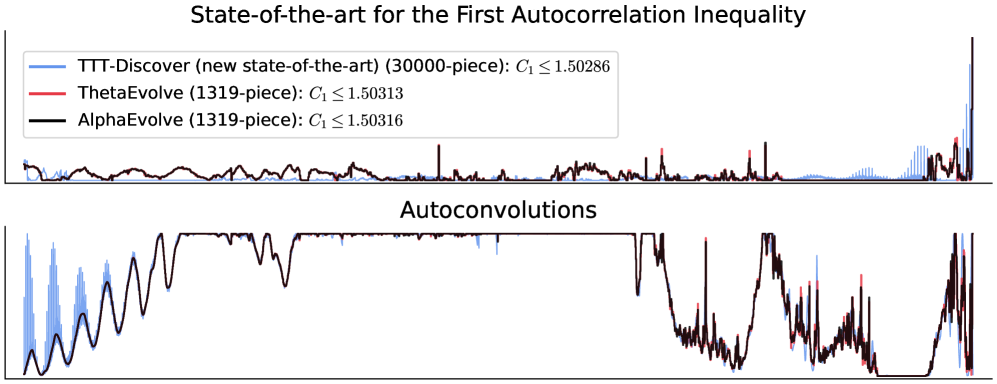

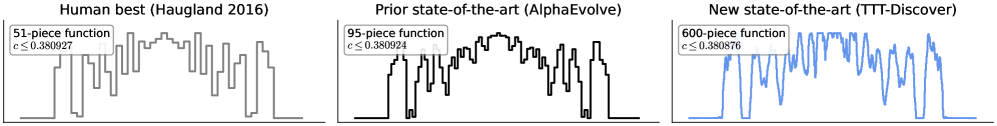

TTT-Discover has demonstrated state-of-the-art performance across multiple distinct domains. In kernel engineering, the method facilitates the discovery of novel kernel functions optimized for specific machine learning tasks. Within algorithm design, TTT-Discover generates algorithms that outperform existing approaches on benchmark problems. The method has also achieved advancements in mathematical problem-solving, notably establishing a new upper bound on the Erdős’ Minimum Overlap Problem. Beyond these computational areas, TTT-Discover has been successfully applied to the analysis of single-cell biological data, achieving competitive results on the OpenProblems benchmark, indicating its versatility and potential for scientific discovery.

During the AHC058 automated heuristic contest, the TTT-Discover method achieved a top ranking amongst participating systems. This performance indicates the efficacy of the approach in the domain of competitive programming, which requires rapid development of effective algorithms to solve complex problems under strict time constraints. The contest serves as a benchmark for comparing the performance of different automated problem-solving techniques, and a top ranking demonstrates TTT-Discover’s ability to generate competitive solutions within the contest’s defined parameters and evaluation criteria.

TTT-Discover established a new upper bound of 0.380876 for the Erdős’ Minimum Overlap Problem, representing an improvement of 0.000016 over the previously reported state-of-the-art result. This problem seeks to find the minimum possible overlap between two sets of size n, and achieving tighter upper bounds requires novel algorithmic approaches. The reported improvement, while incremental, demonstrates TTT-Discover’s capacity to refine solutions for established mathematical challenges and contribute to ongoing research in discrete mathematics. This result was obtained through the application of reinforcement learning techniques, allowing for exploration of a vast solution space and identification of configurations that yield improved performance on this specific problem instance.

Evaluation of TTT-Discover on the OpenProblems Benchmark for single-cell analysis demonstrated its ability to generalize beyond competitive programming challenges. This benchmark assesses performance on unsolved biological problems, specifically focusing on data analysis tasks within single-cell genomics. The achieved competitive score indicates that the method, originally designed for algorithmic problem solving, can be effectively adapted to extract meaningful insights from complex biological datasets, suggesting a broader applicability for automated scientific discovery and data-driven research.

Hardware Synergy: Accelerating Dynamic Intelligence

Real-time performance in dynamic learning systems isn’t solely a matter of clever algorithms; it demands a holistic approach encompassing both computational efficiency and specialized hardware. While sophisticated learning algorithms can minimize the number of operations required, their full potential remains untapped without considering the underlying hardware architecture. Efficient algorithms executing on inadequate hardware will inevitably face bottlenecks, hindering responsiveness. Consequently, a synergistic design-where algorithmic steps are optimized to exploit the parallel processing capabilities of accelerators like Graphics Processing Units (GPUs)-becomes paramount. This co-design philosophy ensures that computations are not only logically streamlined but also physically suited to the available resources, ultimately paving the way for truly interactive and adaptive systems.

Optimizing computational kernels for modern Graphics Processing Units (GPUs) demands a synergistic approach combining meticulous kernel engineering with automated auto-tuning techniques. Kernel engineering involves rewriting and restructuring the core computational routines – the kernels – to fully exploit the massively parallel architecture of GPUs. However, achieving peak performance isn’t simply about clever coding; the optimal kernel configuration is often highly specific to the GPU hardware and the characteristics of the data being processed. This is where auto-tuning comes in, employing algorithms to systematically search the vast design space of possible kernel configurations – parameters like thread block size, memory access patterns, and loop unrolling – and identify those that maximize throughput. Through this iterative process of refinement, the computational efficiency of the kernels is dramatically increased, allowing for substantial acceleration of data-intensive tasks and unlocking the full potential of the GPU hardware.

The architecture of TTT-Discover is designed to fully exploit the massively parallel processing power inherent in modern Graphics Processing Units (GPUs). Traditional computational approaches often struggle with the demands of dynamic learning, particularly during the iterative training and evaluation cycles required for complex tasks. However, by offloading computationally intensive operations – such as matrix multiplications and the evaluation of numerous potential solutions – to the GPU, TTT-Discover achieves substantial speedups. This parallelization allows for the simultaneous processing of multiple data points and hypotheses, dramatically reducing the time needed for both training and assessing the performance of the evolving system. The result is a significantly accelerated discovery process, enabling rapid adaptation and optimization in dynamic environments.

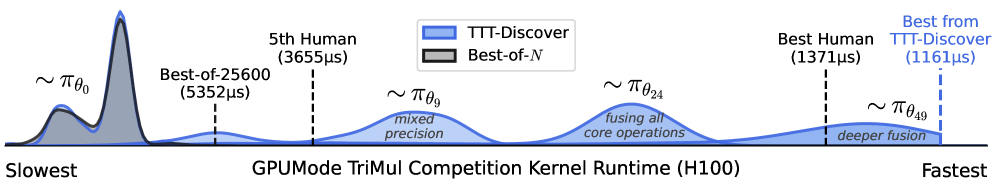

TTT-Discover recently established a new performance standard by achieving the fastest runtime on the TriMul H100 benchmark, a significant feat in the field of computational algorithms. This accomplishment isn’t merely incremental; the system demonstrably outperformed kernels crafted by leading human experts-those previously at the top of the public leaderboard. The result showcases the power of dynamically learned algorithms, highlighting how automated optimization can surpass manually tuned implementations. This breakthrough suggests a pathway toward algorithms that not only execute efficiently on modern hardware but also adapt and improve performance over time, potentially redefining benchmarks for computational speed and resource utilization.

Establishing a reliable yardstick for performance evaluation is crucial in the field of dynamic learning, and frameworks like OpenEvolve serve precisely that purpose. This established system offers a standardized suite of benchmarks and testing protocols, allowing for objective comparison of TTT-Discover’s advancements against existing, highly-optimized kernels. By consistently measuring against OpenEvolve’s results, researchers can definitively quantify the gains achieved through the synergistic interplay of dynamic learning and hardware acceleration, demonstrating not just theoretical improvements, but concrete performance benefits in real-world applications. This benchmark-driven approach fosters transparency and facilitates further innovation within the community, ensuring that progress is measurable and reproducible.

The Horizon of Adaptive Intelligence

The core innovations driving TTT-Discover-a system initially demonstrated through competitive programming challenges-hold considerable promise for advancing artificial intelligence beyond narrow applications. The techniques employed, focusing on iterative self-play, dynamic strategy refinement, and a search for universally effective approaches, aren’t inherently limited to coding contests. These principles can be generalized to create AI agents capable of adapting to unforeseen circumstances and exhibiting resilience in complex, real-world scenarios. By prioritizing the process of learning-how to effectively explore, exploit, and improve-rather than solely focusing on achieving a specific outcome, this work paves the way for more robust and versatile AI systems, potentially applicable to fields ranging from robotics and resource management to scientific discovery and personalized medicine. The ability to continuously refine strategies, independent of pre-programmed solutions, represents a significant step toward truly adaptive intelligence.

Traditional artificial intelligence often relies on models trained on fixed datasets, creating systems that struggle when faced with unforeseen circumstances or changing conditions. However, the capacity for real-time adaptation offers a powerful solution to this limitation. By allowing models to continuously learn and refine their strategies during operation, rather than solely relying on pre-programmed knowledge, these systems can navigate dynamic environments with increased robustness and efficiency. This approach mirrors the way humans learn – not just from past experiences, but from immediate feedback and ongoing adjustments – and promises a new generation of AI capable of thriving in complex, unpredictable situations, effectively bridging the gap between static intelligence and genuine adaptability.

Continued advancements in adaptive intelligence necessitate extending these techniques beyond their current scope, with future studies concentrating on increasingly complex challenges. Researchers are actively investigating methods to effectively scale these learning algorithms to handle high-dimensional problem spaces and real-world datasets. A crucial area of exploration involves refining reward shaping strategies – carefully designing the feedback signals that guide the learning process – and developing more efficient exploration techniques. These enhancements aim to enable agents to not only discover optimal solutions but also to generalize their learning capabilities to novel and unforeseen situations, ultimately fostering a new generation of truly adaptable AI systems.

The development of artificial intelligence is increasingly focused on creating agents capable of not simply executing pre-programmed tasks, but of fundamentally improving their own learning processes. This recent work signifies progress toward that goal, demonstrating a pathway for AI to move beyond reliance on static datasets and fixed algorithms. By enabling systems to analyze and refine their strategies during problem-solving, these agents exhibit a form of meta-learning – learning how to learn more efficiently. This isn’t merely about achieving better performance on a single task, but about equipping AI with the capacity to adapt quickly to unforeseen challenges and continuously enhance its problem-solving abilities, ultimately fostering a new generation of truly intelligent and resilient systems.

The pursuit of optimized performance, as demonstrated in this work on test-time training, echoes a fundamental principle of system understanding. One begins with a challenge: what happens if the model continues to learn during evaluation, pushing the boundaries of its initial training? This paper doesn’t merely accept the limitations of pre-defined algorithms; instead, it actively explores what happens when those rules are bent, refined, and even broken during the test phase. As Donald Davies observed, “The best way to predict the future is to create it.” This sentiment perfectly encapsulates the approach taken here-not passively accepting performance, but actively creating improved results through continual refinement, whether it’s in mathematics, single-cell analysis, or GPU kernel optimization. The study demonstrates that understanding a system often necessitates pushing its limits.

Beyond the Immediate Exploit

The demonstrated capacity for a language model to refine itself during inference represents more than incremental progress; it’s an exploit of comprehension. The system isn’t merely applying learned patterns, but actively reshaping them against the live feedback of the problem space. Yet, this success illuminates the fragility of current evaluation metrics. Performance gains across mathematics, algorithm design, and even specialized fields like single-cell analysis are impressive, but they sidestep a fundamental question: what constitutes ‘optimal’ behavior when the model is simultaneously defining and solving the problem? The current paradigm implicitly assumes a fixed ground truth, an assumption increasingly strained by the model’s adaptive capacity.

Future work must address the inherent instability of continual self-improvement. The presented method, while effective, lacks explicit mechanisms to prevent catastrophic forgetting or the drift towards locally optimal, yet ultimately suboptimal, solutions. A crucial next step involves developing formal methods for auditing and controlling the model’s internal state during test-time training-essentially, a debugger for emergent intelligence.

Ultimately, this research suggests a shift in perspective. The goal isn’t to build models that know the answers, but systems capable of systematically discovering them. The true challenge lies not in achieving peak performance on predefined benchmarks, but in engineering a process of reliable, auditable, and beneficial self-modification. The current work is a promising breach in the wall of static intelligence, but the real exploration has only just begun.

Original article: https://arxiv.org/pdf/2601.16175.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Gold Rate Forecast

- DOT PREDICTION. DOT cryptocurrency

- Silver Rate Forecast

- Top 15 Insanely Popular Android Games

- 4 Reasons to Buy Interactive Brokers Stock Like There’s No Tomorrow

- EUR UAH PREDICTION

- Did Alan Cumming Reveal Comic-Accurate Costume for AVENGERS: DOOMSDAY?

- ELESTRALS AWAKENED Blends Mythology and POKÉMON (Exclusive Look)

- New ‘Donkey Kong’ Movie Reportedly in the Works with Possible Release Date

- Core Scientific’s Merger Meltdown: A Gogolian Tale

2026-01-23 10:27