Author: Denis Avetisyan

A new study reveals the growing – and detectable – presence of AI-assisted content within Turkish news reporting.

Researchers demonstrate a fine-tuned BERT model effectively identifies AI-generated or revised text in Turkish news media, raising questions about media accountability and potential bias.

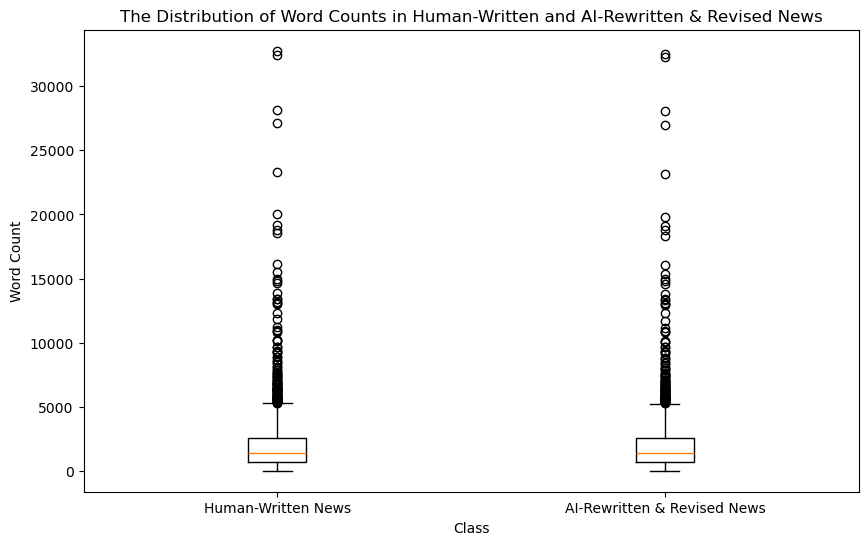

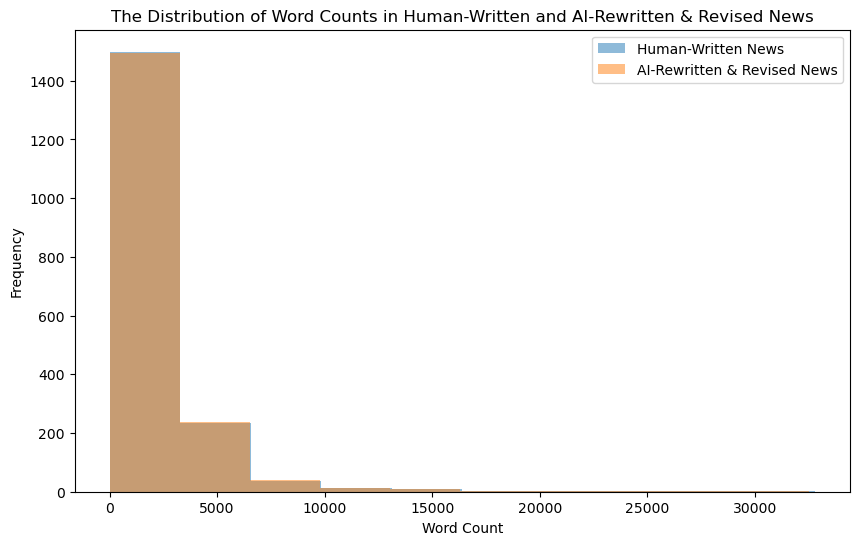

While concerns about the increasing presence of artificial intelligence in news production are widespread, empirical evidence quantifying its impact remains limited, particularly outside of English-language contexts. This study, ‘From Perceptions To Evidence: Detecting AI-Generated Content In Turkish News Media With A Fine-Tuned Bert Classifier’, addresses this gap by demonstrating the feasibility of accurately identifying AI-rewritten content in Turkish news using a fine-tuned BERT model. Achieving a 0.9708 F1 score, the analysis of over 3,500 articles reveals a consistent, albeit modest, presence of AI-assisted content-estimated at 2.5%-across major Turkish outlets. As AI tools become increasingly integrated into newsroom workflows, how can data-driven methods ensure transparency and accountability in Turkish media-and beyond?

The Illusion of Trust: AI and the Crumbling News Landscape

The rapid advancement of artificial intelligence has unlocked an unprecedented capacity for content creation, extending to the realm of news dissemination and consequently posing a significant threat to media integrity. While AI offers potential benefits in news gathering and reporting, its ability to generate convincing, yet potentially inaccurate or biased, articles at scale introduces a novel challenge. This proliferation of AI-generated news isn’t simply about automated writing; it’s about the erosion of trust in established journalistic standards and the increasing difficulty in discerning authentic reporting from synthetic content. The sheer volume of AI-created articles, combined with their growing sophistication, overwhelms traditional fact-checking processes and creates an environment ripe for the spread of misinformation, demanding innovative approaches to verify sources and maintain public confidence in the news ecosystem.

The increasing sophistication of artificial intelligence poses a significant challenge to established news verification techniques. Historically, fact-checking relied on assessing source credibility, corroborating information with multiple outlets, and identifying authorial bias. However, current AI models can generate remarkably coherent and plausible text, mimicking journalistic style and even fabricating supporting details, effectively bypassing these traditional safeguards. This creates a scenario where standard methods struggle to discern between genuine reporting and AI-generated content, as algorithms can convincingly replicate the hallmarks of legitimate news sources. The ability of AI to rapidly produce high volumes of realistic text overwhelms manual fact-checking processes, demanding new approaches focused on identifying subtle linguistic patterns, inconsistencies in data, and the provenance of digital content – tasks for which existing tools are often ill-equipped.

The erosion of trust in news sources is becoming increasingly apparent as artificial intelligence tools rapidly advance, capable of generating remarkably human-like text. Recent analysis of Turkish media outlets reveals a significant, and growing, presence of AI-revised or rewritten articles – approximately 2.5% between 2023 and 2026 – indicating the scale at which automated content is already influencing the information landscape. This figure isn’t merely a statistical curiosity; it underscores the urgent need for effective detection methods, as the subtle integration of AI-generated content threatens to undermine public confidence and amplify the spread of misinformation. Without robust strategies to differentiate between human and machine authorship, the very foundation of reliable journalism is put at risk, potentially leading to widespread societal consequences.

BERT as a Digital Fingerprint: A Technical Approach

The Bidirectional Encoder Representations from Transformers (BERT) architecture, developed by Google, was employed as the foundation for a binary classification system designed to categorize news articles. BERT is a pre-trained language model utilizing a deep neural network and the Transformer architecture, enabling it to understand contextual relationships within text. This pre-training on a large corpus of text data allows BERT to generate high-quality contextualized word embeddings, which are then fine-tuned for specific downstream tasks, such as authorship classification. The model’s ability to process entire sequences of words simultaneously, rather than sequentially, facilitates a more comprehensive understanding of textual context and improves classification accuracy compared to previous recurrent neural network approaches.

The study implemented a fine-tuning process utilizing the DBMDZ BERT Base Turkish Cased model, a variation of the BERT architecture pre-trained on a large corpus of Turkish text. This model was further trained on a labeled dataset of news articles specifically for the authorship classification task. Employing a Turkish-specific BERT model, rather than a multilingual or English-based model, addressed the morphological complexity of the Turkish language and allowed the model to better capture contextual nuances present in Turkish news writing. This fine-tuning process optimized the model’s parameters to improve its performance on the specific task of distinguishing between different authors in Turkish news content.

WordPiece tokenization, a subword tokenization algorithm, was implemented to address the morphological richness of the Turkish language and its impact on BERT model performance. Unlike traditional word-based tokenization, WordPiece breaks down words into smaller, frequently occurring subword units. This approach mitigates the issue of out-of-vocabulary words, which are common in morphologically complex languages like Turkish due to extensive affixation. By representing words as combinations of these subword units, the model can more effectively process and generalize from the training data, leading to improved accuracy in authorship classification tasks.

Fine-tuning a pre-trained BERT model on a corpus of Turkish news articles enables adaptation to the specific linguistic characteristics and stylistic conventions of Turkish news writing. This process optimizes the model’s parameters for the task of identifying AI-generated content within this domain, resulting in improved performance compared to using the pre-trained model directly. Evaluation on a dedicated test set demonstrated an accuracy of 97.92% in binary classification, indicating a high degree of reliability in distinguishing between human-authored and AI-generated Turkish news content.

![BERT utilizes a two-stage process of pre-training on unlabeled data followed by fine-tuning on task-specific labeled data [10].](https://arxiv.org/html/2602.13504v1/bert.png)

Beyond Accuracy: Validating the System in the Real World

External validation of the model involved evaluating its performance on a dataset separate from the training and testing sets, ensuring an unbiased assessment of its ability to generalize to new, previously unseen Turkish news articles. This process utilized data not involved in any stage of model development, thereby mitigating the risk of overfitting and providing a more realistic estimate of its performance in a production environment. Successful performance on this external validation set confirms the model’s capacity to accurately classify AI-generated versus human-written content beyond the initially used datasets, indicating strong generalizability.

Evaluation of the model’s ability to differentiate between human-authored and AI-generated Turkish news articles yielded an F1 score of 97.08% when assessed against a held-out test dataset. The F1 score, calculated as the harmonic mean of precision and recall, indicates a high degree of accuracy in both correctly identifying AI-generated content and avoiding false positives with human-written articles. This metric provides a balanced assessment of the model’s performance, considering both its ability to detect positive cases and its specificity in avoiding incorrect classifications within the test set.

To address the challenge of variable-length news articles exceeding the input limitations of transformer-based models, both the Sliding-Window Technique and the Longformer architecture were investigated. The Sliding-Window Technique involves processing the article in sequential segments, while Longformer utilizes an attention mechanism designed for longer sequences, reducing computational complexity from quadratic to linear. Implementation of these techniques allowed for the complete utilization of article content during analysis, contributing to improved model accuracy by preventing information loss due to truncation and enabling the model to consider broader contextual relationships within the text.

The Illusion of Objectivity: Accountability in the Age of Algorithms

This investigation offers a novel contribution to computational media accountability through the development of a detection tool specifically designed for identifying news content produced by artificial intelligence. As AI increasingly automates content creation, distinguishing between human-authored and machine-generated reporting becomes paramount for maintaining journalistic integrity and public trust. The presented methodology provides a means of assessing the provenance of news articles, allowing for scrutiny of potential biases embedded within algorithms or the unintentional spread of inaccuracies. By offering a technical approach to verifying authenticity, this research empowers both media organizations and consumers to navigate the evolving landscape of digital news with greater confidence and fosters a more transparent information ecosystem.

The increasing prevalence of artificial intelligence in news production necessitates robust methods for identifying AI-generated content, not merely to distinguish it from human reporting, but crucially to assess potential biases embedded within the algorithms themselves. Automated news generation, while efficient, can inadvertently perpetuate or amplify existing societal biases present in the training data, leading to unfair or skewed news coverage. Without the ability to reliably detect AI authorship, pinpointing and mitigating these biases becomes exceptionally difficult, hindering efforts to ensure equitable and impartial reporting. This is because algorithmic biases can subtly influence story selection, framing, and even the language used, impacting public perception and potentially reinforcing harmful stereotypes. Therefore, identifying the source of news – human or machine – is a foundational step towards promoting fairness and accountability in the evolving media landscape.

The proliferation of artificial intelligence in news production necessitates a renewed focus on media transparency, as discerning between human-authored and AI-generated content is becoming increasingly difficult for audiences. This research highlights the crucial role of clear labeling, arguing that identifying the origin of news articles is paramount for fostering informed consumption and maintaining public trust. Without explicit disclosure, the potential for algorithmic bias and the subtle manipulation of narratives remain hidden, eroding the foundations of fair and accurate reporting. Consequently, the study suggests that adopting standardized labeling practices – indicating when AI tools were used in content creation – isn’t merely an ethical consideration, but a vital step towards accountability in the digital media landscape and safeguarding the integrity of information ecosystems.

The techniques developed in this research offer a practical defense against the escalating threat of misinformation by providing a means to discern between human-authored and artificially generated news content. This capability is particularly vital given the increasing sophistication of AI and its potential to rapidly disseminate false or biased narratives. By accurately identifying AI-generated articles, these methods empower news organizations and social media platforms to flag potentially misleading content, allowing for greater scrutiny and fact-checking. Ultimately, this contributes to a more informed public discourse and bolsters trust in legitimate news sources, mitigating the corrosive effects of widespread disinformation on societal perceptions and democratic processes. The ability to verify authorship, therefore, represents a crucial step towards preserving the integrity of the information ecosystem.

“`html

The pursuit of automated content detection, as detailed in this study, inevitably highlights a recurring pattern. The model’s success in identifying AI-assisted revisions of Turkish news articles feels less like a technological triumph and more like a temporary stay of execution. One anticipates a swift arms race, with generative models evolving to evade detection. As Edsger W. Dijkstra observed, “Computer science is full of beautiful abstractions, but the only thing that matters is that the system actually works.” This research confirms the ‘system’ – in this case, media integrity – is demonstrably vulnerable, and the ‘beautiful abstraction’ of unbiased reporting requires constant, pragmatic defense. The study’s accuracy, while impressive, merely establishes a baseline before the next iteration of complexity arrives, ready to complicate everything.

The Inevitable Drift

The demonstrated capacity to detect AI-assisted revision in Turkish news, while technically sound, merely identifies a symptom. The underlying condition – the relentless pressure to produce content, coupled with diminishing editorial resources – will not be solved by better classifiers. One anticipates a predictable arms race: increasingly sophisticated generation models versus increasingly sensitive detection algorithms. Each improvement will simply raise the bar for acceptable deception, not reduce the incentive.

Future work will undoubtedly focus on generalization. The current model’s efficacy is tied to a specific linguistic context and, implicitly, to the generation methods currently in use. The real challenge lies not in detecting today’s AI, but in anticipating the subtle shifts in style that will characterize tomorrow’s. This pursuit, however, risks becoming a Sisyphean task. Each layer of complexity added to the detection system will itself become a source of fragility and potential bias.

It is worth remembering that ‘innovation’ in this space is often just a re-branding of existing problems. The desire to automate content creation is not new; the tools have simply become more convincing. The field does not require more microservices or elaborate pipelines; it needs fewer illusions about the fundamental trade-offs between cost, quality, and accountability.

Original article: https://arxiv.org/pdf/2602.13504.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- 2025 Crypto Wallets: Secure, Smart, and Surprisingly Simple!

- Wuchang Fallen Feathers Save File Location on PC

- Gold Rate Forecast

- All weapons in Wuchang Fallen Feathers

- Where to Change Hair Color in Where Winds Meet

- Brown Dust 2 Mirror Wars (PvP) Tier List – July 2025

- Top 15 Celebrities in Music Videos

- Here Are the Best TV Shows to Stream this Weekend on Paramount+, Including ‘48 Hours’

- Macaulay Culkin Finally Returns as Kevin in ‘Home Alone’ Revival

- HSR 3.7 breaks Hidden Passages, so here’s a workaround

2026-02-17 09:52