Author: Denis Avetisyan

A novel approach to financial modeling leverages information theory to create an idealized market framework for more accurate asset pricing and risk management.

This review details a model minimizing surprisal and Kullback-Leibler divergence to derive a benchmark-neutral framework based on stochastic processes and market microstructure.

Traditional financial models often struggle to reconcile market efficiency with observed statistical properties of asset returns. This paper, ‘Information-Theoretic Approach to Financial Market Modelling’, proposes a novel framework that treats the financial market as a communication system governed by information-theoretic principles. The resulting ‘minimal market model’, derived by minimizing surprisal and Kullback-Leibler divergence, characterizes asset price dynamics as squared radial Ornstein-Uhlenbeck processes evolving in activity times. Could this approach offer a more robust foundation for understanding price formation and developing optimal trading strategies?

The Illusion of Precision in Financial Models

Traditional financial modeling frequently encounters limitations when attempting to represent the intricate and ever-changing character of market volatility. These models often depend on simplifying assumptions – such as normally distributed returns or constant volatility – to make calculations manageable. However, real-world financial data rarely conforms to these idealized conditions. The inherent complexity of market interactions, driven by behavioral factors, geopolitical events, and unforeseen shocks, introduces non-linear dynamics that these simplified models struggle to accommodate. Consequently, predictions generated from these models can significantly deviate from actual market behavior, particularly during periods of extreme volatility or crisis. This disconnect underscores the need for more sophisticated approaches capable of capturing the nuanced and dynamic nature of financial markets, moving beyond reliance on overly simplistic representations of reality.

Traditional financial models often presume symmetrical distributions of returns, meaning both gains and losses are expected to follow a similar pattern. However, real-world financial data consistently demonstrates asymmetry – large negative movements, or ‘black swan’ events, occur far more frequently than equally large positive ones. Compounding this issue is the phenomenon of clustering, where periods of high volatility tend to be followed by further volatility, and conversely, calm periods are often succeeded by more calm. Because these models fail to incorporate these inherent characteristics of financial markets, predictions frequently underestimate risk and overestimate stability. Consequently, the resulting forecasts can be significantly off, leading to flawed investment strategies and potentially substantial financial losses, particularly during times of market stress. This limitation underscores the need for alternative modeling approaches that better reflect the true, often unpredictable, nature of financial data.

The prevailing limitations in financial modeling necessitate a shift towards frameworks prioritizing both simplicity and analytical power. Current methodologies often grapple with excessive complexity, hindering their ability to capture the essential drivers of market behavior. A more parsimonious approach, one that distills market dynamics to their core components, promises enhanced interpretability and predictive capability. Such a framework wouldn’t merely aim to replicate observed data, but to articulate the underlying mechanisms generating it, allowing for more robust stress testing and a clearer understanding of systemic risk. This pursuit of analytical tractability isn’t about sacrificing realism, but about achieving a more fundamental and ultimately more reliable representation of how financial markets actually function, enabling proactive rather than reactive strategies.

Stripping Away the Noise: A Foundation in Information and Stationary Factors

The model’s factor dynamics are predicated on the information-theoretic principle of surprisal minimization. Surprisal, quantified as -log p(x), where p(x) is the probability density of an event x, represents the degree of unexpectedness: the less probable an outcome, the larger its surprisal. By minimizing the surprisal associated with changes in market factors, the model aims to predict factor movements that are statistically probable given the existing data distribution. This approach favors factor transitions that reduce uncertainty and align with observed patterns, thereby generating a dynamic system driven by the reduction of information content.
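To make the definition concrete, here is a minimal sketch of surprisal in Python. The function names and the choice of a Gaussian density are illustrative assumptions and are not taken from the paper; the only thing borrowed from the text is the formula -log p(x).

```python
import math


def surprisal(p: float) -> float:
    """Surprisal in nats of an outcome that has probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    return -math.log(p)


def gaussian_surprisal(x: float, mean: float = 0.0, std: float = 1.0) -> float:
    """Surprisal -log p(x) under a Gaussian density (an illustrative choice).

    For a density, the value can be negative when p(x) > 1.
    """
    if std <= 0.0:
        raise ValueError("std must be positive")
    z = (x - mean) / std
    # -log of the normal density, written out to avoid underflow in the tails.
    return 0.5 * math.log(2.0 * math.pi * std * std) + 0.5 * z * z


if __name__ == "__main__":
    # A rare outcome carries more surprisal than a common one.
    print(surprisal(0.5), surprisal(0.01))
    # A move three standard deviations out is more surprising than no move.
    print(gaussian_surprisal(0.0), gaussian_surprisal(3.0))
```

Working with the closed-form log-density rather than calling `math.log` on a computed density keeps the tail values finite, which matters when extreme moves are exactly the cases of interest.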

The model’s foundation rests on ‘StationaryFactors’, which are defined as market factors undergoing normalization to ensure stable probability densities over time. This normalization process maintains consistent statistical properties, preventing unbounded or erratic behavior in the factor dynamics. By concentrating on factors exhibiting this stability, the model circumvents the need to account for unpredictable shifts or drifts in the underlying market variables, thus creating a more predictable and robust framework for analysis. The use of StationaryFactors facilitates a simplified representation of market movements, allowing for efficient computation and accurate simulation of asset behavior without requiring complex statistical corrections.

The developed model constructs a minimal market representation, enabling an alternative to traditional risk-neutral pricing methodologies. By focusing on a limited set of stationary factors and their interactions, the model avoids the need for complex assumptions regarding investor preferences or the specification of a risk-free rate. This simplification allows for a direct valuation of assets based on the information contained within these factors, circumventing the intricacies of deriving risk-neutral probabilities and characteristic functions typically required in conventional asset pricing frameworks. The resulting valuation approach is computationally efficient and provides a self-contained mechanism for determining asset values without reliance on external market data beyond the observed factor dynamics.

The Devil’s in the Detail: Modeling Dynamics with Squared Radial Processes

The model’s foundational component is the SquaredRadial Ornstein-Uhlenbeck (OU) process, a stochastic process chosen for its analytical tractability and its ability to model mean reversion. This process dictates the evolution of key elements – factors, benchmarks, and their constituent parts – by defining their dynamic behavior over time. Specifically, it is defined by a drift term, a diffusion term, and a mean-reversion rate, which together describe how these elements move towards a long-term equilibrium. The squared radial component introduces a particular structure to the covariance, which shapes the interaction between components and allows sensitivities and correlations within the model to be calculated. The dynamics are written as

$$dX_t = \theta(\mu - X_t)\,dt + \sigma\sqrt{|r|}\,dW_t,$$

where X_t represents the factor, θ is the mean-reversion rate, μ is the long-term mean, σ is the volatility, r denotes the radial component, and dW_t is the increment of a Wiener process.
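The mean-reverting behavior described above can be illustrated with a short simulation. This is a sketch under stated assumptions, not the paper's implementation: it assumes the common square-root form in which the diffusion term scales with the square root of X_t itself, uses a simple truncated Euler scheme, and all names and parameter values are hypothetical.

```python
import math
import random


def simulate_sqrt_mean_reverting(x0, theta, mu, sigma, dt, n_steps, seed=0):
    """Euler path of dX = theta*(mu - X)*dt + sigma*sqrt(X)*dW.

    Assumption: diffusion scales with sqrt(X_t). Values are truncated at
    zero so the square root stays defined.
    """
    if x0 < 0.0 or dt <= 0.0 or n_steps < 0:
        raise ValueError("need x0 >= 0, dt > 0 and n_steps >= 0")
    rng = random.Random(seed)
    x = x0
    path = [x]
    for _ in range(n_steps):
        dw = rng.gauss(0.0, math.sqrt(dt))  # Wiener increment over dt
        x = x + theta * (mu - x) * dt + sigma * math.sqrt(x) * dw
        x = max(x, 0.0)  # truncation keeps the state non-negative
        path.append(x)
    return path


if __name__ == "__main__":
    path = simulate_sqrt_mean_reverting(
        x0=4.0, theta=1.0, mu=1.0, sigma=0.3, dt=0.01, n_steps=1000
    )
    print(path[0], path[-1])
```

Setting `sigma=0.0` removes the noise and leaves only the drift, which makes the pull towards `mu` easy to see in isolation.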

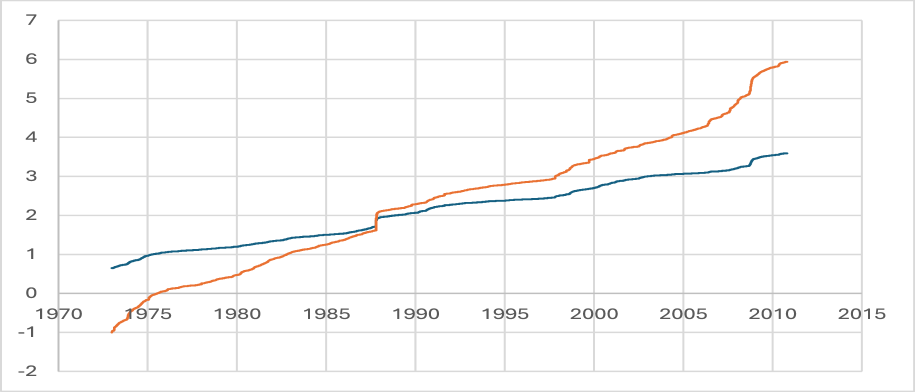

The SquaredRadialOUProcess’s temporal evolution is governed by ‘ActivityTime’, a variable quantifying the rate of trading and information dissemination within the modeled system. This ‘ActivityTime’ is not independent; it is directly driven by ‘MarketTime’, a linearly progressing variable as visually demonstrated in figures 4.1 and 4.2. Consequently, changes in ‘MarketTime’ result in corresponding adjustments to ‘ActivityTime’, and subsequently influence the dynamics of the underlying factors and benchmarks. The linear progression of ‘MarketTime’ ensures a predictable, albeit scalable, relationship between time and the intensity of activity within the model.
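One common way to express such a time change is to treat activity time as the accumulated integral of a positive activity rate over market time. The sketch below assumes exactly that; the rate function, the grid, and the function name are hypothetical and are not taken from the paper.

```python
def activity_time(rate, market_times):
    """Cumulative activity time on a grid of market times.

    Assumption: activity time is the integral of a positive rate over
    market time, approximated here with the trapezoidal rule.
    """
    tau = [0.0]
    for t0, t1 in zip(market_times, market_times[1:]):
        if t1 <= t0:
            raise ValueError("market_times must be strictly increasing")
        tau.append(tau[-1] + 0.5 * (rate(t0) + rate(t1)) * (t1 - t0))
    return tau


if __name__ == "__main__":
    grid = [i * 0.1 for i in range(11)]  # market time runs linearly from 0 to 1
    # A constant rate makes activity time a rescaled copy of market time.
    print(activity_time(lambda t: 2.0, grid)[-1])
    # A rising rate makes activity time speed up relative to market time.
    print(activity_time(lambda t: 1.0 + t, grid)[-1])
```

Because the rate is positive, the resulting activity time is increasing in market time, so a process indexed by activity time can always be read back on the market-time grid.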

The model’s architecture facilitates the analysis of market factor interactions by defining a system where components evolve according to a SquaredRadial Ornstein-Uhlenbeck (OU) process, driven by ‘ActivityTime’ and referenced to ‘MarketTime’. This allows for the quantification of dependencies between factors, benchmarks, and their constituent parts, enabling researchers to trace the temporal evolution of these relationships. By mathematically representing these dynamics, the framework captures core financial market characteristics such as mean reversion, volatility clustering, and the impact of information flow, providing a robust foundation for predictive modeling and risk assessment. The analytical pathway established by the model allows for the systematic examination of how changes in one factor propagate through the system, influencing other components and ultimately shaping overall market behavior.

The Illusion of Control: Analytical Insights and Model Characteristics

The MinimalMarketModel distinguishes itself through the implementation of constant volatility, a deliberate design choice that significantly streamlines analytical processes. Unlike models incorporating stochastic volatility, this feature permits the derivation of closed-form solutions for various financial instruments, bypassing computationally intensive simulations. This simplification doesn’t arise from a lack of realism, but rather a focus on capturing essential market dynamics with mathematical tractability. By assuming volatility remains constant over time, the model allows for direct calculation of prices and hedging strategies, providing a powerful tool for understanding and managing risk in bond markets. This characteristic facilitates both efficient calibration and rapid evaluation of portfolio performance, making it particularly valuable for real-time applications and complex derivative pricing scenarios.

The MinimalMarketModel demonstrates an inherent capacity to replicate key characteristics of financial time series, notably volatility clustering and the leverage effect. Volatility clustering, the tendency for large price changes to be followed by other large changes, emerges naturally within the model’s dynamics without requiring explicit modeling assumptions. Similarly, the leverage effect, where negative returns are associated with increased volatility, is a spontaneous outcome of the model’s structure. This fidelity to observed data patterns stems from the model’s internal mechanisms, which allow it to realistically simulate the conditional heteroskedasticity frequently seen in financial markets. Consequently, the model doesn’t simply fit historical data; it reproduces the underlying processes that generate these patterns, enhancing its predictive power and providing a more robust framework for risk management and asset pricing.
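A standard diagnostic for volatility clustering is the autocorrelation of squared returns: clustered series show clearly positive values at short lags. The sketch below is a generic check, not a procedure from the paper, and the synthetic return series are invented purely for illustration.

```python
def autocorrelation(xs, lag):
    """Sample autocorrelation of xs at the given positive lag."""
    n = len(xs)
    if not 0 < lag < n:
        raise ValueError("lag must satisfy 0 < lag < len(xs)")
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs)
    if var == 0.0:
        raise ValueError("series is constant")
    cov = sum((xs[i] - mean) * (xs[i + lag] - mean) for i in range(n - lag))
    return cov / var


def squared_return_autocorrelation(returns, lag=1):
    """Autocorrelation of squared returns, a simple clustering diagnostic."""
    return autocorrelation([r * r for r in returns], lag)


if __name__ == "__main__":
    # Synthetic example: a calm regime followed by a volatile regime.
    clustered = [0.01, -0.01] * 50 + [0.05, -0.05] * 50
    # Synthetic counterexample: magnitudes alternate, so there are no regimes.
    alternating = [0.01, 0.05] * 100
    print(squared_return_autocorrelation(clustered))
    print(squared_return_autocorrelation(alternating))
```

The same function could be applied to returns simulated from a candidate model and to observed returns, which gives a direct way to compare how much clustering each exhibits.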

The MinimalMarketModel demonstrates exceptional performance in pricing and hedging, achieving a hedge error below 0.0001 for deeply discounted, long-maturity bonds. This precision is not simply a matter of fitting data; the model’s construction prioritizes a balance between accuracy and complexity, mathematically formalized by minimizing the Kullback-Leibler Divergence at λ^τ²/2. Crucially, the model employs a ‘BenchmarkNeutralPricing’ approach, utilizing the ‘GrowthOptimalPortfolio’ as its numéraire – a technique that ensures a consistent and intuitively understandable pricing framework, independent of arbitrary reference assets. This combination of low error, minimized divergence, and a robust pricing structure positions the model as a powerful tool for analyzing and managing risk in complex fixed-income markets.
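For readers less familiar with the quantity being minimized, the following sketch computes the Kullback-Leibler divergence between two discrete distributions. It illustrates the general definition only; the function name and example distributions are hypothetical, and nothing here reproduces the paper's specific minimization.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) in nats for discrete distributions."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0.0:
            continue  # terms with p_i = 0 contribute nothing
        if qi == 0.0:
            return math.inf  # p puts mass where q has none
        total += pi * math.log(pi / qi)
    return total


if __name__ == "__main__":
    reference = [0.5, 0.5]
    candidate = [0.9, 0.1]
    print(kl_divergence(reference, reference))
    print(kl_divergence(reference, candidate))
```

The divergence is zero only when the two distributions coincide and is not symmetric in its arguments, so the order in which the model and the reference distribution are passed matters.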

The pursuit of a ‘minimal market model’ – an idealized system distilled from information-theoretic principles – feels predictably optimistic. This work, attempting to derive asset pricing through surprisal minimization and Kullback-Leibler divergence, confidently proposes elegance. But one anticipates the inevitable entropy. As Paul Feyerabend observed, “Anything goes.” The very act of deployment, of translating these theoretical constructs into live systems, will introduce unforeseen interactions and emergent behaviors. The model may initially appear to function as designed, minimizing surprisal in controlled simulations, but production environments rarely cooperate with such neatness. It’s a beautiful, fragile thing, this minimal model, and likely already breaking in ways the authors haven’t conceived.

What’s Next?

The pursuit of a ‘minimal market model’ derived from information-theoretic principles feels, predictably, like chasing a phantom. The elegance of minimizing surprisal and Kullback-Leibler divergence does not guarantee robustness against the sheer inventiveness of market participants. Tests, after all, are a form of faith, not certainty. The current framework offers a compelling theoretical structure, but the devil, as always, resides in the mapping to actual, messy data. A significant challenge remains in calibrating these models without falling prey to overfitting, especially given the non-stationarity inherent in financial time series.

Future work will inevitably grapple with the practical limitations of benchmark-neutral pricing. The assumption of a perfectly informed, rational agent, even as an idealized limit, feels increasingly distant from observed behavior. More fruitful avenues may lie in relaxing this assumption, incorporating agent-based modeling to explore the emergent properties of markets driven by bounded rationality and imperfect information. The focus should shift from finding ‘the’ minimal model to understanding the space of plausible minimal models, and the conditions under which each fails spectacularly.

Ultimately, the true test won’t be the theoretical purity of the model, but its ability to survive the weekly deployment. The real world doesn’t care about Kullback-Leibler divergence; it cares about systems that don’t crash on Mondays. Automation will not save anyone; scripts have a habit of deleting production. The pursuit of elegant theories is a worthwhile endeavor, but a healthy dose of skepticism is essential.

Original article: https://arxiv.org/pdf/2602.14575.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-18 04:15