Author: Denis Avetisyan

As AI writing tools become increasingly prevalent, platforms face a critical question: how much transparency is needed regarding the origin of online content?

This review analyzes the optimal conditions for governing AI-generated content, considering the trade-offs between transparency, creator incentives, and overall content quality.

The increasing prevalence of AI-generated content presents a paradox for digital platforms: transparency demands disclosure, yet overzealous regulation risks stifling innovation and creator value. This tension is explored in ‘When Is Self-Disclosure Optimal? Incentives and Governance of AI-Generated Content’, which develops a formal model to analyze platform governance of AI content, finding that mandatory disclosure is only optimal when the benefits of AI outweigh the costs, and its effectiveness diminishes as AI technology advances. The analysis demonstrates that disclosure regimes impact creator incentives, content quality, and platform trust, ultimately suggesting a dynamic approach to enforcement. As AI capabilities continue to evolve, how can platforms best balance the need for transparency with the preservation of a thriving content ecosystem?

The Inevitable Expansion: AI and the Redefinition of Creation

The landscape of content creation is undergoing a dramatic shift, fueled by increasingly sophisticated generative AI models such as GPT-5.2 and Gemini Ultra. These aren’t simply tools for automating basic tasks; they represent a fundamental expansion of creative capacity, capable of producing text, images, audio, and even video with remarkable fidelity and nuance. This rapid advancement extends beyond simple replication; these models demonstrate an ability to synthesize information, adapt to different styles, and generate wholly original content at an unprecedented scale and speed. Consequently, the sheer volume of AI-generated material entering the digital sphere is accelerating, impacting industries ranging from journalism and marketing to entertainment and education, and presenting novel challenges in discerning the source and veracity of information.

The accelerating capabilities of generative artificial intelligence are not merely expanding the volume of available content, but fundamentally challenging established notions of authenticity and, consequently, public trust. As AI systems become increasingly adept at mimicking human creativity – producing text, images, and even videos indistinguishable from those created by people – discerning the origin of information becomes exponentially more difficult. This ambiguity fosters a climate of skepticism, where viewers may unconsciously discount the reliability of all content, fearing undetectable manipulation or fabrication. The proliferation of synthetic media, therefore, doesn’t simply present a technical hurdle; it represents a significant threat to the very foundations of information ecosystems, potentially eroding confidence in news, entertainment, and interpersonal communication.

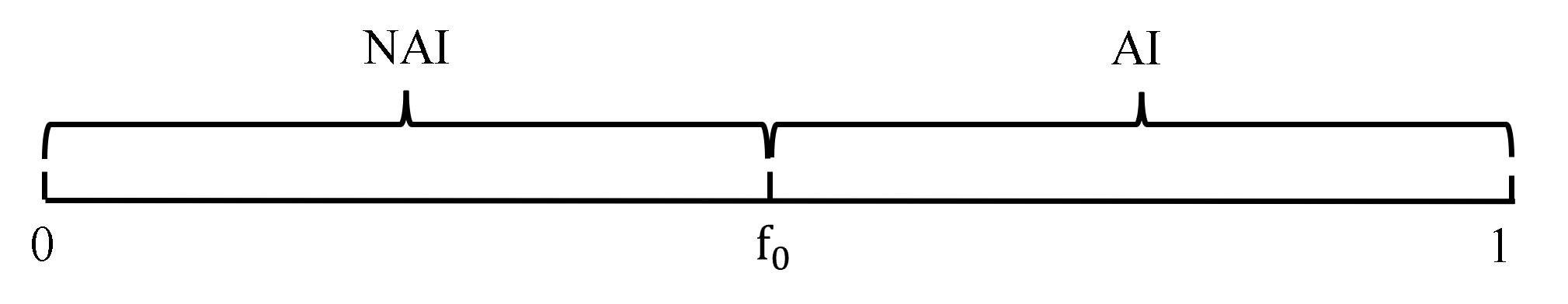

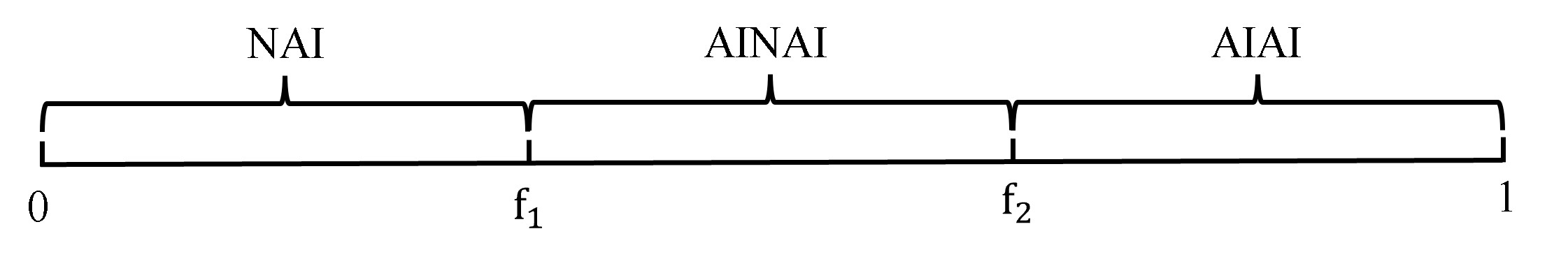

A fundamental challenge in the age of advanced artificial intelligence centers on the increasing difficulty in differentiating between content created by humans and that produced by algorithms. This ambiguity directly impacts perceptions of reliability, as viewers demonstrate a discernible preference for human-authored material – a phenomenon quantified by the ‘Credibility Discount Factor’ (f). Essentially, content suspected of being AI-generated experiences a reduction in perceived trustworthiness, even if factually accurate. This isn’t simply a matter of technological detection; it reflects a deeper cognitive bias where audiences inherently associate human creation with authenticity and emotional resonance, qualities currently lacking in most AI outputs. Consequently, the proliferation of AI-generated content necessitates new strategies for transparency and verification, lest widespread distrust erode the value of information itself.

Platform Governance: Balancing Innovation and Algorithmic Integrity

Effective platform governance is essential for mitigating the consequences of increasing AI-generated content. This governance directly impacts the quality of content disseminated on the platform, as policies regarding disclosure and detection influence creator behavior and the prevalence of potentially misleading or inaccurate material. Simultaneously, governance mechanisms shape creator incentives; policies that are overly restrictive may discourage content creation, while insufficient oversight can lead to a flood of low-quality, AI-generated content. Consequently, platforms must carefully calibrate governance strategies to balance fostering innovation with maintaining content integrity and a sustainable ecosystem for creators, acknowledging that both content quality and creator participation are sensitive to the chosen governance approach.

In response to the increasing prevalence of AI-generated content, platforms including Meta and YouTube have implemented disclosure policies mandating that creators identify when AI tools have been used in the creation of their content. These policies typically require creators to explicitly label videos, images, or text that have been wholly or partially generated by artificial intelligence. The specific implementation varies between platforms, but commonly involves checkboxes, tags, or dedicated sections within the content upload process. The stated purpose of these disclosure requirements is to promote transparency with audiences, allowing viewers to assess content with awareness of its origin, and to mitigate potential misinformation or deceptive practices. Enforcement mechanisms range from content flagging and review to potential account penalties for non-compliance.

Algorithmic detection of AI-generated content, while increasingly employed by platforms, is constrained by imperfect accuracy. Detection performance is characterized by a probability β ranging from 0.5 (roughly chance level for a binary call) to 1.0 (perfect detection). For any β below 1, a non-negligible proportion of AI-generated content may evade detection, and conversely, some human-created content may be falsely flagged. These limitations stem from the evolving sophistication of AI models and the difficulty of distinguishing between nuanced patterns produced by both AI and human creators, so governance cannot rely on automated detection alone and needs a multi-layered approach.
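To make the role of β concrete, the following is a minimal sketch of how many items a detector would be expected to misclassify. It assumes, purely for illustration, that the same accuracy β applies to AI-generated and human-made items alike; the function name and the item counts are hypothetical and do not come from the paper.

```python
def expected_detection_errors(n_ai: int, n_human: int, beta: float) -> dict:
    """Expected misclassifications for a detector that is right with probability beta.

    Assumption (not from the source): errors are symmetric, so the same
    accuracy beta applies to AI-generated and human-made items.
    """
    if not 0.5 <= beta <= 1.0:
        raise ValueError("beta is expected to lie in [0.5, 1.0]")
    miss_rate = 1.0 - beta
    return {
        "ai_missed": n_ai * miss_rate,                # AI items that evade detection
        "human_falsely_flagged": n_human * miss_rate,  # human items flagged as AI
    }


# Hypothetical platform: 1,000 AI items, 9,000 human items, beta = 0.9.
errors = expected_detection_errors(n_ai=1_000, n_human=9_000, beta=0.9)
print(errors)  # roughly 100 missed AI items and 900 falsely flagged human items
```

Under these assumed numbers, false flags on human work outnumber missed AI items simply because human content is more common, which is one reason detection alone is a blunt governance instrument.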

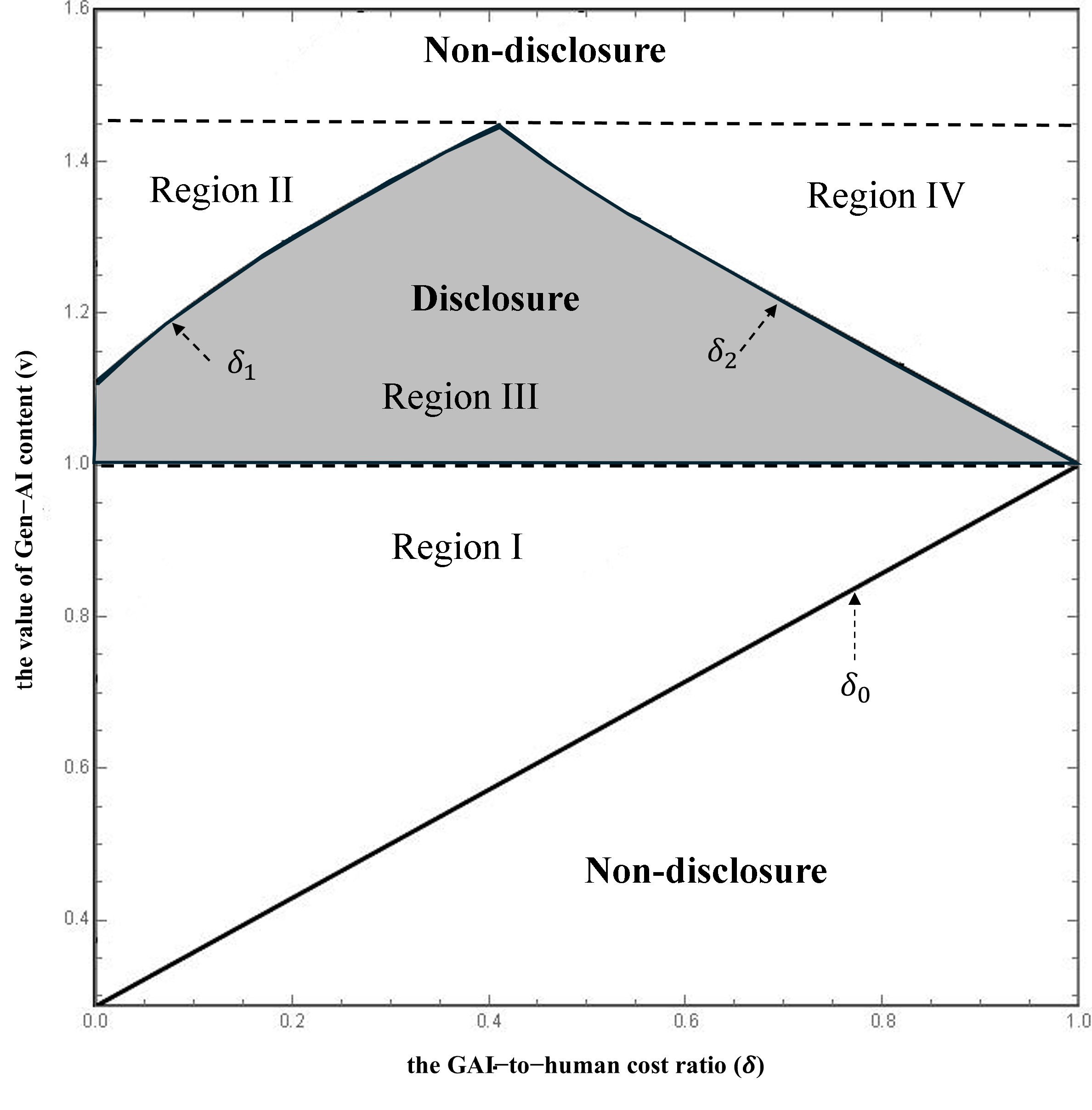

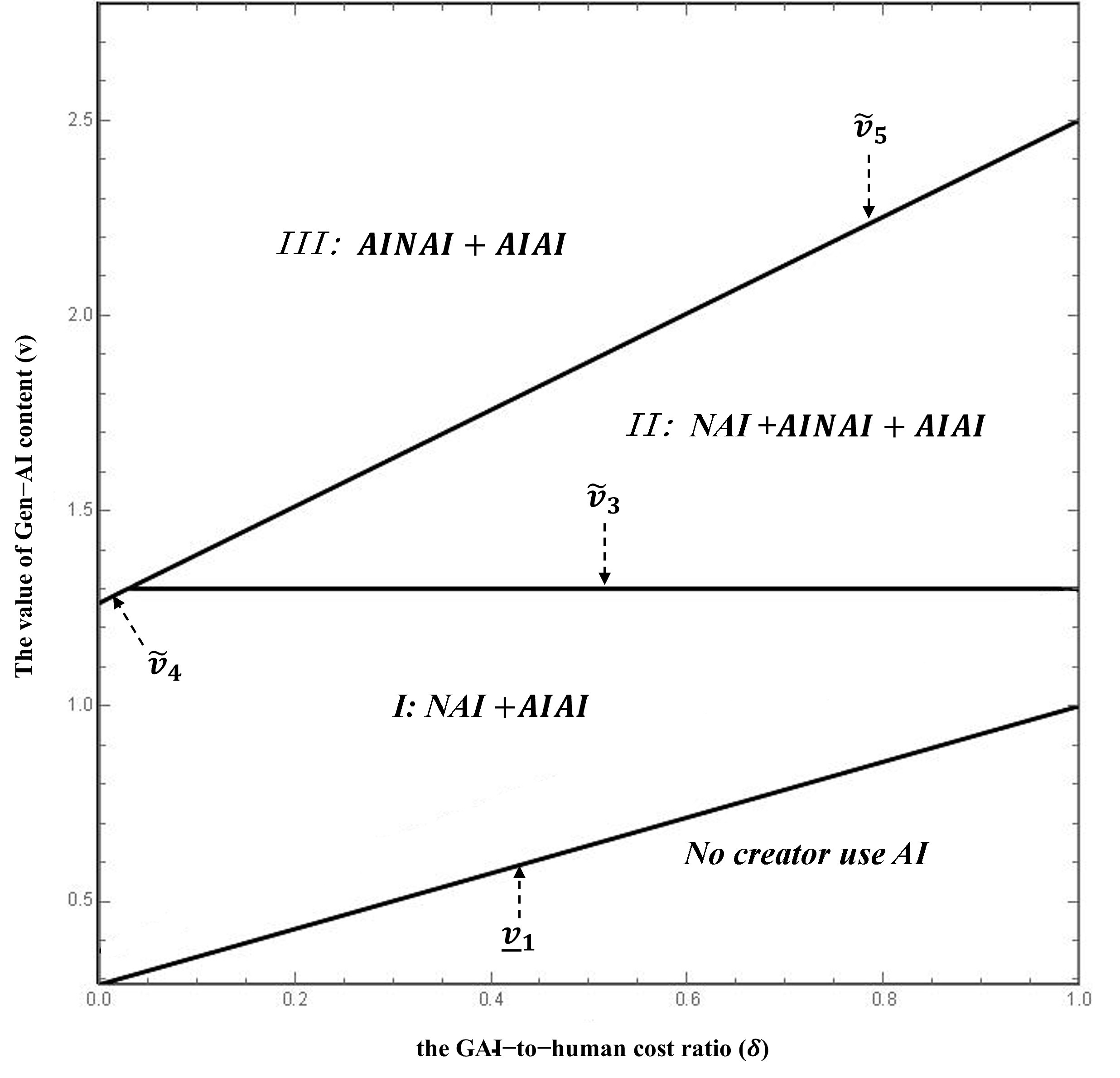

Research utilizes formal modeling to evaluate the efficacy of various platform governance strategies regarding AI-generated content. Simulations compare a “Non-Disclosure Benchmark”, where AI usage remains unlabeled, against a “Self-Disclosure Regime” requiring creator labeling. Results indicate that no single policy maximizes platform benefit under all conditions. Disclosure is most effective in an intermediate region of AI content value and cost efficiency; outside this region, the relative costs and benefits of AI content generation and detection determine whether full disclosure or complete non-disclosure is favored. These models allow for quantitative assessment of governance policies prior to implementation, predicting outcomes based on defined parameters of content value, detection accuracy, and associated costs.

The Economics of Trust: Viewer Perception and Rational Incentives

Viewer Discounting represents a quantifiable reduction in perceived value applied to content identified as, or suspected of being, AI-generated. This phenomenon is modeled by the Credibility Discount Factor, denoted as f, where a value between 0 and 1 indicates the proportional decrease in willingness-to-pay. Empirical data suggests that viewers consistently assign a lower monetary value to AI-generated content compared to human-created equivalents, even when objectively similar in quality. This discounting directly impacts revenue streams for platforms hosting such content, as it lowers the effective price point achievable through advertising, subscriptions, or direct purchases. The magnitude of f is influenced by factors including the sophistication of AI detection methods, the transparency of content labeling, and the viewer’s pre-existing biases towards AI-generated media.
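As a small worked example of the discount described above, the sketch below reads f as the fraction of value lost, so the perceived value is the base value times (1 − f). That reading, the function name, and the numbers are assumptions for illustration rather than the paper's exact specification.

```python
def discounted_value(base_value: float, f: float) -> float:
    """Perceived value after applying the credibility discount f.

    Assumption: f is the fraction of value lost (f = 0 means no discount,
    f = 1 means the content is valued at zero).
    """
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must lie in [0, 1]")
    return base_value * (1.0 - f)


# Hypothetical numbers: content worth 10.0 if human-made, with f = 0.25.
print(discounted_value(10.0, 0.25))  # 7.5
```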

Attempts to monetize AI-generated content through strategic concealment (deliberately obscuring its artificial origin from viewers) introduce a quantifiable negative impact on perceived value, termed the ‘Trust Discount Factor’ (k). This factor represents the reduction in willingness to pay resulting from a perceived lack of authenticity or transparency. While concealment is intended to bypass the Credibility Discount Factor (f) associated with openly acknowledging AI involvement, it erodes viewer trust in the platform and content creators. The magnitude of k is directly proportional to the perceived deception and the potential for misrepresentation, creating a financial penalty that offsets any initial gains from concealing the AI-generated nature of the content. This discount is applied to the base value of the content, reducing potential revenue streams and ultimately impacting platform profitability.
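The trade-off between disclosing and concealing can be illustrated with a toy expected-value comparison. This is not the paper's model: it assumes that disclosed AI content loses a fraction f of its value, that concealed content keeps its full value unless detection (with probability β) exposes it, and that exposed content then loses a fraction k. All function names and numbers are hypothetical.

```python
def disclose_payoff(v: float, f: float) -> float:
    """Value of openly labeled AI content under the credibility discount f."""
    return v * (1.0 - f)


def conceal_payoff(v: float, k: float, beta: float) -> float:
    """Expected value of concealed AI content.

    Toy assumption: with probability beta the concealment is detected and the
    trust discount k applies; otherwise the content keeps its full value v.
    Algebraically this equals (1 - beta) * v + beta * v * (1 - k).
    """
    return v * (1.0 - beta * k)


def prefers_disclosure(v: float, f: float, k: float, beta: float) -> bool:
    """True when disclosing is at least as valuable as concealing."""
    return disclose_payoff(v, f) >= conceal_payoff(v, k, beta)


# Strong detection and a heavy trust penalty make disclosure attractive.
print(prefers_disclosure(v=10.0, f=0.2, k=0.5, beta=0.8))  # True
# Weak detection and a mild penalty make concealment attractive.
print(prefers_disclosure(v=10.0, f=0.2, k=0.3, beta=0.5))  # False
```

In this simplified setting, disclosure wins exactly when f is no larger than β·k, which shows why detection accuracy and the trust penalty jointly shape creator behavior.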

Platform profitability is directly correlated with both viewer engagement and the perceived authenticity of content, with revenue sharing models functioning as a key component. Platforms typically retain a commission, designated as ‘r’, on content revenue, with standard rates falling between 30% and 45%. Higher engagement, driven by authentic or demonstrably valuable content, increases overall revenue volume, and with it the platform’s share. Conversely, declining viewer trust or perceived inauthenticity reduces engagement and therefore the total revenue subject to the commission rate. Effective revenue sharing, therefore, requires a balance between incentivizing content creators and maintaining viewer confidence to sustain consistent revenue streams for both parties involved.
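The revenue split can be sketched in a few lines, assuming r is the share of content revenue that stays with the platform and the remainder goes to the creator. The function name and the revenue figure are hypothetical.

```python
def split_revenue(total_revenue: float, r: float) -> tuple[float, float]:
    """Return (platform_share, creator_share) for commission rate r.

    Assumption: r is the fraction kept by the platform; the creator
    receives whatever is left.
    """
    if not 0.0 <= r <= 1.0:
        raise ValueError("r must lie in [0, 1]")
    platform_share = total_revenue * r
    creator_share = total_revenue - platform_share
    return platform_share, creator_share


# Hypothetical revenue of 1,000 at both ends of the 30% to 45% range.
for rate in (0.30, 0.45):
    platform, creator = split_revenue(1_000.0, rate)
    print(f"r={rate:.2f}: platform={platform:.2f}, creator={creator:.2f}")
```

Because both shares scale with total revenue, any discount that shrinks what viewers are willing to pay lowers the platform's and the creator's income together.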

Prioritizing content quality and transparency is crucial for establishing viewer trustworthiness and ensuring sustained economic performance. Platforms can mitigate the negative impacts of viewer discounting by focusing on demonstrable content value, thereby increasing viewer engagement and retention. The economic benefit of this approach is further amplified by reductions in AI content creation costs, quantified as δ. This cost reduction, when combined with increased viewer loyalty stemming from authentic content, contributes to a higher overall return on investment and offsets potential losses from the Credibility Discount Factor. Long-term economic viability, therefore, is directly correlated with a commitment to quality and openness regarding content origins, fostering a positive feedback loop between viewer trust and platform revenue.

Toward a Transparent Future: Authenticity as the Cornerstone of Creation

The landscape of content creation is rapidly evolving, increasingly characterized by a collaborative interplay between human ingenuity and artificial intelligence. Future content will seldom be purely the product of a single source; instead, AI tools are poised to become integral to nearly every stage of the creative process, from initial concept generation and scriptwriting to visual effects and even performance enhancement. This isn’t necessarily about replacing human creators, but rather augmenting their abilities, enabling them to produce more complex and personalized content at an unprecedented scale. Consequently, discerning the precise contribution of each – human or machine – will become increasingly difficult, necessitating new frameworks for acknowledging authorship and ensuring accountability within the creative sphere. The coming era promises a seamless fusion of human artistry and algorithmic precision, redefining what it means to create and consume content.

The evolving digital landscape demands a fundamental shift towards content transparency, necessitating systems capable of verifying origin and assessing quality. Increasingly sophisticated technologies, such as cryptographic hashing and blockchain-based provenance tracking, are being explored to establish immutable records of content creation and modification. These systems aim to move beyond simple labeling, offering viewers verifiable data about the tools and processes involved in a piece’s production – whether it was generated by a human, an algorithm, or a collaborative effort. Establishing such robust verification protocols isn’t merely about combating misinformation; it’s about empowering audiences to critically evaluate information and fostering a more informed and trustworthy digital environment, ultimately rewarding creators who prioritize genuine and accountable content creation.
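One way to picture hash-based provenance tracking is a small hash chain, in which each record stores a SHA-256 digest of the content plus the hash of the previous record, so later tampering becomes detectable. This is an illustrative sketch only: the record fields, tool labels, and function names are hypothetical, and a production system would add signatures and distributed storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(body: dict) -> str:
    """Deterministic SHA-256 over a record's fields (keys sorted)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(chain: list, content: bytes, tool: str) -> None:
    """Append a provenance record linking to the previous record's hash."""
    body = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "tool": tool,
        "prev_hash": chain[-1]["hash"] if chain else GENESIS,
    }
    chain.append({**body, "hash": record_hash(body)})


def verify_chain(chain: list) -> bool:
    """Check every record's own hash and its link to the previous record."""
    prev = GENESIS
    for entry in chain:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev_hash"] != prev or record_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


chain: list = []
append_record(chain, b"first draft", "human")
append_record(chain, b"edited draft", "ai-assisted")
intact = verify_chain(chain)

chain[0]["tool"] = "ai-generated"  # simulate tampering with an old record
still_valid = verify_chain(chain)
print(intact, still_valid)  # True False
```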

A renewed emphasis on authenticity in content creation isn’t simply about ethical considerations; it represents a significant economic shift. As audiences become increasingly discerning and sophisticated, they demonstrably favor genuine contributions over manufactured or misleading content. This preference is fostering a willingness to directly support creators who demonstrably prioritize originality and transparency, through mechanisms like subscriptions, direct patronage, and micro-payments. Consequently, a marketplace is emerging where authenticity itself becomes a valuable commodity, incentivizing creators to invest in building genuine connections with their audiences and fostering trust. This move away from reliance on algorithmic amplification and towards direct creator-audience relationships promises a more sustainable and equitable economic model for the digital content landscape, rewarding quality and originality while simultaneously safeguarding viewers from manipulation and misinformation.

The longevity of digital content relies heavily on cultivating a trustworthy environment, demanding that platforms move beyond reactive measures and embrace proactive investment. This necessitates developing and implementing technologies – such as advanced watermarking, cryptographic signatures, and AI-powered provenance tracking – that can reliably verify content authenticity and trace its origins. Simultaneously, robust policies are crucial, establishing clear guidelines regarding AI-generated content, deepfakes, and synthetic media, alongside effective mechanisms for reporting and addressing misinformation. A sustainable ecosystem isn’t simply about detecting falsehoods; it requires fostering a culture of transparency where creators are incentivized to disclose AI assistance and viewers are empowered with the tools to critically evaluate the information they consume, ultimately safeguarding both trust and the value of genuine creative work.

The study rigorously assesses the conditions under which mandatory disclosure of AI-generated content becomes a viable governance strategy. It acknowledges the inherent tension between fostering transparency and preserving creator motivation, a duality demanding precise calibration. This pursuit of optimal conditions echoes G.H. Hardy’s sentiment: “Mathematics may be considered with precision, but this is no guarantee of usefulness.” The paper, much like a mathematical proof, seeks a formally defined range (a threshold of AI quality and cost) where disclosure isn’t merely a symbolic gesture but a functional component of a healthy content ecosystem. Without such precise definition, any governance approach risks becoming an unproductive and ultimately useless imposition.

What’s Next?

The presented analysis, while establishing bounded conditions under which mandatory disclosure of AI-generated content is rational, ultimately highlights the persistent difficulty of aligning incentives with verifiable truth. The model’s sensitivity to parameters representing AI quality and cost suggests that any governance strategy is, at best, a temporary accommodation: a static solution to a dynamically evolving problem. A truly robust system necessitates a shift from detection-based approaches to content verification grounded in mathematical certainty, meaning a provable origin rather than a probabilistic assessment.

Further research should therefore prioritize the development of cryptographic methods capable of embedding demonstrably unique signatures within AI-generated content at the point of creation. Such an approach would sidestep the inherent limitations of post-hoc detection, which remains an arms race predicated on increasingly sophisticated obfuscation. The current focus on ‘trustworthiness’ as a subjective metric is, frankly, a category error. Trust is a human construct; algorithms require proof.
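The idea of attaching a verifiable tag at the point of creation can be sketched with Python's standard library. Note the substitution: this example uses an HMAC, which is a symmetric message authentication code and not a public-key signature, so anyone who can verify a tag could also forge one. A real deployment would more likely use asymmetric signatures. The key and content below are hypothetical.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the content at creation time."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, key: bytes, tag: str) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign_content(content, key), tag)


key = b"hypothetical-generator-secret"  # assumed secret held by the AI tool
content = b"AI-generated caption"
tag = sign_content(content, key)

print(verify_content(content, key, tag))         # True
print(verify_content(content + b"!", key, tag))  # False: content was altered
```

Unlike post-hoc detection, verification here either succeeds or fails outright, but it only proves origin for content whose generator cooperated by tagging it in the first place.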

The ultimate question isn’t if we can detect AI-generated content, but whether we can establish a system where its provenance is inherently, mathematically undeniable. Until that is achieved, discussions of optimal disclosure policies remain exercises in applied pragmatism – a necessary compromise, perhaps, but one lacking the elegance of a truly solved problem.

Original article: https://arxiv.org/pdf/2601.18654.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-27 22:12