Author: Denis Avetisyan

A new study examines the Civitai platform and its bounty system, revealing a thriving market for AI-generated adult content and the challenges of moderating this rapidly expanding landscape.

Research into the Civitai platform demonstrates a concentration of activity around NSFW requests and deepfakes, with inconsistent enforcement of content policies.

While generative AI promises creative innovation, its incentive structures often remain opaque. This research, ‘A Marketplace for AI-Generated Adult Content and Deepfakes’, analyzes the Civitai platform’s “Bounty” system, revealing a substantial and growing demand for “Not Safe For Work” content and deepfakes, particularly those targeting female celebrities. The authors’ longitudinal analysis demonstrates that this monetized, community-driven system concentrates activity among a small group of users and exhibits uneven enforcement of content moderation policies. How can platforms balance open creation with the ethical and legal challenges posed by AI-generated synthetic media and its potential for harm?

The Inevitable Bloom: Diffusion and the Erosion of Reality

Diffusion models represent a significant leap forward in image generation, eclipsing earlier generative adversarial networks (GANs) and variational autoencoders in both fidelity and nuanced detail. These models operate by progressively adding noise to an image until it becomes pure static, then learning to reverse this process – effectively ‘denoising’ from randomness to create a coherent visual. This iterative refinement allows diffusion models to capture intricate textures and complex structures with unprecedented realism. Unlike GANs, which can suffer from instability during training and mode collapse, diffusion models exhibit more stable learning dynamics, leading to higher-quality and more diverse outputs. The result is synthetic imagery that increasingly blurs the line between digital creation and photographic reality, opening doors for innovation in fields ranging from art and design to scientific visualization and data augmentation.
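The noising half of that process can be sketched in a few lines. The article does not name a specific formulation, so this example assumes the common DDPM-style setup: a linear noise schedule (the values `1e-4` and `0.02` and the 1000 steps are illustrative assumptions), a four-value list standing in for an image, and the true noise standing in for what a trained denoiser would have to predict.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (illustrative values, not from the study)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the share of signal variance left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_image(pixels, alpha_bar, rng):
    """Forward process: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(alpha_bar) * p + math.sqrt(1.0 - alpha_bar) * e
             for p, e in zip(pixels, noise)]
    return noisy, noise

def recover_image(noisy, noise_estimate, alpha_bar):
    """Invert the forward step given a noise estimate (a trained model would supply it)."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(noisy, noise_estimate)]

rng = random.Random(0)
image = [0.8, -0.3, 0.5, -0.9]          # toy stand-in for pixel values
alpha_bars = cumulative_signal(linear_beta_schedule(1000))

early, _ = noise_image(image, alpha_bars[0], rng)    # almost the original image
late, _ = noise_image(image, alpha_bars[-1], rng)    # almost pure static
print("signal left after first step:", alpha_bars[0])
print("signal left after last step: ", alpha_bars[-1])
```

Training amounts to teaching a network to produce the `noise_estimate` from the noisy input alone; generation then starts from static and applies that estimate step by step.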

The recent proliferation of platforms such as DALL-E, Midjourney, and Stable Diffusion signifies a substantial shift in content creation, moving generative artificial intelligence beyond research labs and into the hands of a broad audience. This democratization, however, is not without its complexities. While these tools empower individuals to realize visual concepts with unprecedented ease, they simultaneously present challenges regarding intellectual property, artistic authorship, and the potential for misuse – including the creation of deepfakes or the spread of misinformation. The ability to generate highly realistic images from text prompts raises questions about the value of original art and the need for new frameworks to address copyright and attribution in a landscape where content is increasingly synthesized rather than solely created by human hands. Ultimately, these platforms represent both a powerful creative opportunity and a call for thoughtful consideration of the ethical and societal implications of readily available AI-driven content.

As generative AI tools become increasingly user-friendly and widely available, a natural consequence is the emergence of dedicated platforms for sharing and creatively adapting the resulting outputs. These digital spaces aren’t simply repositories for finished images or text; they function as vibrant communities where users showcase their creations, offer constructive criticism, and collaboratively remix and build upon each other’s work. This fosters a unique cycle of innovation, where initial AI outputs serve as springboards for further artistic exploration and refinement. The growth of these communities highlights a shift from AI as a solitary tool to a collaborative medium, demonstrating a burgeoning culture around AI-assisted creativity and the collective exploration of its potential. This dynamic environment is rapidly shaping not only the aesthetic landscape of AI-generated media but also the very definition of authorship and artistic practice in the digital age.

Civitai: The Garden Takes Root

Civitai operates as a centralized online platform facilitating the sharing, adaptation, and generation of AI-created content, primarily focused on images generated by diffusion models. Users can upload and download models, LoRAs, prompts, and generated images, fostering a collaborative environment for experimentation and refinement. The platform supports a robust community through features like commenting, liking, and user profiles, enabling interaction and the dissemination of techniques. This structure allows for a rapid cycle of content creation, remixing, and improvement, driven by user contributions and feedback. The platform’s architecture is designed to lower the barrier to entry for both creating and accessing AI-generated visual content.

Civitai’s Bounty System operates as a demand-driven content creation mechanism, allowing users to post requests with associated rewards, typically in the form of Civitai points. These points can then be used to commission content from other users, effectively creating a micro-economy within the platform. This incentivization model fosters a responsive ecosystem by directing creative effort towards specific, requested content, and ensures a continuous flow of new material driven by user demand rather than solely by individual initiative. The system includes features for bounty posting, claim management, and completion verification, facilitating a structured exchange between requesters and content creators.

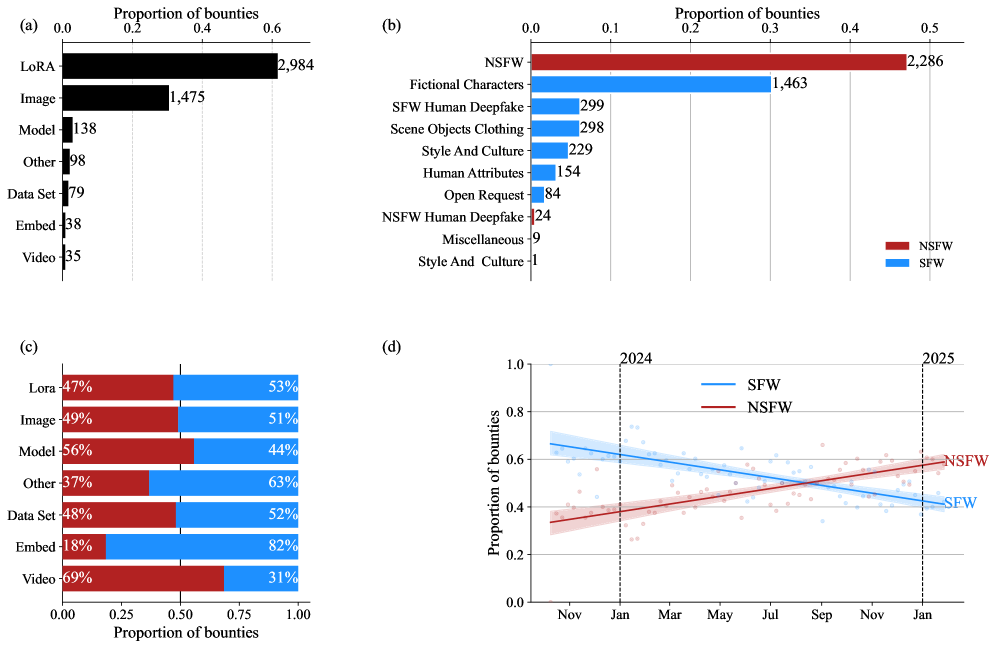

Data analysis of the Civitai bounty system indicates that approximately 48% of all posted bounties request Not Safe For Work (NSFW) content. At nearly half of all requests, NSFW content accounts for a consistently large share of weekly bounty activity, demonstrating a pronounced demand for this content type within the Civitai user base. The prevalence of NSFW bounties suggests that a significant portion of content creation on the platform is directed towards adult-oriented material, influencing the types of models and LoRAs generated and shared.

LoRA, or Low-Rank Adaptation, is a technique used to fine-tune pre-trained diffusion models with a significantly reduced number of trainable parameters. This is achieved by introducing low-rank matrices into the layers of the original model, allowing for adaptation to new datasets or styles without modifying the entire model’s weight set. Consequently, LoRA models require substantially less computational resources and storage space compared to full fine-tuning, making personalized content creation more accessible. Users can then share these LoRA adaptations, enabling others to reproduce specific aesthetic styles or incorporate novel concepts into their generated images, effectively customizing the output of diffusion models.
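A minimal sketch of the idea, assuming the standard LoRA formulation in which a frozen weight matrix W is adjusted by the product of two small matrices, W' = W + scale · (B · A). The matrices, the `scale` parameter, and the 768-wide layer used for the parameter count are illustrative assumptions, not figures from the study.

```python
def matmul(a, b):
    """Plain-Python matrix product, adequate for a toy example."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(w, b, a, scale=1.0):
    """Return W + scale * (B @ A); W itself is never modified."""
    delta = matmul(b, a)
    return [[wv + scale * dv for wv, dv in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def param_counts(d_out, d_in, rank):
    """Trainable parameters: full fine-tuning versus a rank-`rank` LoRA update."""
    return d_out * d_in, rank * (d_out + d_in)

# Frozen 2x2 base weight and a rank-1 update (B is 2x1, A is 1x2).
w = [[1, 0], [0, 1]]
b = [[1], [2]]
a = [[3, 4]]
print(lora_effective_weight(w, b, a))

# Hypothetical 768x768 layer with a rank-8 adapter.
full, lora = param_counts(768, 768, 8)
print(f"full: {full} parameters, LoRA: {lora} parameters")
```

Because only B and A need to be stored and shared, an adapter file is a small fraction of the size of the base model, which is what makes trading LoRAs on a platform practical.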

Shadows in the Machine: Moderation and the Deepfake Threat

Civitai utilizes OpenAI’s Content Moderation APIs as a first line of defense against the proliferation of problematic user-generated content. These APIs automatically assess submitted images and associated metadata, flagging material that violates pre-defined safety guidelines relating to nudity, violence, hate speech, and other harmful categories. This automated process supplements, but does not replace, manual review conducted by Civitai’s moderation team. The API integration allows for scalability in content assessment, enabling the platform to handle a high volume of submissions, while human moderators focus on nuanced cases and appeals, improving overall moderation efficiency and response time.

Despite the implementation of automated content moderation via OpenAI APIs, the Civitai platform remains susceptible to the creation and distribution of Deepfake content. This vulnerability stems from the increasing sophistication of Deepfake generation techniques and the limitations of current automated detection methods. The proliferation of Deepfakes raises substantial ethical concerns, particularly regarding non-consensual pornography, defamation, and the potential for malicious misrepresentation. The platform’s open nature, while fostering creativity, inadvertently provides a conduit for the creation and sharing of potentially harmful synthetic media, necessitating ongoing refinement of moderation strategies and a heightened awareness of the associated risks.

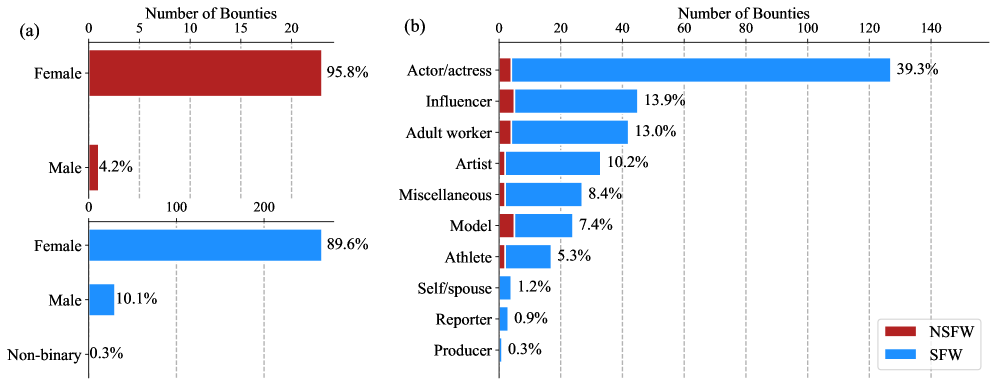

Analysis of deepfake request data reveals a significant gender disparity, with women being the subjects of approximately nine requests for every one request featuring a man. This 9:1 ratio indicates a disproportionate targeting of women within deepfake creation, raising substantial concerns regarding potential exploitation and the weaponization of synthetic media for harassment or non-consensual pornography. The observed imbalance suggests that deepfake technology is not being utilized neutrally, and that specific demographic groups are at a demonstrably higher risk of harm through the creation and dissemination of manipulated imagery.

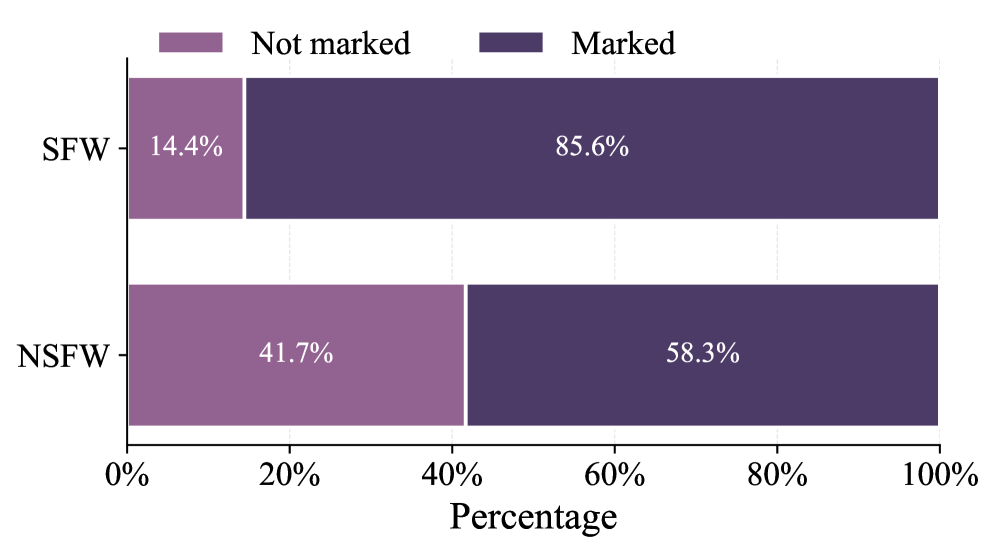

Analysis of platform data indicates that intervention (flagging, removal, or another moderation action) occurred on only 58.3% of identified NSFW deepfake bounty requests. The remaining 41.7% showed no recorded intervention, a substantial gap in the enforcement of policies on explicitly sexualized deepfakes. Requests that pass through automated and manual review unaddressed may contribute to the proliferation of non-consensual imagery and exploitative content on the platform. This finding underscores the need for improved detection mechanisms, more robust review procedures, and potentially more resources dedicated to NSFW deepfake content.

The Gini coefficient, a measure of statistical dispersion, was calculated for deepfake bounty creation and compared to Safe For Work (SFW) and Not Safe For Work (NSFW) content. A coefficient of 0.45 for deepfake bounties indicates a higher degree of concentration in participation; this means a smaller number of users are responsible for creating a disproportionately large number of these bounties. In comparison, SFW content exhibited a Gini coefficient of 0.41, and NSFW content a coefficient of 0.37, suggesting more evenly distributed participation within those categories. A higher Gini coefficient, therefore, implies that deepfake bounty creation is less democratized and more heavily influenced by a select group of users.
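For readers unfamiliar with the measure, here is a small sketch of how a Gini coefficient can be computed from per-user bounty counts. It uses the standard rank-based formula, under which 0 means every user posted the same number of bounties and values approaching 1 mean a single user posted nearly all of them. The counts are toy data, not figures from the study, and the paper's exact computation may differ.

```python
def gini(counts):
    """Gini coefficient of non-negative counts via the sorted-rank formula."""
    values = sorted(counts)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0  # no users or no activity: treat as perfectly equal
    # G = 2 * sum(rank_i * x_i) / (n * sum(x)) - (n + 1) / n, ranks starting at 1
    weighted = sum(rank * v for rank, v in enumerate(values, start=1))
    return 2.0 * weighted / (n * total) - (n + 1.0) / n

# Hypothetical bounties-per-user lists (toy data for illustration only).
evenly_spread = [5, 5, 5, 5]
mildly_skewed = [1, 2, 3, 4]
one_heavy_user = [0, 0, 0, 10]

for label, data in [("even", evenly_spread),
                    ("skewed", mildly_skewed),
                    ("concentrated", one_heavy_user)]:
    print(label, round(gini(data), 3))
```

On this scale, the reported gap between 0.45 for deepfake bounties and 0.37 for NSFW bounties reflects a moderate shift towards concentration, not a takeover by a handful of accounts.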

The Expanding Garden: Ecosystems Beyond Civitai

The proliferation of platforms dedicated to AI-generated content extends far beyond the well-known Civitai, with sites like TensorArt and PixAI actively fostering a rapidly expanding creative ecosystem. These platforms serve as vital hubs where users can share, discover, and refine diffusion model outputs – ranging from stunning visuals to innovative prompts and model checkpoints. TensorArt, for instance, emphasizes high-resolution image sharing and artistic curation, while PixAI focuses on accessibility and collaborative creation. This diversification isn’t merely about offering choice; it signifies increasing user demand for AI-driven artistry and a growing need for interoperability between these tools, ultimately empowering a broader range of creators to participate in and contribute to the evolving landscape of generative AI.

This fragmented landscape also highlights the critical need for interoperability: the ability of different platforms to exchange data and models seamlessly. Without standardized formats and communication protocols, creators face limits on portability and collaboration, which hinders the full potential of this emerging ecosystem. Meeting this challenge will be essential to fostering a truly open and collaborative future for AI-generated art, one in which creators can move freely and build upon innovations regardless of the platform they choose.

The proliferation of AI-generated content platforms presents a significant balancing act between fostering open access and proactively addressing potential harms. While unrestricted access fuels innovation and democratizes creative tools, it simultaneously creates pathways for the dissemination of malicious or inappropriate material. Current efforts focus on developing robust content moderation systems, but these face ongoing challenges in accurately identifying and removing harmful outputs without stifling legitimate expression. The core difficulty lies in defining “harmful” – a concept subject to cultural nuance and ethical debate – and implementing scalable solutions that can keep pace with the rapidly evolving capabilities of diffusion models. Successfully navigating this tension is crucial for ensuring the long-term sustainability and responsible development of the AI creative ecosystem, demanding ongoing collaboration between platform developers, researchers, and policymakers.

The relentless advancement of Diffusion Models serves as the primary engine driving innovation within the realm of AI-generated content. These models, continually refined through research and development, are not simply improving existing capabilities but are fundamentally expanding the possibilities for creators. Each new iteration introduces novel techniques – from enhanced image resolution and stylistic control to more sophisticated methods for prompting and content manipulation. This constant flow of progress empowers artists, designers, and hobbyists with increasingly powerful tools, allowing them to realize visions previously confined to imagination. The development extends beyond core model architecture, encompassing advancements in areas like efficient training, reduced computational costs, and increased accessibility, effectively democratizing the creation of high-quality, AI-driven art and media.

The Civitai platform, as the research demonstrates, isn’t simply a content repository; it’s a burgeoning ecosystem fueled by user incentives. The bounty system, intended to foster creation, inadvertently cultivates a landscape where demand, particularly for NSFW content and deepfakes, shapes the growth. This mirrors the inherent unpredictability of complex systems. As Tim Berners-Lee once stated, “The Web is more a social creation than a technical one.” The platform’s moderation policies, while present, struggle to keep pace with the evolving demands of this social creation, revealing how even well-intentioned architectural choices can become prophecies of future challenges within a rapidly expanding digital garden.

What’s Next?

The study of Civitai reveals less a marketplace than an accelerating ecosystem. The bounty system, intended as a mechanism for directing creative effort, instead functions as a selective pressure. Requests for increasingly specific, and often problematic, content are not aberrations; they are the predictable outcome of optimizing for engagement within loosely defined boundaries. Architecture is, after all, how one postpones chaos, not how one prevents it.

Future work must move beyond cataloging content trends. The concentration of activity within a small cohort of users suggests the emergence of power dynamics worthy of deeper scrutiny. The platform’s moderation policies, unevenly applied, are not failures of implementation, but symptoms of a fundamental tension: the desire for control versus the inevitability of emergent behavior. There are no best practices – only survivors.

The real challenge lies in understanding the systemic implications. This is not simply about adult content or deepfakes. It is about the broader question of how incentive structures shape the evolution of generative AI, and how quickly order degrades into little more than a cache between two outages. The focus must shift from policing outputs to analyzing the underlying forces that drive them, and accepting that every architectural choice is a prophecy of future failure.

Original article: https://arxiv.org/pdf/2601.09117.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-15 12:16