Author: Denis Avetisyan

New research reveals that simply knowing what an AI can actually do isn’t enough to guarantee its successful adoption, and can even create new challenges for users.

Information asymmetry regarding AI system capabilities hinders optimal reliance, and the results suggest that partial disclosure can be more effective than full transparency in fostering trust and improving market outcomes.

Despite the promise of artificial intelligence, widespread adoption is hindered by a fundamental challenge: buyers often lack the information needed to assess AI system quality. This research, framed by the question ‘When Life Gives You AI, Will You Turn It Into A Market for Lemons? Understanding How Information Asymmetries About AI System Capabilities Affect Market Outcomes and Adoption’, investigates how these information asymmetries impact user choices in simulated AI markets. Our findings demonstrate that even partial disclosure of AI capabilities can improve decision-making, yet full transparency doesn’t guarantee optimal reliance, suggesting that carefully designed transparency mechanisms are crucial. How can we best calibrate trust and foster efficient human-AI collaboration in the face of inherent uncertainty?

Unveiling the Asymmetry: The Hidden Risks of AI Integration

The rapid integration of artificial intelligence into daily life is proceeding with a significant imbalance of knowledge. Developers, possessing deep understanding of AI’s capabilities and limitations, create systems often deployed to users lacking such expertise. This disparity isn’t merely a gap in technical understanding; it extends to the algorithms’ potential biases, data dependencies, and failure modes. Consequently, individuals and organizations are increasingly reliant on technologies whose inner workings remain opaque, creating a situation where the benefits of AI are enjoyed while the associated risks are disproportionately borne by those least equipped to assess them. This fundamental information asymmetry threatens to erode trust and ultimately stifle the widespread, responsible adoption of AI technologies.

The rapid proliferation of artificial intelligence systems is increasingly likened to a ‘market for lemons,’ a concept from economics in which sellers know more about product quality than buyers do. Because buyers cannot tell good products from bad ones, they are only willing to pay for average quality; sellers of high-quality goods then withdraw, and the average quality on offer declines. This analogy highlights a critical challenge: users often lack the expertise to assess the reliability and performance of AI tools before adoption, which lets low-quality or biased systems gain traction easily. Such systems, while potentially offering short-term gains, erode public trust and can stifle the development of genuinely beneficial AI applications. The risk is that a flood of substandard AI offerings will overshadow innovative, well-designed systems, hindering the technology’s ability to deliver on its promises and discouraging investment in robust, trustworthy AI development.
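The unravelling dynamic behind the lemons analogy, which comes from Akerlof's 1970 paper on used-car markets, can be sketched as a toy simulation. This is not the study's model. It assumes qualities spread evenly between 0 and 1. Each seller knows their own quality and sells only when the price covers it. Buyers cannot observe quality and pay a fixed premium on the average quality currently offered; the premium value `buyer_premium=1.2` and the function name are hypothetical.

```python
from statistics import mean


def lemons_unraveling(qualities, buyer_premium=1.2, rounds=10):
    """Toy Akerlof-style market; returns the market price after each round.

    Assumptions (illustrative only): each seller knows the quality of their
    own good, buyers only know the average quality of goods still on offer,
    and buyers value any good at buyer_premium times its quality.
    """
    # Round 0: buyers pay for the average good in the whole population.
    price = buyer_premium * mean(qualities)
    history = [price]
    for _ in range(rounds):
        # Sellers withdraw any good worth more to them than the going price.
        offered = [q for q in qualities if q <= price]
        if not offered:
            # Nothing is worth selling at this price: the market collapses.
            history.append(0.0)
            break
        # Buyers cannot tell goods apart, so they pay for the average offered one.
        price = buyer_premium * mean(offered)
        history.append(price)
    return history


if __name__ == "__main__":
    prices = lemons_unraveling([i / 100 for i in range(1, 101)])
    print([round(p, 3) for p in prices])
```

Run with qualities 0.01 to 1.00, the price falls round after round until only the lowest-quality good remains on offer. The best goods leave first, which is the decline in quality the analogy warns about.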

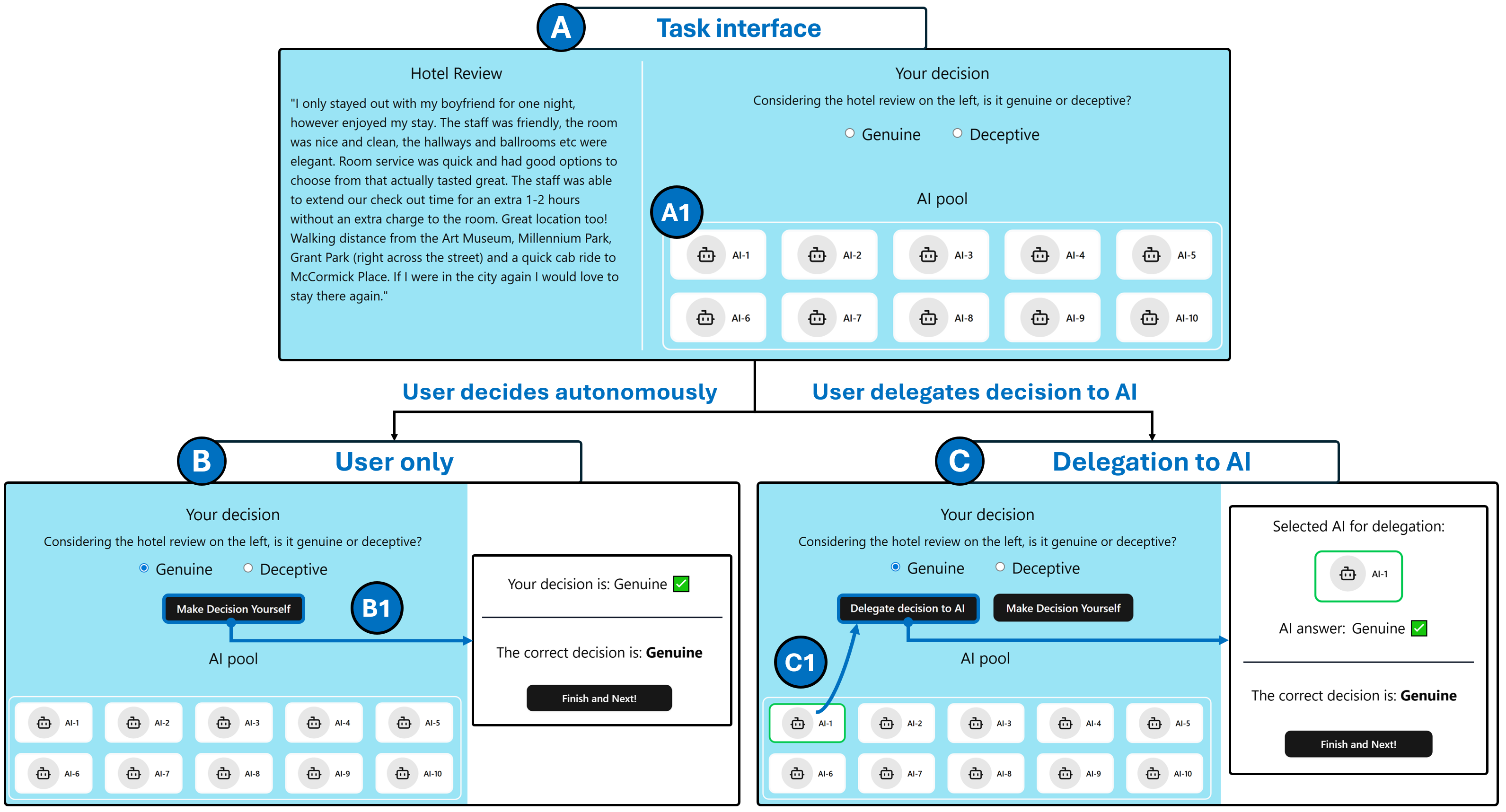

The more control individuals cede to artificial intelligence systems, the more exposed they become to harms stemming from flawed or biased algorithms. As decision-making authority shifts from human judgment to automated processes, users depend increasingly on the accuracy and integrity of these systems, often without fully understanding how they work. This delegation of trust creates a vulnerability, particularly when AI is applied to critical domains like finance, healthcare, or criminal justice. Greater reliance on AI, without corresponding transparency and accountability measures, means that errors or malicious intent within the system can have far-reaching consequences, affecting individuals in ways that are often opaque and difficult to redress. Ultimately, the benefits of AI integration hinge on a careful balance between automation and human oversight, ensuring that delegating decisions does not mean surrendering control or accountability.

The promise of artificial intelligence, from increased efficiency to novel discoveries and solutions to complex problems, hinges on widespread adoption and genuine user trust. However, much of this potential remains untapped because of a critical imbalance in the current landscape. Unless the disparity between developers’ understanding of AI systems and users’ ability to assess their reliability is actively addressed, the benefits will be limited and unevenly distributed. This isn’t simply a matter of technical refinement; it’s a systemic issue. When users cannot distinguish reliable systems from flawed or biased ones, the risks of adoption can appear to outweigh the rewards, fostering skepticism and hindering innovation. Realizing the full scope of AI’s capabilities requires a concerted effort to give users the knowledge and tools needed to navigate this evolving technology, ensuring that trust is earned, not simply assumed.

Illuminating the Black Box: Transparency as a Remedy

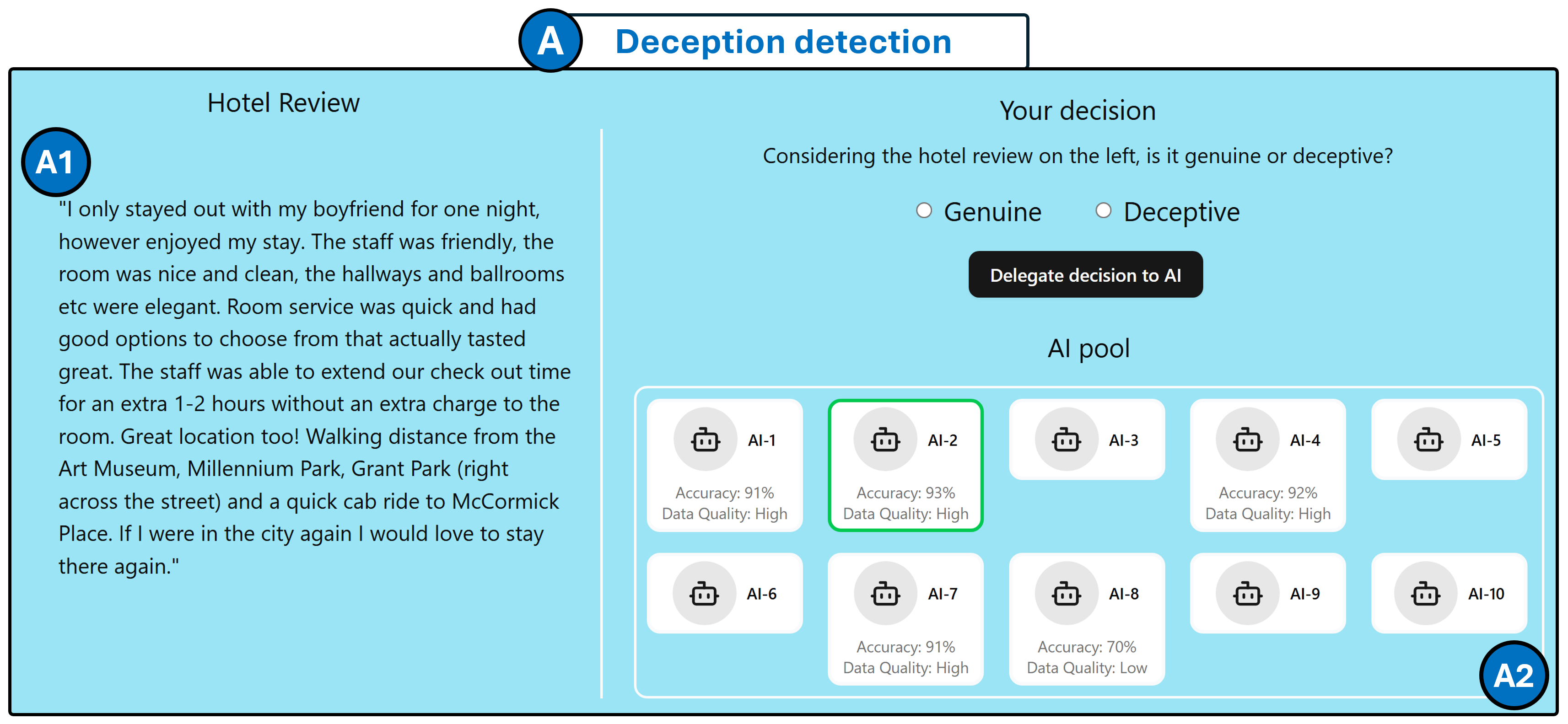

Information asymmetry, where one party in a transaction possesses more knowledge than another, is a significant challenge in the deployment of artificial intelligence systems. Users often lack insight into an AI’s performance characteristics, including its accuracy rates and the quality of the data used for training. Providing users with clear and accessible information regarding these capabilities is therefore crucial for reducing this imbalance. Disclosure of accuracy metrics, data provenance, and potential biases allows users to better assess the reliability of AI-generated outputs and make informed decisions about whether and how to utilize these systems. This transparency is not merely about building trust, but about enabling rational evaluation and mitigating the risks associated with relying on opaque AI technologies.

Disclosure functions as a crucial mechanism for fostering appropriate user reliance on artificial intelligence systems. By providing information regarding an AI’s functionality, limitations, and the data used in its training, users are equipped to assess the system’s suitability for a given task. This informed assessment allows individuals to calibrate their level of trust – increasing it for reliable systems and decreasing it, or opting out entirely, when faced with systems exhibiting questionable characteristics or known deficiencies. Consequently, disclosure directly supports more rational decision-making processes and mitigates the risks associated with blind acceptance of AI-generated outputs.

Disclosure strategies for AI system information vary in scope, primarily falling into two categories: FullDisclosure and PartialDisclosure. FullDisclosure involves revealing comprehensive details regarding the AI’s training data, model architecture, limitations, and performance metrics. Conversely, PartialDisclosure presents a curated subset of this information, typically focusing on key performance indicators and known limitations without detailing the underlying technical specifics. The implications of each strategy relate to user comprehension, decision-making load, and potential for misinterpretation; while FullDisclosure theoretically offers maximum information, it can overwhelm users, whereas PartialDisclosure aims for efficient communication of critical data, albeit with reduced detail.
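One way to make the distinction concrete is to treat the disclosed information as a record and each strategy as a view over it. The sketch below is hypothetical. The `SystemCard` fields, the choice of which fields count as "key" for partial disclosure, and the example values are all illustrative assumptions, not the materials used in the study.

```python
from dataclasses import dataclass, asdict


@dataclass
class SystemCard:
    """Hypothetical description of one AI system (fields are illustrative)."""
    name: str
    accuracy: float              # headline performance metric
    known_limitations: list      # short, user-facing caveats
    training_data: str           # provenance details
    architecture: str            # technical specifics


# Hypothetical curated subset shown under partial disclosure.
PARTIAL_FIELDS = ("name", "accuracy", "known_limitations")


def full_disclosure(card: SystemCard) -> dict:
    """Reveal every field on the card."""
    return asdict(card)


def partial_disclosure(card: SystemCard, fields=PARTIAL_FIELDS) -> dict:
    """Reveal only key performance indicators and known limitations."""
    everything = asdict(card)
    return {key: everything[key] for key in fields}


if __name__ == "__main__":
    card = SystemCard(
        name="assistant-a",
        accuracy=0.82,
        known_limitations=["weaker on rare categories"],
        training_data="public web images, 2019-2023",
        architecture="convolutional classifier",
    )
    print(partial_disclosure(card))
```

Here partial disclosure is a projection of the full record rather than a separate summary. As a result, the two strategies can never disagree about the facts they share. They differ only in how much the user has to read.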

Research indicates that providing users with partial disclosure regarding AI system quality demonstrably improves decision-making efficiency. Specifically, users presented with data indicating the accuracy or data provenance of an AI system were significantly more likely to bypass systems identified as low-quality, leading to a reduction in time spent evaluating ineffective tools. This avoidance behavior, facilitated by partial disclosure, streamlines the decision process and allows users to focus resources on higher-performing AI systems. The observed effect is not necessarily predicated on full transparency; limited, relevant data regarding system capabilities is sufficient to impact user choices and improve overall efficiency.
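A simple expected-value argument shows why even limited quality information helps. The sketch makes three assumptions. A user is matched to one system drawn at random from the market. The user knows their own accuracy on the task. The user delegates only when they expect the AI to do better. With a disclosed accuracy score (a form of partial disclosure), the user decides per system; without it, they can only compare themselves against the market average. The function name and the accuracy figures are hypothetical.

```python
from statistics import mean


def expected_accuracy(system_accuracies, own_accuracy, disclosed):
    """Expected task accuracy for a user matched to a random system.

    Hypothetical decision rule: delegate whenever the AI is expected to beat
    the user's own accuracy, otherwise do the task yourself.
    """
    if disclosed:
        # Each system's accuracy is visible, so low-quality systems are bypassed
        # individually and the user keeps the better of the two options.
        return mean(max(acc, own_accuracy) for acc in system_accuracies)
    # Only the market average is known, so one decision covers every system.
    return max(mean(system_accuracies), own_accuracy)


if __name__ == "__main__":
    market = [0.9, 0.9, 0.4]  # two strong systems and one lemon (made-up numbers)
    print(expected_accuracy(market, 0.7, disclosed=True))   # about 0.833
    print(expected_accuracy(market, 0.7, disclosed=False))  # about 0.733
```

The average of the per-system maxima can never be smaller than the maximum of the averages. Under these assumptions, the disclosed case is therefore never worse. The gain comes entirely from skipping the lemon, which matches the avoidance behavior described above.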

The Calibration of Trust: Navigating Human Fallibility

User assessment of AI systems is consistently subject to cognitive biases, impacting both perception and reliance. These biases represent systematic deviations from rational judgment, meaning individuals do not evaluate AI performance based solely on objective metrics. Factors such as confirmation bias, where users favor information confirming pre-existing beliefs about the AI, and automation bias, leading to an over-reliance on AI suggestions even when demonstrably incorrect, significantly influence user behavior. Consequently, even with access to performance data, users may overestimate the reliability of AI in some scenarios and underestimate it in others, hindering optimal human-AI collaboration and potentially leading to inappropriate levels of trust or distrust.

TrustCalibration, crucial for effective AI system integration, refers to the user’s ability to modify their level of dependence on an AI based on its demonstrated performance. This is not a static assessment of trustworthiness, but rather a dynamic process where users increase reliance with consistently accurate outputs and decrease reliance following errors or inconsistencies. Successful TrustCalibration involves ongoing observation of AI behavior, evaluation of its outputs against expected results, and subsequent adjustment of delegation rates – the extent to which a user accepts the AI’s recommendations or allows it to operate autonomously. A failure to calibrate trust appropriately can lead to both over-reliance, potentially resulting in acceptance of flawed outputs, and under-reliance, hindering the benefits of AI assistance.
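The dynamic process described above can be modeled in many ways. One common choice is a Beta-Bernoulli estimate of the AI's accuracy that is updated after every observed output; it is used here purely as an illustration, not as the study's method. The class name, the uniform prior, and the rule "delegate when estimated AI accuracy exceeds your own" are all assumptions.

```python
class TrustCalibrator:
    """Hypothetical Beta-Bernoulli trust model that tracks AI correctness."""

    def __init__(self, prior_correct: float = 1.0, prior_wrong: float = 1.0):
        # A Beta(1, 1) prior means "no opinion yet": estimated accuracy 0.5.
        self.correct = prior_correct
        self.wrong = prior_wrong

    def observe(self, ai_was_correct: bool) -> None:
        """Update the counts after checking one AI output against the truth."""
        if ai_was_correct:
            self.correct += 1
        else:
            self.wrong += 1

    def estimated_accuracy(self) -> float:
        """Posterior mean of the AI's accuracy."""
        return self.correct / (self.correct + self.wrong)

    def should_delegate(self, own_accuracy: float) -> bool:
        """Rely on the AI only while it is expected to outperform the user."""
        return self.estimated_accuracy() > own_accuracy


if __name__ == "__main__":
    trust = TrustCalibrator()
    for outcome in [True] * 8 + [False] * 2:
        trust.observe(outcome)
    print(trust.estimated_accuracy(), trust.should_delegate(0.7))
```

After eight correct and two wrong outputs the estimate is 0.75, enough to delegate for a user whose own accuracy is 0.7. Six further errors pull it back to 0.5, and delegation stops. That reversal is the calibration step: reliance tracks observed performance rather than a one-off judgment.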

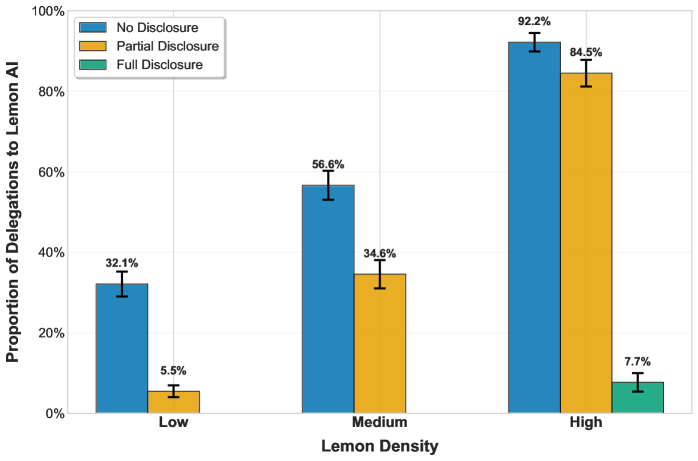

The prevalence of low-quality AI systems within a given market significantly impacts user trust and reliance. A ‘LowDensityLemons’ scenario, characterized by a small proportion of ineffective AI, cultivates higher levels of trust as users are less frequently exposed to poor performance. Conversely, a ‘HighDensityLemons’ scenario, where a large number of AI systems are unreliable, erodes user confidence, leading to decreased delegation even when high-performing systems are present. This dynamic demonstrates that overall market quality, not just individual system performance, is a critical factor in the successful adoption of AI technologies.
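A back-of-the-envelope calculation shows why density matters when users cannot tell systems apart. Assume just two kinds of system: good ones with accuracy `good` and lemons with accuracy `lemon`. A user who delegates blindly gets the market-average accuracy, which falls linearly as the lemon share `density` rises. Blind delegation stops paying off once that average drops below the user's own accuracy. All parameter names and values here are hypothetical.

```python
def market_accuracy(density, good, lemon):
    """Average accuracy of a randomly chosen system when a share `density` are lemons."""
    return (1 - density) * good + density * lemon


def break_even_density(good, lemon, own_accuracy):
    """Lemon share at which blind delegation is exactly as good as doing it yourself.

    Solves (1 - d) * good + d * lemon == own_accuracy for d.
    Assumes lemon < own_accuracy < good.
    """
    if not lemon < own_accuracy < good:
        raise ValueError("expected lemon < own_accuracy < good")
    return (good - own_accuracy) / (good - lemon)


if __name__ == "__main__":
    d_star = break_even_density(good=0.9, lemon=0.3, own_accuracy=0.7)
    print(round(d_star, 3))  # 0.333
    for density in (0.1, 0.5):
        print(density, round(market_accuracy(density, 0.9, 0.3), 2))
```

With these made-up numbers, blind delegation beats working alone only while fewer than a third of systems are lemons. In a high-density market the average falls below that line. Withdrawing from delegation is then a reasonable response to uncertainty, even though it also forfeits the good systems that are still present.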

Research findings indicate that market context and disclosure level jointly shape user reliance on AI systems. In scenarios with a high prevalence of unreliable AI, termed ‘high-density lemon’ environments, delegation to AI remained at 57.7% even when users were fully informed about each system’s capabilities, suggesting a degree of under-reliance. Conversely, partial disclosure of AI limitations, combined with low to medium densities of unreliable systems, increased users’ coin earnings in the simulated market, implying that strategic information management can improve both user acceptance and outcomes. These results highlight that full transparency does not guarantee appropriate trust calibration and that the broader market landscape significantly affects how users interact with and delegate tasks to AI.

Forging Robust Ecosystems: A Path Forward

The successful integration of artificial intelligence hinges on overcoming a fundamental challenge: information asymmetry. Often, the inner workings of AI systems remain opaque, even to experts, creating a power imbalance between those who develop and deploy these technologies and those who are impacted by them. This lack of transparency erodes trust and hinders widespread adoption. Fostering ‘trust calibration’ – a nuanced understanding of an AI’s capabilities and limitations – is therefore critical. It requires moving beyond simple assurances of accuracy and instead providing clear, accessible explanations of how an AI arrives at its conclusions. Without this, users are left unable to appropriately weigh the risks and benefits, ultimately limiting the potential of AI to drive innovation and improve decision-making across all sectors. A calibrated trust, built on informed understanding, is not merely desirable – it is the cornerstone of a robust and beneficial AI ecosystem.

Successfully deploying artificial intelligence in intricate real-world situations demands more than algorithmic prowess; it requires a deliberate pairing of transparency and human judgment. Proactive disclosure strategies, where the reasoning behind an AI’s decisions is readily available for examination, build critical trust and facilitate error correction. However, complete automation is often insufficient, especially in ambiguous or high-stakes scenarios. The ‘HumanInTheLoop’ approach, which integrates human oversight into the decision-making process, therefore allows for nuanced evaluation, ethical consideration, and the contextual understanding that algorithms may lack. This collaborative model doesn’t merely correct errors; it fosters continuous learning for both the AI and the human operators, ultimately leading to more robust, reliable, and responsible AI systems capable of navigating complexity with greater confidence.

The current AI landscape often resembles a ‘lemons market’, where asymmetric information allows low-quality models to proliferate, hindering genuine progress and eroding trust. However, a transition towards a ‘LowDensityLemons’ market, one emphasizing quality, transparency, and rigorous evaluation, could unlock broader AI adoption and foster genuine innovation. This shift requires not only standardized benchmarks and accessible model documentation, but also mechanisms for verifying performance claims and mitigating biases. By prioritizing demonstrably reliable AI systems, developers can cultivate a marketplace where users confidently integrate these tools, driving further refinement and expansion of beneficial applications across diverse fields. Such a transparent ecosystem rewards investment in robust AI rather than sheer quantity, ultimately accelerating the realization of AI’s full potential.

The successful and ethical implementation of artificial intelligence hinges on a unified approach involving those who create the technology, those who utilize it, and those who govern its application. Developers must prioritize transparency and accountability in algorithm design, while users require the tools and education to critically evaluate AI-driven outputs and provide meaningful feedback. Crucially, policymakers play a vital role in establishing clear ethical guidelines, safety standards, and regulatory frameworks that foster innovation while mitigating potential risks. This collaborative ecosystem, where expertise is shared and perspectives are valued, is not merely a matter of risk management; it is fundamental to building public trust and unlocking the transformative potential of AI for the benefit of all.

The research illuminates a fascinating paradox: increased transparency regarding AI capabilities doesn’t automatically translate to optimal market outcomes. This echoes Donald Knuth’s observation that “Premature optimization is the root of all evil.” Rushing to optimize code before understanding its fundamental behavior can lead to complications. In the same way, assuming that full disclosure will solve adoption issues overlooks the complexities of human calibration and trust. The study demonstrates that a carefully calibrated disclosure strategy, one that acknowledges AI limitations alongside strengths, is crucial. It’s not simply about revealing information, but about understanding how that information is processed and integrated into decision-making, much like refining an algorithm for efficiency and clarity.

What’s Next?

The findings suggest a peculiar landscape for AI integration. It isn’t simply a matter of revealing what an algorithm can do, but rather, calibrating expectations around what it will do, and, crucially, what it is likely to fail at. One wonders if the ‘lemon density’ isn’t an inherent feature of complex systems, a necessary byproduct of pushing boundaries. Perhaps the pursuit of perfect transparency is a misdirection; the real challenge lies in designing systems that gracefully reveal their limitations, signaling the edges of competence rather than attempting to erase them.

Future work should investigate the interplay between disclosure, trust, and the very definition of ‘expertise’ in a human-AI collaborative context. If delegation to AI is optimal only under specific conditions of asymmetric information, then the research raises a deeper question: are humans naturally inclined to seek just enough information to justify reliance, or are they predisposed to over- or under-calibrate trust? The temptation to treat opacity as a bug, rather than a feature of complex intelligence, must be resisted.

The field now needs to move beyond simply measuring the effects of information asymmetry and begin to explore the design of ‘trust architectures’ – systems that actively manage the flow of information, not to eliminate uncertainty, but to make it legible. What if the ‘bug’ isn’t a flaw, but a signal? A notification of impending failure, perhaps, or a subtle cue to re-evaluate the boundaries of delegation. The pursuit of truly intelligent systems may depend on embracing the inherent imperfection of both the machine and the human who wields it.

Original article: https://arxiv.org/pdf/2601.21650.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-30 19:11