Author: Denis Avetisyan

A new activation function, Brownian ReLU, leverages the principles of Brownian motion to improve the performance of long short-term memory networks.

This paper introduces Brownian ReLU (Br-ReLU) and reports that it outperforms standard activation functions in LSTM networks applied to financial time series forecasting and classification tasks.

Despite the demonstrated efficacy of deep learning for sequential data, standard activation functions can struggle with the inherent noise and non-stationarity of financial time series. This paper introduces Brownian ReLU (Br-ReLU), a novel activation function for Long Short-Term Memory (LSTM) networks, inspired by the principles of Brownian motion to enhance gradient propagation and learning stability. Experimental results across diverse financial datasets (Apple stock, GCB, the S&P 500, and LendingClub loan data) indicate that Br-ReLU consistently outperforms traditional activations like ReLU and LeakyReLU in both forecasting and classification tasks. Could this stochastic approach unlock further improvements in the predictive power of recurrent neural networks for complex, real-world time series analysis?

The Illusion of Predictability in Financial Markets

The pursuit of reliable financial forecasting underpins nearly all investment decisions, yet achieving consistent accuracy remains a formidable challenge. Traditional statistical models, while offering a foundation for understanding market behavior, frequently falter when confronted with the inherent volatility and non-linear complexities of financial time series. These models often assume stable relationships within the data, an assumption quickly invalidated by unpredictable events and the dynamic interplay of countless factors influencing asset prices. Consequently, even sophisticated econometric techniques can produce forecasts with significant errors, particularly over extended time horizons, limiting their practical utility for informed investment strategies and risk management. The ever-present noise and chaotic elements within financial markets demand increasingly robust and adaptive forecasting methodologies.

Financial forecasting has long sought methods to discern patterns within the inherent chaos of market data. Recurrent Neural Networks (RNNs) represented a significant shift in approach, moving beyond static models to those capable of processing sequential information. Unlike traditional neural networks that treat each data point independently, RNNs possess a “memory” allowing them to consider past values when predicting future ones. Within the RNN family, Long Short-Term Memory (LSTM) networks gained prominence due to their architecture specifically designed to address the challenges of learning from extended time series. By incorporating mechanisms to retain relevant information over numerous time steps, LSTMs offered a potential solution for capturing the complex, often subtle, temporal dependencies crucial for anticipating market trends and informing investment strategies. This capability positioned LSTMs as a compelling tool for analysts seeking to improve predictive accuracy in the volatile realm of finance.
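As a rough illustration of that “memory”, the sketch below implements a single time step of a standard LSTM cell, reduced to a scalar input and scalar state so it stays self-contained. The parameter names (`wi`, `ui`, `bi`, and so on) are hypothetical, and the `act` argument only marks where an activation function plugs into the cell; this summary does not say exactly which LSTM activations Br-ReLU replaces.

```python
import math
from typing import Callable, Dict, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(
    x: float,
    h_prev: float,
    c_prev: float,
    p: Dict[str, float],
    act: Callable[[float], float] = math.tanh,
) -> Tuple[float, float]:
    """One step of a scalar LSTM cell; returns the new (hidden, cell) state."""
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])  # input gate
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])  # forget gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])  # output gate
    g = act(p["wg"] * x + p["ug"] * h_prev + p["bg"])      # candidate update
    c = f * c_prev + i * g  # gated, additive update lets memory persist across steps
    h = o * act(c)          # hidden state passed to the next step and layer
    return h, c


# Usage: run the cell over a short sequence with arbitrary illustrative weights.
keys = ["wi", "ui", "bi", "wf", "uf", "bf", "wo", "uo", "bo", "wg", "ug", "bg"]
params = {k: 0.5 for k in keys}
h, c = 0.0, 0.0
for x_t in [0.1, -0.2, 0.3]:
    h, c = lstm_step(x_t, h, c, params)
```

The forget gate `f` scales the previous cell state directly, which is the mechanism that lets information survive over many steps.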

Standard Long Short-Term Memory networks were designed to address the limitations of earlier recurrent neural networks, but they mitigate the vanishing gradient problem rather than eliminate it. As error signals propagate backward through many time steps, the gradients used to update the network’s weights are repeatedly multiplied by per-step factors; when those factors are below one, the gradients shrink exponentially, effectively preventing the model from learning relationships between distant events in the time series. Consequently, the network struggles to capture long-term dependencies (patterns spanning extended periods), which are often vital for accurate financial forecasting. This limits the model’s capacity to predict future values from historical data, hindering its performance in volatile financial markets where events far in the past can influence present conditions.
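The exponential shrinkage can be seen in a small numerical sketch. For a scalar vanilla RNN, h_t = tanh(w·h_{t-1} + x), the gradient of the last state with respect to the first is a product of per-step derivatives w·(1 - h_t²). For an LSTM, the direct path through the cell state multiplies forget-gate values instead, which is why gates near one help but do not remove the decay entirely. The weight, input, and forget-gate values below are arbitrary choices for illustration, and the LSTM function deliberately ignores the indirect paths through the gates.

```python
import math


def rnn_state_gradient(w: float, steps: int, x: float = 0.5) -> float:
    """|d h_T / d h_0| for the scalar RNN h_t = tanh(w * h_{t-1} + x), with h_0 = 0."""
    h, grad = 0.0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain rule: local derivative d h_t / d h_{t-1}
    return abs(grad)


def lstm_cell_path_gradient(forget_gate: float, steps: int) -> float:
    """d c_T / d c_0 along the direct cell-state path, assuming a constant forget gate."""
    return forget_gate ** steps


for T in (10, 50, 100):
    print(T, rnn_state_gradient(0.9, T), lstm_cell_path_gradient(0.95, T))
```

Even with every forget gate at 0.95, the direct cell-state gradient after 100 steps is below 0.006.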

Beyond Determinism: Embracing Stochasticity in Activation

The Rectified Linear Unit (ReLU) activation function, defined as f(x) = max(0, x), is susceptible to the “dying ReLU” problem, in which a substantial number of neurons become stuck in an inactive state during training. If a neuron’s pre-activation (the weighted sum of its inputs plus bias) is negative for every input it sees, its output is always zero, and so is the gradient flowing back through it. The weights feeding that neuron are then never updated, effectively halting learning for that unit. This is particularly problematic in deep networks, as it can significantly reduce the network’s effective capacity and hinder its ability to model complex relationships within the data.
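A minimal sketch of the effect: a single ReLU neuron y = max(0, w·x + b) trained by gradient descent on squared error. The toy data, learning rate, and initial values are hypothetical. With a strongly negative bias, every pre-activation is negative, every gradient is zero, and the update leaves the parameters unchanged.

```python
from typing import List, Tuple


def relu(z: float) -> float:
    return max(0.0, z)


def relu_grad(z: float) -> float:
    # Derivative of max(0, z); at z == 0 we use 0, a common convention.
    return 1.0 if z > 0 else 0.0


def train_step(
    w: float, b: float, data: List[Tuple[float, float]], lr: float = 0.1
) -> Tuple[float, float]:
    """One gradient-descent step on mean squared error for y = relu(w * x + b)."""
    gw = gb = 0.0
    for x, target in data:
        z = w * x + b
        err = relu(z) - target
        gw += 2.0 * err * relu_grad(z) * x
        gb += 2.0 * err * relu_grad(z)
    n = len(data)
    return w - lr * gw / n, b - lr * gb / n


data = [(1.0, 1.0), (2.0, 2.0)]
print(train_step(0.5, -5.0, data))  # "dead" neuron: parameters do not move
print(train_step(0.5, 0.0, data))   # active neuron: parameters are updated
```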

Leaky ReLU and Parametric ReLU (PReLU) attempt to mitigate the dying ReLU problem by introducing a small, non-zero slope for negative inputs: Leaky ReLU uses a fixed slope, while PReLU learns the slope during training. However, both functions are deterministic (a given input always produces the same output), so they do not exploit the stochasticity inherent in financial time series. Financial data exhibit unpredictable fluctuations and noise, and a fixed or learned slope on negative values does not capture this random behavior. This may limit the model’s ability to represent and forecast financial dynamics compared with methods designed to incorporate stochastic elements explicitly.
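For comparison, here is a sketch of both variants together with the PReLU gradients. The default slope of 0.01 is a common choice for Leaky ReLU, not a value taken from the paper. The output depends only on the input (and on α for PReLU), so the same input always yields the same value.

```python
from typing import Tuple


def leaky_relu(x: float, slope: float = 0.01) -> float:
    # Fixed, non-trainable slope for negative inputs.
    return x if x > 0 else slope * x


def prelu(x: float, alpha: float) -> float:
    # Same shape as Leaky ReLU, but alpha is learned during training.
    return x if x > 0 else alpha * x


def prelu_grads(x: float, alpha: float) -> Tuple[float, float]:
    """Return (d out / d x, d out / d alpha) for PReLU."""
    if x > 0:
        return 1.0, 0.0
    return alpha, x  # negative side: out = alpha * x


# Unlike ReLU, a negative input still passes gradient back to x and to alpha.
print(prelu_grads(-2.0, 0.25))
```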

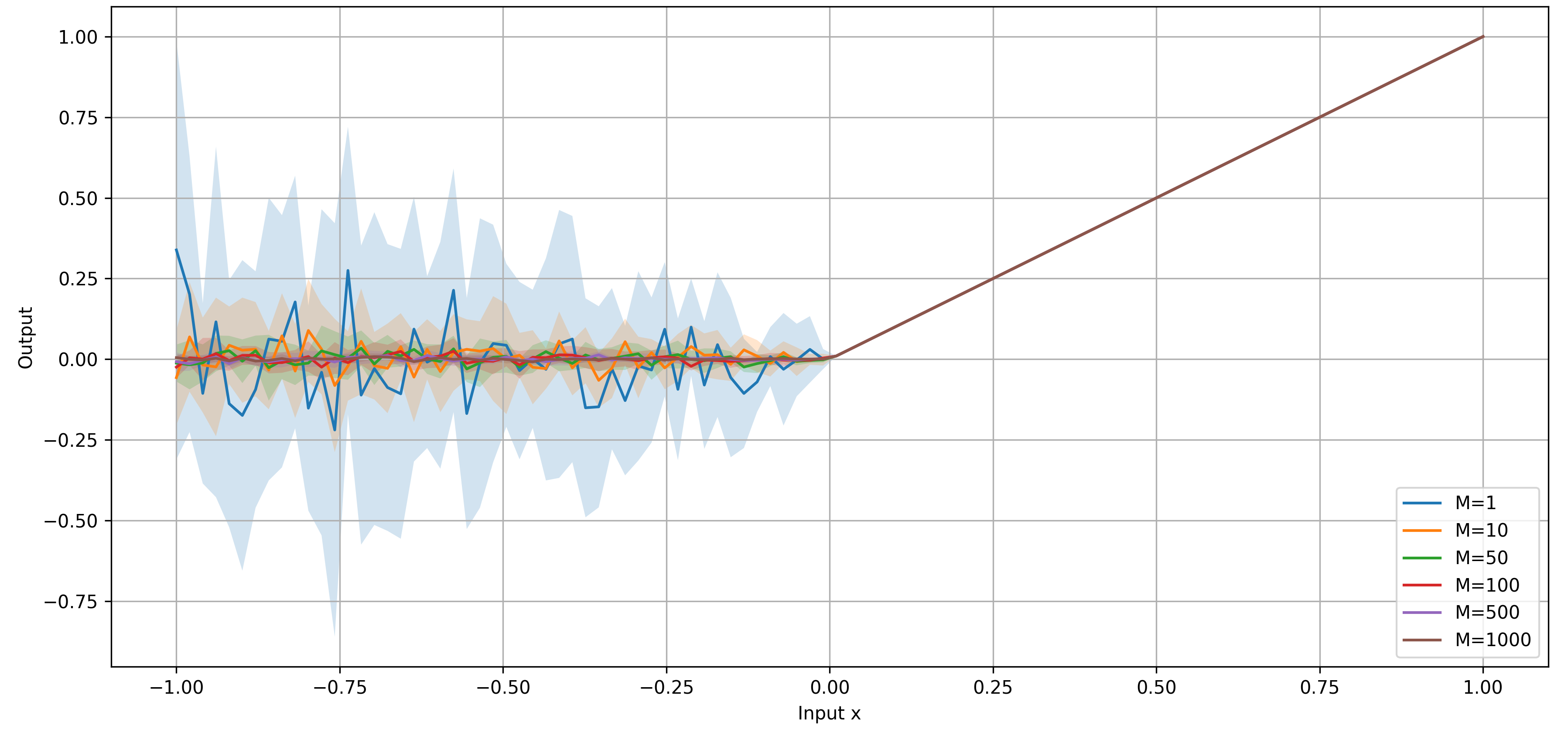

Brownian ReLU introduces stochasticity into the activation process by perturbing the nonlinearity with noise derived from Brownian motion, a continuous-time stochastic process. Specifically, the standard ReLU function, f(x) = max(0, x), is modified by adding a normally distributed random variable with a mean of zero and a variance controlled by a learnable parameter. This creates a fluctuating activation threshold, so the neuron’s response can vary even for the same input. Because the variance is learned, the network can adapt the degree of stochasticity during training. The intended benefits are a form of regularization that may improve generalization, and a better fit to the noise inherent in financial time series data.
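The description above can be read as the following sketch. Several details are assumptions rather than details taken from the paper: the noise is added inside the max, so it shifts the activation threshold (matching the “fluctuating threshold” description); the noise scale is σ = softplus(ρ), with ρ the learnable parameter, which keeps σ positive; noise is switched off at inference; and the gradient with respect to ρ uses the reparameterization trick, y = max(0, x + σz) with z ~ N(0, 1). The function name `br_relu` is hypothetical.

```python
import math
import random
from typing import Tuple


def softplus(r: float) -> float:
    return math.log1p(math.exp(r))


def br_relu(
    x: float, rho: float, rng: random.Random, training: bool = True
) -> Tuple[float, float, float]:
    """Hypothetical Br-ReLU sketch: y = max(0, x + sigma * z), z ~ N(0, 1).

    Returns (y, dy/dx, dy/drho), where sigma = softplus(rho) is the learnable noise scale.
    """
    sigma = softplus(rho)
    z = rng.gauss(0.0, 1.0) if training else 0.0  # assumption: plain ReLU at inference
    pre = x + sigma * z
    if pre <= 0.0:
        return 0.0, 0.0, 0.0
    dsigma_drho = 1.0 / (1.0 + math.exp(-rho))  # derivative of softplus is the sigmoid
    return pre, 1.0, z * dsigma_drho  # reparameterization: d pre / d sigma = z


rng = random.Random(42)
samples = [br_relu(0.5, 0.0, rng)[0] for _ in range(5)]  # same input, varying outputs
```

Placing the noise outside the max, as in max(0, x) + σz, is another plausible reading of “adding” noise to ReLU; under that form negative inputs would still receive zero gradient, so the choice matters.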

Modeling Uncertainty: The Principles of Brownian ReLU

Brownian ReLU draws on Brownian motion, a mathematical model of particles undergoing random displacement over time. Its standard mathematical form, the Wiener process W(t), starts at W(0) = 0 and has independent Gaussian increments, so that W(t) has mean zero and variance t. In Br-ReLU, this process supplies a time-varying noise component that is added to the standard ReLU function, effectively creating a dynamic activation threshold. The resulting activation is no longer a fixed value for a given input but a random quantity whose spread is governed by the characteristics of the Brownian noise. This dynamic adjustment aims to introduce controlled randomness into the network’s processing, potentially mitigating issues associated with static activation functions.
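The defining property of the Wiener process, mean zero and variance t, can be checked by simulation: build paths from independent N(0, dt) increments and measure their spread at different times. This sketch only illustrates the process itself. Whether Br-ReLU draws its noise from the increments W(t + dt) - W(t) or from the accumulated value W(t) is not specified here, and the step size and path counts are arbitrary.

```python
import math
import random
from typing import List


def wiener_path(n_steps: int, dt: float, rng: random.Random) -> List[float]:
    """Sample W(0) = 0, W(dt), ..., W(n_steps * dt) from independent N(0, dt) increments."""
    w, path = 0.0, [0.0]
    for _ in range(n_steps):
        w += rng.gauss(0.0, math.sqrt(dt))  # gauss takes the standard deviation
        path.append(w)
    return path


def empirical_variance_at(n_steps: int, dt: float, n_paths: int = 2000, seed: int = 0) -> float:
    """Sample variance of W(n_steps * dt) across independent paths."""
    rng = random.Random(seed)
    finals = [wiener_path(n_steps, dt, rng)[-1] for _ in range(n_paths)]
    mean = sum(finals) / n_paths
    return sum((v - mean) ** 2 for v in finals) / n_paths


dt = 0.01
for steps in (100, 400):  # t = 1.0 and t = 4.0
    print(steps * dt, empirical_variance_at(steps, dt))
```

The measured variance should come out close to t at each time, up to sampling error.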

Injecting noise during the forward pass is intended to help the network adapt to its input data. The stochastic component perturbs the activation landscape, which may reduce the likelihood that training stalls in poor local optima. By breaking the determinism of standard activation functions, Brownian ReLU encourages exploration of a broader solution space and can improve gradient flow: with a fluctuating threshold, a neuron whose pre-activation is slightly negative can still activate on some noise draws, and therefore still receives gradient. The goal is more robust and efficient learning than static activation functions provide, particularly on complex, high-dimensional datasets.
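The gradient-flow argument can be made concrete under an assumed form in which the noise is added inside the max. For y = max(0, x + σz) with z ~ N(0, 1), the derivative with respect to x is 1 when x + σz > 0 and 0 otherwise, so its expectation is P(z > -x/σ) = Φ(x/σ), where Φ is the standard normal CDF. This is positive for every x when σ > 0, whereas plain ReLU gives exactly 0 for x < 0. The sketch compares the closed form with a Monte Carlo estimate; the values σ = 0.5 and x = -1 are arbitrary.

```python
import math
import random


def expected_grad_closed_form(x: float, sigma: float) -> float:
    """E[d/dx max(0, x + sigma*z)] = P(x + sigma*z > 0) = Phi(x / sigma)."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))


def expected_grad_monte_carlo(x: float, sigma: float, n: int = 100_000, seed: int = 0) -> float:
    """Average of the sampled derivative 1[x + sigma*z > 0] over n noise draws."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if x + sigma * rng.gauss(0.0, 1.0) > 0.0)
    return hits / n


x, sigma = -1.0, 0.5
print(expected_grad_closed_form(x, sigma), expected_grad_monte_carlo(x, sigma))
```

This shows only that a “dead” input still receives a nonzero expected gradient; it says nothing by itself about escaping local optima.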

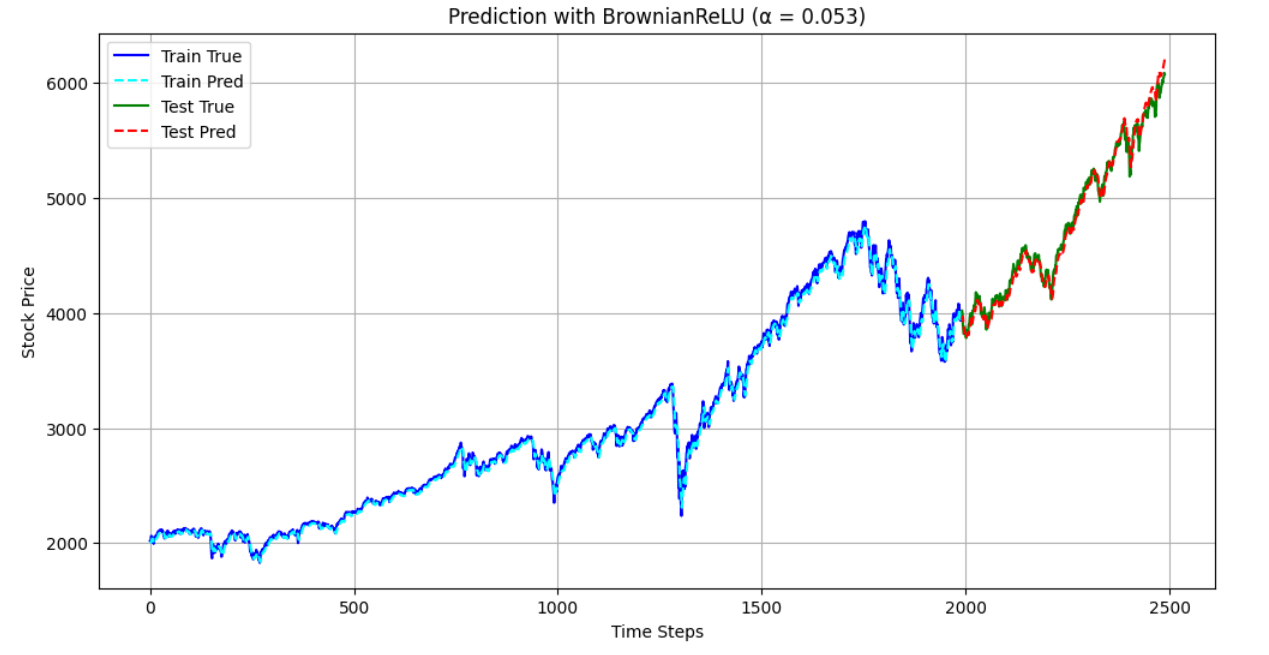

Model performance was quantitatively assessed using Mean Squared Error (MSE) and the Coefficient of Determination (R^2) on financial time series data from Apple, GCB (Goldman Sachs), and the S&P 500. Results demonstrated that the Brownian ReLU consistently achieved the lowest MSE and highest R^2 values compared to standard ReLU, LeakyReLU, and PReLU activations across all three datasets. This indicates superior predictive accuracy and a better fit to the observed financial data when utilizing the Brownian ReLU activation function.
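For reference, the two metrics follow the standard definitions sketched below. MSE is the mean squared residual, and R^2 = 1 - SS_res/SS_tot compares the residuals with the spread of the targets around their mean (the same definitions used by, for example, scikit-learn’s `mean_squared_error` and `r2_score`). The toy values are made up, and the summary does not describe the paper’s scaling or train/test split.

```python
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean) ** 2 for t in y_true)               # total sum of squares
    return 1.0 - ss_res / ss_tot


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
print(mse(y_true, y_pred), r2(y_true, y_pred))
```

A model that always predicts the mean scores R^2 = 0, so values near 1 indicate that most of the target variance is explained.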

The Architecture of Prediction: Implications and Future Trajectories

The paper integrates Brownian ReLU into Long Short-Term Memory (LSTM) networks for financial time series forecasting. Across the Apple, GCB, and S&P 500 datasets, the Br-ReLU models outperform the ReLU, LeakyReLU, and PReLU baselines, with Mean Squared Error (MSE) values of 0.002035 for Apple, 0.000275 for GCB, and 0.000242 for the S&P 500, and R-squared values of 0.9381, 0.9869, and 0.9891, respectively. These results suggest that introducing stochasticity into the activation process can help the model capture the uncertainty and complex dynamics of financial markets, yielding more accurate predictions on these benchmarks.
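Because R^2 = 1 - MSE/Var(y) when both metrics are computed on the same test targets (with Var the population variance), each reported pair implies the variance of the target series: Var(y) = MSE/(1 - R^2). The short calculation below applies this to the reported figures. It gives roughly 0.02 to 0.03 for all three series, indicating that the metrics are computed on a small, presumably normalized, scale rather than in raw price units; the summary does not state the scaling used.

```python
import math

# (MSE, R^2) pairs as reported in the text for the Br-ReLU LSTM models.
reported = {
    "Apple": (0.002035, 0.9381),
    "GCB": (0.000275, 0.9869),
    "S&P 500": (0.000242, 0.9891),
}


def implied_target_variance(mse: float, r2: float) -> float:
    """Var(y) implied by R^2 = 1 - MSE / Var(y), with both computed on the same targets."""
    return mse / (1.0 - r2)


for name, (m, r) in reported.items():
    var_y = implied_target_variance(m, r)
    rel_rmse = math.sqrt(1.0 - r)  # RMSE as a fraction of the target standard deviation
    print(f"{name}: implied Var(y) = {var_y:.4f}, RMSE/std(y) = {rel_rmse:.3f}")
```

The second figure, sqrt(1 - R^2), expresses the typical error as a fraction of each series’ own standard deviation, which is easier to compare across datasets than raw MSE.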

Financial markets are inherently dynamic systems, characterized by unpredictable fluctuations and complex interdependencies that challenge traditional forecasting models. This research addresses these challenges with stochastic activation functions, specifically Brownian ReLU within Long Short-Term Memory networks. The motivation is that injecting Brownian noise echoes the random walk often observed in asset prices, which may help the model track the nuanced and often chaotic behavior of financial time series. The approach is framed as more than a short-term accuracy gain: the aim is to capture long-term dependencies that market volatility typically obscures, providing a more robust foundation for financial analysis and decision-making.

The loan classification results on LendingClub data are more mixed. The Br-ReLU model reaches an overall accuracy of 0.7802, but its ROC-AUC of 0.5148 is only marginally above the 0.5 expected from random ranking, indicating limited ability to separate positive from negative cases. Its recall of 0.2446 means it identifies roughly 24% of actual positive cases and misses about three quarters of them. Because accuracy alone can look strong when classes are imbalanced, these numbers suggest that further refinement is needed to improve discrimination and to balance recall against false positives.
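To see why accuracy and ROC-AUC can tell different stories, the sketch below computes accuracy, recall, and ROC-AUC. ROC-AUC is computed via its rank interpretation: the probability that a randomly chosen positive scores above a randomly chosen negative, with ties counted as one half. The toy labels are invented and imbalanced (20% positives); they are not LendingClub data. A model that always predicts the majority class already reaches 0.8 accuracy, yet its recall is 0 and its ROC-AUC is 0.5.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical imbalanced labels: 2 positives out of 10.
y = [0, 0, 0, 0, 1, 0, 0, 0, 1, 0]
majority = [0] * len(y)       # always predict the majority class
flat_scores = [0.3] * len(y)  # uninformative scores
print(accuracy(y, majority), recall(y, majority), roc_auc(y, flat_scores))
```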

The pursuit of novel activation functions, as demonstrated by Brownian ReLU, echoes a fundamental truth: systems are not sculpted, but cultivated. The researchers present Br-ReLU as a response to the limitations of established methods in handling temporal dependencies within financial forecasting, a domain where even minute shifts can propagate into significant deviations. As Arthur C. Clarke observed, “Any sufficiently advanced technology is indistinguishable from magic.” This holds a particular resonance here: the stochastic element introduced by Brownian motion isn’t merely a technical adjustment, but an attempt to give the network the capacity to navigate the inherent unpredictability of complex systems. The observed improvements in gradient propagation and forecasting accuracy suggest the network is, indeed, beginning to grow into a more resilient form.

The Shifting Sands

The introduction of Brownian ReLU is, predictably, not about better forecasts. Every novel activation function promises a fleeting edge, until the market finds a way to exploit its predictability. This work suggests a move toward acknowledging inherent noise, injecting stochasticity not as a corrective measure but as a fundamental property of the model itself. It implies a quiet admission: perfect prediction is a mirage, and adaptation to uncertainty is the only true optimization. The real question isn’t whether Br-ReLU outperforms its predecessors, but whether it delays the inevitable descent into diminishing returns.

Future efforts will likely center not on refining the Brownian element, but on understanding its interplay with the recurrent structure. LSTM networks, for all their sophistication, remain brittle in the face of truly chaotic systems. A deeper investigation into the emergent properties of combining stochastic activation with memory cells may reveal whether such a hybrid can move beyond incremental gains and toward genuine resilience. Or, it may simply create a more complex failure mode.

The pursuit of novel activation functions is a symptom, not a solution. The underlying problem is a faith in static architectures. Order is just a temporary cache between failures. The next step isn’t a better building block, but an acceptance that the structure itself must evolve, adapt, and perhaps even disassemble, as the landscape shifts beneath it.

Original article: https://arxiv.org/pdf/2601.16446.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 14:14