Author: Denis Avetisyan

A new framework, WebClipper, dramatically improves the efficiency of web-based AI agents by intelligently eliminating unproductive exploration paths.

WebClipper utilizes graph-based trajectory pruning and a novel F-AE score to balance accuracy and efficiency in web agent evolution.

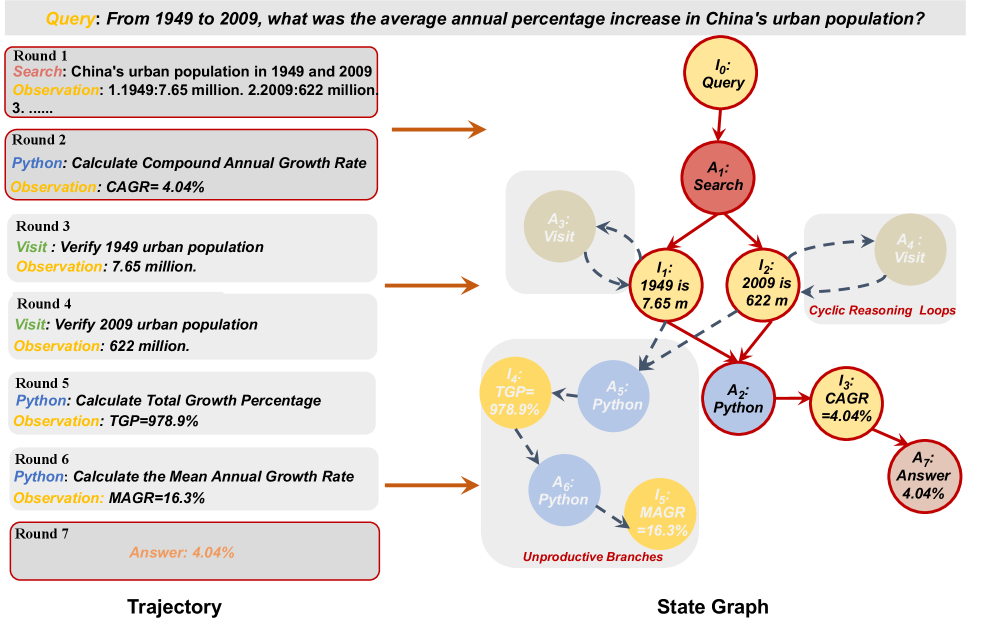

Despite the promise of deep research systems powered by web agents, their search efficiency often lags due to lengthy and redundant tool-call trajectories. To address this, we introduce WebClipper: Efficient Evolution of Web Agents with Graph-based Trajectory Pruning, a framework that compresses these trajectories by modeling the agent’s search as a state graph and optimizing for a minimum-necessary Directed Acyclic Graph (DAG). This graph-based pruning reduces tool-call rounds by approximately 20% while maintaining, and in some cases improving, accuracy, as quantified by a novel F-AE score measuring the accuracy-efficiency trade-off. Can this approach unlock a new generation of web agents capable of truly efficient and reliable information seeking?

The Erosion of Reasoning: Challenges in Web Agent Navigation

Contemporary web agents frequently encounter limitations when tasked with information retrieval requiring intricate, multi-step reasoning. These agents, designed to automate web-based tasks, often struggle to effectively decompose complex queries into a series of logical steps necessary to navigate the vast and often unstructured information landscape of the internet. This difficulty stems from the need to not only locate relevant data, but also to synthesize it, resolve ambiguities, and draw accurate conclusions – processes that demand a level of cognitive ability exceeding the capabilities of many current systems. Consequently, agents may return incomplete, irrelevant, or even misleading information, highlighting a significant challenge in achieving truly intelligent and autonomous web interaction.

Existing approaches to web agent navigation frequently stumble upon convoluted paths to information, generating unnecessarily lengthy and inefficient ‘trajectories’ through the digital landscape. This inefficiency isn’t merely a matter of wasted processing time; it fundamentally limits the practical deployment of these agents. Each additional step in a trajectory increases the likelihood of error, exacerbates computational demands, and drastically reduces scalability. Consequently, agents reliant on such methods struggle to handle complex tasks or operate effectively across large datasets, hindering their ability to deliver timely and accurate results in real-world applications. The sheer volume of steps required often surpasses practical thresholds for both resource allocation and user patience, necessitating the development of more streamlined reasoning techniques.

Pruning the Labyrinth: WebClipper’s Approach to Efficiency

WebClipper employs two primary methods for trajectory condensation: Trajectory-to-State-Graph Transformation and MNDAG Pruning. The Trajectory-to-State-Graph Transformation abstracts the sequence of actions into a graph representing transitions between key states, summarizing the agent’s reasoning process by focusing on significant state changes rather than individual actions. MNDAG Pruning, or Minimum-Necessary Directed Acyclic Graph pruning, then shortens the trajectory by iteratively removing nodes deemed redundant based on information gain. Together, the two steps yield a more compact representation of the trajectory while preserving essential information about the agent’s decision-making path.

WebClipper achieves trajectory condensation by representing the agent’s reasoning process not as a sequence of individual actions, but as transitions between key states. This abstraction disregards the specific actions taken to move between states, retaining only the initial and final states and the resulting change. By focusing on these essential state transitions, the length of the agent’s trajectory is significantly reduced without losing the core information regarding the problem-solving process. This method effectively summarizes the reasoning by highlighting what changed, rather than how it changed, leading to a more concise and efficient representation.
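The idea of collapsing a trajectory into state transitions and then dropping unproductive detours can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not WebClipper's actual algorithm: the state labels, the `(state_before, action, state_after)` step format, and both function names are hypothetical, and a breadth-first shortest path is used here as a simple stand-in for the minimum-necessary DAG described in the article.

```python
from collections import deque

def trajectory_to_state_graph(trajectory):
    """Collapse (state_before, action, state_after) steps into a directed
    graph keyed by state. Action labels are dropped (hypothetical format)."""
    graph = {}
    for before, _action, after in trajectory:
        graph.setdefault(before, set())
        graph.setdefault(after, set())
        if before != after:  # a step that changes nothing adds no edge
            graph[before].add(after)
    return graph

def prune_to_shortest_path(graph, start, goal):
    """Keep only the states on one shortest start-to-goal path (BFS).
    Assumption: a stand-in for MNDAG pruning, not the paper's method."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for nxt in sorted(graph.get(node, ())):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    if goal not in parent:
        return []  # goal unreachable: nothing to keep
    path = []
    node = goal
    while node is not None:
        path.append(node)
        node = parent[node]
    return path[::-1]

# A five-step trajectory containing one detour into a dead end and back.
trajectory = [
    ("question", "search A", "s1"),
    ("s1", "visit X", "dead_end"),
    ("dead_end", "go back", "s1"),
    ("s1", "search B", "s3"),
    ("s3", "visit Y", "answer"),
]
graph = trajectory_to_state_graph(trajectory)
pruned = prune_to_shortest_path(graph, "question", "answer")
print(pruned)
```

In this toy case the detour through `dead_end` disappears, so five recorded steps shrink to three state transitions. A real system would need a far richer notion of state than a string label.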

Coherence-Aware Thought Rewriting operates post-pruning to maintain semantic integrity within the condensed agent trajectories. This process identifies and corrects potential inconsistencies introduced by the removal of intermediate reasoning steps. Specifically, it analyzes the remaining state transitions to verify logical flow and ensures that conclusions remain valid given the abstracted reasoning path. The rewriting component utilizes a rule-based system, informed by the original, full trajectory, to reconstruct missing logical connections or rephrase statements to maintain coherence without reintroducing excessive length. This ensures the final trajectory represents a valid and understandable reasoning process, despite the reduction in steps.

Refining the Signal: Perplexity and Rejection Sampling in Thought Refinement

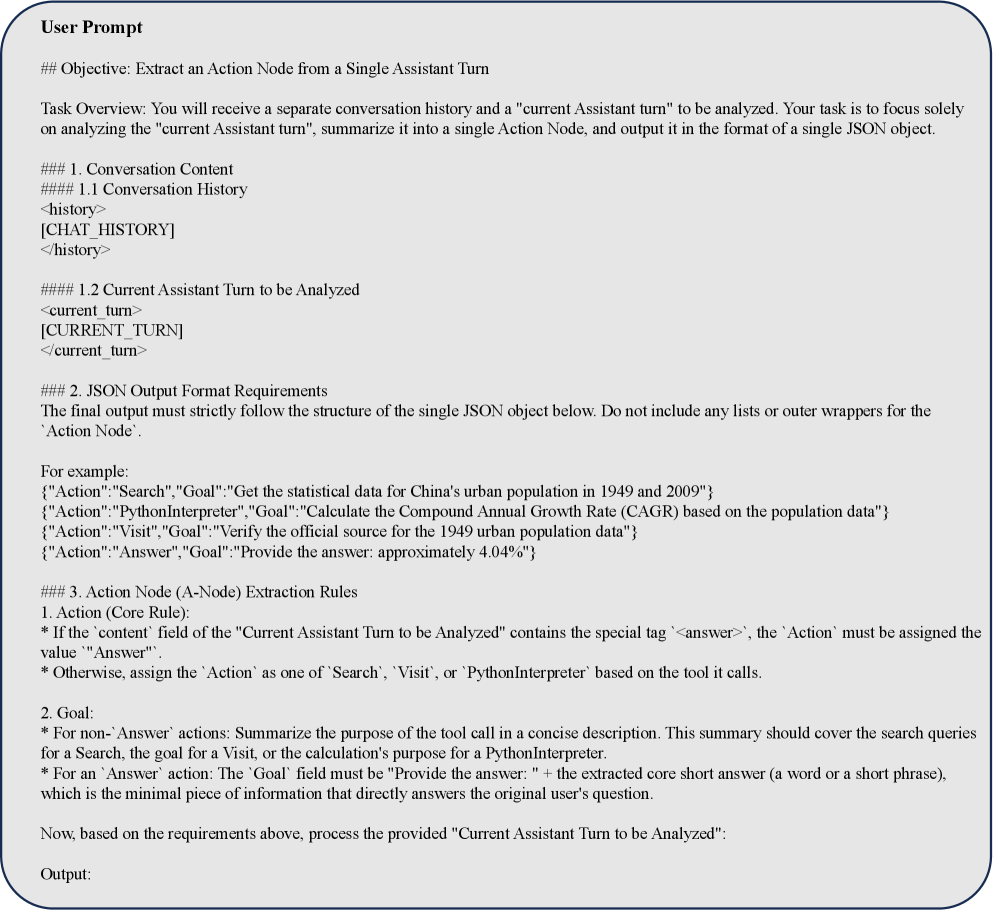

Perplexity-Based Selection operates by assessing the probability distribution predicted by a language model (LLM) for a given sequence of tokens. Lower perplexity scores indicate the LLM assigns higher probability to the rewritten thought, signifying greater stylistic consistency and fluency compared to the original or alternative revisions. This metric effectively quantifies how well a thought aligns with the patterns and structures the LLM has learned from its training data. Consequently, rewritten thoughts are prioritized based on these scores, with lower perplexity values indicating a stronger preference for those outputs during the refinement process. The selection isn’t absolute; it’s used to weight the probability of exploring particular thought trajectories, guiding the model towards more coherent and natural-sounding expressions.
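For reference, perplexity over a sequence of N tokens is the exponential of the negative mean token log-probability, PPL = exp(-(1/N) Σ log p_i). The sketch below computes it from per-token log-probabilities and ranks candidate rewrites by it. The function names and the example log-probability values are illustrative assumptions; in practice the log-probabilities would come from whichever LLM scores the rewritten thoughts, which the article does not specify.

```python
import math

def perplexity(token_logprobs):
    """Perplexity = exp of the negative mean natural-log token probability."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

def rank_by_perplexity(candidates):
    """Return (text, perplexity) pairs sorted from lowest to highest perplexity.
    `candidates` maps each candidate text to its token log-probabilities."""
    scored = [(text, perplexity(lps)) for text, lps in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1])

# Made-up log-probabilities for two hypothetical rewrites of the same thought.
candidates = {
    "fluent rewrite": [-0.1, -0.2, -0.1],
    "clunky rewrite": [-2.0, -1.5, -2.5],
}
ranked = rank_by_perplexity(candidates)
print(ranked[0][0])
```

The candidate at the head of the ranking is the one the scoring model finds most predictable, which is the sense of "stylistic consistency" used above.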

Rejection sampling is employed to filter generated thoughts based on their perplexity scores, effectively concentrating computational resources on more promising refinement paths. During each iteration, multiple thought trajectories are initially generated; however, those exceeding a predetermined perplexity threshold – indicating lower stylistic consistency or fluency – are discarded. This probabilistic filtering mechanism ensures that subsequent refinement steps, such as further rewriting by the LLM Extractor, are preferentially applied to trajectories exhibiting a higher likelihood of converging toward a high-quality, coherent thought, thereby improving overall efficiency and output quality.
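The filtering loop described above can be sketched generically. This is an illustration under assumptions, not the paper's procedure: `generate` and `score` are hypothetical callables (a candidate generator and a perplexity scorer), and the threshold, the number of candidates to keep, and the attempt cap are arbitrary example parameters.

```python
def rejection_sample(generate, score, threshold, n_keep, max_attempts=50):
    """Draw candidates from `generate` and keep those whose score is at or
    below `threshold`, stopping once `n_keep` are accepted or attempts run out."""
    accepted = []
    for _ in range(max_attempts):
        candidate = generate()
        if score(candidate) <= threshold:
            accepted.append(candidate)
            if len(accepted) == n_keep:
                break
    return accepted

# Deterministic stand-ins: a fixed pool of candidates with made-up perplexities.
pool = iter(["a", "b", "c", "d"])
fake_perplexity = {"a": 12.0, "b": 3.5, "c": 40.0, "d": 4.0}
kept = rejection_sample(lambda: next(pool), fake_perplexity.get,
                        threshold=5.0, n_keep=2)
print(kept)
```

Capping the number of attempts keeps the loop from running indefinitely when the threshold is too strict for the generator to satisfy.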

The LLM Extractor functions as a core component in the thought refinement process by providing the necessary parsing and interpretation of both initial and rewritten thoughts. Specifically, it is utilized to deconstruct complex thought structures into a standardized format suitable for quantitative evaluation, enabling the perplexity-based selection metric to accurately assess fluency and stylistic consistency. Furthermore, the Extractor is instrumental in generating candidate thoughts during the rewriting stage, ensuring that proposed revisions adhere to the established thought framework and facilitating a consistent basis for comparison during rejection sampling. This dual role – in both generation and analysis – establishes the LLM Extractor as a foundational element for reliable thought refinement.

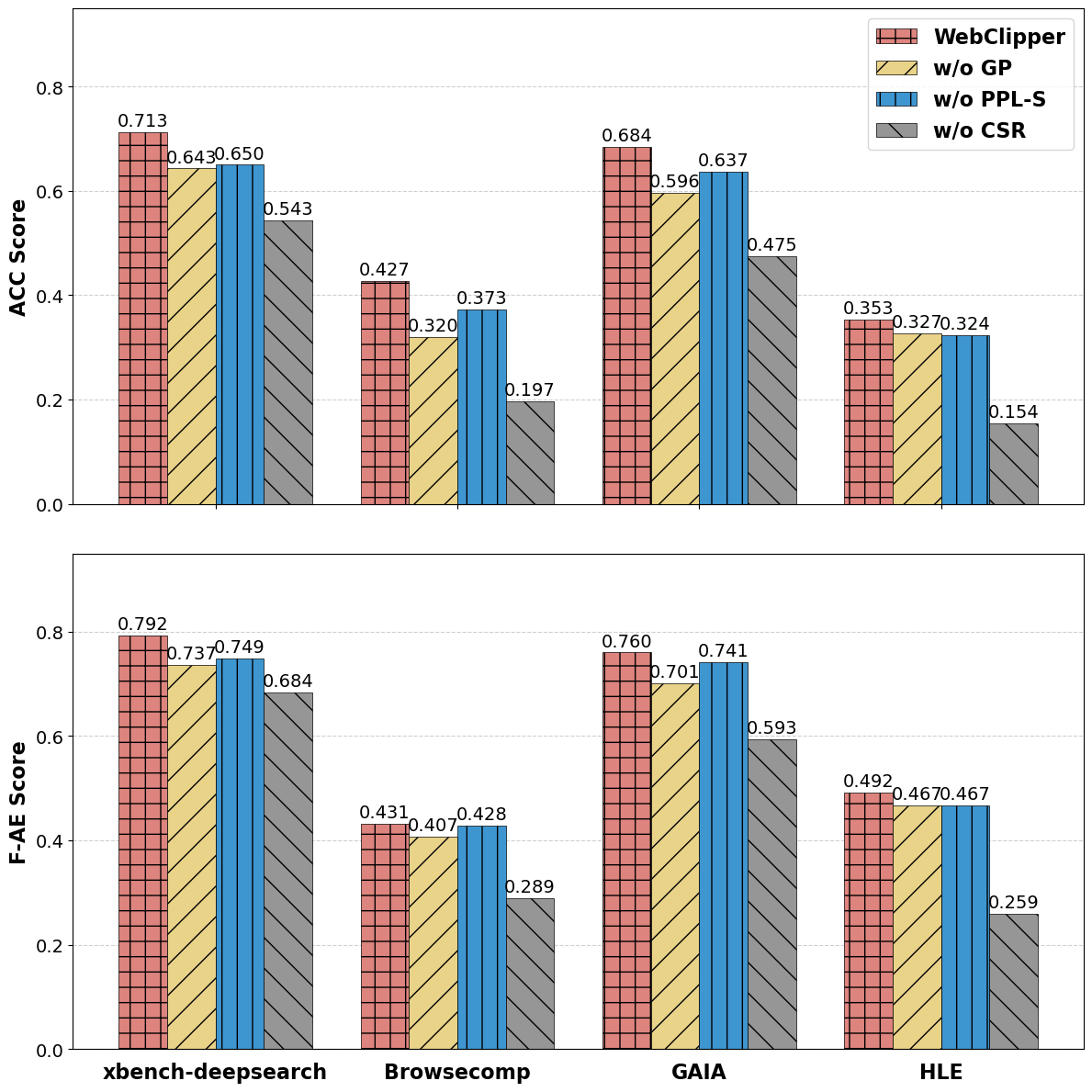

Measuring Graceful Decay: The F-AE Score and WebClipper’s Impact

The evaluation of web-based agents often presents a challenge: maximizing both the accuracy of information retrieval and the efficiency of the process. To address this, researchers have introduced the F-AE Score, a novel metric designed to quantify the trade-off between these two crucial aspects. This score doesn’t simply measure success or speed in isolation; instead, it synthesizes performance across both dimensions, providing a more holistic understanding of an agent’s capabilities. The F-AE Score considers not only whether an agent correctly extracts the desired information – its accuracy – but also how effectively it does so, measured by factors like the number of tool calls and tokens used. A higher F-AE Score indicates a superior balance, signifying an agent that achieves strong results with minimal resource consumption – a vital characteristic for practical deployment in real-world web interactions.
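This article does not give the F-AE formula, so the following is only a guess at its general shape, by analogy with the F1 score: a harmonic mean of an accuracy term and an efficiency term, both normalized to [0, 1]. The function name, the choice of harmonic mean, and the normalization of efficiency are all assumptions; consult the original paper for the actual definition.

```python
def harmonic_tradeoff(accuracy, efficiency):
    """Harmonic mean of two scores in [0, 1].
    Assumption: an F1-style stand-in, NOT the paper's F-AE definition."""
    if not (0.0 <= accuracy <= 1.0 and 0.0 <= efficiency <= 1.0):
        raise ValueError("both scores must lie in [0, 1]")
    if accuracy + efficiency == 0.0:
        return 0.0
    return 2.0 * accuracy * efficiency / (accuracy + efficiency)

# An accurate but wasteful agent versus a balanced one (made-up numbers).
lopsided = harmonic_tradeoff(0.9, 0.1)
balanced = harmonic_tradeoff(0.5, 0.5)
print(lopsided, balanced)
```

A harmonic mean rewards balance: the lopsided agent scores well below the balanced one even though the two have the same arithmetic mean. That is the kind of behavior a combined accuracy-efficiency metric would be expected to show.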

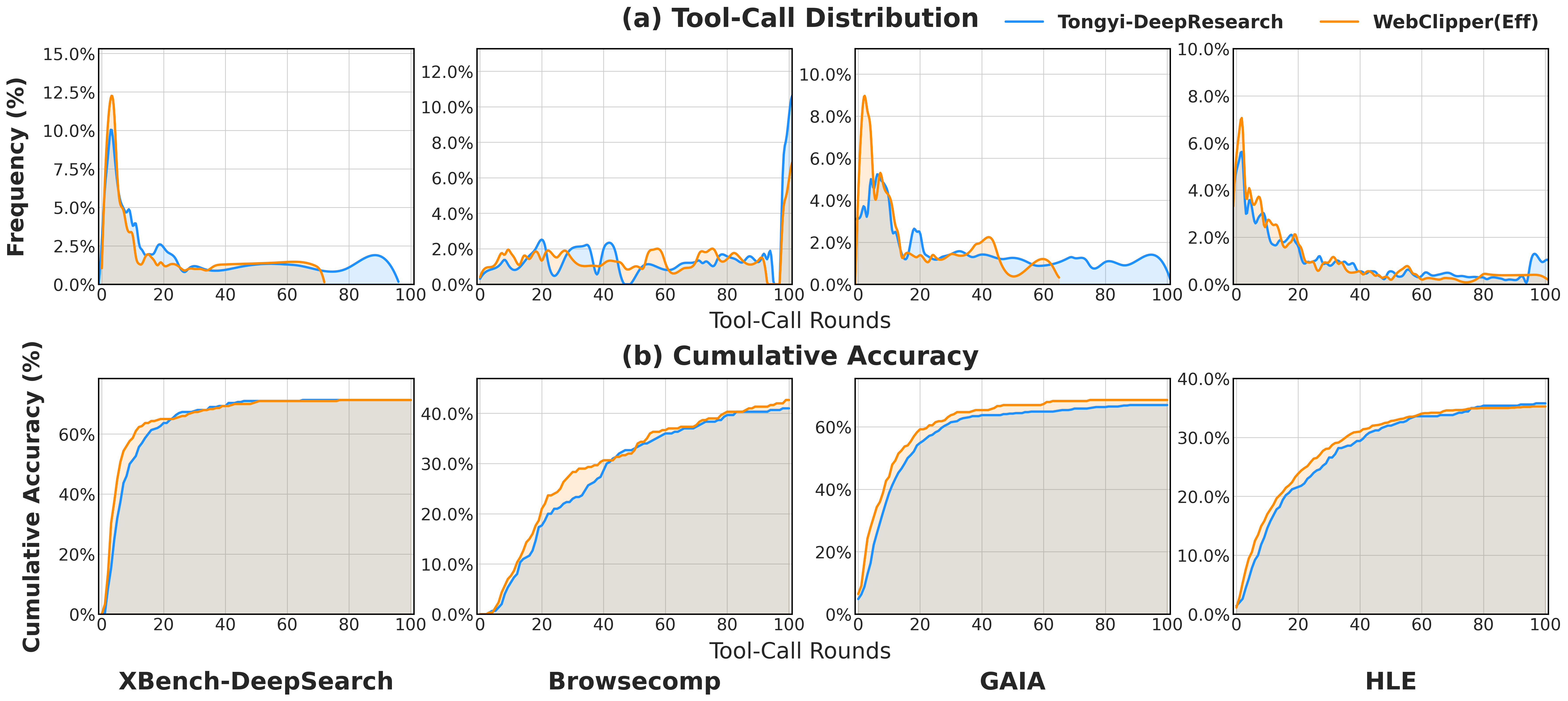

Rigorous experimentation reveals that WebClipper, through the implementation of its novel techniques, significantly optimizes operational efficiency in web-based tasks. Specifically, the agent demonstrates an approximate 21% reduction in the number of tool-call rounds required to complete a given task, indicating a streamlined interaction process. Furthermore, WebClipper exhibits a marked decrease in token usage – roughly 19.4% less than comparable baseline models – suggesting a more concise and resource-conscious approach to information processing and task execution. These combined improvements highlight WebClipper’s ability to achieve substantial gains in both speed and cost-effectiveness when navigating and interacting with web environments.

Evaluations reveal that WebClipper currently attains the leading F-AE Score when contrasted with other publicly available open-source web agent models. This superior performance isn’t solely attributable to heightened accuracy; rather, it signifies a carefully calibrated equilibrium between achieving correct results and doing so with minimal computational expense. The F-AE Score effectively quantifies this trade-off, and WebClipper’s leading position indicates its capacity to complete web-based tasks both reliably and efficiently – a crucial advancement for practical deployment in resource-constrained environments. This balanced approach distinguishes WebClipper, suggesting a more sustainable and scalable solution for automated web interaction than models prioritizing one aspect over the other.

The pursuit of efficient web agents, as demonstrated by WebClipper, inherently acknowledges the transient nature of any complex system. The framework’s emphasis on trajectory pruning, guided by the F-AE score, isn’t merely about optimization; it’s about gracefully accommodating inevitable decay. As Robert Tarjan aptly stated, “The most effective programs are those that evolve gracefully over time.” WebClipper embodies this principle, recognizing that the web itself is in constant flux, and an agent’s ability to adapt – to shed inefficient paths and embrace new ones – is crucial for sustained performance. The system doesn’t strive for static perfection, but rather for a resilient architecture capable of weathering change, aligning with the idea that incidents, in this case, inefficient trajectories, are simply steps toward maturity.

What Lies Ahead?

The pursuit of efficient web agents, as exemplified by WebClipper, invariably encounters the principle that any improvement ages faster than expected. Pruning trajectories based on graph analysis offers a temporary reprieve from combinatorial explosion, but the underlying entropy remains. Future work will likely focus not merely on how to prune, but on predicting which branches of the agent’s exploration will inevitably decay into inefficiency. The F-AE score represents a pragmatic attempt to balance accuracy and efficiency, yet such metrics are, at best, snapshots of a dynamic system: a fleeting assessment of value before the inevitable shift in operational context.

A critical limitation lies in the assumption of static utility. Web environments are not immutable; content shifts, APIs evolve, and user expectations drift. Consequently, ‘pruned’ trajectories are not simply discarded; they represent potential pathways that, given a different temporal coordinate, might have proven optimal. Rollback, therefore, is not merely a return to a previous state, but a journey back along the arrow of time, re-evaluating discarded options in the light of present conditions.

The true challenge, then, isn’t maximizing efficiency at a single point in time, but building agents capable of gracefully accepting, and adapting to, their own obsolescence. This demands a shift from optimization towards resilience: a willingness to relinquish current gains in anticipation of future decay. The field may well move beyond trajectory pruning, toward systems that actively cultivate a diverse portfolio of potentially viable but currently inefficient strategies.

Original article: https://arxiv.org/pdf/2602.12852.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-17 06:21