Author: Denis Avetisyan

A new approach to predictive maintenance leverages the power of foundation models alongside traditional time-series analysis to pinpoint equipment anomalies with greater accuracy.

Hybrid feature learning, combining foundation model embeddings with domain-specific statistical features, improves HVAC anomaly detection and predictive maintenance performance.

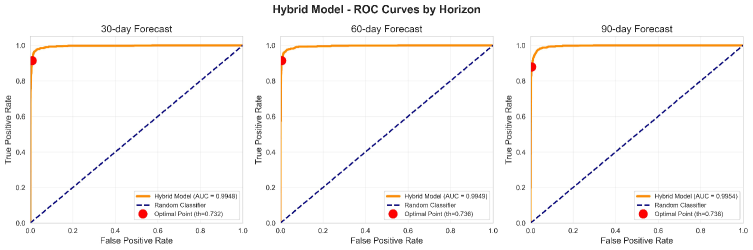

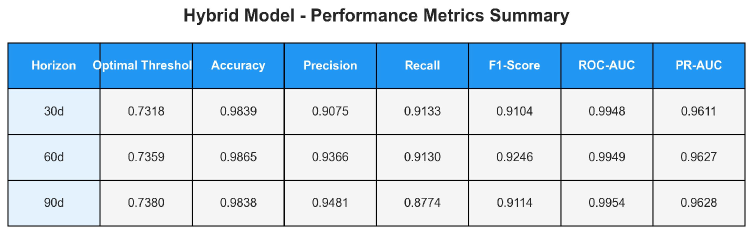

Despite advances in deep learning for predictive maintenance, achieving consistently high accuracy in real-world anomaly detection remains challenging. ‘Hybrid Feature Learning with Time Series Embeddings for Equipment Anomaly Prediction’ addresses this by integrating learned time series embeddings from a Granite TinyTimeMixer with carefully engineered statistical features. Applied to HVAC equipment data, this hybrid strategy achieves up to 95% precision and an ROC-AUC of 0.995, along with production-ready performance and a low false positive rate. Could this combination of representation learning and domain expertise unlock more robust and practical anomaly detection systems across diverse industrial applications?

The Inevitable Signal in the Noise

HVAC systems generate vast streams of time-series data – temperatures, pressures, energy consumption, and more – that present a significant challenge to traditional anomaly detection techniques. These methods, often reliant on static thresholds or rule-based systems, struggle to process the sheer volume and intricate relationships within this data, frequently resulting in either false positives or, more critically, missed anomalies. This delay in identifying deviations from normal operation can lead to suboptimal performance, escalating energy waste, and ultimately, costly equipment failures. The inability of conventional approaches to effectively sift through this complex data landscape underscores the need for more advanced analytical tools capable of discerning subtle but significant anomalies in real-time, thereby proactively mitigating potential issues and optimizing system efficiency.

HVAC systems are dynamic environments, subject to constant fluctuations stemming from occupancy changes, weather patterns, and equipment aging – all of which introduce substantial variability into operational data. Consequently, establishing static thresholds for anomaly detection proves unreliable; a value considered normal on one day might signal a problem on another. This inherent complexity necessitates the implementation of more sophisticated analytical techniques, such as machine learning algorithms, capable of learning the system’s baseline behavior and adapting to these evolving conditions. These approaches don’t rely on fixed boundaries but instead identify deviations from a learned model of ‘normal’ operation, enabling the detection of subtle anomalies indicative of developing faults or inefficiencies that would otherwise go unnoticed. This shift from rule-based to model-based detection is crucial for proactive maintenance and sustained energy optimization.
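To make the contrast between a static threshold and a learned baseline concrete, here is a minimal Python sketch that compares each reading with the mean and spread of the readings just before it (a rolling z-score). It is a deliberately simple stand-in for "learning normal behavior", not the method used in the paper; the window length and the 3-standard-deviation threshold are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_flags(values, window=24, z_thresh=3.0):
    """Flag points that deviate strongly from a rolling baseline.

    Each point is compared with the mean and standard deviation of the
    previous `window` points, so "normal" follows the data as it drifts.
    The first `window` points are never flagged (not enough history).
    """
    history = deque(maxlen=window)
    flags = []
    for x in values:
        if len(history) == window:
            hist = list(history)
            mu, sigma = mean(hist), pstdev(hist)
            if sigma > 0:
                z = abs(x - mu) / sigma
            else:
                # Perfectly flat history: any change at all is a deviation.
                z = float("inf") if x != mu else 0.0
            flags.append(z > z_thresh)
        else:
            flags.append(False)
        history.append(x)
    return flags


# Hypothetical supply-air temperature: slow upward drift, small wobble, one spike.
values = [18.0 + 0.05 * i + (0.2 if i % 2 else -0.2) for i in range(100)]
values[80] += 5.0
flags = rolling_zscore_flags(values)
```

On this synthetic series the rolling baseline flags only the injected spike at index 80, whereas a fixed limit of 20 °C would flag every reading once the drift carries the signal past it.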

The proactive identification of anomalies within Heating, Ventilation, and Air Conditioning (HVAC) systems represents a significant advancement in operational efficiency and longevity. Timely detection allows for interventions that preempt catastrophic equipment failures, preventing costly downtime and extending the lifespan of critical components. Beyond simply avoiding breakdowns, early anomaly detection facilitates performance optimization; subtle deviations from normal operation, indicative of inefficiencies, can be addressed before they escalate into substantial energy waste. This preventative approach fundamentally shifts maintenance strategies from reactive repairs to proactive care, ultimately reducing overall maintenance expenditure and maximizing the return on investment in building infrastructure. A system capable of flagging these initial indicators therefore moves beyond damage control, becoming a core element of sustainable and cost-effective building management.

Deconstructing Time: Embedding Signals Within the System

The Granite TinyTimeMixer, used within this framework, generates time series embeddings by passing the input through a stack of lightweight mixing layers. Rather than relying on self-attention, recurrence, or convolutions, these MLP-Mixer-style layers alternately mix information across time patches and across features, with simple gating that emphasizes informative components. The architecture is designed for computational efficiency, which makes it practical for processing long time series. The resulting embeddings are high-dimensional vectors that encode the historical behavior of the series, preserving information about both the magnitude and timing of events, and they provide a robust input for downstream anomaly detection.

The time series embeddings generated by the Granite TinyTimeMixer are combined with 28 engineered statistical features. These include measures of central tendency, dispersion, and shape (mean, standard deviation, skewness, kurtosis, minimum, maximum, and quantiles), along with autocorrelation and cross-correlation at various lags. The fusion captures both the temporal relationships learned by the embeddings and quantifiable characteristics of the signal’s distribution and patterns. Because the statistical features are computed over a defined window, they give the embeddings context and help the model recognize anomalous behavior as deviation from established norms.
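A minimal pure-Python sketch of a few of these window statistics follows. It is illustrative only: the article does not list all 28 features or their window lengths, and cross-correlation features (which need a second signal) are left out.

```python
from statistics import mean, pstdev, quantiles


def autocorr(x, lag):
    """Sample autocorrelation of x at the given lag (biased estimator)."""
    n = len(x)
    mu = mean(x)
    var = sum((v - mu) ** 2 for v in x)
    if var == 0 or lag >= n:
        return 0.0
    cov = sum((x[t] - mu) * (x[t + lag] - mu) for t in range(n - lag))
    return cov / var


def window_features(x, lags=(1, 2, 4)):
    """Compute an illustrative subset of per-window statistics (needs len(x) >= 2)."""
    mu = mean(x)
    sd = pstdev(x)
    # Standardized third and fourth moments; kurtosis reported as excess kurtosis.
    skew = sum(((v - mu) / sd) ** 3 for v in x) / len(x) if sd > 0 else 0.0
    kurt = sum(((v - mu) / sd) ** 4 for v in x) / len(x) - 3.0 if sd > 0 else 0.0
    q25, median, q75 = quantiles(x, n=4)
    feats = {
        "mean": mu, "std": sd, "skew": skew, "kurtosis": kurt,
        "min": min(x), "max": max(x),
        "q25": q25, "median": median, "q75": q75,
    }
    for k in lags:
        feats[f"acf_lag{k}"] = autocorr(x, k)
    return feats
```

Each window yields a flat dictionary of numbers, which is the kind of vector that can sit alongside an embedding in a tabular model.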

Combining time series embeddings with statistical features enhances anomaly detection by enabling the model to analyze data at multiple timescales. The TinyTimeMixer embeddings effectively capture short-term fluctuations and sequential patterns within the time series data. Simultaneously, the 28 statistical features – including measures of central tendency, dispersion, and autocorrelation – provide a summary of long-term trends and overall system behavior. This dual representation allows the model to identify anomalies that manifest as deviations from both immediate, sequential expectations and established, historical norms, resulting in improved sensitivity to subtle anomalies that might be missed by models relying on a single data representation.
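Fusing the two representations amounts to concatenating vectors. In the sketch below, `embedding` is a placeholder for whatever the TinyTimeMixer produces for a window, and the short and long windows (24 and 168 samples, for example a day and a week of hourly data) are hypothetical choices meant to illustrate the multi-timescale idea.

```python
from statistics import mean, pstdev


def summary(x):
    """A tiny stand-in for the statistical feature set."""
    return [mean(x), pstdev(x), min(x), max(x)]


def hybrid_vector(embedding, series, short=24, long=168):
    """Concatenate a learned embedding with short- and long-window statistics."""
    if len(series) < long:
        raise ValueError("series is shorter than the long window")
    short_stats = summary(series[-short:])   # recent behavior
    long_stats = summary(series[-long:])     # longer-term norm
    return list(embedding) + short_stats + long_stats


# Hypothetical 3-dimensional embedding and a toy series.
vec = hybrid_vector([0.1, 0.2, 0.3], list(range(200)))
```

A tree ensemble can then split on embedding dimensions and on either timescale of statistics, which is what lets deviations from both recent and longer-term behavior surface.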

LightGBM: Sculpting Order from the Chaos of Data

LightGBM was chosen as the primary modeling technique because of its documented efficiency on datasets with many features, a common situation in anomaly detection. It builds gradient-boosted decision trees using histogram-based split finding optimized for speed and memory, and it supports Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB) to reduce computational cost on high-dimensional inputs. This makes it practical to train a model that produces accurate anomaly scores, quantifying how far individual data points deviate from normal behavior, even when the hybrid feature vector is wide.
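GOSS is easy to state in code: keep the instances with the largest gradients, subsample the rest, and up-weight the subsample to compensate. The sketch below follows that recipe as described in the original LightGBM paper, with `a` and `b` as the kept and sampled fractions (LightGBM exposes these as `top_rate` and `other_rate`, defaulting to 0.2 and 0.1). In practice the library does this internally; the article does not say whether the authors enabled GOSS.

```python
import random


def goss_sample(gradients, a=0.2, b=0.1, seed=0):
    """Gradient-based One-Side Sampling.

    Keeps the top `a` fraction of instances by |gradient|, randomly samples
    `b` * n instances from the remainder, and weights the sampled
    small-gradient instances by (1 - a) / b so that split-gain estimates
    stay approximately unbiased. Returns (indices, weights).
    """
    n = len(gradients)
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    top_k = int(a * n)
    rand_k = int(b * n)
    top = order[:top_k]
    rest = order[top_k:]
    rng = random.Random(seed)
    sampled = rng.sample(rest, min(rand_k, len(rest)))
    amplify = (1 - a) / b
    indices = top + sampled
    weights = [1.0] * len(top) + [amplify] * len(sampled)
    return indices, weights


grads = [(-1) ** i * i for i in range(100)]
idx, w = goss_sample(grads)
```

With 100 instances and the defaults, 30 instances are used per iteration: the 20 with the largest absolute gradients at weight 1, and 10 random others at weight 8.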

Anomaly detection tasks frequently suffer from class imbalance: normal instances vastly outnumber anomalous ones, which can bias models toward the majority class. To mitigate this, a Focal Loss function was used when training the LightGBM model. Focal Loss reweights the cross-entropy loss, down-weighting well-classified examples and concentrating training on hard, misclassified ones, which come disproportionately from the rare anomaly class. It does so through a modulating factor $(1 - p_t)^{\gamma}$, where $p_t$ is the model’s estimated probability for the correct class and $\gamma \ge 0$ is a focusing parameter. By concentrating learning on the anomalous instances, Focal Loss improves the model’s ability to identify and score these critical events.
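For a single binary example the full loss is $\mathrm{FL}(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log p_t$, where $\alpha_t$ is an optional class-balancing weight from the original Focal Loss formulation (the article only mentions the modulating factor). A minimal Python sketch of the per-example value; wiring it into LightGBM as a custom objective would additionally require its gradient and Hessian, which are not shown here.

```python
import math


def focal_loss(y, p, gamma=2.0, alpha=None, eps=1e-12):
    """Binary focal loss for one example.

    y: true label (0 or 1); p: predicted probability of class 1.
    With gamma=0 and alpha=None this reduces to binary cross-entropy.
    """
    p = min(max(p, eps), 1 - eps)        # keep log() finite
    p_t = p if y == 1 else 1 - p         # probability given to the true class
    if alpha is None:
        alpha_t = 1.0
    else:
        alpha_t = alpha if y == 1 else 1 - alpha
    return -alpha_t * (1 - p_t) ** gamma * math.log(p_t)


easy = focal_loss(1, 0.95) / focal_loss(1, 0.95, gamma=0.0)  # confident, correct
hard = focal_loss(1, 0.30) / focal_loss(1, 0.30, gamma=0.0)  # uncertain, wrong
```

With $\gamma = 2$, the confident example keeps only $0.05^2 = 0.0025$ of its cross-entropy loss, while the hard example keeps $0.7^2 = 0.49$, so training effort shifts toward the hard cases.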

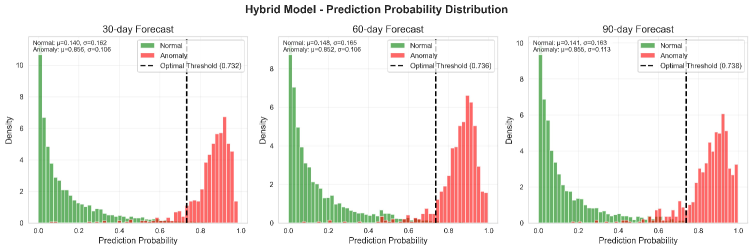

Model performance was evaluated on multi-horizon anomaly prediction, testing the system’s ability to forecast anomalous events 30, 60, and 90 days ahead. Across all prediction horizons, the model achieved precision of 91% to 95% and recall of 88% to 94%. High precision means few false alarms among the events it flags, and high recall means few anomalies are missed.
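One plausible way to set up such an evaluation is to label each day positive when an anomaly occurs within the next H days, then score predictions separately for H = 30, 60, and 90. The sketch below assumes that labeling scheme (the article does not spell out the exact definition) and shows how precision and recall follow from the confusion counts.

```python
def horizon_labels(event_days, n_days, horizon):
    """Label day t positive if an anomaly event occurs in (t, t + horizon]."""
    return [any(t < e <= t + horizon for e in event_days) for t in range(n_days)]


def precision_recall(y_true, y_pred):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical: one failure on day 100, evaluated at three horizons.
labels_by_horizon = {h: horizon_labels([100], 120, h) for h in (30, 60, 90)}
```

Longer horizons mark more days as positive, so the same model can score differently at each horizon, which is why results are reported per horizon.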

Unveiling the System’s Language: Feature Importance and Predictive Power

Feature importance analysis within the anomaly detection framework identified the system characteristics most indicative of unusual behavior. Beyond flagging outliers, it showed which variables the model relied on most when flagging them, pointing to the signals that drive its predictions. For example, fluctuations in network latency and CPU utilization consistently ranked as top predictors of anomalies, suggesting these areas warrant focused monitoring and potential optimization. Knowing these drivers supports a shift from reactive alerting toward proactive management, helping operators anticipate and mitigate issues before they escalate instead of only treating symptoms. The resulting insights serve both to detect anomalies and to build a deeper understanding of the interplay within the monitored system.
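The article does not say which importance measure was used. One model-agnostic option is permutation importance: shuffle one feature column at a time and measure how much a chosen metric drops. LightGBM’s built-in split or gain importance (`Booster.feature_importance(importance_type="gain")`) is an alternative. A minimal pure-Python sketch, with a hypothetical toy `predict` function standing in for the trained model:

```python
import random


def accuracy(y_true, y_pred):
    return sum(a == b for a, b in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Mean drop in `metric` when each feature column is shuffled (higher = more important)."""
    rng = random.Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            col = [row[j] for row in X]
            rng.shuffle(col)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, col)]
            drops.append(baseline - metric(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy data: feature 0 determines the label, feature 1 is irrelevant.
X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [row[0] > 0.5 for row in X]
importances = permutation_importance(lambda r: r[0] > 0.5, X, y, accuracy)
```

Shuffling the informative feature hurts accuracy, while shuffling the ignored one leaves it unchanged, so only the first feature receives a positive score. Note that importance reflects what the model uses, not necessarily what causes a fault.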

The anomaly detection model balances accuracy with efficiency. It identifies genuine anomalies with high precision, flagging critical events without overwhelming operators with false alarms. Crucially, it keeps the false positive rate below 1.1%, which sharply reduces unnecessary alerts and lets personnel focus on genuine faults. Keeping this noise low is essential in practice: it prevents alert fatigue and ensures timely responses to actual equipment problems, ultimately improving operational reliability and security.
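A false positive rate target like this is typically met by choosing the alert threshold on held-out normal data. The sketch below is an assumption about how one might do that, not the paper’s procedure: it picks the lowest threshold whose false positive rate on normal validation scores stays at or below a target, flagging a sample when its score is strictly above the threshold.

```python
import math


def threshold_for_fpr(normal_scores, max_fpr=0.011):
    """Lowest threshold keeping the false positive rate on normal samples <= max_fpr.

    A sample is flagged when score > threshold.
    """
    scores = sorted(normal_scores, reverse=True)
    n = len(scores)
    allowed = math.floor(max_fpr * n)   # how many normal samples may be flagged
    if allowed >= n:
        return float("-inf")
    # Thresholding at the (allowed + 1)-th largest score flags at most `allowed`
    # samples; ties can only reduce the count further.
    return scores[allowed]


# Hypothetical anomaly scores for 1,000 normal validation windows.
normal = [i / 1000 for i in range(1000)]
t = threshold_for_fpr(normal)
flagged = sum(s > t for s in normal)
```

For scale, a 1.1% false positive rate on 10,000 normal windows still means about 110 false alerts, which is why this figure matters for alert fatigue.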

The hybrid framework clearly outperforms its baseline. It reaches an area under the receiver operating characteristic curve (ROC-AUC) of 0.995, indicating near-perfect separation between anomalous and normal states. By comparison, a baseline model using pure Granite TS achieved a precision of only 9 to 11%, against 91 to 95% for the hybrid model. This jump in precision translates to more reliable anomaly identification and far fewer false alarms, so critical events are flagged accurately and operations run more efficiently.
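ROC-AUC has a direct probabilistic reading: it is the chance that a randomly chosen anomalous sample receives a higher score than a randomly chosen normal one, with ties counted as half. A minimal Python sketch of that definition (quadratic in the number of samples, so suited only to small illustrative sets):

```python
def roc_auc(y_true, scores):
    """ROC-AUC as P(score of random positive > score of random negative); ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y]
    neg = [s for y, s in zip(y_true, scores) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

Under this reading, an AUC of 0.995 means an anomalous sample outscores a normal one 99.5% of the time. Precision, by contrast, depends on the chosen threshold and on how rare anomalies are, so the two metrics answer different questions and are not directly interchangeable.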

The Adaptive System: Towards Continuous Learning and Prediction

The Granite TinyTimeMixer was fine-tuned with Low-Rank Adaptation (LoRA). LoRA freezes the pre-trained weights and learns a low-rank update for selected weight matrices, expressed as the product of two small trainable matrices, which drastically reduces the number of parameters that must be adjusted for a new task. This avoids the computational expense and memory demands of full fine-tuning, allowing fast adaptation to new datasets with far lower resource requirements. Because only these small, task-specific matrices are trained, LoRA speeds up learning while preserving the knowledge embedded in the pre-trained model, yielding an efficient and adaptable system for time-series analysis.
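The core of LoRA fits in a few lines: keep the frozen weight $W$ and add a scaled low-rank correction, so the layer computes $Wx + \frac{\alpha}{r} BAx$ with $A \in \mathbb{R}^{r \times d_{in}}$ and $B \in \mathbb{R}^{d_{out} \times r}$. The pure-Python sketch below follows the original LoRA formulation ($B$ initialized to zero, scaling $\alpha / r$). Which TinyTimeMixer layers were adapted, and at what rank, is not stated in the article, so the dimensions here are hypothetical.

```python
import random


def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, W, r, alpha=1.0, seed=0):
        rng = random.Random(seed)
        self.W = W                                   # frozen pre-trained weights
        d_out, d_in = len(W), len(W[0])
        # A: r x d_in, small random init; B: d_out x r, zeros, so the adapted
        # layer starts out identical to the frozen one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))    # low-rank path
        return [b + self.scale * d for b, d in zip(base, delta)]


def lora_param_counts(d_out, d_in, r):
    """(trainable params for full fine-tuning, trainable params for LoRA)."""
    return d_out * d_in, r * (d_in + d_out)


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1)
```

For a hypothetical 512 × 512 weight matrix with rank 8, LoRA trains 8,192 parameters instead of 262,144, about 3.1% of the full matrix.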

The framework’s efficiency also makes deployment practical on modest hardware. An inference latency of 4.5 milliseconds per sample with CPU-only processing removes the need for GPUs or specialized infrastructure. Combined with parameter-efficient fine-tuning, this low latency makes it feasible to scale the system to large Heating, Ventilation, and Air Conditioning (HVAC) installations. Fast, reliable processing on standard hardware lowers the barrier to wider adoption, with the potential for meaningful energy savings and better climate control in buildings and campuses.
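Per-sample latency figures like this depend heavily on how they are measured. A minimal sketch of one reasonable harness, assuming single-sample calls to a hypothetical `predict` function, a short warm-up, and the median over several timed passes:

```python
import time
from statistics import median


def per_sample_latency_ms(predict, samples, warmup=10, repeats=5):
    """Median wall-clock latency per sample, in milliseconds, for one-at-a-time calls."""
    for s in samples[:warmup]:
        predict(s)                      # warm caches and any lazy initialization
    runs = []
    for _ in range(repeats):
        start = time.perf_counter()
        for s in samples:
            predict(s)
        elapsed = time.perf_counter() - start
        runs.append(1000.0 * elapsed / len(samples))
    return median(runs)


# Hypothetical stand-in model: sums the input features.
latency = per_sample_latency_ms(lambda s: sum(s), [[1.0] * 10 for _ in range(100)])
```

At 4.5 ms per sample, one core processing samples sequentially handles roughly 222 samples per second (1000 / 4.5); batched inference would need to be measured separately.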

Ongoing development focuses on integrating live data feeds so that the framework can respond dynamically to changing conditions within HVAC systems. This requires adaptive anomaly detection algorithms that calibrate themselves instead of relying on static thresholds. Such algorithms would not only identify deviations from normal operation, such as unexpected energy consumption or temperature fluctuations, but also adapt to seasonal changes, occupancy patterns, and equipment degradation. The ultimate goal is a predictive maintenance system that anticipates failures and optimizes performance in real time, maintaining comfort and minimizing energy waste without manual intervention or frequent recalibration.
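A common building block for self-calibrating detectors is an exponentially weighted baseline, whose mean and variance are updated with every new reading so that slow shifts are absorbed while sudden jumps stand out. The following is a minimal sketch of that idea, not the authors’ planned design; the smoothing factor, warm-up length, and 4-sigma threshold are illustrative assumptions.

```python
import math


class EWMADetector:
    """Streaming detector with an exponentially weighted mean and variance."""

    def __init__(self, alpha=0.05, z_thresh=4.0, warmup=20):
        self.alpha = alpha          # weight of the newest reading
        self.z_thresh = z_thresh
        self.warmup = warmup        # readings to observe before flagging
        self.n = 0
        self.mean = 0.0
        self.var = 0.0

    def update(self, x):
        """Score x against the current baseline, then fold it in. Returns True if anomalous."""
        self.n += 1
        if self.n == 1:
            self.mean = x
            return False
        diff = x - self.mean
        std = math.sqrt(self.var)
        is_anomaly = self.n > self.warmup and std > 0 and abs(diff) / std > self.z_thresh
        # Incremental exponentially weighted updates. Anomalies are folded in
        # too, so a persistent level shift eventually becomes the new normal.
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly


det = EWMADetector()
xs = [20.0 + (1.0 if i % 2 else -1.0) for i in range(200)]
xs[100] = 40.0                      # hypothetical sudden jump
flags = [det.update(x) for x in xs]
```

Whether flagged readings should update the baseline is a design choice: folding them in lets the detector follow genuine regime changes, while excluding them keeps the baseline clean at the risk of flagging a lasting shift indefinitely.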

The pursuit of predictive maintenance, as demonstrated by this work on HVAC anomaly detection, inevitably reveals the limitations of any singular architectural vision. The authors rightly combine the generality of foundation models – the Granite TinyTimeMixer – with the specificity of domain knowledge encoded in statistical features. It echoes a sentiment expressed by Andrey Kolmogorov: ‘The most important thing in science is not to be right, but to be rigorous.’ Rigor, in this context, isn’t merely statistical; it’s an acknowledgement that no system, however elegantly constructed, can fully anticipate the complexities of real-world data. The hybrid approach isn’t a solution, but a compromise, frozen in time, acknowledging that dependencies – between model and data, theory and practice – will always remain, shifting as conditions change. Technologies change, dependencies remain.

The Turning of the Gears

The pursuit of predictive maintenance, as demonstrated by this work, rarely delivers a finished product. Instead, it yields a more sensitive instrument – one attuned to the inevitable dissonances within complex systems. The coupling of foundation models with handcrafted features isn’t a resolution, but a postponement. Each refined feature, each carefully tuned embedding, merely delays the moment the system reveals its inherent unknowability. The granite of TinyTimeMixer, though seemingly solid, still weathers.

Future work will undoubtedly explore more elaborate architectures, ever-larger datasets, and more subtle forms of feature engineering. But the core challenge remains: how to model a process that is fundamentally about change. The anomaly isn’t a deviation from a static norm, but a step in the system’s ongoing evolution. A truly robust solution won’t seek to prevent failure, but to anticipate its form and guide the system toward a more graceful degradation.

The temptation to view these models as oracles should be resisted. They are not mirrors reflecting truth, but lenses refracting a distorted image of a world constantly remaking itself. Every refactor begins as a prayer and ends in repentance. The system isn’t failing when it surprises; it’s just growing up.

Original article: https://arxiv.org/pdf/2602.15089.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-18 22:43