Author: Denis Avetisyan

New research shows that machine learning can accurately assess the complexity of combinatorial auctions, enabling a hybrid approach that outperforms traditional algorithms.

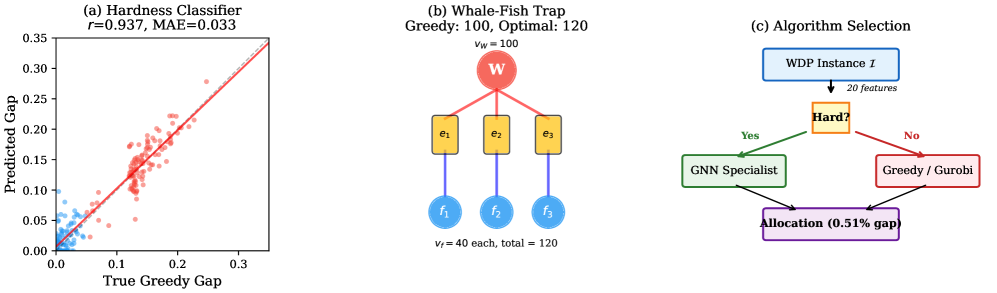

This paper introduces a Graph Neural Network capable of predicting instance hardness in combinatorial auctions, facilitating dynamic algorithm selection between a GNN and a greedy heuristic.

Despite advances in machine learning for combinatorial optimization, reliably predicting instance difficulty remains a key challenge. This paper, ‘Learning Structural Hardness for Combinatorial Auctions: Instance-Dependent Algorithm Selection via Graph Neural Networks’, introduces a method for predicting the hardness of combinatorial auction instances using structural features and machine learning. By accurately identifying difficult instances, we demonstrate a hybrid approach-routing challenging problems to a Graph Neural Network and easier ones to a greedy heuristic-achieves near-optimal solutions with a 0.51\% overall optimality gap. Could this instance-dependent algorithm selection framework offer a more tractable path towards solving complex combinatorial problems than attempting to universally replace classical methods?

The Inherent Complexity of Combinatorial Resource Allocation

Combinatorial auctions offer a robust mechanism for allocating multiple, interdependent goods, promising efficiency gains over traditional auction formats. However, realizing these benefits is hampered by the Winner Determination Problem (WDP), a computationally challenging task inherent in their design. The WDP requires identifying the allocation of goods to bidders that maximizes revenue or social welfare, but the number of possible allocations grows exponentially with the number of items and bidders. This explosive growth renders exact solution methods impractical for even moderately sized auctions, creating a significant bottleneck for real-world implementation. Effectively addressing the WDP is therefore crucial to unlock the full potential of combinatorial auction formats and ensure their scalability for complex resource allocation scenarios.

The practical application of combinatorial auctions is significantly hampered by the limitations of traditional computational methods when faced with real-world scale. As the number of items and bidders increases, the Winner Determination Problem (WDP) quickly becomes intractable, demanding exponentially more processing power and time. This inability to efficiently determine the optimal allocation not only impacts the speed at which auctions can be conducted, reducing efficiency for both auctioneer and participants, but also poses a risk to overall welfare. Suboptimal solutions, arrived at due to computational constraints, can lead to inefficient allocation of goods, resulting in lost economic value for all involved – bidders may not receive the bundles they value most, and the auctioneer may fail to maximize revenue or achieve desired outcomes. Consequently, research into scalable algorithms and approximation techniques remains crucial for unlocking the full potential of combinatorial auction formats.

The core difficulty in solving the Winner Determination Problem (WDP) within combinatorial auctions stems from the combinatorial explosion of possible bid allocations. Unlike simple auctions where a single item is awarded, these auctions allow bidders to submit bids on bundles of items, creating a vast search space for the optimal assignment. The number of potential winning bid combinations grows factorially with the number of items and bidders; even a moderately sized auction with, for example, 50 items and 20 bidders, presents a search space containing 2^{50} possible allocations – a number far exceeding the capacity of brute-force computational methods. This necessitates the development of sophisticated algorithms and heuristics capable of efficiently navigating this complex landscape to identify the allocation that maximizes social welfare or revenue, without exhaustively evaluating every single possibility.

Identifying the Structural Roots of Heuristic Failure

While greedy heuristics often provide reasonably good solutions for Warehouse Disposition Problems (WDP), performance varies considerably across different instances. Analysis reveals substantial optimality gaps – the difference between the greedy solution and the optimal solution – for a subset of WDP instances. These gaps are not random; certain instances consistently yield solutions significantly worse than optimal, indicating inherent structural properties that impede greedy algorithm effectiveness. Observed optimality gaps range from minimal deviations to exceeding 20% in some cases, demonstrating that a one-size-fits-all approach to WDP solving is suboptimal and necessitates consideration of instance-specific characteristics.

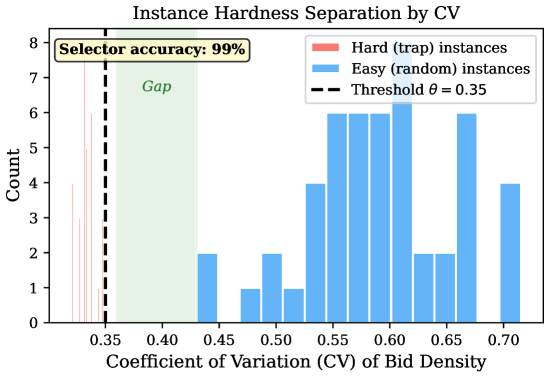

Each WDP instance is represented by a 20-dimensional feature vector designed to quantify its structural characteristics. These features encompass bid density, measured as the number of bids per unit of capacity; capacity utilization, representing the proportion of total capacity covered by bids; and value-congestion correlation, which assesses the relationship between bid values and the level of congestion at corresponding capacity levels. Additional features include statistical measures of bid values and capacities, such as means, standard deviations, skewness, and kurtosis, as well as features related to bid overlap and the distribution of bid ranges. The complete vector provides a quantifiable profile of the instance’s characteristics, enabling analysis of its inherent difficulty for heuristic algorithms.

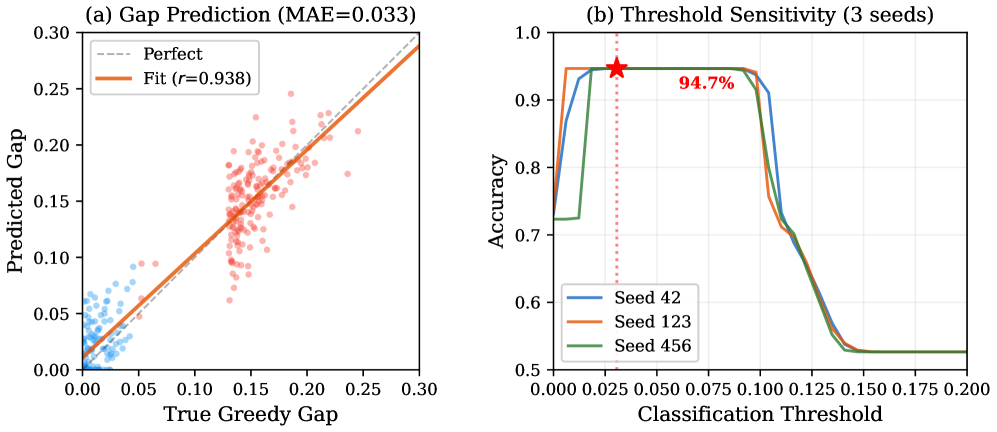

A ‘Hardness Classifier’ was trained using a 20-dimensional feature vector derived from each Weighted Dominating Set (WDS) instance to predict the difficulty of solving that instance with a greedy heuristic. Evaluation of the classifier demonstrates a strong correlation between predicted and actual greedy gaps, as measured by a Pearson correlation coefficient of 0.937. Furthermore, the classifier achieves a binary accuracy of 94.7% in correctly classifying instances as either ‘hard’ or ‘easy’ for the greedy heuristic, indicating a high degree of predictive power regarding instance solvability.

Recognizing variations in instance difficulty enables a shift from uniformly applying heuristics to a more strategic, problem-specific approach. By accurately predicting the likely performance of a greedy algorithm on a given WDP instance, computational resources can be allocated more efficiently; instances predicted to be easily solved can be dispatched to a fast, lightweight greedy solver, while those anticipated to exhibit large optimality gaps can be directed towards more computationally expensive, but potentially higher-performing, methods like local search or integer programming. This targeted methodology optimizes overall solution quality and reduces the average computation time required to achieve a given level of performance across a diverse problem set.

Adaptive Algorithm Selection for Enhanced Efficiency

The Hybrid Allocator is a dynamic algorithm selection mechanism designed to optimize performance across a range of Wireless Deployment Problem (WDP) instances. It functions by initially predicting the computational difficulty of each WDP instance, and then assigning it to the most appropriate solving algorithm. This predictive assessment allows for the strategic application of either a fast, greedy heuristic for simpler instances, or a more computationally intensive Heterogeneous Graph Attention Network (HeteroGAT) for complex instances requiring higher solution quality. The selection is performed on a per-instance basis, enabling the system to adapt to varying problem characteristics and prioritize overall efficiency.

The Hybrid Allocator utilizes a greedy heuristic for instances predicted to be of low difficulty, prioritizing computational speed over absolute optimality. This heuristic operates by making locally optimal choices at each step without considering the global implications, resulting in a significantly faster solution time compared to more complex algorithms. While this approach may yield suboptimal results for individual instances, the pre-selection process targets problems where the impact on the overall solution quality is minimized, effectively trading accuracy for efficiency in scenarios where a near-optimal solution is sufficient.

For instances of the Wireless Deployment Problem (WDP) predicted to be computationally challenging, the Hybrid Allocator employs a Graph Neural Network (GNN) – a Heterogeneous Graph Attention Network (HeteroGAT) – to determine optimal solutions. The HeteroGAT architecture is specifically chosen for its ability to process heterogeneous graph data, representing the diverse node and edge types inherent in WDP instances – such as varying antenna characteristics and signal propagation conditions. This network leverages attention mechanisms to weigh the importance of different nodes and edges during message passing, enabling it to capture complex relationships within the deployment environment. While computationally expensive compared to the greedy heuristic, the HeteroGAT’s enhanced representational power allows it to generate high-quality solutions for difficult WDP instances where simpler algorithms fail to converge on near-optimal results.

The Hybrid Allocator achieves an overall optimality gap of 0.51% when solving WDP instances, representing a significant performance improvement over baseline approaches. Specifically, this result demonstrates a 17-fold reduction in the optimality gap compared to both a purely greedy heuristic and a standalone Graph Neural Network (GNN) implementation – the Heterogeneous Graph Attention Network (HeteroGAT). This optimization is achieved by strategically allocating instances; easier problems are resolved quickly with the greedy heuristic, while complex instances benefit from the superior, though computationally intensive, accuracy of the HeteroGAT. The 0.51% gap represents the average deviation from the optimal solution across a test dataset.

Graph Structure Reveals Hidden Relationships in Resource Allocation

The HeteroGAT model represents the Wholesale Delivery Problem (WDP) as a ‘Supply Chain Graph’ to explicitly model relationships between bids and resources. Nodes in this graph represent both bids – requests for delivery – and resources – the vehicles available to fulfill those requests. Edges define the connections between them, indicating which resources can potentially serve which bids, and are directed to reflect the flow of goods from supply to demand. This graph structure allows the model to capture complex dependencies arising from capacity constraints, geographical locations, and delivery requirements, moving beyond simple linear optimization approaches to represent the WDP as a relational problem suitable for graph neural networks.

The HeteroGAT architecture leverages principles observed in graph structures such as Erdős-Rényi Graphs to model the dependencies within the combinatorial problem. Erdős-Rényi Graphs, characterized by random edge creation, provide a foundation for understanding connectivity and relationships between nodes. In the context of the WDP, this translates to representing bids and resources as nodes and their relationships as edges, enabling the GNN to effectively capture the complex interplay between them. This approach allows the model to move beyond simple pairwise comparisons and consider the broader network of dependencies when evaluating potential solutions, which is critical for achieving optimal results in combinatorial optimization problems.

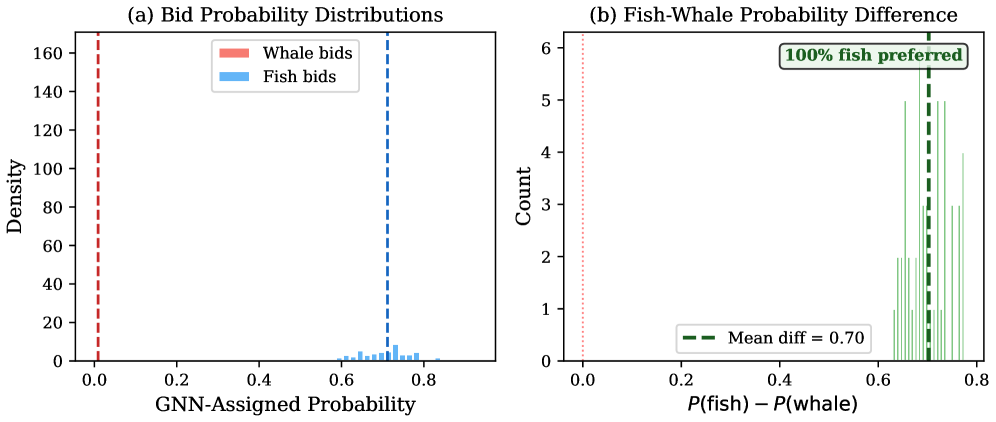

Performance evaluations on adversarial instances demonstrate a significant advantage for the Graph Neural Network (GNN) approach. Specifically, the GNN achieved an average optimality gap of approximately 0% when tested against deliberately challenging problem instances. This contrasts sharply with the performance of greedy algorithms, which exhibited optimality gaps ranging from 3.75% to 59.24% on the same adversarial datasets. These results indicate that the GNN is substantially more effective at finding optimal or near-optimal solutions for complex combinatorial problems, particularly when faced with instances designed to exploit the weaknesses of heuristic approaches.

Experimental results with the WDP demonstrate that utilizing a graph-based approach, specifically the HeteroGAT, yields substantial performance improvements over traditional greedy algorithms. On adversarial test instances, the HeteroGAT achieved an average optimality gap of approximately 0%, a significant reduction compared to the 3.75-59.24% range observed in greedy methods. This indicates that explicitly modeling the relationships between bids and resources as a graph allows the model to explore a larger solution space and identify more optimal solutions than approaches that treat these elements in isolation. The demonstrated gains validate the principle that incorporating graph structure can be highly effective in addressing combinatorial optimization problems.

Towards Adaptive Intelligence in Combinatorial Optimization

The demonstrated efficacy of combining graph neural networks with traditional greedy algorithms underscores a critical principle in combinatorial optimization: problem instance characterization is paramount. Rather than relying on a single, universally superior algorithm, this research reveals that performance is strongly tied to the specific features of each instance. Identifying these features – such as the presence of deceptive high-value bids, as seen in ‘Whale-Fish Trap’ instances – allows for intelligent algorithm selection, effectively routing problems to the solver best suited to exploit their characteristics. This adaptive approach moves beyond the limitations of one-size-fits-all solutions, paving the way for more robust and efficient algorithms capable of tackling the diverse challenges inherent in real-world optimization problems, and suggesting that future progress will depend increasingly on sophisticated instance analysis and dynamic algorithm assignment.

The discovery of ‘Whale-Fish Trap’ instances – scenarios where a conspicuously high, yet ultimately misleading, bid overshadows a genuinely more valuable combination of items – opens compelling avenues for future investigation into strategic deception within combinatorial auctions. These instances reveal a vulnerability in auction mechanisms, suggesting that bidders can exploit cognitive biases or information asymmetry to lure competitors into suboptimal choices. Further research could explore the prevalence of such traps across different auction designs and bidder populations, as well as develop algorithms capable of detecting and mitigating their influence. Understanding the dynamics of intentional deception-and its impact on auction efficiency and revenue-is crucial for designing robust and trustworthy auction systems in various real-world applications, from spectrum allocation to online advertising.

A key achievement of this research lies in the development of an algorithm selector capable of consistently directing instances to the most effective solver. Demonstrating 99% routing accuracy, the selector reliably distinguishes between problem characteristics where a Graph Neural Network (GNN) excels and those better suited to a traditional greedy algorithm. This high degree of precision minimizes computational waste and maximizes solution quality, effectively leveraging the strengths of both approaches. Such adaptive routing represents a significant step toward automated algorithm configuration, promising more efficient and robust solutions for a wide range of complex combinatorial optimization challenges without requiring manual tuning or expert intervention.

The development of this hybrid algorithm selector signifies a move beyond static optimization techniques towards systems capable of dynamically assessing and responding to problem complexity. Rather than relying on a single, universally applied algorithm, this approach allows for the intelligent distribution of combinatorial challenges – those frequently encountered in fields like logistics, resource allocation, and network design – to the solver best suited for the task. This adaptability isn’t merely about achieving incremental improvements in efficiency; it lays the groundwork for algorithms that can learn from problem structure, potentially generalizing to entirely new classes of combinatorial challenges and ultimately exhibiting a form of algorithmic intelligence. The success demonstrated here suggests a future where algorithms aren’t simply programmed to solve problems, but rather equipped to discover the most effective solution strategy, mirroring the flexible problem-solving capabilities observed in natural systems.

The pursuit of algorithmic efficiency, as demonstrated in this work on combinatorial auctions, aligns with a fundamental mathematical principle: elegance through provable correctness. The study’s success hinges on accurately classifying instance hardness-determining whether a given problem demands the sophisticated reasoning of a Graph Neural Network or yields to a simpler, greedy approach. This mirrors G.H. Hardy’s assertion that “A mathematician, like a painter or a poet, is a maker of patterns.” The patterns here aren’t visual or lyrical, but computational – identifying the inherent structure of auction instances to select the most appropriate solution method, thereby crafting an efficient and provably sound algorithm. The emphasis on predicting hardness, rather than brute-force computation, embodies a refined mathematical approach to problem-solving.

What’s Next?

The demonstrated capacity to predict computational hardness in combinatorial auctions, while promising, merely shifts the locus of the problem. The current approach circumvents intractability by intelligently routing instances; it does not, however, resolve it. A truly elegant solution would not rely on empirical observation to dictate algorithmic choice, but on a formal understanding of the problem’s structure – a provable classification of instance characteristics that definitively demarcates tractable from intractable cases. Such a framework remains elusive.

Future work must move beyond feature engineering – the artful, yet ultimately ad-hoc, selection of potentially relevant characteristics. The reliance on Graph Neural Networks, while effective, feels suspiciously akin to pattern recognition without comprehension. The network learns that an instance is hard, not why. A more robust approach would integrate formal complexity theory with machine learning, seeking to derive hardness predictions from provable properties of the auction’s underlying graph structure.

Ultimately, the field requires a more fundamental reassessment. The pursuit of ever-more-sophisticated heuristics, however successful in practice, offers only incremental improvements. True progress demands a rigorous mathematical foundation – a formalization of auction hardness that transcends empirical observation and unlocks the possibility of genuinely efficient, provably correct solution algorithms.

Original article: https://arxiv.org/pdf/2602.14772.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- 2025 Crypto Wallets: Secure, Smart, and Surprisingly Simple!

- Wuchang Fallen Feathers Save File Location on PC

- Gold Rate Forecast

- Brown Dust 2 Mirror Wars (PvP) Tier List – July 2025

- Macaulay Culkin Finally Returns as Kevin in ‘Home Alone’ Revival

- HSR 3.7 breaks Hidden Passages, so here’s a workaround

- Solel Partners’ $29.6 Million Bet on First American: A Deep Dive into Housing’s Unseen Forces

- Where to Change Hair Color in Where Winds Meet

- Crypto Chaos: Is Your Portfolio Doomed? 😱

- Brent Oil Forecast

2026-02-18 06:08