Author: Denis Avetisyan

Researchers have developed a novel framework that enhances graph neural networks’ ability to identify anomalous data at test time through iterative self-improvement.

This work introduces SIGOOD, a test-time training method leveraging energy-based models and prompt engineering for robust out-of-distribution graph detection.

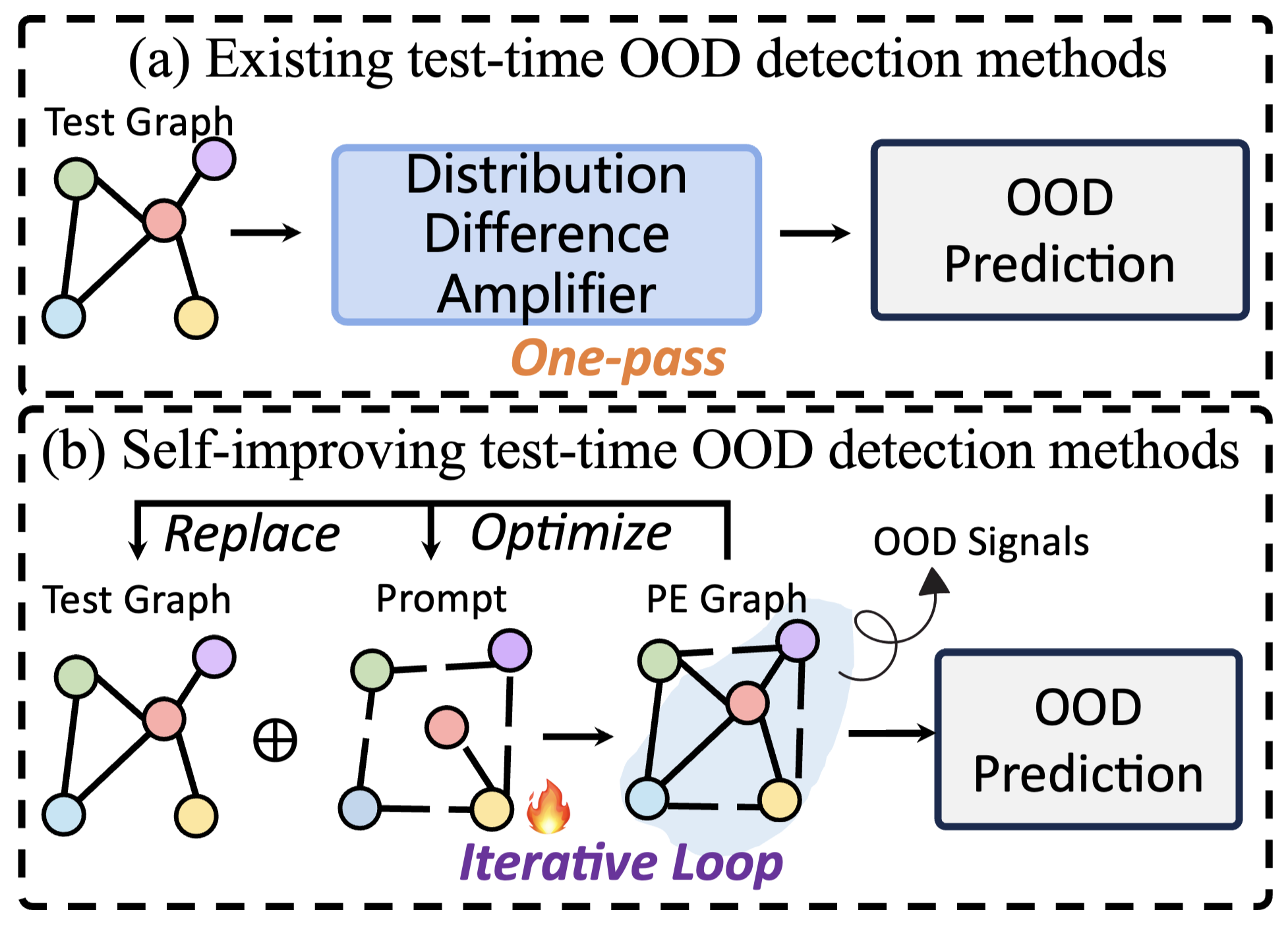

Detecting distributional shift remains a critical challenge for reliable graph neural network deployment in real-world scenarios. The work ‘From Subtle to Significant: Prompt-Driven Self-Improving Optimization in Test-Time Graph OOD Detection’ introduces SIGOOD, a novel framework that amplifies subtle out-of-distribution signals in graphs through iterative, energy-based optimization of prompting strategies during test time. By leveraging a self-improving loop, SIGOOD refines prompt-enhanced graph representations to improve OOD detection accuracy without requiring labeled data. Could this approach unlock more robust and adaptive graph neural networks capable of navigating previously unseen data distributions?

The Illusion of Generalization: Why GNNs Struggle in the Real World

Graph Neural Networks (GNNs) have demonstrated remarkable capabilities in analyzing complex relational data, yet their practical application is often hampered by a significant vulnerability: diminished performance when encountering graphs that deviate from the data used during their training. This fragility poses a critical challenge for real-world deployment, where the diversity of graph structures is virtually limitless and the assumption of a static data distribution rarely holds true. While GNNs excel at generalizing within familiar graph types, even subtle shifts in connectivity patterns, node features, or graph size can lead to substantial accuracy drops. Consequently, systems relying on GNNs – ranging from fraud detection and drug discovery to social network analysis – are susceptible to unpredictable failures when confronted with unforeseen graph characteristics, underscoring the urgent need for robust methods capable of handling distributional shifts and ensuring reliable performance across diverse graph landscapes.

The core difficulty in deploying GNNs in dynamic, real-world scenarios is telling familiar patterns apart from genuinely novel ones. Current GNNs often struggle to reliably differentiate between in-distribution (ID) graphs, which closely resemble the training data, and out-of-distribution (OOD) graphs that deviate from it; the challenge is exacerbated because these distributions aren’t always cleanly separated. A significant overlap between ID and OOD data means a GNN might flag a slightly altered, yet still valid, graph as anomalous, or conversely, fail to recognize a truly dangerous or unexpected structure. This ambiguity undermines the robustness of GNNs and limits their practical use wherever novel graph structures must be identified accurately.

Existing outlier detection techniques often fall short on graph-structured data, jeopardizing the dependability of GNNs in real-world applications. Typically designed for simpler data types, these methods struggle to capture the nuanced relationships and intricate patterns inherent in graphs, producing both false positives and false negatives when identifying out-of-distribution examples. The overlap between in-distribution and out-of-distribution graph characteristics compounds the problem, since subtle variations are easily misinterpreted. As a result, GNN-based systems may process unseen graphs confidently yet produce inaccurate or unreliable results, which highlights the need for more robust, graph-aware outlier detection strategies in dynamic and unpredictable environments.

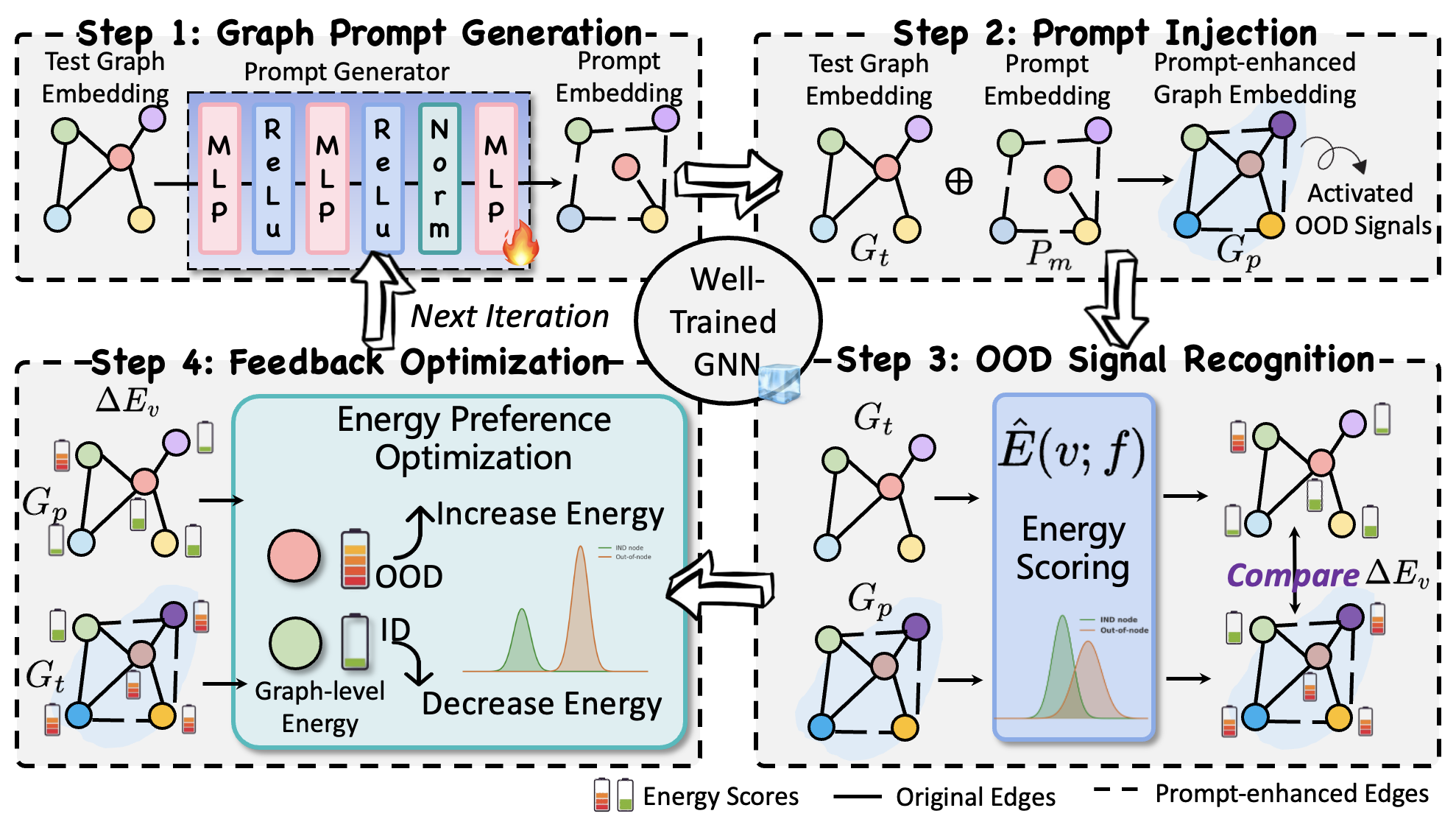

SIGOOD: A Patch for the Inevitable, Not a Cure

SIGOOD is a test-time Out-of-Distribution (OOD) detection framework designed specifically for graph-structured data. Its distinguishing features are an energy-based feedback loop and an iterative refinement process. SIGOOD assigns each graph an energy score that reflects how likely it is to belong to the in-distribution training data, then feeds this score back to refine its detection during the test phase, without requiring labeled OOD data. Through these iterative adjustments, SIGOOD aims to maximize the gap between the energy scores of in-distribution and out-of-distribution graphs, improving OOD detection accuracy on dynamic, previously unseen data.

SIGOOD uses a Prompt Generator to construct a ‘Prompt-Enhanced Graph’, a core component of its detection process. Standard Graph Neural Networks (GNNs) can struggle to identify Out-of-Distribution (OOD) inputs when the anomalous signals are weak; the Prompt Generator addresses this limitation by learning to augment the original graph with task-relevant information. It does so by generating prompts (additional node or edge features) that highlight subtle differences between In-Distribution (ID) and OOD data. The resulting Prompt-Enhanced Graph provides a richer representation that amplifies OOD signals, making them easier to detect in the subsequent energy-based analysis. Unlike methods that rely solely on raw graph features, this approach lets SIGOOD perform better on challenging OOD detection tasks.
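The idea of a prompt-enhanced graph can be sketched in a few lines. This is a minimal illustration, assuming the prompt is a vector added to each node's features and that the generator is a single linear map; SIGOOD's actual Prompt Generator architecture is not described here, and `prompt_enhance` and `generator_weights` are hypothetical names.

```python
from typing import List

Matrix = List[List[float]]


def matvec(weights: Matrix, vec: List[float]) -> List[float]:
    # Plain-Python matrix-vector product; weights has shape (out_dim x in_dim).
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def prompt_enhance(node_features: Matrix, generator_weights: Matrix) -> Matrix:
    """Return a prompt-enhanced copy of the node feature matrix.

    Hypothetical design: a linear 'prompt generator' maps each node's
    features to a prompt vector of the same width, which is added to the
    original features. The edge structure is left unchanged.
    """
    enhanced = []
    for x in node_features:
        prompt = matvec(generator_weights, x)
        enhanced.append([xi + pi for xi, pi in zip(x, prompt)])
    return enhanced


features = [[1.0, 0.0], [0.0, 2.0]]
generator = [[0.5, 0.0], [0.0, 0.5]]
print(prompt_enhance(features, generator))  # [[1.5, 0.0], [0.0, 3.0]]
```

With all-zero generator weights the graph is returned unchanged, so any shift in downstream scores can be attributed to the learned prompt alone.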

SIGOOD applies an energy function to the Prompt-Enhanced Graph, assigning each graph a scalar score that indicates whether it looks in-distribution (ID) or out-of-distribution (OOD). This output guides iterative updates to the prompt itself: the prompt is modified to increase the separation between the average energy scores of ID and OOD graphs. The optimization aims to amplify the energy signal of OOD samples so that they become easier to distinguish from ID samples by energy alone. Refinement continues until convergence, maximizing the margin between the ID and OOD energy distributions and improving detection accuracy.
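As an illustration of what an energy score looks like, the sketch below uses the widely used free-energy score from energy-based OOD detection, E(x) = -T · log Σ_k exp(f_k(x) / T), computed from a classifier's logits. It assumes, without confirmation from this summary, that SIGOOD's energy function takes this standard form; `energy_score` and `mean_energy_gap` are hypothetical helpers. Lower energy corresponds to a confident, ID-looking input; higher energy suggests OOD.

```python
import math
from typing import Sequence


def energy_score(logits: Sequence[float], temperature: float = 1.0) -> float:
    """Free-energy score E = -T * log(sum_k exp(logit_k / T))."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for a numerically stable log-sum-exp
    lse = m + math.log(sum(math.exp(s - m) for s in scaled))
    return -temperature * lse


def mean_energy_gap(ood_logits, id_logits, temperature: float = 1.0) -> float:
    # Average OOD energy minus average ID energy: larger means better separated.
    e_ood = [energy_score(l, temperature) for l in ood_logits]
    e_id = [energy_score(l, temperature) for l in id_logits]
    return sum(e_ood) / len(e_ood) - sum(e_id) / len(e_id)


print(energy_score([10.0, 0.0, 0.0]))  # confident prediction, about -10.0001
print(energy_score([0.0, 0.0, 0.0]))   # flat prediction, -log(3), about -1.0986
```

In this toy setting, the quantity that the prompt updates try to enlarge corresponds to `mean_energy_gap`.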

SIGOOD utilizes a process of iterative refinement, termed ‘Self-Improving Iterations’, to enhance out-of-distribution (OOD) detection performance on previously unseen data. This process dynamically adjusts the prompt used to generate a Prompt-Enhanced Graph, maximizing the distinction between in-distribution (ID) and OOD data based on energy scores calculated by the framework. Empirical results demonstrate SIGOOD’s efficacy, achieving first rank on 11 of 13 benchmark datasets and an average rank of 1.2 across a comparative analysis of 88 OOD detection methods, indicating state-of-the-art performance.
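The self-improving loop can be illustrated with a toy version. This is not the paper's algorithm. It assumes a frozen linear head standing in for the pre-trained GNN, a single prompt vector added to every graph embedding, pseudo-labels taken from the highest-energy graphs (since no OOD labels exist at test time), and finite-difference gradient ascent on the energy-variation gap. All function names (`self_improve`, `separation`, and so on) are hypothetical.

```python
import math
from typing import List, Set, Tuple

Vector = List[float]


def energy(logits: Vector) -> float:
    # Free energy at temperature 1: -log(sum(exp(logits))), max-shifted for stability.
    m = max(logits)
    return -(m + math.log(sum(math.exp(z - m) for z in logits)))


def logits_of(weights: List[Vector], h: Vector) -> Vector:
    # Frozen linear head standing in for a pre-trained GNN classifier.
    return [sum(w * x for w, x in zip(row, h)) for row in weights]


def shifted(h: Vector, prompt: Vector) -> Vector:
    # Prompt-enhanced embedding: the prompt is simply added.
    return [x + p for x, p in zip(h, prompt)]


def variation(weights: List[Vector], h: Vector, prompt: Vector) -> float:
    # Energy of the prompted embedding minus energy of the original one.
    return energy(logits_of(weights, shifted(h, prompt))) - energy(logits_of(weights, h))


def separation(weights, graphs, prompt, ood_ids: Set[int]) -> float:
    # Mean variation on pseudo-OOD graphs minus mean variation on pseudo-ID graphs.
    ood = [variation(weights, g, prompt) for i, g in enumerate(graphs) if i in ood_ids]
    ind = [variation(weights, g, prompt) for i, g in enumerate(graphs) if i not in ood_ids]
    return sum(ood) / len(ood) - sum(ind) / len(ind)


def self_improve(weights, graphs, iterations=20, lr=0.1, ood_frac=0.25,
                 eps=1e-4) -> Tuple[Vector, Set[int]]:
    prompt = [0.0] * len(graphs[0])
    k = max(1, int(len(graphs) * ood_frac))
    ood_ids: Set[int] = set()
    for _ in range(iterations):
        # 1. Pseudo-label from the current prompted energies:
        #    the k highest-energy graphs are treated as pseudo-OOD.
        scores = [energy(logits_of(weights, shifted(g, prompt))) for g in graphs]
        ranked = sorted(range(len(graphs)), key=lambda i: scores[i], reverse=True)
        ood_ids = set(ranked[:k])
        # 2. Central-difference gradient of the separation w.r.t. the prompt.
        grad = []
        for j in range(len(prompt)):
            up, down = prompt.copy(), prompt.copy()
            up[j] += eps
            down[j] -= eps
            diff = (separation(weights, graphs, up, ood_ids)
                    - separation(weights, graphs, down, ood_ids))
            grad.append(diff / (2 * eps))
        # 3. Gradient ascent: move the prompt to widen the separation.
        prompt = [p + lr * g for p, g in zip(prompt, grad)]
    return prompt, ood_ids


W = [[1.0, 0.0], [0.0, 1.0]]
graphs = [[3.0, 0.0], [0.0, 3.0], [2.5, 0.5], [0.2, 0.1]]
prompt, ood = self_improve(W, graphs)
print(ood)  # {3}: the graph with nearly flat logits
```

Re-deriving the pseudo-labels at every iteration is what makes the loop "self-improving" in this sketch: each update is driven by the model's own current energy scores rather than by external labels.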

Quantifying Uncertainty: The Illusion of a Clear Signal

SIGOOD employs ‘Energy Variation’ as a primary metric for detecting out-of-distribution (OOD) inputs. This variation is calculated by comparing the energy score assigned to a graph representation of the input with the energy score of the same graph after the addition of a learned prompt. A significant difference between these two energy scores indicates the potential presence of an OOD sample; a larger variation suggests a greater deviation from the training data distribution. The energy score, in this context, reflects the model’s confidence in its prediction, and the variation quantifies how much the prompt alters that confidence, serving as a quantifiable OOD signal.
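A minimal sketch of energy variation as an OOD signal follows. It assumes the standard free-energy score and treats the magnitude of the variation as the OOD evidence, compared against a fixed threshold; the threshold rule and the names `energy_variation` and `flag_ood` are illustrative assumptions, not SIGOOD's published scoring procedure.

```python
import math
from typing import List, Sequence


def energy(logits: Sequence[float], temperature: float = 1.0) -> float:
    # Free energy -T * logsumexp(logits / T), computed with the max trick.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    return -temperature * (m + math.log(sum(math.exp(s - m) for s in scaled)))


def energy_variation(original_logits: Sequence[float],
                     prompted_logits: Sequence[float],
                     temperature: float = 1.0) -> float:
    # Positive when the prompt raises the energy (i.e., lowers confidence).
    return energy(prompted_logits, temperature) - energy(original_logits, temperature)


def flag_ood(variations: List[float], threshold: float) -> List[bool]:
    # Assumption: a larger-magnitude variation is treated as stronger OOD evidence.
    return [abs(v) > threshold for v in variations]


v_stable = energy_variation([2.0, 0.0], [2.0, 0.0])   # prompt changes nothing
v_shifted = energy_variation([5.0, 0.0], [0.0, 0.0])  # prompt erases confidence
print(flag_ood([v_stable, v_shifted], threshold=1.0))  # [False, True]
```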

The ‘Energy Preference Optimization Loss’ iteratively refines prompts to sharpen the distinction between in-distribution and out-of-distribution (OOD) data based on energy scores. It does so by maximizing the difference in energy between the prompt-enhanced graph and the original graph representation; a larger variation indicates a stronger OOD signal. During test-time optimization, the loss compares each example’s energy variation against a margin, encouraging larger variation for OOD samples while keeping it stable for in-distribution data. This directly guides prompt updates, shaping the prompt to highlight the characteristics that differentiate OOD instances and thereby improving detection accuracy.
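One way to express "a preference relative to a margin" is a softplus (smooth hinge) loss. The sketch below is a hypothetical stand-in, not the paper's exact formulation. It assumes per-graph energy variations and pseudo-OOD flags are already available (for example, from the highest-energy graphs, because true OOD labels are absent at test time). Pseudo-OOD graphs are pushed above the margin, and pseudo-ID graphs are pushed below it.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def energy_preference_loss(variations: Sequence[float],
                           pseudo_ood: Sequence[bool],
                           margin: float = 1.0) -> float:
    """Hypothetical margin-based preference loss over energy variations."""
    total = 0.0
    for dv, is_ood in zip(variations, pseudo_ood):
        if is_ood:
            total += softplus(margin - dv)  # shrinks as the OOD variation grows
        else:
            total += softplus(dv - margin)  # shrinks as the ID variation stays below the margin
    return total / len(variations)


print(energy_preference_loss([5.0, 0.0], [True, False]))  # small: preferences satisfied
print(energy_preference_loss([0.0, 5.0], [True, False]))  # large: preferences violated
```

Softplus is chosen over a hard hinge so that the loss keeps a nonzero gradient even after the margin is met, which suits small iterative prompt updates.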

SIGOOD utilizes node embeddings as input features, representing graph structures numerically to facilitate OOD detection. Unlike methods relying on raw graph data or simpler feature representations, node embeddings capture complex relationships and structural information within the graph. This allows SIGOOD to discern subtle anomalies indicative of OOD samples, which may be missed by techniques sensitive only to overt changes in node degrees or edge counts. The use of embeddings provides a more robust and nuanced feature space, enabling the model to identify OOD instances even when they present only slight deviations from the training data distribution, resulting in improved detection performance across multiple benchmark datasets.
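To show how node embeddings encode structure and not just raw features, here is a minimal message-passing sketch. It assumes a single round of mean aggregation over each node and its neighbours, followed by a mean-pool readout to a graph-level vector. SIGOOD's actual backbone GNN is not specified in this summary, and `node_embeddings` and `graph_readout` are hypothetical helpers with no learned weights.

```python
from typing import Dict, List


def node_embeddings(features: List[List[float]],
                    adjacency: Dict[int, List[int]]) -> List[List[float]]:
    """One round of mean aggregation over each node and its neighbours.

    A stand-in for a GNN layer: no learned weights, no nonlinearity.
    """
    out = []
    for v, x in enumerate(features):
        group = [v] + adjacency.get(v, [])
        out.append([sum(features[u][d] for u in group) / len(group)
                    for d in range(len(x))])
    return out


def graph_readout(embeddings: List[List[float]]) -> List[float]:
    # Mean-pool node embeddings into a single graph-level vector.
    n = len(embeddings)
    return [sum(e[d] for e in embeddings) / n for d in range(len(embeddings[0]))]


# Path graph 0 - 1 - 2 with a one-dimensional feature per node.
features = [[1.0], [0.0], [1.0]]
adjacency = {0: [1], 1: [0, 2], 2: [1]}
emb = node_embeddings(features, adjacency)
print(emb)                 # node 1 now reflects both of its neighbours
print(graph_readout(emb))
```

Because each embedding mixes in neighbour information, two graphs with identical node features but different wiring can yield different embeddings. This is the kind of subtle structural signal that degree or edge counts alone would miss.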

Evaluation of SIGOOD demonstrates a mean Area Under the Curve (AUC) of 87.72% on the Esol/MUV dataset, representing the highest AUC achieved among 88 tested methods. Performance gains were observed when compared to the previous state-of-the-art (SOTA) method, GOODAT, with improvements of 1.52% on the Tox21/ToxCast dataset and 14.20% on the ClinTox/LIPO dataset. Compared to other SOTA test-time methods, SIGOOD achieved gains of 39.02% on the ENZYMES dataset and 29.65% on the COX2 dataset, indicating a substantial improvement in out-of-distribution (OOD) detection performance across multiple benchmark datasets.

The Inevitable Drift: Managing the Unmanageable

A significant challenge in deploying Graph Neural Networks (GNNs) lies in their potential vulnerability to out-of-distribution (OOD) graphs – inputs that differ substantially from the data used during training. SIGOOD addresses this issue through proactive OOD graph identification, effectively reducing the risk of unexpected failures and performance declines in real-world applications. By continuously monitoring incoming graph data and flagging instances that deviate significantly from established patterns, the framework prevents unreliable predictions stemming from unfamiliar data. This capability is especially critical in high-stakes domains where consistent, trustworthy performance is non-negotiable, allowing for intervention or adjusted processing when OOD graphs are detected and safeguarding the integrity of GNN-driven insights.

The demand for consistently reliable graph neural networks extends beyond theoretical performance, becoming especially critical within high-stakes applications like fraud detection, cybersecurity, and drug discovery. In fraud analysis, a single misidentified transaction due to an out-of-distribution graph could result in significant financial loss; similarly, in cybersecurity, failing to detect anomalous network activity could compromise sensitive data. Drug discovery presents perhaps the most profound need for trustworthy predictions, as inaccurate assessments of molecular interactions could lead to ineffective or even harmful treatments. Consequently, the ability of a GNN to not only achieve high accuracy on known data, but also to confidently identify and appropriately handle unfamiliar graph structures, is paramount for real-world deployment and maintaining user trust in these vital domains.

The shifting nature of real-world data presents a significant challenge for graph neural networks (GNNs); however, this framework exhibits a notable capacity to adapt and improve over time, making it particularly effective in dynamic environments. Unlike static models, it doesn’t rely on a fixed understanding of the data; instead, it continuously refines its ability to identify out-of-distribution graphs through an iterative process. This self-improving characteristic allows the system to maintain reliable performance even as the underlying data distributions evolve, a crucial advantage in applications where patterns and characteristics are subject to change. Consequently, the framework isn’t simply a diagnostic tool, but a learning system designed to remain robust and trustworthy in the face of ongoing data drift, offering a pathway toward more resilient and dependable machine learning deployments.

The SIGOOD framework champions iterative refinement as a pathway toward more dependable machine learning systems. Rather than relying on static defenses, SIGOOD continuously adapts to novel data, bolstering its ability to identify out-of-distribution (OOD) graphs. This cyclical approach, which involves prediction, energy-based uncertainty estimation, and prompt refinement, doesn’t simply address current vulnerabilities; it builds a system designed to anticipate and mitigate future risks. The framework’s design suggests a broader principle: robustness isn’t a fixed attribute, but an emergent property of systems built to learn from their own limitations and evolve with changing data landscapes. This dynamic resilience holds particular promise for critical applications where failure isn’t an option and continuous improvement is essential, and it may inform the development of trustworthy AI beyond graph neural networks.

The pursuit of robust out-of-distribution (OOD) detection, as demonstrated by SIGOOD’s iterative refinement, feels predictably Sisyphean. This paper champions prompt engineering to nudge graph neural networks toward better OOD signals, a tactic that merely repackages feature engineering with a fresh label. As Tim Berners-Lee observed, “The Web is more a social creation than a technical one.” Similarly, SIGOOD isn’t solving a fundamentally new problem; it’s leveraging social, or rather algorithmic, feedback loops to polish existing methods. Production will undoubtedly find novel ways to break even the most carefully prompted energy-based models, confirming once again that everything new is old again, just renamed and still broken.

What Comes Next?

The pursuit of self-improving frameworks, exemplified by SIGOOD, feels predictably optimistic. Each iteration, each ‘controlled release’ into the wild, inevitably uncovers edge cases previously obscured by curated datasets. The elegance of prompt-driven refinement is, for the moment, untarnished, but production systems possess a remarkable capacity to generate the unexpected. The core challenge isn’t simply detecting distributional shift, but acknowledging that ‘in-distribution’ is a transient illusion.

Future work will undoubtedly focus on the robustness of these energy-based models. Scaling to genuinely complex graph structures, however, will expose the limitations of current feedback mechanisms. The current approach appears sensitive to prompt design, a precarious reliance given the subtle art of crafting effective prompts. One suspects that, shortly, a new generation of ‘prompt engineers’ will be needed to manage the resulting chaos.

Ultimately, the true test won’t be achieving higher scores on benchmark datasets. It will be how gracefully these systems degrade when confronted with data that actively defies expectation. Legacy, after all, isn’t a collection of failures, but a memory of better times. And bugs? They are simply proof of life.

Original article: https://arxiv.org/pdf/2602.17342.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-22 17:28