Author: Denis Avetisyan

Researchers have developed a novel deep learning method that leverages advanced state space models to identify subtle anomalies in hyperspectral imagery.

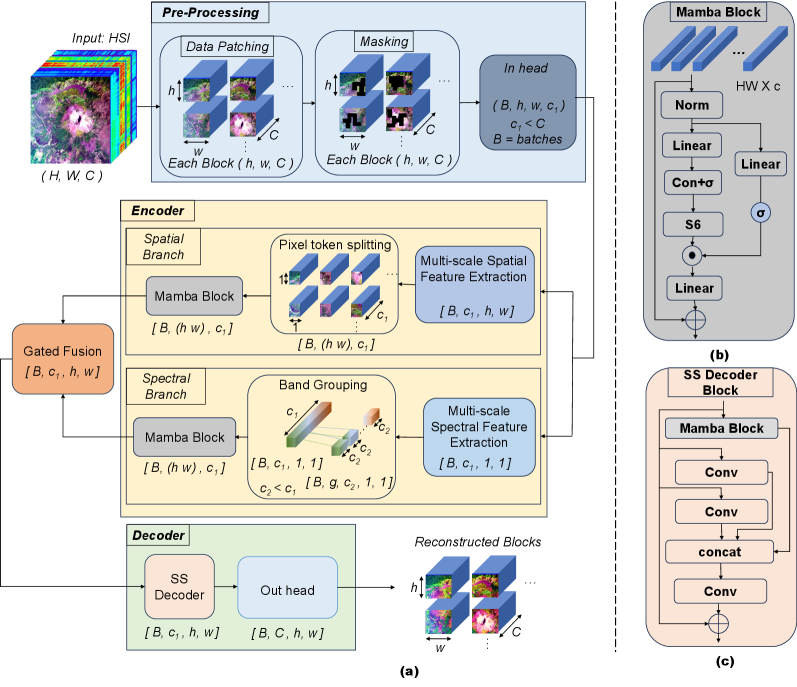

This paper introduces DMS2F-HAD, a dual-branch Mamba network with adaptive gated fusion, achieving state-of-the-art performance in unsupervised hyperspectral anomaly detection by effectively capturing long-range dependencies.

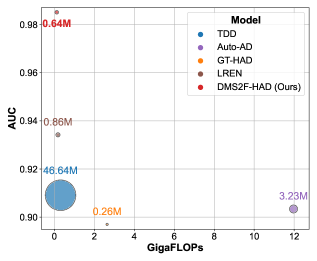

Identifying subtle anomalies within high-dimensional hyperspectral imagery remains challenging due to limitations in capturing long-range dependencies and computational efficiency. This paper introduces ‘DMS2F-HAD: A Dual-branch Mamba-based Spatial-Spectral Fusion Network for Hyperspectral Anomaly Detection’, a novel approach leveraging the linear-time modeling capabilities of Mamba state space models within a dual-branch architecture for efficient spatial-spectral feature learning. Experimental results across fourteen benchmark datasets demonstrate that DMS2F-HAD achieves a state-of-the-art average AUC of 98.78% with a 4.6x speedup over comparable methods. Could this efficient and accurate anomaly detection framework unlock new applications in remote sensing and precision monitoring?

The Illusion of Signal: Hyperspectral Data and Its Discontents

Hyperspectral Anomaly Detection (HAD) plays a vital role in diverse fields, ranging from identifying camouflaged targets in surveillance to monitoring subtle environmental changes indicative of pollution or disease. However, the very nature of hyperspectral imaging – capturing data across hundreds of narrow, contiguous spectral bands – presents a considerable challenge. This high dimensionality, while providing rich information, drastically increases computational complexity and the risk of false positives. Traditional anomaly detection algorithms struggle to effectively process this data volume without making overly simplistic assumptions about the expected spectral signatures, leading to missed detections or inaccurate alerts. Consequently, advancements in HAD are crucial not only for improving performance but also for enabling the practical application of hyperspectral technology to real-world problems demanding reliable and timely insights.

Statistical anomaly detection techniques, frequently employed in hyperspectral imaging, often produce a substantial number of false positives due to their inherent reliance on predefined background distributions. These methods typically assume data conforms to a specific statistical model – such as Gaussian or Poisson – to differentiate anomalies from normal variations. However, real-world hyperspectral data rarely adheres perfectly to these idealized distributions, especially given variations in illumination, atmospheric conditions, and material composition. Consequently, fluctuations within the natural background, which deviate from the assumed model, are incorrectly flagged as anomalies. This sensitivity to distribution mismatch significantly limits the reliability of traditional statistical approaches in complex environments, necessitating more robust methods capable of adapting to the inherent variability of hyperspectral data.
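To make the distribution assumption concrete, here is a minimal sketch of one classical statistical detector, the Reed-Xiaoli (RX) detector. The article does not name RX; it is used here only as a representative example. It scores each pixel by its Mahalanobis distance to a single Gaussian background estimated from the whole scene. The function name `rx_scores` and the synthetic cube are illustrative assumptions.

```python
import numpy as np

def rx_scores(cube):
    """Global RX: squared Mahalanobis distance of each pixel to the scene mean.

    cube has shape (height, width, bands). One Gaussian is assumed for the
    entire background, which is exactly the assumption that fails on
    heterogeneous real-world scenes.
    """
    h, w, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    cov_inv = np.linalg.pinv(cov)  # pseudo-inverse tolerates a singular covariance
    scores = np.einsum("ij,jk,ik->i", centered, cov_inv, centered)
    return scores.reshape(h, w)

# Synthetic scene: Gaussian background with one implanted off-distribution pixel
rng = np.random.default_rng(0)
cube = rng.normal(size=(20, 20, 8))
cube[5, 7] += 6.0
scores = rx_scores(cube)
row, col = np.unravel_index(scores.argmax(), scores.shape)
print("highest score at", (int(row), int(col)))
```

On a background that really is Gaussian, the implanted pixel stands out clearly. When the background mixes several materials, the single mean and covariance fit none of them well, which is one way the false positives described above arise.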

Despite advances in deep learning for hyperspectral anomaly detection, current architectures present notable limitations. Convolutional Neural Networks, while effective at identifying spatial patterns, often fall short when discerning subtle spectral differences that span wider wavelength ranges – a critical aspect of anomaly identification. This difficulty arises because these networks typically focus on local spectral neighborhoods. Conversely, Transformer models, designed to capture long-range dependencies, introduce substantial computational burdens due to the quadratic complexity associated with attention mechanisms, making them challenging to deploy in real-time or resource-constrained environments. Consequently, a key research direction centers on developing novel deep learning architectures that can efficiently model long-range spectral correlations without incurring prohibitive computational costs, ultimately improving the accuracy and practicality of hyperspectral anomaly detection systems.

Mamba to the Rescue: A Skeptic’s Look at DMS2F-HAD

DMS2F-HAD utilizes Mamba, a state space model (SSM) distinguished by its linear scaling in sequence length, addressing computational bottlenecks inherent in traditional recurrent and attention-based models. Unlike transformers with quadratic complexity O(n^2), Mamba achieves O(n) complexity through selective state spaces, enabling efficient processing of long-range dependencies in hyperspectral data. This is accomplished by dynamically filtering irrelevant information, allowing the model to focus on pertinent contextual data for improved anomaly detection without the computational expense of maintaining attention weights across the entire sequence. The selective mechanism within Mamba’s architecture facilitates a more streamlined and scalable approach to modeling sequential data, crucial for high-dimensional datasets like those found in hyperspectral imaging.
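As a rough illustration of where the linear cost comes from, the standard discretized state space recurrence can be written as below. This is general state space model background rather than a formula quoted from the paper, and the symbols (state size $N$, feature width $d$, sequence length $n$) are notation introduced here.

$$
h_t = \bar{A}_t\, h_{t-1} + \bar{B}_t\, x_t, \qquad y_t = C_t\, h_t, \qquad t = 1, \dots, n
$$

With a diagonal $\bar{A}_t$, each step costs $O(N)$, so one pass over the sequence costs $O(nN)$ per channel. Self-attention instead forms an $n \times n$ score matrix at a cost of $O(n^2 d)$. For a sequence of $n = 200$ tokens, that is $200^2 = 40{,}000$ pairwise scores against 200 recurrent steps. In a selective model such as Mamba, $\bar{A}_t$, $\bar{B}_t$ and $C_t$ depend on the input $x_t$, which is what lets the state keep or discard information as it goes.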

The DMS2F-HAD architecture utilizes a dual-branch processing pathway to enhance feature extraction from hyperspectral data. One branch focuses on spatial information, analyzing the relationships between neighboring pixels to identify localized patterns. Concurrently, the second branch processes spectral information, examining the unique reflectance signatures of each pixel across different wavelengths. By decoupling these two dimensions of data, the model can capture complementary features that might be obscured in a single, combined analysis. This separation allows for a more nuanced understanding of the data, improving the model’s ability to distinguish between anomalous and normal spectral signatures and spatial arrangements.

The Adaptive Gated Fusion Mechanism within DMS2F-HAD utilizes learnable weights to dynamically combine the feature maps generated by the spatial and spectral branches. This mechanism employs a gating network that assesses the relevance of each feature map to the anomaly detection task. The gating network outputs a weight between zero and one for each feature map, effectively scaling its contribution to the final feature representation. This adaptive weighting allows the model to prioritize features indicative of anomalous behavior while suppressing irrelevant or noisy information, improving both the accuracy and robustness of the anomaly detection process.
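The gating idea can be sketched in a few lines. This is a minimal illustration, assuming a single sigmoid gate computed from the concatenated branch features and applied as complementary weights; the paper's exact gate design may differ, and the names `gated_fusion`, `w_gate`, and `b_gate` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_fusion(spatial, spectral, w_gate, b_gate):
    """Fuse two (n_pixels, d) feature maps with a learned per-feature gate.

    w_gate has shape (2d, d) and b_gate has shape (d,). In a real model both
    would be trained; here they are plain arrays.
    """
    joint = np.concatenate([spatial, spectral], axis=-1)  # (n_pixels, 2d)
    gate = sigmoid(joint @ w_gate + b_gate)                # values in (0, 1)
    fused = gate * spatial + (1.0 - gate) * spectral       # convex combination
    return fused, gate

rng = np.random.default_rng(0)
spatial = rng.normal(size=(5, 4))
spectral = rng.normal(size=(5, 4))
w_gate = 0.1 * rng.normal(size=(8, 4))
b_gate = np.zeros(4)
fused, gate = gated_fusion(spatial, spectral, w_gate, b_gate)
print(fused.shape, float(gate.min()), float(gate.max()))
```

With zero gate weights and bias, every gate value is 0.5 and the result is a plain average of the two branches, so fixed-weight fusion is a special case of this sketch.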

The DMS2F-HAD model demonstrates state-of-the-art performance in hyperspectral anomaly detection, achieving an average Area Under the Curve (AUC) of 98.78%. This metric indicates a high capability in distinguishing between anomalous and normal spectral signatures. The model’s design contributes to this accuracy by enabling efficient processing of hyperspectral data, which is characterized by a large number of spectral bands. This level of performance surpasses previously established benchmarks in the field, signifying a substantial improvement in anomaly detection capabilities for hyperspectral imagery.
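For readers unfamiliar with the metric, AUC is the probability that a randomly chosen anomalous pixel receives a higher score than a randomly chosen background pixel. The sketch below computes it directly from that definition; the function name `roc_auc` is illustrative, and the pairwise comparison is chosen for clarity rather than for efficiency on full-size images.

```python
import numpy as np

def roc_auc(scores, labels):
    """AUC as the fraction of (anomaly, background) pairs ranked correctly.

    labels: 1 for anomalous pixels, 0 for background. Ties count as half.
    """
    scores = np.asarray(scores, dtype=np.float64).ravel()
    labels = np.asarray(labels).ravel().astype(bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)

# Two anomalies (scores 0.35 and 0.8) against two background pixels (0.1 and 0.4):
# three of the four pairs are ordered correctly.
print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

An AUC of 98.78% therefore means that almost every anomaly-background pair is ranked in the right order, averaged over the benchmark datasets.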

Digging Deeper: Advanced Feature Processing and Its Limitations

The Spatial-Spectral Decoder (SSD) within the DMS2F-HAD architecture employs multi-scale feature extraction to improve anomaly detection capabilities. This process involves analyzing input data at multiple resolutions, capturing both broad spatial contexts and fine-grained details. Specifically, the SSD utilizes convolutional layers with varying kernel sizes to extract features representative of different spatial scales. These multi-scale features are then concatenated or fused to create a comprehensive spatial representation, enabling the model to identify subtle anomalies that might be missed by single-scale approaches. This technique is particularly effective in hyperspectral imagery where anomalies can manifest as localized, spatially complex patterns.

Mamba’s Selective Scan mechanism addresses inefficiencies in processing sequential hyperspectral data by letting the model decide, element by element, how much of the input sequence to retain. Unlike classical state space models, whose parameters are fixed across the sequence, Selective Scan makes the state update depend on the current input, so the hidden state can absorb informative elements and let uninformative ones decay. Because the sequence is processed in a single recurrent pass rather than through pairwise attention, compute and memory grow linearly with sequence length. The result is a mechanism that filters out irrelevant information and concentrates on salient features without the cost of comparing every pair of elements.
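A heavily simplified, single-channel sketch of an input-dependent scan is shown below. It follows the general recipe of selective state space models (input-dependent step size and input/output projections, diagonal state matrix) but is not the paper's implementation or the optimized Mamba kernel; the parameter names `w_delta`, `w_b`, and `w_c` are hypothetical.

```python
import numpy as np

def softplus(x):
    return np.log1p(np.exp(x))

def selective_scan(x, a, w_delta, w_b, w_c):
    """Run a selective state space recurrence over a 1-D sequence.

    x: (n,) input sequence
    a: (N,) negative entries of a diagonal state matrix
    w_delta: scalar, w_b and w_c: (N,) weights that make the step size and
    the input/output projections functions of the current input.
    """
    x = np.asarray(x, dtype=np.float64)
    a = np.asarray(a, dtype=np.float64)
    h = np.zeros_like(a)
    y = np.empty(x.shape[0])
    for t in range(x.shape[0]):
        delta = softplus(w_delta * x[t])  # input-dependent step size
        a_bar = np.exp(delta * a)         # per-state decay in (0, 1)
        b_bar = delta * (w_b * x[t])      # simplified input discretization
        h = a_bar * h + b_bar * x[t]      # state update, O(N) per step
        y[t] = np.dot(w_c * x[t], h)      # input-dependent readout
    return y

rng = np.random.default_rng(0)
x = rng.normal(size=16)
a = -np.arange(1.0, 5.0)
y = selective_scan(x, a, 0.5, rng.normal(size=4), rng.normal(size=4))
print(y.shape)
```

The loop touches each element once, so the cost is linear in sequence length. Production implementations replace the Python loop with a parallel scan on the GPU, but the recurrence being computed has the same structure.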

Spectral Grouping, a component of the DMS2F-HAD architecture, addresses the challenges of analyzing hyperspectral data by dividing the spectral dimension into overlapping sub-sequences. This segmentation allows the model to capture subtle variations and dependencies within the spectral signatures that might be lost when considering the entire spectrum at once. By processing these sub-sequences, the model can identify nuanced spectral features indicative of specific materials or conditions. The overlapping nature of the sub-sequences ensures contextual information is preserved across group boundaries, improving the model’s ability to differentiate between similar spectral responses and reducing the impact of noise or spectral distortions.
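Splitting a spectrum into overlapping groups amounts to a sliding window over the band axis. The sketch below shows the idea; the group size and stride are arbitrary illustrative values, not the paper's settings, and `overlapping_groups` is a hypothetical helper name.

```python
import numpy as np

def overlapping_groups(spectrum, group_size, stride):
    """Split a 1-D spectrum into windows of group_size bands.

    Consecutive windows share (group_size - stride) bands when stride is
    smaller than group_size. Trailing bands that do not fill a complete
    window are dropped in this simple version.
    """
    spectrum = np.asarray(spectrum)
    starts = range(0, len(spectrum) - group_size + 1, stride)
    return np.stack([spectrum[s:s + group_size] for s in starts])

bands = np.arange(10)  # stand-in for one pixel's 10-band spectrum
groups = overlapping_groups(bands, group_size=4, stride=2)
print(groups)
# [[0 1 2 3]
#  [2 3 4 5]
#  [4 5 6 7]
#  [6 7 8 9]]
```

Each group becomes one token in the spectral sequence, and the shared bands give neighboring tokens common context.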

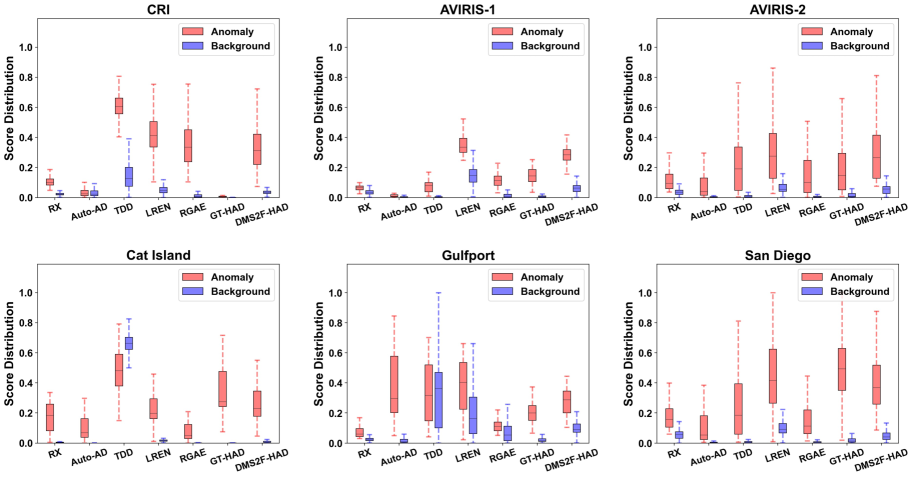

The integrated approach of Multi-Scale Feature Extraction, Selective Scan, and Spectral Grouping yields a robust feature representation for hyperspectral anomaly detection. Performance evaluation on the Gulfport dataset demonstrates an Area Under the Curve (AUC) of 98.97%. Comparative analysis shows that gated fusion improves the AUC by more than 9% over simple addition fusion, indicating a substantial benefit from the gating mechanism in integrating the learned features.

The Inevitable Drift: Reconstruction, Real-World Impact, and Future Limitations

DMS2F-HAD centers on the principle that anomalies represent significant deviations from the expected norm within a dataset. To capture this ‘normality’, the system employs Autoencoders, a type of neural network trained to reconstruct its input. During operation, the Autoencoder attempts to rebuild each data point; the difference between the original input and the reconstructed output is quantified as Reconstruction Error. A high Reconstruction Error signals that the input differs substantially from the patterns the Autoencoder learned during training, thus flagging it as a potential anomaly. This approach effectively identifies outliers by measuring how well the system can explain each data point based on its understanding of the typical background, offering a powerful method for isolating unusual or unexpected events in complex datasets.
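The scoring step can be illustrated without a neural network. The sketch below uses a PCA projection as a stand-in linear autoencoder, which is an assumption made purely for brevity (DMS2F-HAD uses its Mamba-based network instead), and then computes the per-pixel reconstruction error. The name `reconstruction_error_map` and the synthetic data are illustrative.

```python
import numpy as np

def reconstruction_error_map(cube, n_components):
    """Per-pixel reconstruction error under a linear (PCA) autoencoder.

    cube has shape (height, width, bands). The top principal directions act
    as both encoder and decoder. This is a stand-in for a trained network.
    """
    h, w, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(np.float64)
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    basis = vt[:n_components]                       # (k, bands)
    reconstructed = centered @ basis.T @ basis + mean
    errors = np.linalg.norm(pixels - reconstructed, axis=1)
    return errors.reshape(h, w)

# Background lives near a 2-D spectral subspace; one pixel is pushed off it
rng = np.random.default_rng(0)
basis = np.stack([np.ones(10), np.linspace(-1, 1, 10)])
pixels = rng.normal(size=(400, 2)) @ basis + 0.01 * rng.normal(size=(400, 10))
cube = pixels.reshape(20, 20, 10)
cube[3, 4, 0] += 5.0
errors = reconstruction_error_map(cube, n_components=2)
row, col = np.unravel_index(errors.argmax(), errors.shape)
print("largest reconstruction error at", (int(row), int(col)))
```

The choice of `n_components` matters: if the model has enough capacity to reconstruct the anomaly as well, its error shrinks and it is no longer flagged. This is a known weakness of reconstruction-based detectors in general.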

DMS2F-HAD distinguishes itself through a strategic integration of Mamba, a novel state space modeling technique, with a reconstruction-based anomaly detection framework. This combination yields a significant advantage: the model effectively balances the need for high accuracy in identifying subtle anomalies with the practical requirement of computational efficiency. Traditional methods often struggle with one or the other, demanding substantial resources or sacrificing precision. By leveraging Mamba’s capacity to efficiently model sequential data, DMS2F-HAD minimizes computational overhead while maintaining a robust ability to reconstruct normal patterns, thereby highlighting deviations as potential anomalies. This innovative design allows for the reliable processing of complex datasets without prohibitive computational costs, opening doors to real-time applications previously unattainable.

DMS2F-HAD establishes a significant leap in computational efficiency for anomaly detection. The system achieves inference speeds 4.6 times faster than conventional Transformer-based methods, a substantial improvement for real-time applications. This speed is coupled with a dramatic reduction in model complexity: DMS2F-HAD requires 3.3 times fewer parameters and 29 times fewer floating-point operations (FLOPs) than the MMR-HAD model. This reduced computational burden not only accelerates processing but also allows for deployment on resource-constrained platforms, broadening the scope of hyperspectral anomaly detection to a wider range of practical scenarios and enabling scalable solutions for large datasets.

The development of DMS2F-HAD signifies a substantial step towards practical, real-time anomaly detection within the complex datasets of hyperspectral imagery. This capability unlocks a range of possibilities across critical sectors; in environmental monitoring, subtle spectral shifts indicative of pollution or deforestation can be rapidly identified. Precision agriculture benefits from the swift detection of plant stress or disease, enabling targeted interventions and resource optimization. Furthermore, the system’s speed and efficiency extend to security applications, where it can facilitate the timely identification of unusual patterns or objects in surveillance data. By overcoming the computational limitations of previous methods, DMS2F-HAD promises to deliver actionable insights with the speed and accuracy needed for dynamic, real-world scenarios.

The pursuit of state-of-the-art accuracy, as demonstrated by DMS2F-HAD’s dual-branch Mamba network, feels predictably optimistic. It efficiently captures long-range dependencies within hyperspectral data – a feat many have claimed before. One anticipates the inevitable refactoring when production environments reveal unforeseen edge cases and scaling limitations. As Fei-Fei Li aptly stated, “AI is not about replacing humans; it’s about augmenting our capabilities.” This research, while promising in its unsupervised anomaly detection, will likely require significant human intervention to address the real-world messiness that elegantly designed architectures conveniently ignore. The claim of adaptive gated fusion sounds suspiciously like ‘yet another optimization’ – a problem someone will be debugging in six months.

The Road Ahead

The presented architecture, while achieving current benchmarks, merely shifts the complexity. State space models, even those with selective mechanisms, introduce parameters (and therefore potential failure modes) at a rate that will inevitably outpace any perceived gains. The pursuit of long-range dependencies remains a recursive problem; each ‘solution’ necessitates increasingly elaborate mechanisms to avoid overfitting or collapsing into noise. The core issue isn’t modeling the data, but believing the model will remain stable under real-world variance.

Future work will undoubtedly explore variations on this theme: attention mechanisms layered onto state space models, perhaps, or increasingly aggressive dimensionality reduction techniques. However, the field seems preoccupied with elegant solutions rather than robust ones. The true challenge lies not in improving anomaly detection, but in reducing the rate of false positives, a problem that demands a deeper understanding of the underlying data distributions, not simply a more sophisticated algorithm.

The promise of unsupervised learning in this domain remains largely unfulfilled. Current approaches excel at identifying something unusual, but rarely articulate why. The next iteration will likely focus on explainability, though it’s a safe prediction that any attempt to provide meaningful justifications will be met with further computational burdens. The goal isn’t more intelligence; it’s fewer illusions.

Original article: https://arxiv.org/pdf/2602.04102.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 16:29