Author: Denis Avetisyan

A new framework combines the power of deep learning with explainable AI to not only detect network intrusions with greater accuracy, but also to provide security professionals with crucial insights into why those intrusions were flagged.

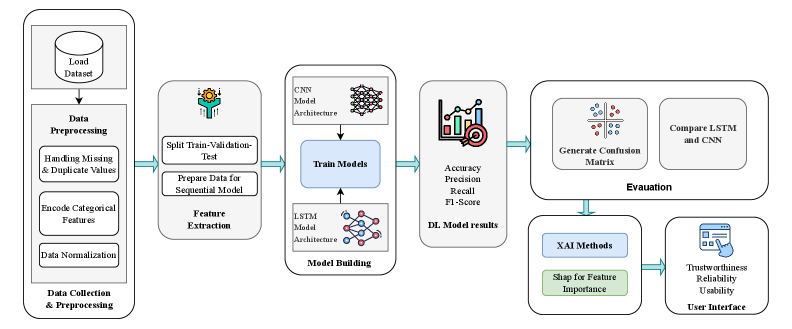

This review details a human-centered intrusion detection system leveraging CNNs, LSTMs, and SHAP values for improved accuracy and interpretability in cybersecurity applications.

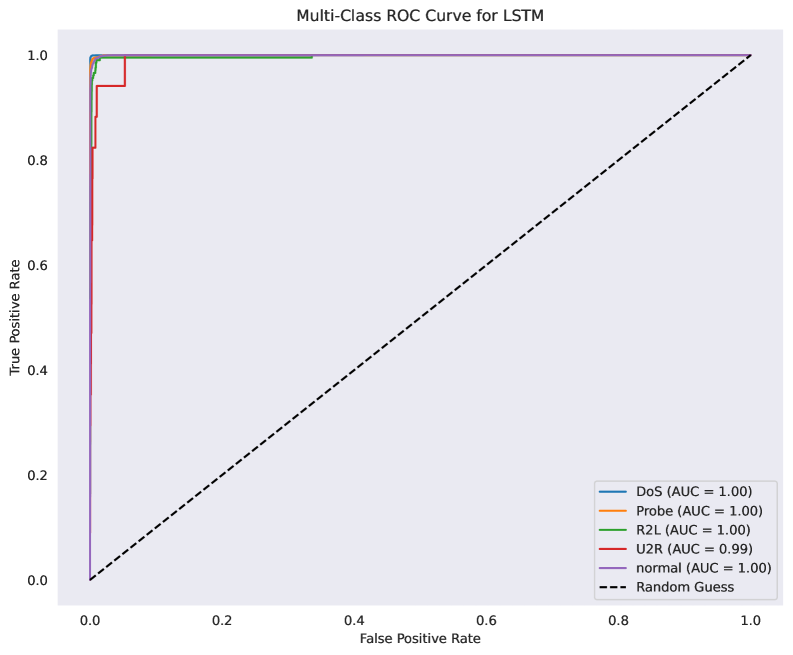

Despite advances in cybersecurity, the increasing sophistication of network threats demands intrusion detection systems that are both accurate and transparent. This need is addressed in ‘Human-Centered Explainable AI for Security Enhancement: A Deep Intrusion Detection Framework’, which proposes a novel system integrating Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks with the SHapley Additive exPlanations (SHAP) method to enhance interpretability. Experimental results on the NSL-KDD dataset demonstrate high accuracy, reaching 0.99 for both CNN and LSTM, along with improved feature understanding for security analysts. Could this human-centered approach to explainable AI pave the way for more trustworthy and adaptable cybersecurity solutions in the face of evolving threats?

The Inevitable Cascade of Alerts

Conventional intrusion detection systems, designed for a simpler network era, now face an overwhelming deluge of data. The sheer volume of network traffic, coupled with its increasing complexity stemming from diverse applications and protocols, creates a significant challenge for these systems. This often results in a high rate of false positives – legitimate network activity incorrectly flagged as malicious. Consequently, security analysts are burdened with sifting through numerous alerts, obscuring genuine threats and diminishing the effectiveness of security operations. The core issue isn’t a failure to detect attacks, but rather an inability to distinguish between benign activity and actual malicious intent amidst the noise, leading to alert fatigue and potentially missed critical events.
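The scale of the false-positive problem follows from simple base-rate arithmetic. The sketch below uses purely hypothetical traffic volumes and rates (not figures from the paper), and `alert_precision` is a hypothetical helper: when benign events vastly outnumber attacks, even a small false positive rate means most alerts are false.

```python
def alert_precision(benign: int, malicious: int, fpr: float, tpr: float) -> float:
    """Fraction of raised alerts that are real attacks (the alerts' precision)."""
    false_alerts = benign * fpr    # benign events wrongly flagged
    true_alerts = malicious * tpr  # attacks correctly flagged
    total = false_alerts + true_alerts
    return true_alerts / total if total else 0.0


# Hypothetical day: one million benign connections and 100 attacks, seen by a
# detector that catches 99% of attacks with a 1% false positive rate.
p = alert_precision(benign=1_000_000, malicious=100, fpr=0.01, tpr=0.99)
print(f"{p:.2%} of alerts are real attacks")  # prints: 0.98% of alerts are real attacks
```

Under these assumptions roughly 10,000 false alerts bury 99 real ones, which is the alert fatigue described above.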

Modern network security faces an escalating challenge from increasingly complex attack vectors. Beyond simple denial-of-service (DoS) attempts, adversaries now employ reconnaissance probes to map vulnerabilities, use remote-to-local (R2L) exploits to gain unauthorized access, and leverage user-to-root (U2R) techniques to escalate privileges once inside a system. These multifaceted attacks demand a shift away from purely static, signature-based defenses toward intelligent security solutions that incorporate machine learning and behavioral analysis to detect anomalous activity and adapt to evolving threats in real time. Such adaptive systems aim to separate legitimate traffic from malicious intent, mitigating sophisticated attacks that bypass traditional security measures and safeguarding critical network infrastructure.

Current network security methodologies frequently falter against the speed and subtlety of modern cyberattacks, largely because they cannot reliably distinguish genuine threats from benign activity in real time. Traditional signature-based systems, while effective against known malware, struggle with zero-day exploits (which have no known signature) and polymorphic attacks (which alter their appearance to evade signatures), and they generate a constant stream of alerts that overwhelms security teams. Because much of this stream consists of false positives, it obscures critical incidents and delays responses: analysts spend valuable time investigating non-threats instead of genuine breaches. Consequently, even sophisticated networks can remain vulnerable for extended periods, since a longer time to detect and mitigate a threat gives attackers more opportunity to compromise systems and exfiltrate data. Systems capable of dynamic threat assessment and intelligent prioritization are therefore essential in the evolving landscape of cybersecurity.

The Illusion of Clean Data

The NSL-KDD dataset was created in response to limitations of the original KDD Cup 99 dataset, chiefly its large number of redundant and duplicate records, which biased learning algorithms toward frequent records and distorted evaluation results. NSL-KDD removes these redundant entries, although its underlying traffic is still derived from the simulated network behind KDD Cup 99. Each record is labeled either as normal traffic or with a specific attack name (for example back, buffer_overflow, loadmodule, or perl), and these attacks are conventionally grouped into four categories: denial-of-service (DoS), Probe, remote-to-local (R2L), and user-to-root (U2R). The dataset is divided into training and testing sets, allowing standardized evaluation of intrusion detection systems; the training set contains approximately 125,972 records, while the testing set contains roughly 22,543 records. This refined structure enables researchers to compare the performance of different intrusion detection models and algorithms more fairly, fostering advancements in network security.
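Grouping raw labels into the four categories is a small lookup. The mapping below covers the 22 attack labels that appear in the NSL-KDD training set, using the conventional KDD Cup 99 grouping; the test set (KDDTest+) adds attack types absent from training, which this sketch reports as `"unknown"` rather than guessing. `ATTACK_CATEGORY`, `categorize`, and `category_counts` are hypothetical names, not part of any dataset tooling.

```python
from collections import Counter
from typing import Dict, Iterable

# Conventional grouping of the 22 training-set attack labels.
ATTACK_CATEGORY: Dict[str, str] = {
    # Denial of service
    "back": "DoS", "land": "DoS", "neptune": "DoS", "pod": "DoS",
    "smurf": "DoS", "teardrop": "DoS",
    # Probing / reconnaissance
    "ipsweep": "Probe", "nmap": "Probe", "portsweep": "Probe", "satan": "Probe",
    # Remote to local
    "ftp_write": "R2L", "guess_passwd": "R2L", "imap": "R2L", "multihop": "R2L",
    "phf": "R2L", "spy": "R2L", "warezclient": "R2L", "warezmaster": "R2L",
    # User to root
    "buffer_overflow": "U2R", "loadmodule": "U2R", "perl": "U2R", "rootkit": "U2R",
}


def categorize(label: str) -> str:
    """Map a raw NSL-KDD label to 'normal', one of the four categories, or 'unknown'."""
    if label == "normal":
        return "normal"
    return ATTACK_CATEGORY.get(label, "unknown")


def category_counts(labels: Iterable[str]) -> Counter:
    """Count records per category, e.g. to inspect class imbalance."""
    return Counter(categorize(label) for label in labels)


# 'mscan' appears only in the test set, so it maps to 'unknown' here.
print(category_counts(["normal", "neptune", "smurf", "ipsweep", "perl", "mscan"]))
```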

Prior to applying machine learning algorithms to network data, effective data preprocessing is crucial. Raw network data often contains features with widely varying scales, as well as categorical variables that most algorithms cannot consume directly. Min-Max Scaling normalizes each numerical feature to the range [0, 1] via x' = (x - min) / (max - min), preventing features with larger magnitudes from dominating the learning process. Label Encoding converts categorical features, such as protocol type or service, into integer codes that machine learning models can process. These techniques ensure data consistency, speed up convergence, and improve the accuracy and reliability of intrusion detection systems; failing to preprocess data properly can lead to biased models and significantly reduced detection rates.
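Both transformations are short enough to sketch in plain Python. The helpers below are hypothetical stand-ins (in practice one would typically use a library such as scikit-learn), the column values are invented, and encoding categories in sorted order mirrors the convention of scikit-learn's `LabelEncoder`. The key design choice is fitting the minimum, maximum, and category codes on training data only, so no test-set statistics leak into the model.

```python
from typing import Dict, List, Sequence, Tuple


def fit_min_max(column: Sequence[float]) -> Tuple[float, float]:
    """Learn the min and max of a numeric feature from the training data."""
    return min(column), max(column)


def min_max_scale(column: Sequence[float], lo: float, hi: float) -> List[float]:
    """Map values to [0, 1] using the training range; test values may fall outside it."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in column]  # constant feature: nothing to scale
    return [(v - lo) / span for v in column]


def fit_label_encoder(values: Sequence[str]) -> Dict[str, int]:
    """Assign integer codes to categories in sorted order."""
    return {v: i for i, v in enumerate(sorted(set(values)))}


def label_encode(values: Sequence[str], mapping: Dict[str, int]) -> List[int]:
    # Raises KeyError for a category never seen during fitting.
    return [mapping[v] for v in values]


# Hypothetical training column for a byte-count feature.
train_src_bytes = [0.0, 5.0, 10.0]
lo, hi = fit_min_max(train_src_bytes)
print(min_max_scale(train_src_bytes, lo, hi))  # [0.0, 0.5, 1.0]
print(min_max_scale([20.0], lo, hi))           # [2.0]: outside the training range

protocol_codes = fit_label_encoder(["tcp", "udp", "icmp", "tcp"])
print(label_encode(["tcp", "udp", "icmp"], protocol_codes))  # [1, 2, 0]
```

Label encoding imposes an arbitrary order on categories (here icmp < tcp < udp); one-hot encoding is the usual alternative when that order could mislead a model.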

Convolutional Neural Networks (CNNs) automate feature extraction: convolutional layers slide learned filters across the input and produce feature maps that respond to local patterns, so those patterns do not have to be defined by hand. Subsequent pooling layers downsample the feature maps, reducing dimensionality and computational cost. Because the filters are learned, a CNN can capture complex, non-linear combinations of inputs that hand-crafted features might miss, which can improve detection accuracy. For a dataset such as NSL-KDD, where each record is a fixed-length vector of connection features rather than raw packets, a common approach is to treat that vector as a short 1-D sequence, letting the filters learn patterns among neighboring features.
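The sliding-filter idea can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the paper's architecture: one hand-picked kernel stands in for the many learned filters of a real CNN, the input is a hypothetical scaled feature vector, and `conv1d`, `relu`, and `max_pool1d` are hypothetical helper names. As in deep learning frameworks, `conv1d` computes a cross-correlation (the kernel is not flipped).

```python
from typing import List, Sequence


def conv1d(x: Sequence[float], kernel: Sequence[float], bias: float = 0.0) -> List[float]:
    """'Valid' 1-D convolution with stride 1: one output per full kernel placement."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]


def relu(v: Sequence[float]) -> List[float]:
    """Element-wise non-linearity applied to the feature map."""
    return [max(0.0, a) for a in v]


def max_pool1d(v: Sequence[float], size: int) -> List[float]:
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(v[i:i + size]) for i in range(0, len(v) - size + 1, size)]


record = [0.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0]  # hypothetical scaled feature vector
kernel = [1.0, 1.0]                           # hand-picked; a real CNN learns this
feature_map = relu(conv1d(record, kernel))
print(feature_map)                 # [1.0, 1.0, 0.0, 1.0, 2.0, 1.0]
print(max_pool1d(feature_map, 2))  # [1.0, 1.0, 2.0]
```

The feature map peaks where two adjacent inputs are both active, and pooling keeps that peak while halving the length.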

The Allure of Temporal Awareness

Long Short-Term Memory (LSTM) networks excel at analyzing time-series data due to their recurrent neural network architecture, which incorporates memory cells capable of maintaining information over extended sequences. This is particularly relevant to network security, as malicious activities often manifest as sequential patterns within network traffic. Traditional intrusion detection systems frequently struggle with identifying these patterns due to their inability to effectively process temporal dependencies. LSTM networks address this limitation by learning and recognizing the order of events, enabling them to identify anomalous sequences that indicate potential attacks, such as multi-stage exploits or reconnaissance activity. The internal gating mechanisms within LSTM cells allow the network to selectively retain or discard information, effectively filtering out irrelevant data and focusing on critical temporal features within the network traffic stream.
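The gating described above can be made concrete with a single-unit LSTM cell. The sketch below follows the standard LSTM equations but uses scalar weights so each gate is easy to inspect; `LSTMParams`, `lstm_step`, and `run_lstm` are hypothetical names, and the hand-set biases are chosen only to show the forget gate preserving a memory versus overwriting it. A trained model would learn vector-valued weights instead.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class LSTMParams:
    """One-unit LSTM: input weight w, recurrent weight u, and bias b for each gate."""
    w_f: float = 0.0
    u_f: float = 0.0
    b_f: float = 0.0
    w_i: float = 0.0
    u_i: float = 0.0
    b_i: float = 0.0
    w_o: float = 0.0
    u_o: float = 0.0
    b_o: float = 0.0
    w_g: float = 0.0
    u_g: float = 0.0
    b_g: float = 0.0


def lstm_step(p: LSTMParams, x: float, h: float, c: float) -> Tuple[float, float]:
    f = sigmoid(p.w_f * x + p.u_f * h + p.b_f)    # forget gate: share of old memory kept
    i = sigmoid(p.w_i * x + p.u_i * h + p.b_i)    # input gate: share of new content written
    o = sigmoid(p.w_o * x + p.u_o * h + p.b_o)    # output gate: share of memory exposed
    g = math.tanh(p.w_g * x + p.u_g * h + p.b_g)  # candidate memory content
    c_new = f * c + i * g
    h_new = o * math.tanh(c_new)
    return h_new, c_new


def run_lstm(p: LSTMParams, xs: Sequence[float],
             h0: float = 0.0, c0: float = 0.0) -> Tuple[float, float]:
    """Feed a sequence through the cell and return the final (hidden, cell) state."""
    h, c = h0, c0
    for x in xs:
        h, c = lstm_step(p, x, h, c)
    return h, c


xs = [1.0, -2.0, 3.0, 0.5]
keep = LSTMParams(b_f=10.0, b_i=-10.0)                # forget gate open, input gate shut
overwrite = LSTMParams(b_f=-10.0, b_i=10.0, w_g=1.0)  # discard old memory, write tanh(x)
print(run_lstm(keep, xs, c0=0.5)[1])       # stays close to 0.5
print(run_lstm(overwrite, xs, c0=0.5)[1])  # close to tanh(0.5), the last input's content
```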

A hybrid deep learning model integrating Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks aims to capitalize on the distinct strengths of each architecture. CNNs excel at extracting local (spatial) patterns, such as relationships among features within a single network record, while LSTMs are designed for sequential data, capturing temporal dependencies and patterns that evolve over time. In combination, the model can in principle both identify immediate anomalies and correlate events across a stream of network traffic, which is what detecting complex, multi-stage attacks requires. The figures quoted in this summary, however, are reported for the CNN and LSTM models individually.

Model performance was quantitatively assessed: the CNN and LSTM models each reached an overall accuracy of 99%. The LSTM model achieved an F1-score of 0.93 and the CNN model an F1-score of 0.86. Because the F1-score is the harmonic mean of precision and recall, a high value requires keeping both false positives and false negatives low; the LSTM's higher score suggests it strikes this balance better than the CNN. The gap between 99% accuracy and these F1-scores is also informative. NSL-KDD is imbalanced, with rare classes such as U2R, so accuracy is dominated by the majority classes, whereas an F1-score averaged across classes (the summary does not say which averaging was used) can expose weaker performance on the rare attack types.
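To see how high accuracy can coexist with a lower F1-score, the sketch below computes both on deliberately imbalanced, hypothetical predictions. It assumes macro averaging (an unweighted mean of per-class F1); the summary does not state which averaging the paper used, and support-weighted averaging would track accuracy more closely. `accuracy` and `per_class_f1` are hypothetical helpers.

```python
from typing import Dict, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """One-vs-rest F1 per class, using F1 = 2TP / (2TP + FP + FN)."""
    scores: Dict[str, float] = {}
    pairs = list(zip(y_true, y_pred))
    for cls in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == cls and p == cls for t, p in pairs)
        fp = sum(t != cls and p == cls for t, p in pairs)
        fn = sum(t == cls and p != cls for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores[cls] = 2 * tp / denom if denom else 0.0
    return scores


# Hypothetical labels: the detector misses both rare U2R attacks.
y_true = ["normal"] * 90 + ["DoS"] * 8 + ["U2R"] * 2
y_pred = ["normal"] * 90 + ["DoS"] * 8 + ["normal"] * 2

acc = accuracy(y_true, y_pred)
f1 = per_class_f1(y_true, y_pred)
macro_f1 = sum(f1.values()) / len(f1)
print(f"accuracy={acc:.2f}  macro F1={macro_f1:.2f}")  # accuracy=0.98  macro F1=0.66
```

Missing two records barely moves accuracy, but it zeroes the U2R F1-score and pulls the macro average down sharply.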

The Illusion of Understanding

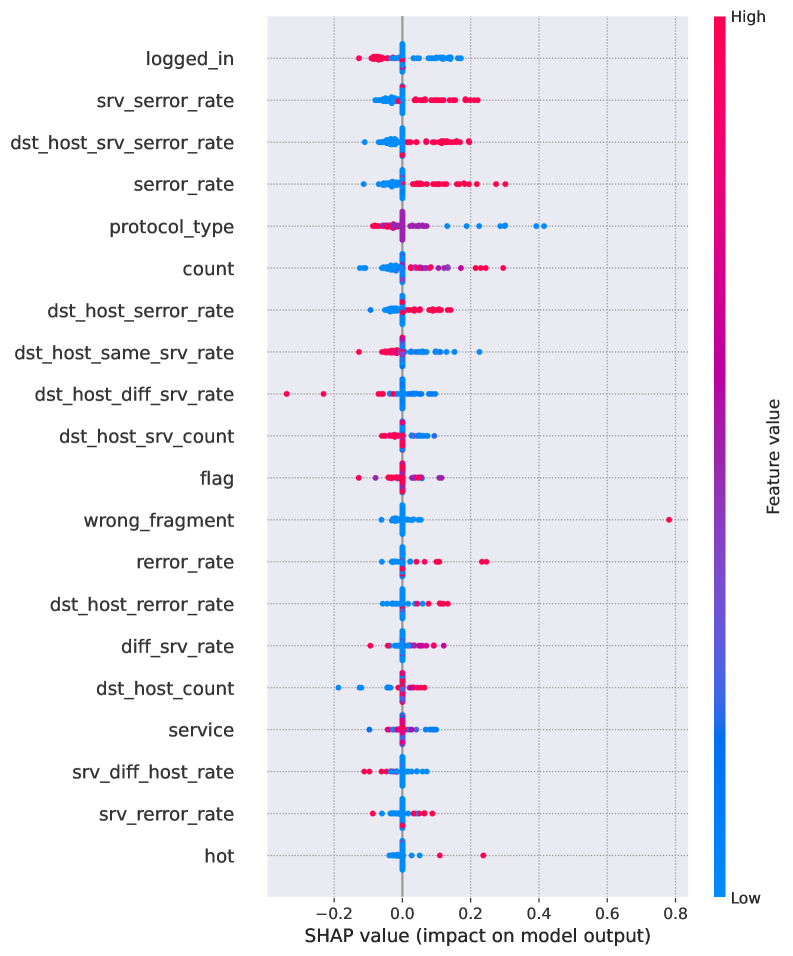

Intrusion detection systems increasingly rely on complex machine learning models to identify malicious activity, but these ‘black box’ algorithms often lack transparency, hindering trust and effective response. Explainable AI (XAI) techniques, such as SHAP (SHapley Additive exPlanations), address this challenge by illuminating the rationale behind individual predictions. For a given prediction, SHAP values quantify how much each input feature (network traffic characteristics, system logs, user behavior) pushed the model’s output above or below a baseline, typically the model’s average output over background data, and the values for all features sum to the difference between the prediction and that baseline. This granular insight moves beyond knowing that an attack was detected to revealing why it was flagged, giving security professionals a clear view of the factors driving the alert. XAI is therefore not merely about interpretability; it is about building confidence in automated security systems and enabling more informed, rapid action against evolving threats.

Feature attributions make this concrete for the analyst. For a flagged NSL-KDD connection, for example, SHAP can show whether the alert was driven mainly by an unusually large src_bytes value, a burst of connections to the same host (count), or a high rate of SYN errors (serror_rate). By pinpointing the most influential features, analysts can check the model's reasoning against their own knowledge, identify likely false positives, and relate alerts to attacker tactics, techniques, and procedures (TTPs); patterns that recur across many explanations may even point to attack behavior that was not previously recognized. Dissecting the model's logic in this way turns raw alerts into actionable intelligence and supports a shift from purely reactive incident response toward proactive threat hunting.
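SHAP is built on Shapley values from cooperative game theory. The sketch below computes them exactly by enumerating feature coalitions, under simplifying assumptions: absent features are replaced by a single hypothetical baseline input (the shap library instead averages over background data and uses explainers such as `KernelExplainer` or `DeepExplainer` to stay tractable), and the model is an invented scoring function, not the paper's CNN or LSTM. The feature names src_bytes, count, and serror_rate are real NSL-KDD columns, but `toy_score`, its weights, and the input values are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values of model(x) relative to a single baseline input.

    A feature present in a coalition takes its value from x; an absent one takes it
    from baseline. Cost grows as 2**n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight = |S|! (n - |S| - 1)! / n!, averaging over all feature orderings.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical score over scaled [src_bytes, count, serror_rate], with an interaction term.
def toy_score(z: Sequence[float]) -> float:
    return 0.5 * z[0] + 0.3 * z[1] + 0.2 * z[2] + 0.4 * z[1] * z[2]


x = [0.1, 0.9, 0.8]         # flagged connection
baseline = [0.1, 0.2, 0.0]  # hypothetical "typical" connection
phi = shapley_values(toy_score, x, baseline)
for name, v in zip(["src_bytes", "count", "serror_rate"], phi):
    print(f"{name:12s} {v:+.3f}")
# Additivity: the attributions sum to the change in the model's output.
print(abs(sum(phi) - (toy_score(x) - toy_score(baseline))) < 1e-9)  # True
```

src_bytes receives zero credit because it matches the baseline, while count and serror_rate split the credit for their interaction.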

The ability to interpret the reasoning behind intrusion detection model predictions can translate into concrete security improvements. Faster incident response becomes possible because analysts can quickly pinpoint the critical factors driving an alert, reducing investigation time and limiting potential damage. Beyond immediate reactions, this understanding supports better threat mitigation strategies, allowing security teams to address vulnerabilities at their source and refine preventative measures. Ultimately, this shift from reactive to proactive security helps organizations anticipate and defend against emerging threats, fostering a more resilient security posture that extends beyond simply identifying attacks as they occur.

The Fragile Interface with Reality

The effectiveness of any intrusion detection system hinges not solely on its ability to identify threats, but crucially on how clearly those threats are communicated to security analysts. A poorly designed user interface can overwhelm analysts with data, obscure critical information, and ultimately hinder their ability to respond effectively to genuine security incidents. Consequently, significant attention was dedicated to crafting an intuitive interface that prioritizes clarity and actionable insights. This design philosophy focused on minimizing cognitive load, presenting information in a visually digestible format, and enabling rapid decision-making under pressure, recognizing that even the most sophisticated detection capabilities are rendered useless if the analyst cannot readily interpret and act upon the presented findings.

The intrusion detection system’s user interface was evaluated with the System Usability Scale (SUS), a widely used ten-item questionnaire for assessing the ease of use and learnability of a system. The usability responses yielded a Cronbach’s Alpha of 0.60. Alpha measures the internal consistency of the questionnaire items (how reliably they measure the same construct), not usability itself, and 0.60 is modest: many rules of thumb treat 0.70 as the minimum for acceptable consistency, although values near 0.60 are sometimes accepted for short or exploratory scales. The usability conclusion should therefore rest on the SUS scores themselves, with this alpha read as a sign that the measurement is somewhat noisy. Further refinement guided by user feedback could improve the interface, and with it the speed and accuracy of threat detection and response.
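For reference, standard SUS scoring turns each respondent's ten answers on a 1-5 scale into a 0-100 score: odd-numbered (positively worded) items contribute the answer minus 1, even-numbered (negatively worded) items contribute 5 minus the answer, and the sum is multiplied by 2.5. The sketch below implements that rule; `sus_score` is a hypothetical helper, the responses are invented, and the summary above does not quote the system's actual SUS scores.

```python
from typing import List, Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) for one respondent's ten answers on a 1-5 scale."""
    if len(responses) != 10 or any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS expects ten answers on a 1-5 scale")
    total = 0
    for idx, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if idx % 2 == 1 else (5 - r)
    return total * 2.5


respondents: List[List[int]] = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],  # hypothetical answers
    [5, 1, 4, 2, 5, 1, 4, 2, 5, 1],
]
scores = [sus_score(r) for r in respondents]
print(scores, sum(scores) / len(scores))  # [75.0, 90.0] 82.5
```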

Evaluations of the intrusion detection system report a substantial degree of user confidence. The survey scales for trust and reliability each show a Cronbach’s Alpha of 0.90, indicating strong internal consistency: the items within each scale measure their construct coherently, so the trust and reliability ratings can be interpreted with confidence. Alpha by itself describes the quality of the measurement, not how positive the ratings were; the favorable perception of the system’s dependability and accuracy rests on the ratings themselves. Such acceptance matters for effective security implementation, since analysts are more likely to use and act on insights from a system they perceive as reliable and trustworthy, ultimately strengthening the overall security posture.
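Cronbach's Alpha compares the variance of the individual items with the variance of respondents' total scores: alpha = k / (k - 1) * (1 - sum of item variances / variance of totals), for k items. When items move together, total-score variance is large relative to the item variances and alpha approaches 1. The sketch below uses hypothetical data (the paper's survey responses are not available here), and `cronbach_alpha` is a hypothetical helper.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for one scale; items[j][r] is respondent r's answer to item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(answers) for answers in zip(*items)]  # each respondent's scale total
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers from four respondents to a two-item scale.
print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))  # approximately 0.75
```

Note that shifting every answer up or down by the same amount leaves alpha unchanged, which is why a high alpha says nothing on its own about whether ratings were favorable.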

The pursuit of robust intrusion detection systems, as detailed in this research, mirrors a fundamental struggle against entropy. Every layer of deep learning, every carefully constructed CNN or LSTM, is but a temporary bulwark against the inevitable tide of novel attacks. As Blaise Pascal observed, “All of humanity’s problems stem from man’s inability to sit quietly in a room alone.” This echoes the core challenge – systems aren’t built, they evolve. The incorporation of Explainable AI, specifically SHAP values, isn’t about achieving perfect prediction, but about understanding how a system fails, acknowledging that order is merely a cache between two outages. It’s a recognition that transparency, even in the face of uncertainty, is the only path toward sustained resilience.

What Lies Ahead?

This pursuit of human-centered explainability in intrusion detection, while valuable, merely shifts the locus of inevitable compromise. The system, built on the foundations of CNNs and LSTMs, will achieve a local maximum of performance: a temporary reprieve. Everything optimized will someday lose flexibility. Network landscapes will evolve, adversarial attacks will be refined, and the explanations, so meticulously crafted with SHAP values, will become brittle, indicators of past threats rather than guides to future ones. Scalability is just the word used to justify complexity.

The true challenge isn’t building a more accurate detector, but accepting that perfect detection is an illusion. The focus should move from explanation after the fact to cultivating a resilient system that anticipates, adapts, and gracefully degrades. Consider a shift toward systems designed for continuous self-assessment and active learning: networks that admit their own limitations and proactively seek new knowledge.

The perfect architecture is a myth that keeps us sane. Perhaps the most fruitful path lies not in building smarter systems, but in fostering a more nuanced understanding of the inherent trade-offs between accuracy, interpretability, and adaptability. This research provides a snapshot, a temporary stabilization within a constantly shifting ecosystem, and the real work begins with acknowledging the inevitable entropy.

Original article: https://arxiv.org/pdf/2602.13271.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 01:07