Author: Denis Avetisyan

A new approach combines the power of artificial reasoning with graph networks to identify fraudulent activity in complex, text-rich data.

Researchers introduce FraudCoT, a framework leveraging chain-of-thought prompting and co-training to enhance fraud detection on text-attributed graphs.

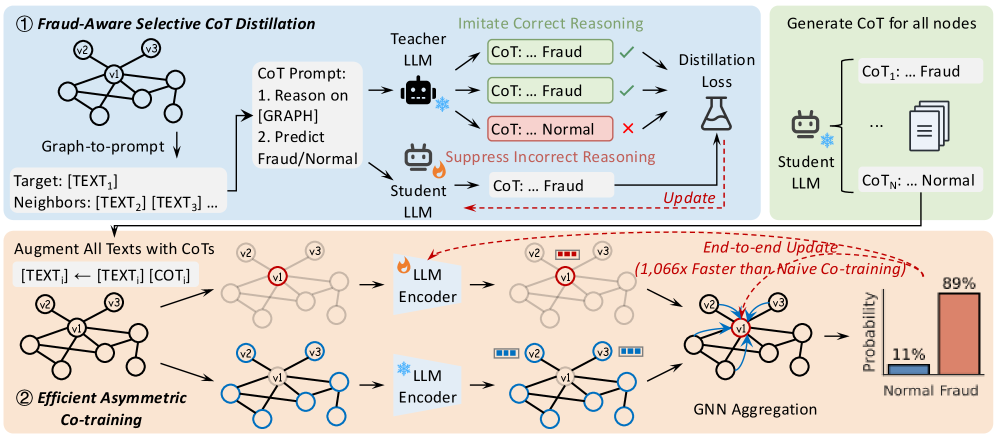

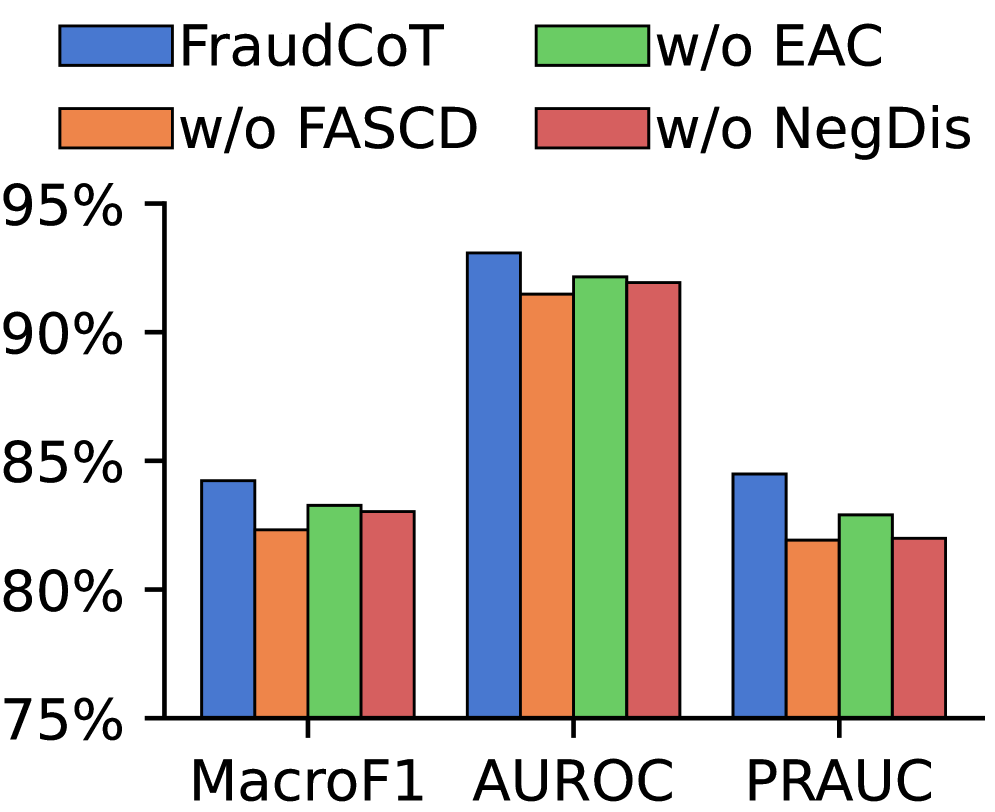

Effectively modeling both textual semantics and relational dependencies remains a significant challenge in graph-based fraud detection. This paper introduces ‘Autonomous Chain-of-Thought Distillation for Graph-Based Fraud Detection’, presenting FraudCoT, a framework that addresses this limitation through autonomous, graph-aware chain-of-thought reasoning and scalable co-training of large language models and graph neural networks. Experiments show substantial gains: up to an 8.8% AUPRC improvement over state-of-the-art methods and up to a 1,066x speedup in training throughput. Could this approach unlock a new paradigm for efficiently and accurately detecting fraudulent activity in complex, text-rich networks?

Navigating the Complexities of Modern Fraud

Conventional fraud detection systems, designed for simpler transactional data, are increasingly overwhelmed by the sheer volume and intricate relationships present in modern networks. These systems typically rely on identifying anomalies in isolated events, but today’s fraud often manifests as coordinated activity spread across numerous entities and interactions. The exponential growth of interconnected data – encompassing social networks, financial transactions, and online communications – creates a landscape where subtle fraudulent patterns are easily obscured. Consequently, rule-based systems become ineffective due to the vast number of potential combinations, while statistical models struggle to differentiate between legitimate complexity and malicious intent. This challenge necessitates a shift towards approaches capable of reasoning about relationships and patterns within the entire network structure, rather than focusing solely on individual data points.

Catching this kind of fraud means working with the data in its native form. Transactions, communications, and relationships form graph structures, and the entities in them often carry extensive textual detail; the telltale patterns are woven into that interconnected fabric rather than sitting in any single record. Simply matching known fraud signatures is therefore insufficient, and new approaches to both reasoning and representation are needed. Researchers are exploring graph neural networks, which learn intricate relationships across a network, alongside natural language processing models that extract subtle cues from the accompanying text. Combined, these methods aim to model underlying intent and behavior, enabling the detection of previously unseen schemes that exploit the complexity of interconnected systems instead of producing blatant anomalies.

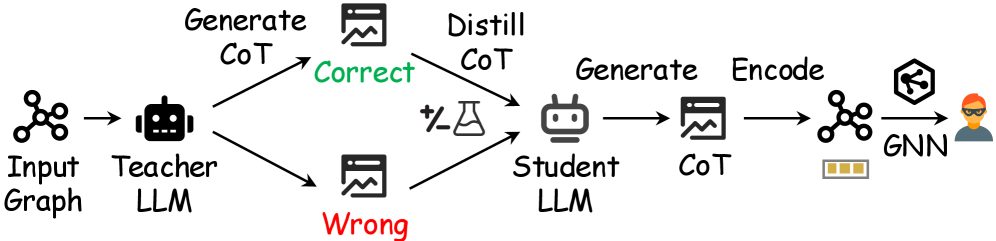

FraudCoT: A Framework for Relational Reasoning

FraudCoT establishes a unified framework for graph-based fraud detection by combining chain-of-thought (CoT) reasoning with co-training. Fraud scenarios are represented as text-attributed graphs, and Graph Neural Networks (GNNs) capture their relational structure by encoding node and edge features together with each node's neighborhood. A Large Language Model (LLM) generates CoT reasoning over a node's text and graph context, articulating why the node may or may not be fraudulent, and this reasoning enriches the representations the GNN operates on. Co-training then couples the two models, the GNN and the LLM, so that each benefits from the other's signal instead of being trained in isolation. The result is a fraud detection process that is more interpretable and, according to the authors' experiments, more accurate than traditional methods.
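To make the GNN side concrete, here is a minimal sketch of one message-passing layer in the style of GraphSAGE's mean aggregator, written in plain Python. It is a generic stand-in, not FraudCoT's backbone (which this summary does not specify): each node combines its own feature vector with the average of its neighbors' vectors. Edge features are omitted for brevity, and the identity weight matrices in the usage lines are illustrative placeholders rather than learned values.

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def mean_aggregate(features: Dict[str, Vector], neighbors: Dict[str, List[str]], node: str) -> Vector:
    """Average the feature vectors of a node's neighbors (zero vector if it has none)."""
    nbrs = neighbors.get(node, [])
    dim = len(features[node])
    if not nbrs:
        return [0.0] * dim
    return [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w_rc * x_c for w_rc, x_c in zip(row, x)) for row in w]


def sage_layer(
    features: Dict[str, Vector],
    neighbors: Dict[str, List[str]],
    w_self: Matrix,
    w_nbr: Matrix,
) -> Dict[str, Vector]:
    """One GraphSAGE-style layer: h_v' = ReLU(W_self h_v + W_nbr * mean of neighbor h_u)."""
    out = {}
    for node, h in features.items():
        agg = mean_aggregate(features, neighbors, node)
        pre = [a + b for a, b in zip(matvec(w_self, h), matvec(w_nbr, agg))]
        out[node] = [max(0.0, x) for x in pre]  # ReLU non-linearity
    return out


# Toy graph: node "a" is linked to "b" and "c".
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbors = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
identity = [[1.0, 0.0], [0.0, 1.0]]
h1 = sage_layer(features, neighbors, identity, identity)  # h1["a"] == [1.5, 1.0]
```

Stacking k such layers lets information from k-hop neighborhoods reach each node, which is how relational context enters the representation.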

FraudCoT leverages the complementary capabilities of Graph Neural Networks (GNNs) and Large Language Models (LLMs) to enhance fraud detection accuracy. GNNs effectively capture relational information within transaction networks, while LLMs provide reasoning abilities to interpret complex patterns and contextualize data. This integration enables FraudCoT to identify subtle fraud indicators often missed by traditional methods, resulting in a demonstrated improvement of up to 8.8% in Area Under the Precision-Recall Curve (AUPRC) when benchmarked against current state-of-the-art fraud detection baselines. This performance gain indicates a significant advancement in the framework’s ability to distinguish fraudulent activities from legitimate transactions.
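AUPRC is the metric behind the 8.8% figure. Because fraud is rare, precision-recall summaries are more informative than ROC AUC here: a random scorer's AUPRC equals the fraud rate rather than 0.5. The sketch below computes the common step-wise estimate (average precision). It is an illustration, not the paper's evaluation code, and the paper's exact estimator is not stated in this summary; scikit-learn's `average_precision_score` computes the same quantity when scores contain no ties.

```python
from typing import Sequence


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise AUPRC: mean of the precision measured at each positive's rank.

    Tied scores are broken by sort order, which is a simplification.
    """
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("AUPRC is undefined when there are no positive labels")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    total = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank  # precision at this cut-off
    return total / n_pos


# Two fraud cases (label 1) among four nodes.
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
ap = average_precision(labels, scores)  # (1/1 + 2/3) / 2 = 0.8333...
```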

Illuminating Decision Pathways with Chain-of-Thought

FraudCoT incorporates Chain-of-Thought (CoT) reasoning to provide justifications for its fraud predictions. This is achieved by prompting the model to articulate the steps taken to arrive at a given conclusion, effectively generating a textual explanation alongside the fraud score. These explanations detail the specific features and patterns that contributed to the prediction, such as unusual transaction amounts, atypical location data, or deviations from established user behavior. By making the reasoning process transparent, FraudCoT aims to improve model interpretability for human analysts and increase trust in its predictions, facilitating more informed decision-making and reducing the potential for false positives or missed fraudulent activities.
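A graph-aware CoT prompt has to expose both the node's own text and its neighborhood to the LLM. The function below is a hypothetical illustration of that idea: `build_cot_prompt`, its wording, and the `Verdict:` line convention are assumptions made for this sketch, not FraudCoT's actual prompt, which this summary does not reproduce. Capping the number of neighbors keeps the prompt within a context budget.

```python
from typing import List


def build_cot_prompt(target_text: str, neighbor_texts: List[str], max_neighbors: int = 5) -> str:
    """Assemble a hypothetical graph-aware chain-of-thought prompt for one node."""
    lines = [
        "You are reviewing an account for possible fraud.",
        f"Target node: {target_text}",
        "Connected nodes:",
    ]
    for i, text in enumerate(neighbor_texts[:max_neighbors], start=1):
        lines.append(f"  [{i}] {text}")
    hidden = len(neighbor_texts) - max_neighbors
    if hidden > 0:
        lines.append(f"  (+{hidden} more neighbors omitted)")
    # Ask for explicit step-by-step reasoning and a machine-readable final line.
    lines.append(
        "Think step by step about the target's own text and its connections, "
        "then end with exactly one line: 'Verdict: fraud' or 'Verdict: benign'."
    )
    return "\n".join(lines)


prompt = build_cot_prompt(
    "New seller account; 200 listings created within one hour.",
    ["Shares a device ID with a banned account.", "Buyer review: item never shipped."],
    max_neighbors=1,
)
print(prompt)
```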

CoT-Augmented Representations extend traditional node embedding techniques by incorporating reasoning paths generated through Chain-of-Thought prompting. Standard node embeddings capture information about a node’s features and immediate connections; however, CoT-Augmented Representations also encode the explanatory logic used to arrive at a prediction for that node. This is achieved by representing the CoT reasoning steps as additional feature vectors associated with the node, effectively capturing not only what is being predicted, but also why. The resulting representation provides a richer contextual understanding of the prediction, enabling more nuanced analysis and improved model interpretability compared to embeddings based solely on node attributes and graph structure.

Selective Chain-of-Thought (CoT) Distillation is a knowledge transfer technique designed to compress the reasoning abilities of large language models (LLMs) into smaller, more efficient “student” models. This process doesn’t simply transfer predictions; it focuses on distilling the reasoning process itself, represented by the generated CoT explanations. The “selective” aspect refers to a targeted distillation approach, prioritizing the most informative reasoning steps for transfer, as opposed to indiscriminately copying all generated text. This results in a student model capable of producing accurate predictions and generating concise, relevant explanations, achieving performance comparable to the larger LLM with significantly reduced computational cost and latency.
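The selection step can be sketched as a filter over several candidate rationales per node. The criterion used here (keep a rationale only if its final verdict agrees with the node's training label, drop duplicates, and cap the count at `k`) is a common heuristic in rationale distillation and is an assumption of this sketch; the paper's fraud-aware selection may score individual reasoning steps differently. The `Verdict:` line format is likewise hypothetical.

```python
import re
from typing import Dict, List, Optional

_VERDICT = re.compile(r"verdict:\s*(fraud|benign)", re.IGNORECASE)


def parse_verdict(rationale: str) -> Optional[int]:
    """Return 1 for 'fraud', 0 for 'benign', or None if no verdict line is found."""
    matches = _VERDICT.findall(rationale)
    if not matches:
        return None
    return 1 if matches[-1].lower() == "fraud" else 0  # the last verdict wins


def select_rationales(
    candidates: Dict[str, List[str]], labels: Dict[str, int], k: int = 2
) -> Dict[str, List[str]]:
    """Keep up to k distinct rationales per node whose verdict agrees with its label."""
    selected: Dict[str, List[str]] = {}
    for node, texts in candidates.items():
        kept: List[str] = []
        for text in texts:
            if parse_verdict(text) == labels[node] and text not in kept:
                kept.append(text)
            if len(kept) == k:
                break
        selected[node] = kept
    return selected


candidates = {
    "u1": [
        "Shared IP with banned users. Verdict: fraud",
        "Normal purchase history. Verdict: benign",
        "Shared IP with banned users. Verdict: fraud",
    ],
    "u2": ["No verdict given"],
}
selected = select_rationales(candidates, {"u1": 1, "u2": 0})
```

The surviving rationales would then serve as training targets for the smaller student model.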

Optimizing Efficiency Through Asymmetric Co-training

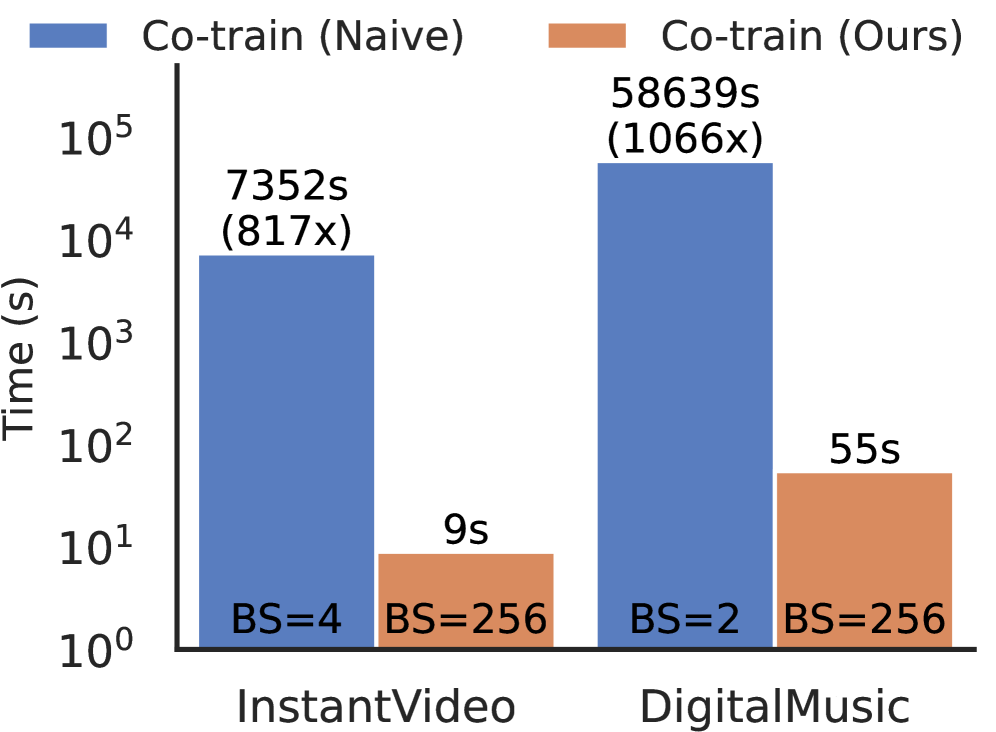

Asymmetric Co-training improves training efficiency on large graphs by separating the encoding processes for target nodes and their neighbors. Traditional co-training methods jointly encode both, creating a computational bottleneck as graph scale increases. This decoupling allows for independent encoding of these node sets, enabling parallelization and reducing the overall computational complexity. Specifically, the model maintains separate encoders for target nodes and neighbor nodes, processing them independently before feature aggregation. This architectural choice significantly reduces memory requirements and allows for faster training on graphs with millions or billions of nodes, without sacrificing representation quality.
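The asymmetry can be illustrated with a toy encoder that routes target nodes through an expensive encoder and neighbor nodes through a cheap one whose outputs are cached and reused. Everything here is hypothetical: in a real system the heavy encoder would presumably be the trainable LLM and the light one a frozen or periodically refreshed embedding table, but that division is an assumption, and the lambdas in the usage lines are placeholders. What the sketch does show is the cost structure: heavy calls grow with the number of targets, while each neighbor is encoded at most once.

```python
from typing import Callable, Dict, List

Vector = List[float]


class AsymmetricEncoder:
    """Hypothetical sketch: an expensive encoder for target nodes and a cached,
    cheap encoder for neighbor nodes. Both encoders are assumed to return
    vectors of the same length."""

    def __init__(self, heavy: Callable[[str], Vector], light: Callable[[str], Vector]) -> None:
        self.heavy = heavy
        self.light = light
        self.neighbor_cache: Dict[str, Vector] = {}
        self.heavy_calls = 0

    def encode_neighbor(self, node: str, text: str) -> Vector:
        # Each neighbor is encoded once and reused by every target that links to it.
        if node not in self.neighbor_cache:
            self.neighbor_cache[node] = self.light(text)
        return self.neighbor_cache[node]

    def encode_batch(
        self, targets: List[str], neighbors: Dict[str, List[str]], texts: Dict[str, str]
    ) -> Dict[str, Vector]:
        out: Dict[str, Vector] = {}
        for t in targets:
            self.heavy_calls += 1
            h_self = self.heavy(texts[t])  # only targets pay for the heavy encoder
            nbr_vecs = [self.encode_neighbor(n, texts[n]) for n in neighbors.get(t, [])]
            if nbr_vecs:
                h_nbr = [sum(col) / len(nbr_vecs) for col in zip(*nbr_vecs)]
            else:
                h_nbr = [0.0] * len(h_self)
            out[t] = h_self + h_nbr  # concatenate the self view and the mean neighbor view
        return out


texts = {"a": "aa", "b": "bbb", "c": "c"}
neighbors = {"a": ["b", "c"], "b": ["c"]}
enc = AsymmetricEncoder(heavy=lambda s: [float(len(s)), 1.0], light=lambda s: [float(len(s)), 0.0])
batch = enc.encode_batch(["a", "b"], neighbors, texts)
```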

Graph-structured data inherently contains relational information that can be exploited to improve model generalization, particularly in low-data regimes. Traditional machine learning models often treat data points as independent, ignoring these crucial relationships. By representing data as a graph, the model can leverage the connectivity patterns – identifying nodes with similar connections or roles – to infer labels for unseen nodes, even with limited labeled examples. This is achieved by propagating information across the graph, allowing the model to learn representations that capture both node features and their structural context. The efficacy of this approach stems from the principle that connected nodes are more likely to share similar labels, facilitating knowledge transfer and improving predictive accuracy when labeled data is scarce.
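Classic label propagation is the simplest algorithm built on this principle and a useful baseline for intuition; it is not FraudCoT's mechanism, which learns representations with a GNN instead. Labeled nodes keep their scores fixed, and every unlabeled node repeatedly takes the average score of its neighbors until the values settle. One caveat: fraudsters often connect deliberately to benign accounts to camouflage themselves, so the homophily assumption can fail on real fraud graphs.

```python
from typing import Dict, List


def propagate_labels(
    neighbors: Dict[str, List[str]], seeds: Dict[str, float], iters: int = 50
) -> Dict[str, float]:
    """Iterative label propagation: seed scores stay clamped, and each unlabeled
    node's score becomes the mean of its neighbors' scores. Every neighbor id
    must also appear as a key in `neighbors`."""
    scores = {n: seeds.get(n, 0.5) for n in neighbors}  # 0.5 = uninformed start
    for _ in range(iters):
        new: Dict[str, float] = {}
        for node, nbrs in neighbors.items():
            if node in seeds:
                new[node] = seeds[node]
            elif nbrs:
                new[node] = sum(scores[m] for m in nbrs) / len(nbrs)
            else:
                new[node] = scores[node]  # isolated node: nothing to propagate
        scores = new
    return scores


# Chain f - x - y - b, with f known fraudulent (1.0) and b known benign (0.0).
graph = {"f": ["x"], "x": ["f", "y"], "y": ["x", "b"], "b": ["y"]}
scores = propagate_labels(graph, {"f": 1.0, "b": 0.0})  # x -> 2/3, y -> 1/3
```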

The framework is trained end to end, with an Unlikelihood Loss added to the objective. Whereas standard cross-entropy only raises the probability of the correct output, an unlikelihood term explicitly lowers the probability the model assigns to undesired outputs; here it penalizes the model for settling on easily observed, non-fraudulent outcomes, concentrating learning on subtler fraudulent patterns. Unlike traditional co-training methods, which train the individual encoders separately, this approach optimizes all components jointly. Combined with the asymmetric encoding described above, this training strategy achieves up to a 1,066x speedup in training throughput over naive co-training in the authors' benchmarks, substantially reducing the time required to train on large datasets.
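The unlikelihood objective, introduced by Welleck et al. for neural text generation, adds a term that pushes down the probability of designated negative outputs: L = -log p(y) - alpha * sum over negatives c of log(1 - p(c)). This summary does not say which outputs FraudCoT treats as negatives or how the term is weighted, so in the sketch below the caller supplies both, and the two-class example is purely illustrative.

```python
import math
from typing import Dict, Iterable


def likelihood_unlikelihood_loss(
    probs: Dict[str, float],
    target: str,
    negatives: Iterable[str],
    alpha: float = 1.0,
    eps: float = 1e-12,
) -> float:
    """-log p(target) - alpha * sum over negatives c of log(1 - p(c)).

    `eps` guards against log(0) when a probability is exactly 0 or 1.
    """
    likelihood_term = -math.log(max(probs[target], eps))
    unlikelihood_term = -sum(
        math.log(max(1.0 - probs[c], eps)) for c in negatives if c != target
    )
    return likelihood_term + alpha * unlikelihood_term


uncertain = likelihood_unlikelihood_loss({"fraud": 0.5, "benign": 0.5}, "fraud", ["benign"])
confident = likelihood_unlikelihood_loss({"fraud": 0.8, "benign": 0.2}, "fraud", ["benign"])
```

With only two classes, the unlikelihood term on the wrong class reduces to another -log p(y), so the term earns its keep mainly when there are many candidate outputs, such as the tokens of a generated rationale.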

Towards a More Transparent and Resilient Future

FraudCoT represents a notable advancement in fraud detection, moving beyond traditional ‘black box’ systems toward greater resilience and clarity. Current methods often struggle with evolving tactics and cannot explain why a transaction was flagged as suspicious, which breeds distrust and makes errors harder to catch. FraudCoT addresses these shortcomings by integrating the structural relationships within the data with a semantic understanding of the transactions themselves. Each determination comes with a traceable reasoning process, which builds confidence in the system's outputs and supports more effective countermeasures against increasingly sophisticated fraud. Ultimately, FraudCoT is not only about identifying fraud; it aims to provide a more transparent and dependable foundation for secure digital interactions.

Where traditional fraud detection often relies on transaction amounts or isolated data points, FraudCoT integrates structural information (the relationships between accounts, transactions, and users) with semantic data that captures the meaning and context of each transaction. This combination lets the system surface subtle, complex patterns indicative of fraud that would otherwise remain hidden. For example, it can detect coordinated attacks spread across multiple seemingly unrelated accounts, or flag transactions disguised as legitimate purchases through fine-grained semantic analysis. By considering both ‘who is connected to whom’ and ‘what is actually happening’, FraudCoT offers a more holistic approach to uncovering sophisticated fraudulent schemes, with the aim of raising detection rates while keeping false positives low.

FraudCoT also does more than flag potentially fraudulent transactions: it articulates the rationale behind each assessment. These reasoning paths detail how a transaction was deemed suspicious, highlighting the specific features and relationships that triggered the alert. This interpretability fosters trust among stakeholders, including investigators and customers, because decisions are no longer opaque ‘black box’ outputs. Auditable reasoning paths also improve accountability, making it easier to refine the system, identify biases, and implement fraud prevention strategies that are more effective and easier to justify. Moving from detection alone to detection with explanation is a critical step toward robust and reliable financial security systems.

The presented framework, FraudCoT, embodies a philosophy of systemic evolution rather than wholesale reconstruction. It skillfully integrates chain-of-thought reasoning with graph neural networks, recognizing that effective fraud detection isn’t solely about isolated data points, but understanding the relationships within text-attributed graphs. This approach mirrors the principle that infrastructure should evolve without rebuilding the entire block. As Tim Berners-Lee aptly stated, “The web is more a social creation than a technical one.” FraudCoT acknowledges this social aspect by leveraging textual data alongside graph structures, creating a system where reasoning and connection enhance detection capabilities – a testament to structure dictating behavior within a complex network.

What Lies Ahead?

The pursuit of robust fraud detection on text-attributed graphs inevitably reveals a fundamental tension: the desire for explainability clashes with the opacity of increasingly complex models. FraudCoT offers a promising step toward bridging this gap through chain-of-thought reasoning, yet the true cost of this interpretability remains to be fully tallied. The framework’s efficacy, while demonstrated, is predicated on the quality of the large language model employed; a dependence that introduces its own vulnerabilities and biases. Future work must address the potential for adversarial manipulation of these reasoning pathways, and the propagation of flawed logic from the LLM to the graph neural network.

A critical consideration lies in scaling these methods. The current architecture, reliant on co-training, introduces computational overhead. Simplification will be tempting, but every such streamlining carries the risk of diminishing the expressive power needed to capture the nuanced patterns of fraudulent activity. The field must explore more efficient distillation techniques, perhaps leveraging knowledge graphs to provide a structured foundation for reasoning, rather than relying solely on the LLM’s implicit understanding.

Ultimately, the goal is not simply to detect fraud, but to understand its evolving nature. This demands a shift from static models to adaptive systems capable of learning from new data and adjusting their reasoning processes accordingly. FraudCoT’s strength lies in its potential to formalize this reasoning; the challenge now is to build a system that can truly learn from its own deductions, and anticipate the next iteration of deception.

Original article: https://arxiv.org/pdf/2601.22949.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-02 19:37