Author: Denis Avetisyan

A new machine learning framework, Tubo, dramatically improves the accuracy of network traffic predictions, paving the way for more efficient traffic engineering and resource allocation.

Tubo dynamically selects forecasting models and quantifies uncertainty to handle traffic bursts and enhance demand matrix prediction.

Despite advances in deep learning for time series forecasting, reliably predicting network traffic remains challenging due to its inherent burstiness and complex patterns. This paper introduces TUBO: A Tailored ML Framework for Reliable Network Traffic Forecasting, a novel machine learning approach designed to address these limitations. TUBO achieves significant improvements in forecasting accuracy, outperforming existing methods by up to four times, through burst processing and adaptive model selection, while also quantifying prediction uncertainty. By enabling more proactive traffic engineering, can TUBO unlock substantial gains in network performance and resilience?

The Inherent Fragility of Network Prediction

Conventional network forecasting techniques frequently falter when confronted with the realities of modern traffic, which is characterized by rapid shifts and sudden, often unpredictable, surges in demand. These methods, typically relying on historical averages or simplistic models, struggle to accurately anticipate these dynamic patterns, resulting in a mismatch between allocated resources and actual needs. Consequently, network operators often over-provision to accommodate peak bursts, leading to wasted capacity and increased operational costs, or under-provision, which manifests as congestion, packet loss, and a degraded quality of service for end-users. This inherent inability to effectively manage fluctuating traffic patterns highlights a critical limitation in current network management strategies and necessitates the development of more adaptive and responsive forecasting approaches.

Effective Traffic Engineering (TE) hinges fundamentally on the ability to predict network demand with precision; the demand matrix, representing the volume of traffic between various network nodes, serves as its core input. Accurate forecasting allows network operators to proactively allocate resources – bandwidth, processing power, and buffer space – minimizing congestion, reducing latency, and maximizing throughput. Without a reliable demand matrix, TE strategies become reactive rather than proactive, leading to inefficient resource utilization and a degraded user experience. Sophisticated algorithms and machine learning techniques are increasingly employed to refine these forecasts, accounting for temporal patterns, application-specific demands, and even external factors like time of day or special events, ultimately driving substantial improvements in overall network performance and cost efficiency.

Current network demand forecasting techniques frequently stumble when confronted with the intricate realities of data traffic. These methods, often relying on historical averages or simplified models, struggle to account for the non-stationary and highly variable nature of modern network usage: consider the sudden surges from streaming video, the unpredictable demands of cloud computing, or the impact of global events. This inadequacy stems from a failure to incorporate the many influencing factors, including user mobility, application diversity, and the complex interplay between network protocols. Consequently, resources are often misallocated, leading to congestion, performance degradation, and a diminished quality of service. A more resilient and adaptive forecasting approach is therefore essential to keep pace with evolving network demand.

Tubo: A Framework for Navigating Network Volatility

The Tubo framework utilizes a machine learning approach to predict network demand matrices, addressing the inherent challenges of fluctuating traffic patterns and burstiness common in modern networks. Traditional forecasting methods often struggle with non-stationary time series data; Tubo employs algorithms designed to adapt to these dynamic conditions. This is achieved through continuous learning and model refinement based on observed traffic, enabling more accurate short-term and long-term predictions of bandwidth requirements. The framework’s architecture is specifically engineered to handle the irregular spikes and sudden increases in traffic volume – termed “burstiness” – that can significantly impact network performance and resource allocation. By directly modeling and accounting for these dynamic elements, Tubo aims to improve the reliability and efficiency of network operations.

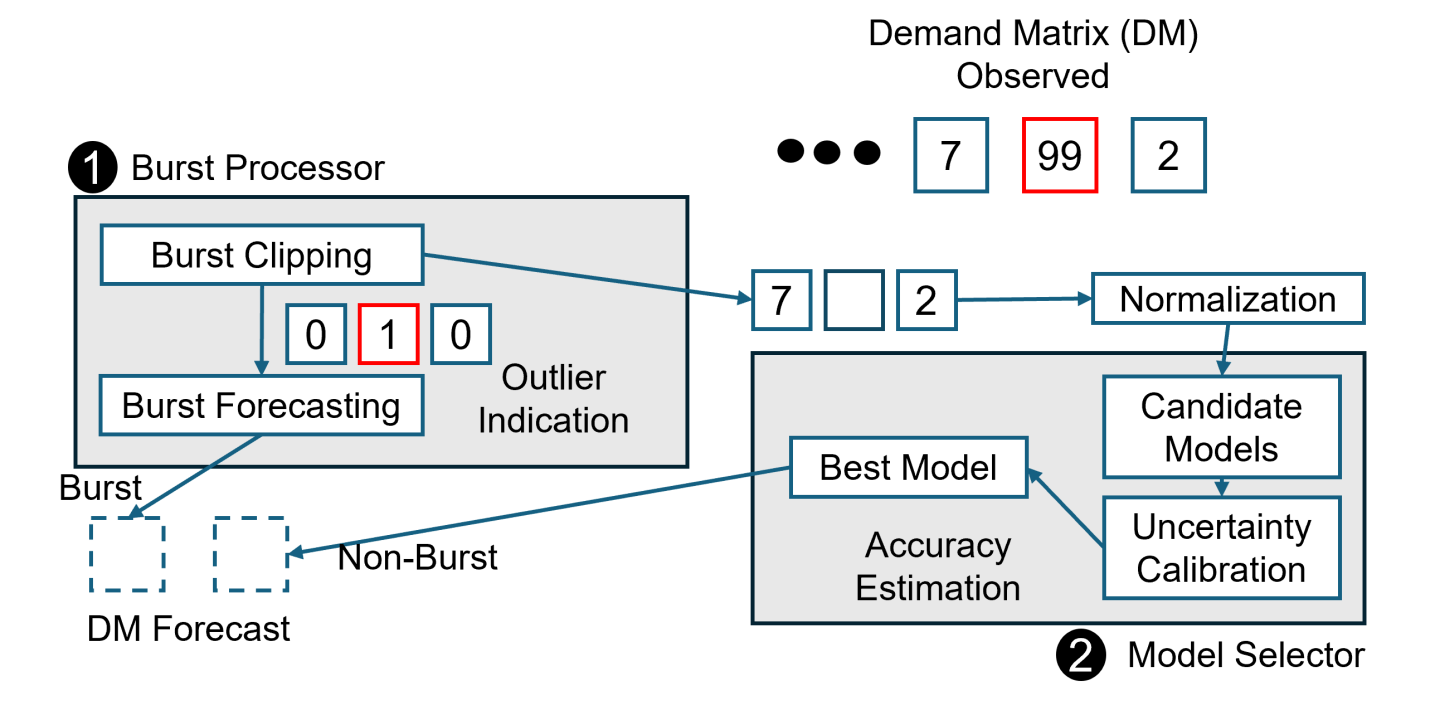

Intelligent Burst Processing within the Tubo framework functions by identifying and isolating anomalous traffic data points – termed “bursts” – that deviate significantly from established patterns. This isolation is achieved through a statistical outlier detection mechanism, employing techniques such as z-score analysis and interquartile range (IQR) calculations to dynamically define burst thresholds. By excluding these bursts from the training data used to generate forecasts, Tubo mitigates their distorting influence, leading to improved model accuracy and stability, particularly during periods of unpredictable network demand. The isolated burst data is not discarded, however; it is retained for separate analysis and can be used to inform real-time network adaptation strategies.
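
The thresholding idea described above can be sketched in a few lines of Python. This is a minimal illustration, not Tubo's actual implementation: the function names, the `k=1.5` IQR multiplier, the z-score cutoff of 3.0, and the sample `traffic` series are all assumptions chosen for the example.

```python
import statistics

def iqr_burst_mask(series, k=1.5):
    """Flag points above Q3 + k * IQR as bursts (k is an assumed setting)."""
    q1, _, q3 = statistics.quantiles(series, n=4)
    upper = q3 + k * (q3 - q1)
    return [x > upper for x in series]

def zscore_burst_mask(series, threshold=3.0):
    """Flag points whose z-score exceeds the threshold (assumed cutoff)."""
    mean = statistics.fmean(series)
    std = statistics.pstdev(series)
    if std == 0:
        return [False] * len(series)
    return [(x - mean) / std > threshold for x in series]

def split_bursts(series, mask):
    """Separate baseline samples from bursts; bursts keep their time index."""
    baseline = [x for x, is_burst in zip(series, mask) if not is_burst]
    bursts = [(i, x) for i, (x, is_burst) in enumerate(zip(series, mask)) if is_burst]
    return baseline, bursts

# Hypothetical traffic volumes for one origin-destination pair.
traffic = [10, 11, 9, 10, 12, 11, 95, 10, 9, 11, 10, 12]
baseline, bursts = split_bursts(traffic, iqr_burst_mask(traffic))
```

Here `baseline` would feed the forecaster, while `bursts` is kept aside with its timestamps, mirroring the point that isolated burst data is retained rather than discarded.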

Tubo’s dynamic Model Selection component continuously evaluates incoming network demand data and automatically selects the forecasting model best suited to current conditions. This process utilizes a suite of pre-trained models, including statistical methods like ARIMA and machine learning algorithms such as Gradient Boosted Trees and Recurrent Neural Networks. Selection is based on a rolling performance metric, calculated from recent forecast errors, and re-evaluated at defined intervals. The system prioritizes models demonstrating the lowest Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE) on the validation set, enabling adaptation to shifts in traffic patterns and minimizing overall forecasting error without manual intervention.
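
A rolling-error selector of the kind described above might look like the following sketch. The class name `RollingSelector`, the window length, and the toy predictions are hypothetical; the model labels are plain strings, and only RMSE is tracked here for brevity.

```python
from collections import deque
import math

class RollingSelector:
    """Track recent forecast errors per model and pick the lowest rolling RMSE."""

    def __init__(self, model_names, window=24):
        # One bounded error history per model; old errors fall out automatically.
        self.errors = {name: deque(maxlen=window) for name in model_names}

    def update(self, name, predicted, actual):
        self.errors[name].append(predicted - actual)

    def rmse(self, name):
        errs = self.errors[name]
        if not errs:
            return math.inf  # a model with no history is never preferred
        return math.sqrt(sum(e * e for e in errs) / len(errs))

    def best(self):
        return min(self.errors, key=self.rmse)

# Toy run: model "a" is accurate early on, model "b" is accurate later.
selector = RollingSelector(["a", "b"], window=3)
actuals = [100, 100, 100, 100, 100, 100]
preds_a = [101, 99, 100, 120, 130, 125]
preds_b = [110, 90, 115, 101, 100, 99]

choices = []
for actual, pa, pb in zip(actuals, preds_a, preds_b):
    selector.update("a", pa, actual)
    selector.update("b", pb, actual)
    choices.append(selector.best())
```

Because the window is bounded, the selection switches from "a" to "b" once the older, favorable errors of "a" age out, which is the adaptation behavior the paragraph describes.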

Validating Tubo’s Resilience with Real-World Signals

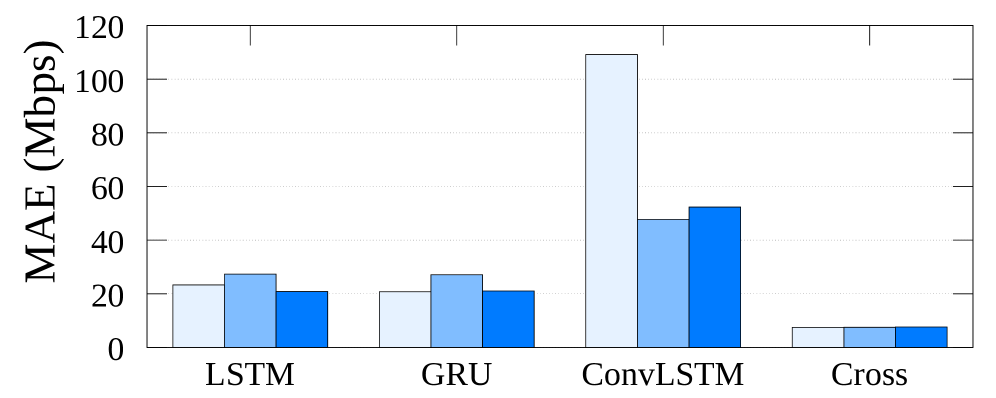

Tubo’s performance evaluation leveraged three publicly available network datasets: the Abilene Dataset, representing traffic data from a US backbone network; the GEANT Dataset, sourced from a European research network; and the CERNET Dataset, originating from the China Education and Research Network. These datasets provided realistic network traffic patterns and volumes for testing Tubo’s algorithms under varying conditions. The Abilene dataset consists of approximately 100 million flow records collected between January 2000 and December 2002, while the GEANT dataset offers data captured from the GEANT network between 2006 and 2008. The CERNET dataset, collected from 2017 to 2018, offers a more recent representation of network traffic characteristics, allowing for a comprehensive assessment of Tubo’s adaptability to modern network environments.

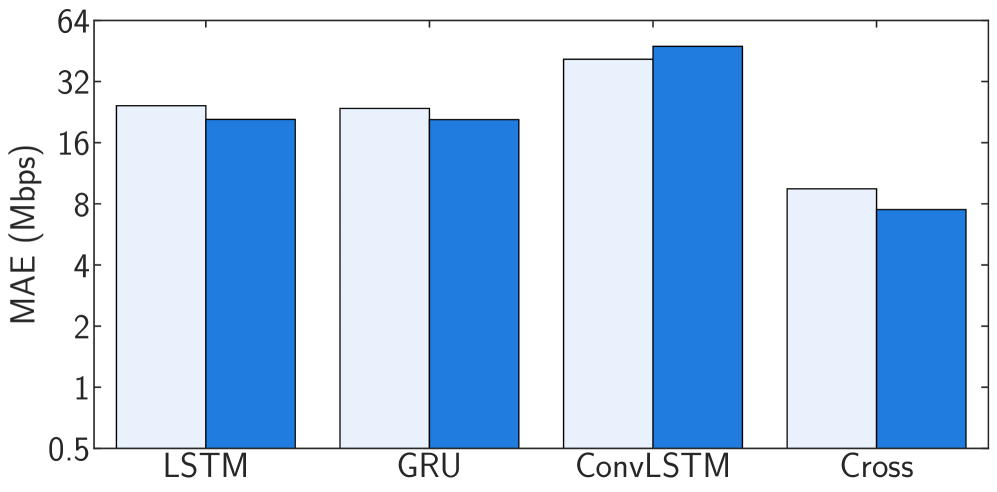

Tubo’s Model Selection component incorporated a comparative analysis of forecasting models, evaluating both transformer-based architectures and established recurrent neural networks. Specifically, the Crossformer model, representing a transformer-based approach, was assessed alongside ConvLSTM, a widely utilized convolutional Long Short-Term Memory network. This evaluation aimed to determine the optimal model for network performance prediction, considering factors such as accuracy and computational efficiency. The selection process facilitated the identification of the most suitable model for different network conditions and forecasting horizons within the Tubo system.

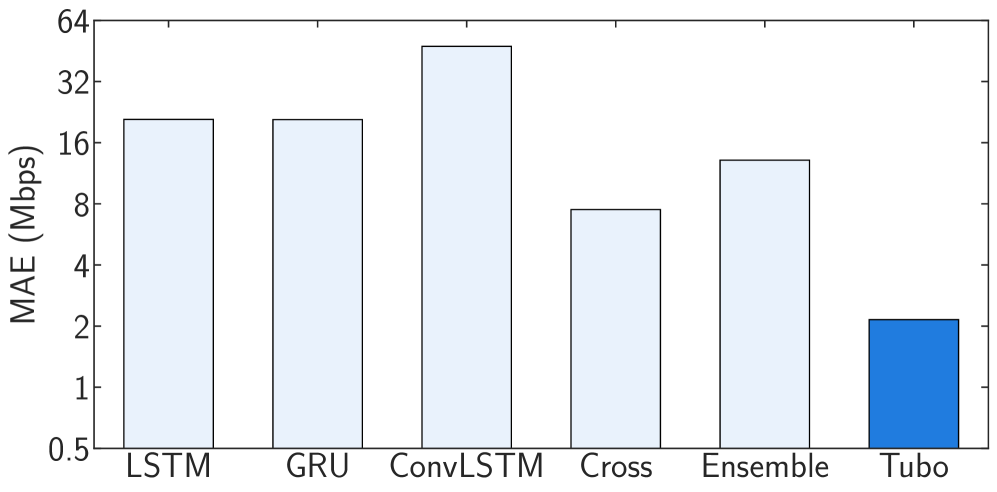

Tubo’s demand forecasting capabilities, when evaluated against established methodologies, exhibit a substantial performance gain, achieving up to a 4x reduction in Mean Absolute Error (MAE). This improved accuracy directly translates to enhanced network throughput; traffic engineering implementations utilizing Tubo’s forecasts demonstrate up to a 9x increase in throughput compared to systems employing traditional oblivious routing techniques. These gains were realized through rigorous testing and validation using established network datasets.
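
To make the "4x reduction in MAE" concrete, the sketch below computes MAE over a small demand matrix and compares two forecasts. The matrices are invented toy numbers for illustration only and are not results from the paper; the function name `mae` is likewise an assumption.

```python
def mae(predicted, actual):
    """Mean absolute error over all cells of two equally shaped matrices."""
    diffs = [
        abs(p - a)
        for pred_row, act_row in zip(predicted, actual)
        for p, a in zip(pred_row, act_row)
    ]
    return sum(diffs) / len(diffs)

# Toy 2x2 demand matrices (traffic volume between two nodes).
actual = [[0.0, 40.0], [20.0, 0.0]]
baseline_forecast = [[0.0, 48.0], [12.0, 0.0]]   # per-cell errors: 0, 8, 8, 0
improved_forecast = [[0.0, 42.0], [18.0, 0.0]]   # per-cell errors: 0, 2, 2, 0

reduction = mae(baseline_forecast, actual) / mae(improved_forecast, actual)
print(reduction)  # 4.0
```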

The Implications of Robust Forecasting: Towards Adaptive Networks

Tubo demonstrates a high degree of accuracy in identifying and predicting network bursts, a critical function for maintaining stable traffic forecasting. Evaluated against two prominent datasets – GEANT and Abilene – the system achieves a Burst Identification Rate of 94.35% and 93.89% respectively. This metric underscores Tubo’s capability to effectively distinguish genuine bursts from typical network fluctuations, allowing for more precise demand matrix predictions. The consistently high rates across diverse network environments suggest a robust and adaptable algorithm capable of handling the unpredictable nature of internet traffic and proactively mitigating potential disruptions caused by sudden surges in data volume.

Tubo’s innovative Burst Processing component significantly refines demand matrix forecasting by specifically identifying and segregating transient burst traffic from baseline network activity. Traditional forecasting methods often struggle with the unpredictable nature of bursts, leading to inaccurate predictions and potentially inefficient resource allocation. By isolating these short-lived, high-volume traffic spikes, Tubo ensures that forecasts are based on more stable and representative data. This isolation process minimizes the distorting effects of bursts, resulting in forecasts that exhibit improved stability and reliability – crucial for proactive network management and optimized performance. The component effectively filters noise from the signal, providing a clearer and more accurate picture of underlying traffic patterns and enabling more effective network planning.

To bolster the reliability of network demand predictions, Tubo employs statistical calibration techniques, notably Monte Carlo Dropout. This method doesn’t simply provide a single forecast value, but rather generates a distribution of possible outcomes, effectively quantifying the uncertainty inherent in the prediction. By running the forecasting model multiple times with randomly ‘dropped’ (deactivated) neurons, a range of potential demand matrix values is created. This allows for a more nuanced understanding of likely network behavior, moving beyond point estimates to provide confidence intervals and probabilistic forecasts. Consequently, network operators gain a clearer picture of potential risks and can proactively adjust resources, significantly enhancing the robustness of network planning and operation even when faced with unpredictable traffic patterns.
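
The sampling loop behind Monte Carlo Dropout can be illustrated with a deliberately tiny stand-in model. In this sketch a single linear unit with dropout on its inputs replaces the neural forecaster; the weights, dropout rate, number of passes, and function names are all assumptions, and a real deployment would keep dropout active at inference time inside a deep learning framework instead.

```python
import random
import statistics

def forward_with_dropout(x, weights, bias, p_drop, rng):
    """One stochastic forward pass: each input is dropped with probability p_drop."""
    keep = 1.0 - p_drop
    total = bias
    for xi, wi in zip(x, weights):
        if rng.random() < keep:
            total += (xi * wi) / keep  # inverted-dropout rescaling
    return total

def mc_dropout_predict(x, weights, bias, p_drop=0.2, passes=200, seed=0):
    """Repeat stochastic passes and summarize the resulting distribution."""
    rng = random.Random(seed)
    samples = sorted(
        forward_with_dropout(x, weights, bias, p_drop, rng) for _ in range(passes)
    )
    mean = statistics.fmean(samples)
    std = statistics.stdev(samples)
    # Empirical interval from roughly the 5th to the 95th percentile of the samples.
    interval = (samples[int(0.05 * passes)], samples[int(0.95 * passes) - 1])
    return mean, std, interval

# Hypothetical features and weights for one demand-matrix entry.
mean, std, (low, high) = mc_dropout_predict(
    x=[1.0, 2.0, 3.0, 4.0], weights=[0.5, 0.5, 0.5, 0.5], bias=0.0
)
```

The spread between `low` and `high` is what an operator could use as a safety margin when provisioning capacity, rather than trusting the point estimate `mean` alone.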

The pursuit of accurate network traffic forecasting, as detailed in this work with Tubo, highlights a fundamental truth about complex systems: simplification invariably introduces future costs. Tubo’s adaptive model selection, while improving immediate accuracy, represents a calculated trade-off: a choice of models optimized for current conditions, yet potentially vulnerable as traffic patterns evolve. As Donald Knuth observed, “Premature optimization is the root of all evil,” and Tubo’s approach, while not premature, implicitly acknowledges that each chosen simplification (each model selected) creates a form of ‘technical debt’ within the forecasting system. The framework’s strength lies not in eliminating this debt, but in managing it through continuous adaptation and uncertainty quantification, ensuring the system ages gracefully despite inevitable decay.

What’s Next?

The pursuit of accurate network demand forecasting, as exemplified by Tubo, is less about conquering uncertainty and more about achieving a temporary reprieve from its inevitable return. Each improvement in model selection and burst detection merely postpones the moment when unforeseen traffic patterns disrupt the engineered harmony. Technical debt, in this context, is analogous to erosion; gains are made against a constant force of degradation. The framework’s success hinges on adaptive model selection, yet the very notion of a ‘best’ model implies a static ideal within a dynamic system, a contradiction inherent to all predictive endeavors.

Future work will undoubtedly focus on expanding the scope of uncertainty quantification. However, a truly robust approach requires acknowledging the limits of predictability. Rather than striving for ever-finer resolution, the field might benefit from exploring methods that embrace controlled degradation-systems designed to fail gracefully, to redistribute load intelligently even in the face of inaccurate forecasts. Uptime, after all, is a rare phase of temporal harmony, not a permanent state.

The long-term challenge lies in shifting the focus from prediction to resilience. Can network infrastructure evolve beyond anticipating demand and instead learn to absorb and adapt to its inherent unpredictability? The answer likely resides not in more complex algorithms, but in a fundamental reimagining of network architecture itself, one that treats fluctuation not as a problem to be solved, but as a natural and ultimately unavoidable characteristic of the system.

Original article: https://arxiv.org/pdf/2602.11759.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-15 22:27