Author: Denis Avetisyan

New research demonstrates the power of advanced time series models to accurately forecast day-ahead electricity prices, even in highly dynamic environments.

Time Series Foundation Models, combined with spike regularization and multivariate forecasting, significantly improve accuracy for Singapore’s electricity market.

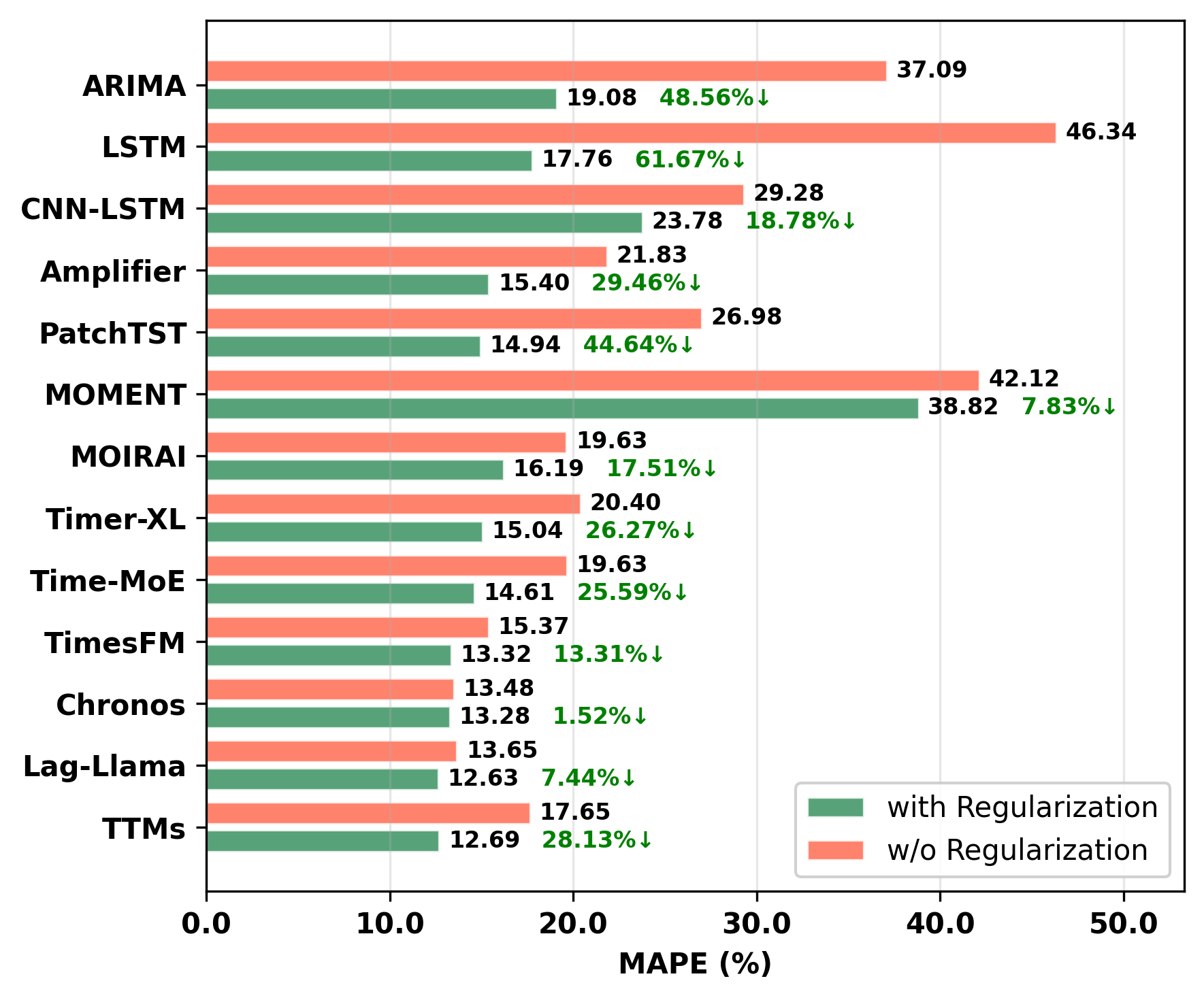

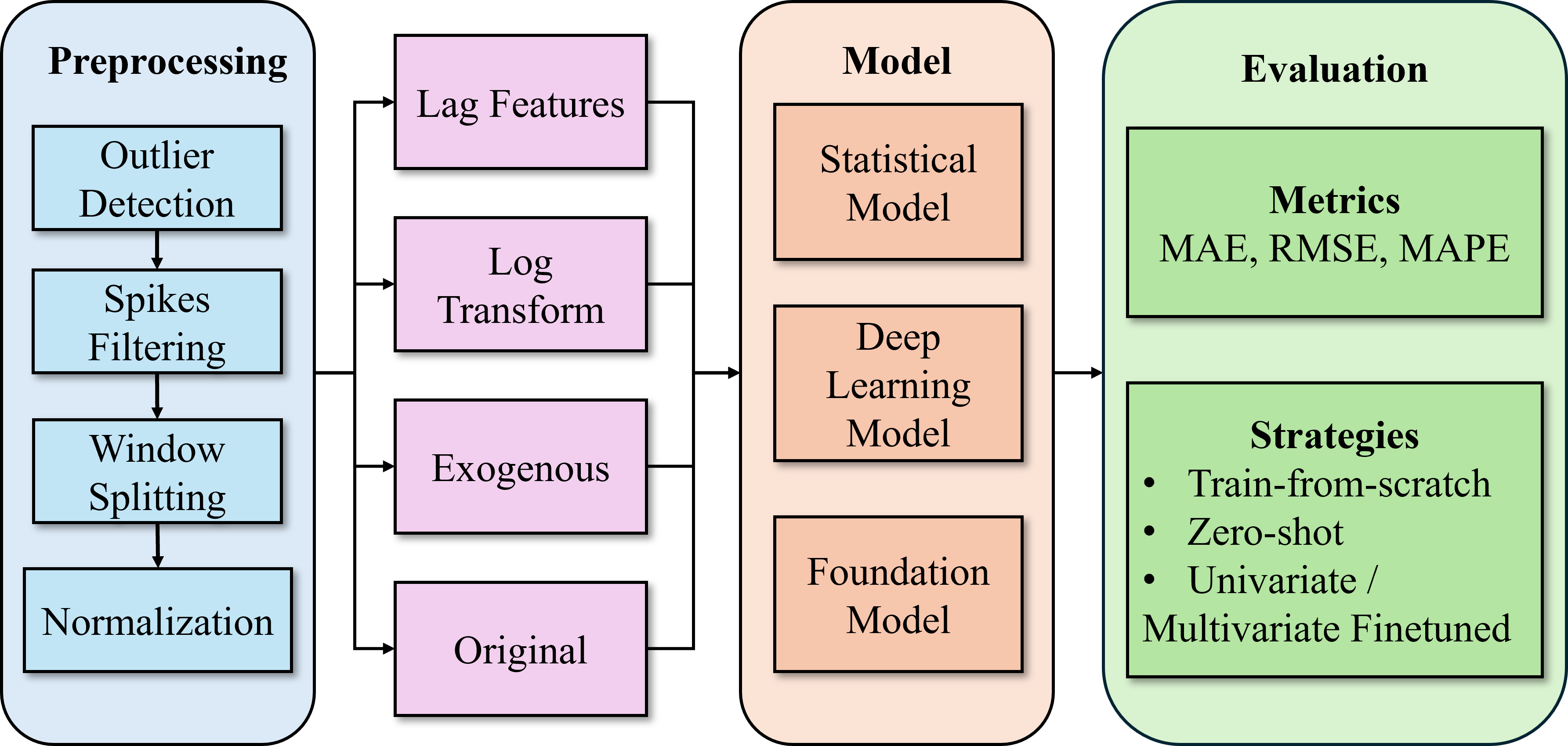

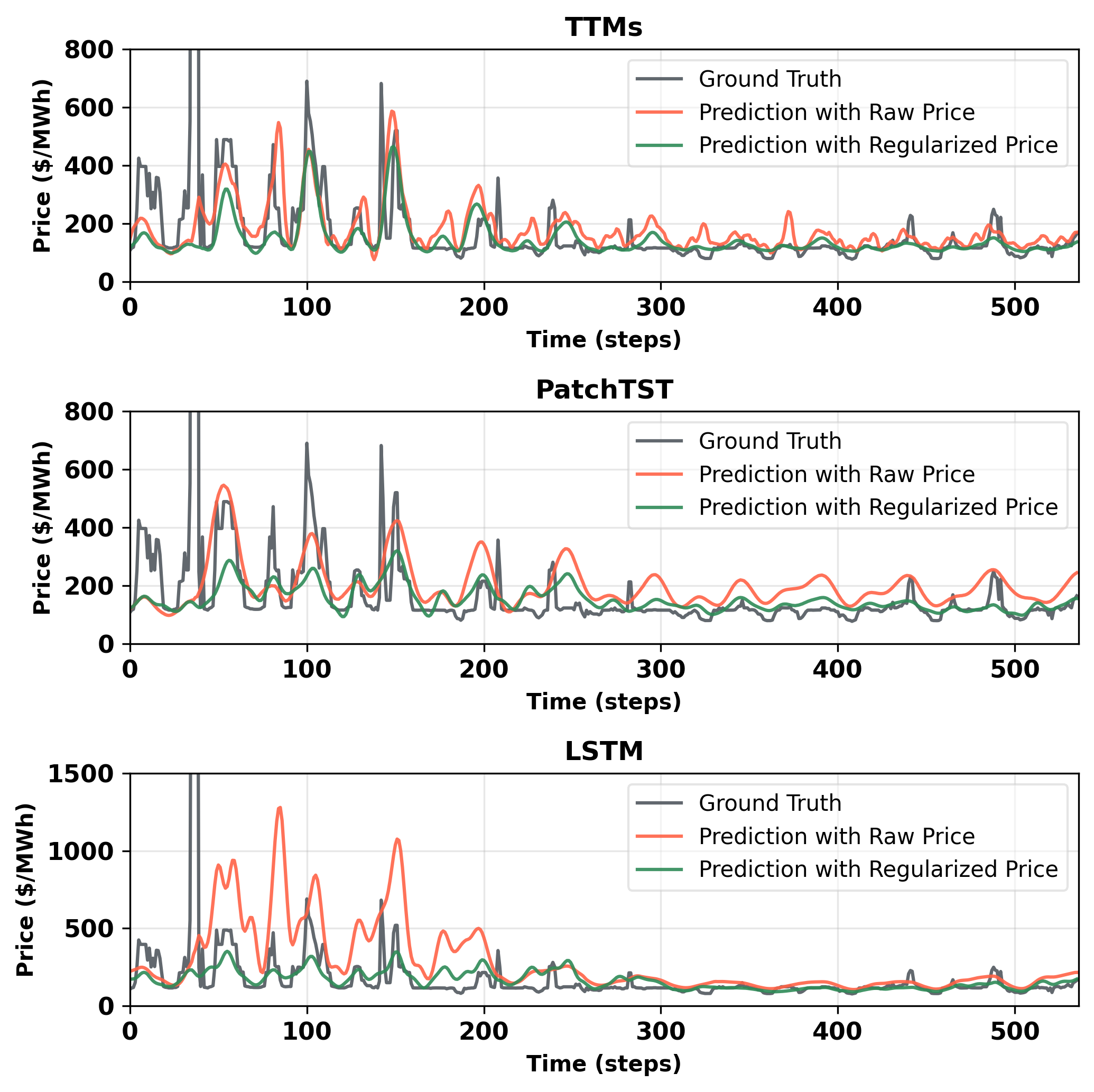

Accurate electricity price forecasting remains a persistent challenge as markets grow more complex and volatile. This is addressed in ‘Day-Ahead Electricity Price Forecasting for Volatile Markets Using Foundation Models with Regularization Strategy’, which investigates the application of time series foundation models (TSFMs) to enhance day-ahead price predictions in Singapore’s dynamic market. Results demonstrate that TSFMs, when combined with a spike regularization strategy and exogenous factors, consistently outperform traditional statistical and deep learning approaches, achieving up to 37.4% improvement in forecast accuracy. Could these findings pave the way for more robust and reliable energy market decision-making in increasingly volatile global landscapes?

The Challenge of Precise Energy Prediction

The seamless functioning of modern electricity markets, particularly in a densely interconnected hub like Singapore, hinges on the ability to accurately predict day-ahead electricity prices. These forecasts are not merely academic exercises; they are the foundational elements upon which energy suppliers, retailers, and consumers base critical operational decisions. Precise predictions enable efficient resource allocation, minimize financial risks associated with price fluctuations, and ultimately reduce costs for end-users. Without reliable forecasts, market participants are forced to operate with increased uncertainty, potentially leading to over-generation, under-generation, or costly imbalances that disrupt grid stability and erode consumer trust. Therefore, advancements in forecasting methodologies represent a vital component in securing a resilient and economically viable energy future, extending beyond Singapore to benefit interconnected regional markets.

Electricity markets, particularly in dynamic economies like Singapore, present a forecasting challenge that often exceeds the capabilities of conventional statistical models. Techniques like Autoregressive Integrated Moving Average (ARIMA) and Generalized Autoregressive Conditional Heteroskedasticity (GARCH), while foundational, frequently stumble when confronted with the non-linear relationships and rapid fluctuations characteristic of energy pricing. These models assume a degree of stability and predictability that simply doesn’t exist in markets influenced by intermittent renewable sources, geopolitical events, and even localized weather patterns. The inherent volatility – periods of high price swings followed by relative calm – isn’t adequately captured by their linear structures, leading to persistent forecast errors and hindering optimal decision-making for energy providers and consumers alike. Consequently, researchers are increasingly exploring more advanced methodologies, such as machine learning algorithms, to better model these intricate market behaviors and improve the precision of day-ahead price predictions.

The growing dependence on imported natural gas introduces significant volatility to Singapore’s energy market, as prices are susceptible to geopolitical events and fluctuations in global supply chains. Simultaneously, weather patterns exert a powerful influence on electricity demand – increased temperatures drive up air conditioning usage, while cloud cover impacts solar energy generation. These interconnected external factors render traditional forecasting models inadequate; their linear structures struggle to accommodate the non-linear relationships between gas prices, weather conditions, and electricity demand. Consequently, advanced analytical techniques – encompassing machine learning algorithms and probabilistic forecasting – are increasingly vital to navigate these complexities and provide reliable, short-term energy price predictions, ensuring grid stability and efficient market operation.

Deep Learning’s Potential for Modeling Energy Price

Deep learning architectures, specifically Convolutional Neural Networks combined with Long Short-Term Memory networks (CNN-LSTM) and bidirectional LSTMs, demonstrate an ability to model the non-linear and temporally-dependent characteristics inherent in electricity price data. These models utilize convolutional layers to extract relevant features from price series, while LSTM layers effectively capture long-range dependencies that influence future price movements. Bidirectional LSTMs further enhance this capability by processing the time series in both forward and reverse directions, allowing the model to consider both past and future contextual information when making predictions. This approach contrasts with traditional time series methods that often struggle with the complexities and volatility present in electricity markets.

Deep learning models applied to electricity price forecasting, while effective at capturing temporal dependencies, present practical limitations regarding computational resources and data requirements. The complex architectures of models such as CNN-LSTMs and bidirectional LSTMs necessitate substantial processing power for both training and inference. Furthermore, these models typically require large volumes of historical data to avoid overfitting and achieve reliable predictive accuracy. This dependence on extensive datasets and high computational cost hinders their scalability to markets with limited historical data availability or restricts their deployment on resource-constrained platforms, impacting real-time adaptability and widespread implementation.

Implementation of logarithmic transformation and lag features demonstrably improves the accuracy of electricity price forecasting models. Logarithmic transformation stabilizes variance in the data, addressing heteroscedasticity common in financial time series and facilitating more stable model training. Specifically, this technique resulted in a 9.64% reduction in Mean Absolute Percentage Error (MAPE). The incorporation of lag features, representing past values of the electricity price, introduces historical patterns into the model, providing additional predictive power and contributing a further 3.80% reduction in MAPE. These techniques, when combined, offer a practical approach to enhancing model performance without requiring substantial architectural changes or increased computational complexity.
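The two preprocessing steps described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the paper's pipeline: the function names, the `offset` guard for near-zero prices, the choice of lags, and the sample prices are all assumptions made for the example.

```python
import math

def log_transform(prices, offset=1.0):
    """Compress large values with log(price + offset).

    `offset` is an assumed guard against zero prices; it must keep
    price + offset strictly positive.
    """
    return [math.log(p + offset) for p in prices]

def inverse_log_transform(values, offset=1.0):
    """Map transformed values (or forecasts) back to the price scale."""
    return [math.exp(v) - offset for v in values]

def make_lag_rows(series, lags):
    """Build (features, target) pairs where features are past values.

    For each time step t, the features are series[t - lag] for every
    lag in `lags`, and the target is series[t].
    """
    max_lag = max(lags)
    rows = []
    for t in range(max_lag, len(series)):
        features = [series[t - lag] for lag in lags]
        rows.append((features, series[t]))
    return rows

# Made-up prices with one spike, purely for illustration.
prices = [100.0, 120.0, 95.0, 400.0, 110.0, 105.0]
logged = log_transform(prices)
rows = make_lag_rows(logged, lags=[1, 2])
restored = inverse_log_transform(logged)
```

Any model trained on `rows` forecasts in log space, so its outputs would need to pass through `inverse_log_transform` before being compared with actual prices.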

The Rise of Foundation Models in Time Series Forecasting

Recent advancements in time series forecasting introduce foundation models – exemplified by architectures such as Time-MoE, MOMENT, TimesFM, MOIRAI, and TTMs – that leverage pre-training on extensive datasets to achieve improved performance. Unlike traditional deep learning methods trained from scratch for each specific forecasting task, these models are initially trained on a large corpus of time series data, allowing them to learn general temporal patterns and dependencies. This pre-training phase enables the models to generalize more effectively to unseen data and reduces the amount of labeled, task-specific data required for subsequent fine-tuning or adaptation. The use of massive datasets, often incorporating diverse time series from various domains, is a key characteristic differentiating these foundation models from prior approaches and driving their improved capabilities.

Time Series Foundation Models (TSFMs) exhibit enhanced generalization capabilities and reduced reliance on task-specific training data due to their pre-training on extensive and diverse time series datasets. Traditional deep learning models for time series forecasting typically require substantial labeled data for each individual forecasting task, leading to overfitting and poor performance on unseen data. In contrast, TSFMs leverage the knowledge acquired during pre-training to adapt more effectively to new time series with limited labeled examples. This is achieved by learning robust temporal representations and patterns that are transferable across different datasets and forecasting horizons, thereby minimizing the need for extensive fine-tuning on task-specific data and improving out-of-sample accuracy.

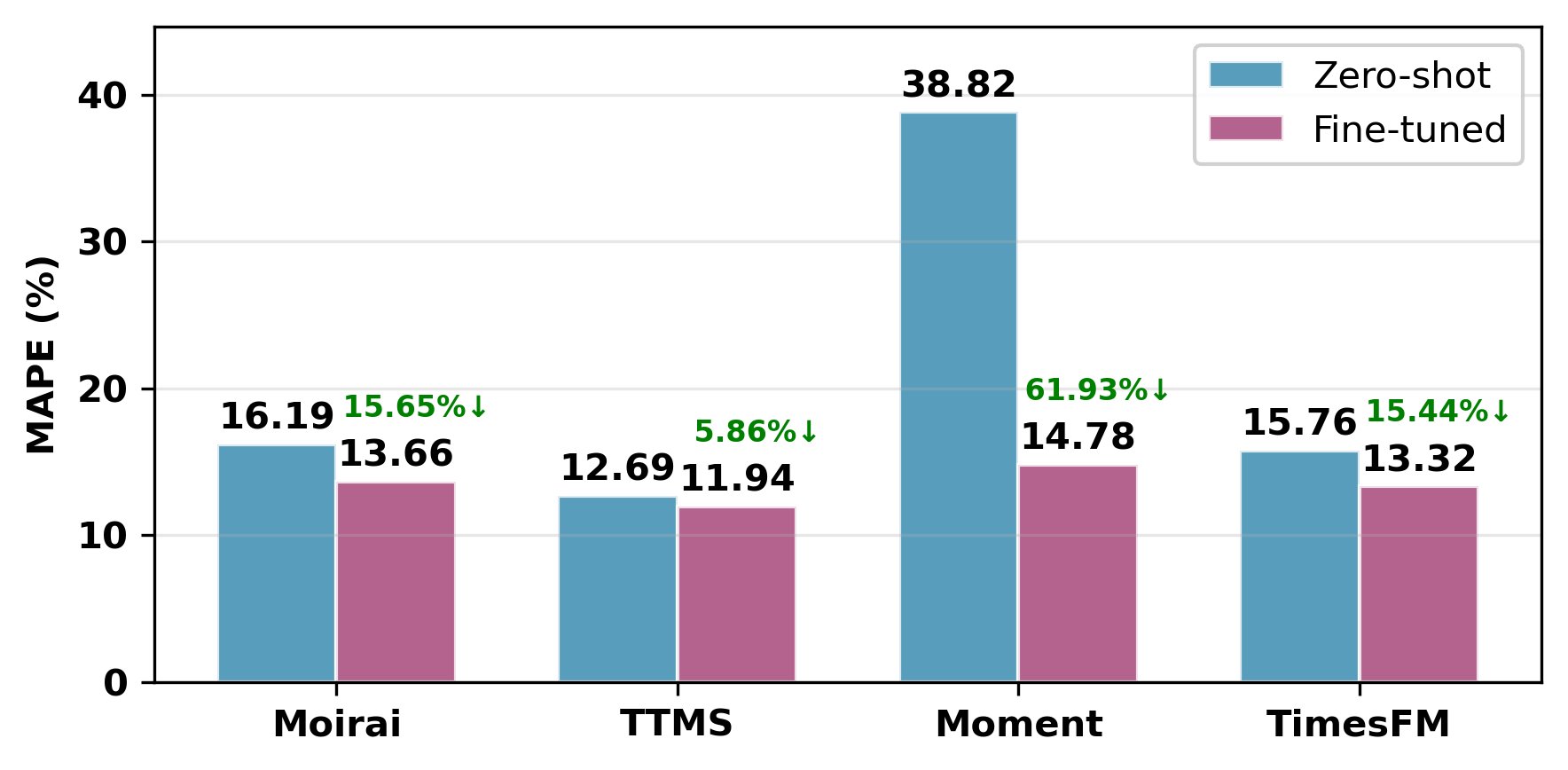

Zero-shot inference and supervised fine-tuning represent key deployment strategies for Time Series Foundation Models (TSFMs), enabling adaptation to novel forecasting tasks without extensive retraining. Zero-shot inference leverages the pre-trained knowledge of the TSFM to generate predictions on new time series data directly, requiring no task-specific examples. Alternatively, supervised fine-tuning utilizes a smaller, labeled dataset to adjust the model’s parameters for improved performance on a specific application. Recent evaluations demonstrate the efficacy of the zero-shot approach: Tiny Time Mixers (TTMs) used zero-shot have established a strong baseline, achieving a Mean Absolute Percentage Error (MAPE) of 12.62% without any task-specific training, indicating a substantial level of generalization capability.

Evaluating Model Accuracy and Quantifying Forecasting Performance

Forecasting model accuracy is quantitatively evaluated using several standard error metrics. Mean Absolute Error (MAE) calculates the average magnitude of errors, providing a readily interpretable measure of prediction inaccuracy. Root Mean Square Error (RMSE) also measures error magnitude but gives higher weight to larger errors due to the squaring operation, making it sensitive to outliers. Mean Absolute Percentage Error (MAPE) expresses error as a percentage of actual values, enabling comparison of forecast accuracy across different scales and series; it is calculated as $\mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n} \left|\frac{\mathrm{Actual}_i - \mathrm{Forecast}_i}{\mathrm{Actual}_i}\right| \times 100$. These metrics allow for objective comparison of model performance and identification of areas for improvement.
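To make the three metrics concrete, here is a small standard-library sketch. The function names and the sample values are illustrative assumptions, not figures from the paper; note that MAPE is undefined whenever an actual value is zero.

```python
import math

def mae(actual, forecast):
    """Mean Absolute Error: average size of the errors."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)

def rmse(actual, forecast):
    """Root Mean Square Error: squaring penalizes large errors more."""
    squared = sum((a - f) ** 2 for a, f in zip(actual, forecast))
    return math.sqrt(squared / len(actual))

def mape(actual, forecast):
    """Mean Absolute Percentage Error, in percent.

    Assumes no actual value is zero.
    """
    ratios = sum(abs((a - f) / a) for a, f in zip(actual, forecast))
    return 100.0 * ratios / len(actual)

# Made-up values; the third point mimics a badly missed price spike.
actual = [100.0, 200.0, 400.0]
forecast = [110.0, 180.0, 300.0]

print(mae(actual, forecast))   # about 43.33
print(rmse(actual, forecast))  # about 59.16
print(mape(actual, forecast))  # about 15.0
```

The single large miss pulls RMSE well above MAE, which is the outlier sensitivity described above.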

Time Series Foundation Models (TSFMs) consistently outperform traditional forecasting methods in predictive accuracy assessments. Specifically, models leveraging TTMs, such as those employing the mft architecture, have demonstrated a Mean Absolute Percentage Error (MAPE) of 12.41% in recent evaluations. This represents a quantifiable improvement over established techniques, indicating the capacity of these models to generate more precise forecasts and reduce the magnitude of forecasting errors. The reported MAPE value provides a benchmark for evaluating the performance of other forecasting models and highlights the potential benefits of adopting a Time Series Foundation Model approach.

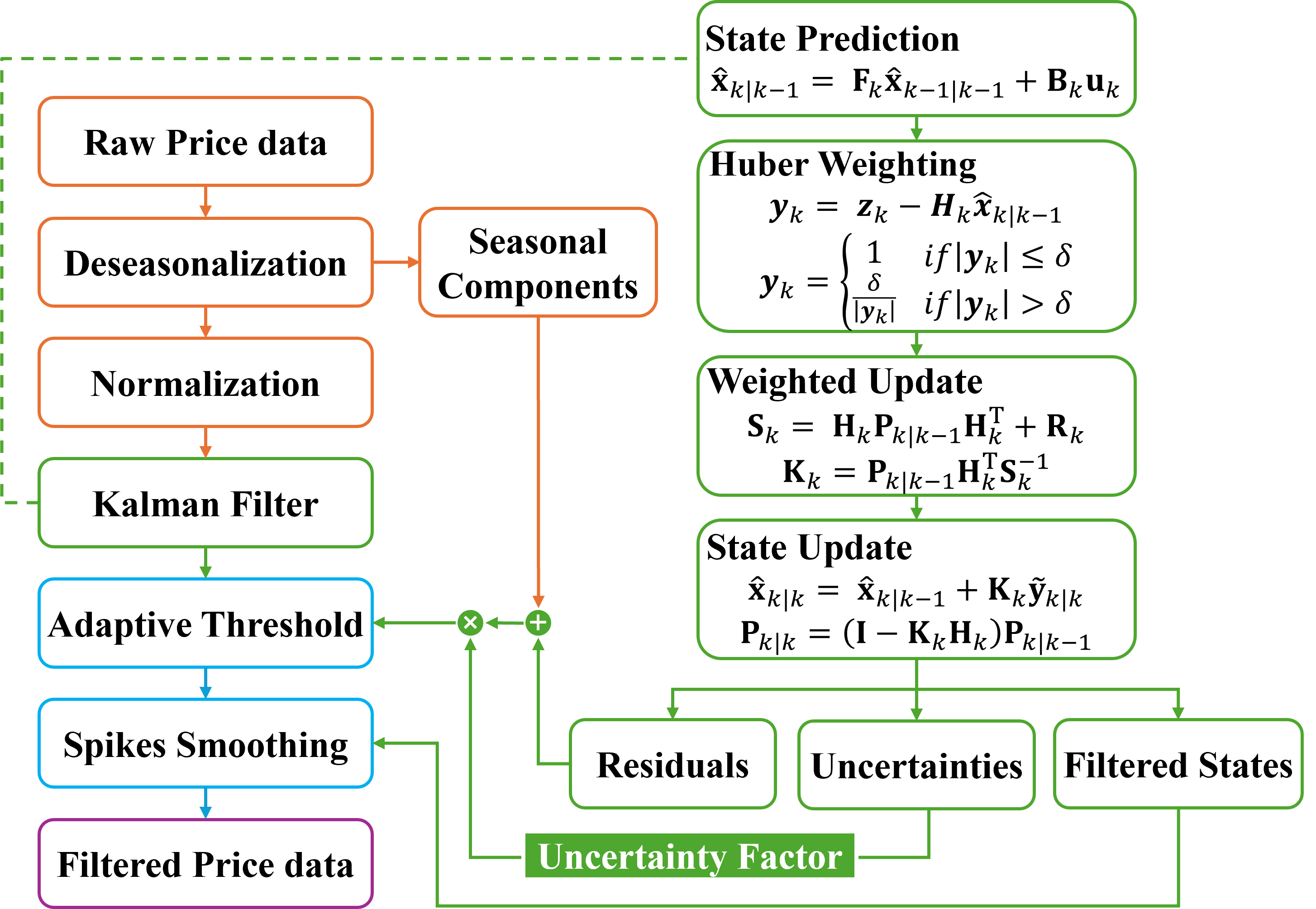

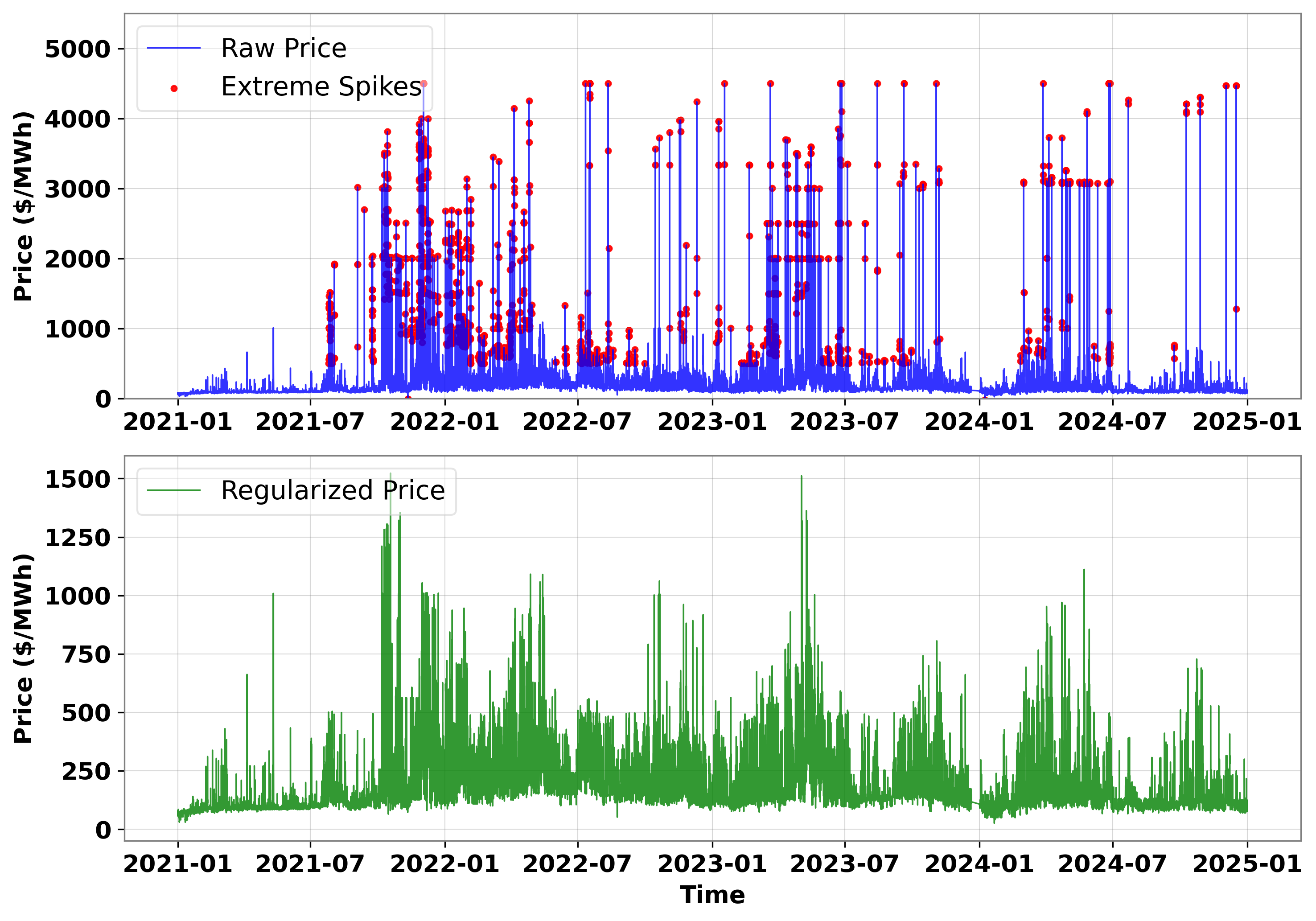

Seasonal-Trend decomposition using Loess (STL) combined with Kalman Filtering (KF) offers a methodology for addressing the impact of anomalous price fluctuations on forecasting accuracy. This technique specifically isolates and mitigates the effects of price spikes by decomposing the time series into seasonal, trend, and residual components, then applying Kalman filtering to smooth the residual data. Benchmarking against Long Short-Term Memory (LSTM) models demonstrates potential improvements in Mean Absolute Percentage Error (MAPE) of up to 61.67% when STL-KF is integrated into the forecasting pipeline, indicating a significant reduction in forecast error attributable to outlier management.
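The decompose-then-filter idea can be illustrated with a rough standard-library sketch. Two loud assumptions apply. First, the decomposition here is a crude median-based stand-in, not STL; a library implementation such as the `STL` class in `statsmodels.tsa.seasonal` would normally take its place. Second, the Kalman filter is a scalar local-level filter with made-up noise variances, and nothing here reproduces the paper's configuration.

```python
from statistics import median

def robust_decompose(series, period):
    """Crude stand-in for STL: median level plus per-position seasonal medians."""
    level = median(series)
    seasonal_by_pos = [median(series[pos::period]) - level
                       for pos in range(period)]
    seasonal = [seasonal_by_pos[i % period] for i in range(len(series))]
    residual = [x - level - s for x, s in zip(series, seasonal)]
    return level, seasonal, residual

def kalman_filter_1d(observations, process_var=1.0,
                     measurement_var=100.0, initial_var=1.0):
    """Scalar local-level Kalman filter (causal, forward pass only).

    A large measurement_var relative to process_var makes the filter
    distrust sudden jumps, which damps spikes.
    """
    estimate = observations[0]
    error_var = initial_var
    filtered = [estimate]
    for z in observations[1:]:
        error_var += process_var                       # predict step
        gain = error_var / (error_var + measurement_var)
        estimate += gain * (z - estimate)              # update step
        error_var *= (1.0 - gain)
        filtered.append(estimate)
    return filtered

# Made-up series with period 4 and one spike at index 5.
series = [10.0, 20.0, 30.0, 20.0,
          10.0, 220.0, 30.0, 20.0,
          10.0, 20.0, 30.0, 20.0]

level, seasonal, residual = robust_decompose(series, period=4)
filtered_residual = kalman_filter_1d(residual)
cleaned = [level + s + r for s, r in zip(seasonal, filtered_residual)]
```

In this toy case the spike of 220 is pulled down to roughly 30 in `cleaned`. Because the filter is causal, part of the spike leaks into the following points. Whether that trade-off is acceptable depends on how the cleaned series is used downstream.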

Towards a More Resilient and Sustainable Energy Future

The application of Time Series Foundation Models to electricity price forecasting represents a significant step towards bolstering energy market stability and efficiency. Traditional forecasting methods often struggle with the inherent volatility and complex dependencies within energy pricing, leading to inaccuracies that can propagate through the market. These new models, pre-trained on vast datasets of time series data, demonstrate an enhanced ability to capture nuanced patterns and anticipate price fluctuations. This improved predictive power allows for more informed trading strategies, reduced price volatility, and ultimately, a more reliable and cost-effective energy supply for consumers and industries alike. By facilitating proactive grid management and optimized resource allocation, the integration of these models promises a more resilient and sustainable energy ecosystem.

Ongoing investigations are actively refining the very building blocks of time series forecasting models, with a particular emphasis on novel architectures and enhanced training methodologies. Researchers are exploring techniques like attention mechanisms and transformer networks, adapting designs originally successful in natural language processing to the unique challenges of temporal data. Simultaneously, innovations in training – including contrastive learning and self-supervised approaches – aim to bolster model robustness and generalization capabilities. These efforts aren’t simply about achieving incremental gains in accuracy; they represent a push to quantify and minimize prediction uncertainty, providing energy market participants with more reliable information for decision-making and ultimately fostering greater stability within increasingly complex energy systems.

The refinements in electricity price forecasting, driven by Time Series Foundation Models, extend far beyond mere market stability. Accurate predictions are foundational for intelligent resource management, allowing for proactive allocation of energy reserves and minimizing waste. Simultaneously, these models facilitate grid optimization by enabling dynamic adjustments to energy distribution, responding to anticipated demand fluctuations and integrating intermittent renewable sources more effectively. Ultimately, this improved forecasting capability is pivotal in accelerating the development of truly sustainable energy systems, supporting informed investment in renewable infrastructure and fostering a more resilient and environmentally responsible energy future.

The pursuit of accurate electricity price forecasting, as detailed in this work, benefits significantly from a ruthless simplification of approach. The demonstrated success of Time Series Foundation Models, especially when paired with spike regularization, isn’t about adding layers of complexity, but about distilling the essential predictive signals. As Edsger W. Dijkstra stated, “It’s not always that I’m sad when a program won’t work, but when it works, and it’s not beautiful.” The elegance of these models, outperforming traditional methods in Singapore’s volatile market, lies in their ability to extract meaningful insights from multivariate inputs without succumbing to unnecessary complication. The focus on clarity, and the reduction of noise through regularization, echoes a fundamental principle of effective modeling.

What Lies Ahead?

The demonstrated efficacy of Time Series Foundation Models in forecasting day-ahead electricity prices, while encouraging, does not resolve the inherent difficulty of the task. The market, after all, possesses a stubborn resistance to perfect prediction. The current work establishes a functional architecture; however, it skirts the question of why these models succeed where simpler methods falter. Further inquiry should not focus solely on architectural refinements – more layers, greater parameter counts – but on a distillation of the underlying signal. What minimal representation captures the essential dynamics of price formation?

A persistent limitation remains the reliance on historical data. Singapore’s energy landscape is not static. Increased penetration of renewables, evolving demand profiles, and geopolitical factors introduce non-stationarities that current models, trained on past behavior, will inevitably misinterpret. The next iteration must incorporate mechanisms for rapid adaptation, perhaps through continual learning or the integration of exogenous variables with a more theoretically grounded relationship to price.

Ultimately, the pursuit of increasingly accurate forecasts should be tempered by a pragmatic acknowledgment of diminishing returns. The true value may not lie in predicting the absolute price, but in quantifying the uncertainty surrounding it. A model that accurately assesses its own limitations is, paradoxically, more useful than one that confidently delivers a flawed prediction. A focus on calibrated probabilistic forecasting, that is, on knowing what is not known, represents a more honest, and potentially more impactful, direction.
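As one hedged illustration of what evaluating a probabilistic forecast could involve, the sketch below computes two common diagnostics: the pinball (quantile) loss and the empirical coverage of a prediction interval. These are standard tools, not metrics reported in the paper, and the function names and numbers are invented for the example.

```python
def pinball_loss(actual, quantile_forecast, q):
    """Average pinball loss for forecasts of the q-th quantile (0 < q < 1)."""
    total = 0.0
    for a, f in zip(actual, quantile_forecast):
        diff = a - f
        # Under-forecasts cost q per unit, over-forecasts cost (1 - q).
        total += q * diff if diff >= 0 else (q - 1.0) * diff
    return total / len(actual)

def interval_coverage(actual, lower, upper):
    """Fraction of actual values that fall inside [lower, upper]."""
    hits = sum(1 for a, lo, hi in zip(actual, lower, upper) if lo <= a <= hi)
    return hits / len(actual)

# Made-up prices and a made-up interval; the spike of 400 escapes it.
actual = [100.0, 150.0, 400.0, 120.0]
lower = [80.0, 120.0, 100.0, 100.0]
upper = [130.0, 180.0, 200.0, 150.0]

coverage = interval_coverage(actual, lower, upper)   # 0.75
upper_loss = pinball_loss(actual, upper, q=0.9)      # 47.25
```

If the interval were meant to hold 80% of outcomes, a coverage near 0.8 over a long evaluation window would suggest reasonable calibration. Four points, as here, are far too few to judge.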

Original article: https://arxiv.org/pdf/2602.05430.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-06 19:34