Author: Denis Avetisyan

A new multi-agent framework utilizes artificial intelligence to pinpoint skill gaps and deliver targeted learning resources, promising more effective and efficient knowledge acquisition.

This paper introduces ALIGNAgent, a system leveraging large language models for personalized learning through skill gap analysis and adaptive guidance.

While personalized learning holds immense promise, existing systems typically address knowledge tracing, diagnostic assessment, or resource recommendation in isolation. This paper introduces ALIGNAgent (Adaptive Learner Intelligence for Gap Identification and Next-step guidance), a multi-agent framework that integrates these components to deliver adaptive learning experiences. Using large language models, ALIGNAgent estimates student knowledge proficiency, with reported precision of 0.87-0.90, identifies specific skill gaps, and recommends resources targeted at those gaps. Could this holistic approach unlock more effective and individualized learning pathways for students across diverse disciplines?

The Fragility of Conventional Pedagogy

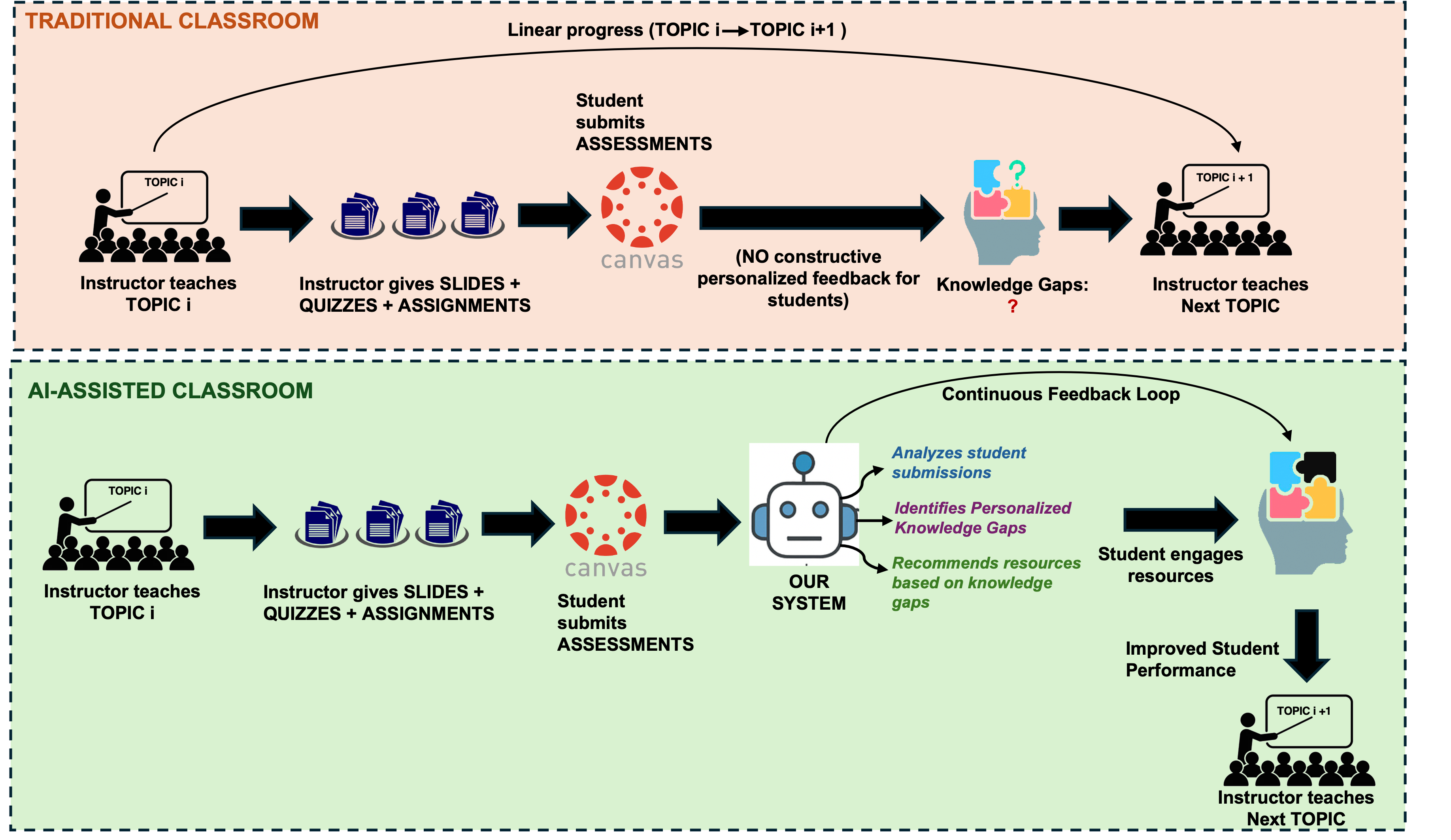

Conventional educational systems, built around standardized curricula and pacing, often overlook differences in what individual learners already know and how quickly they learn. This one-size-fits-all approach can open knowledge gaps as students progress, leaving behind those who need more support while failing to challenge those who grasp concepts quickly. The frustration this causes is not merely motivational: it reflects a mismatch between the material presented and a student’s existing understanding, which hinders the formation of robust, interconnected knowledge. As a result, many students may struggle to retain information or apply what they have learned, hurting academic performance and fostering a negative association with learning itself.

Effective learning hinges on a precise understanding of a student’s knowledge gaps: identifying not just that something is unknown, but specifically what remains unclear. This demands assessment methods that go beyond simple right-or-wrong scoring; techniques such as adaptive testing and knowledge tracing are needed to map the contours of a student’s understanding. Such granular insight enables individualized instruction, tailoring content and pacing to address specific deficits and build on existing strengths. With this level of detail, educators can deliver targeted interventions, improving learning efficiency and fostering deeper, more lasting comprehension.

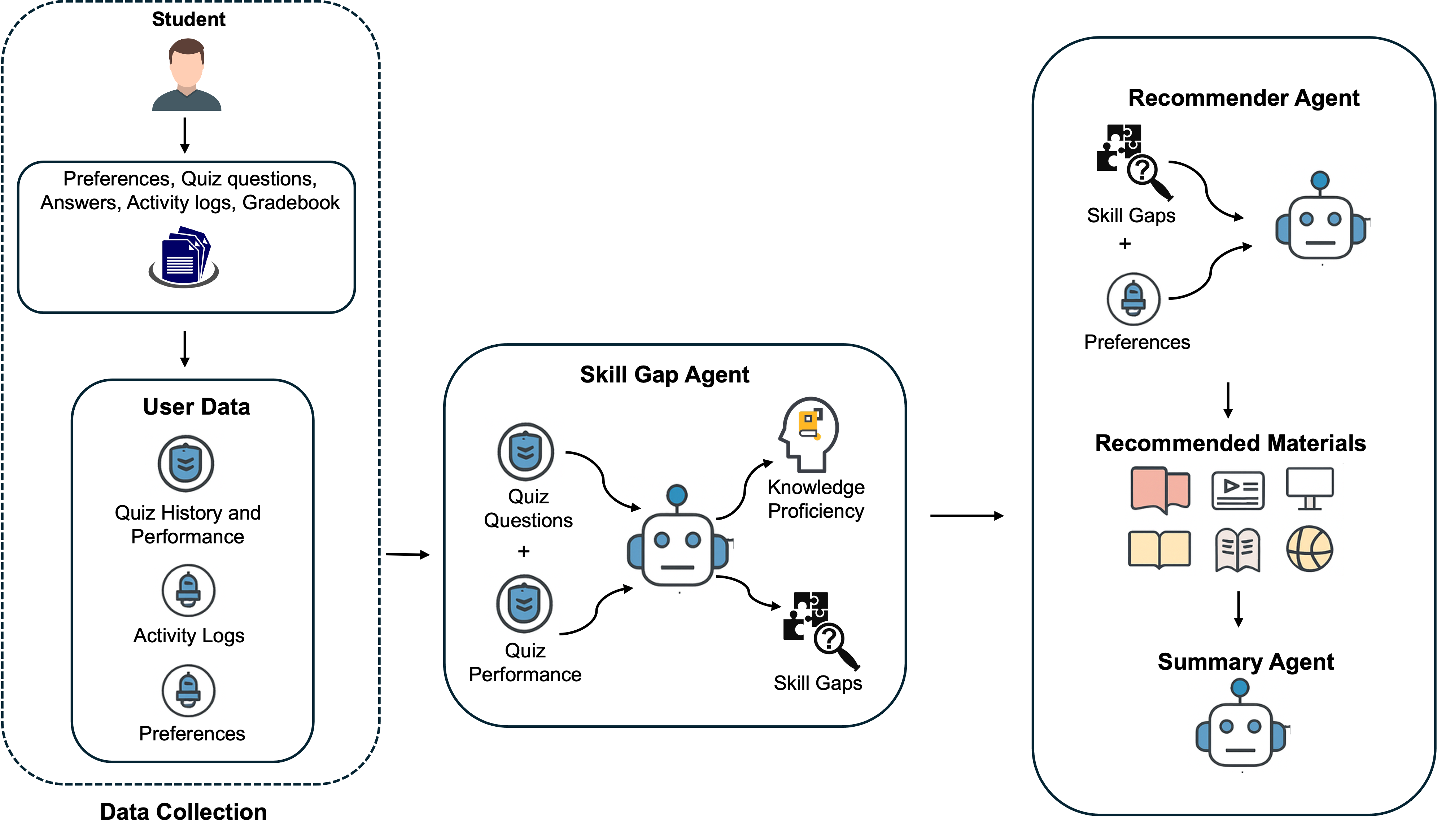

A System of Distributed Cognition

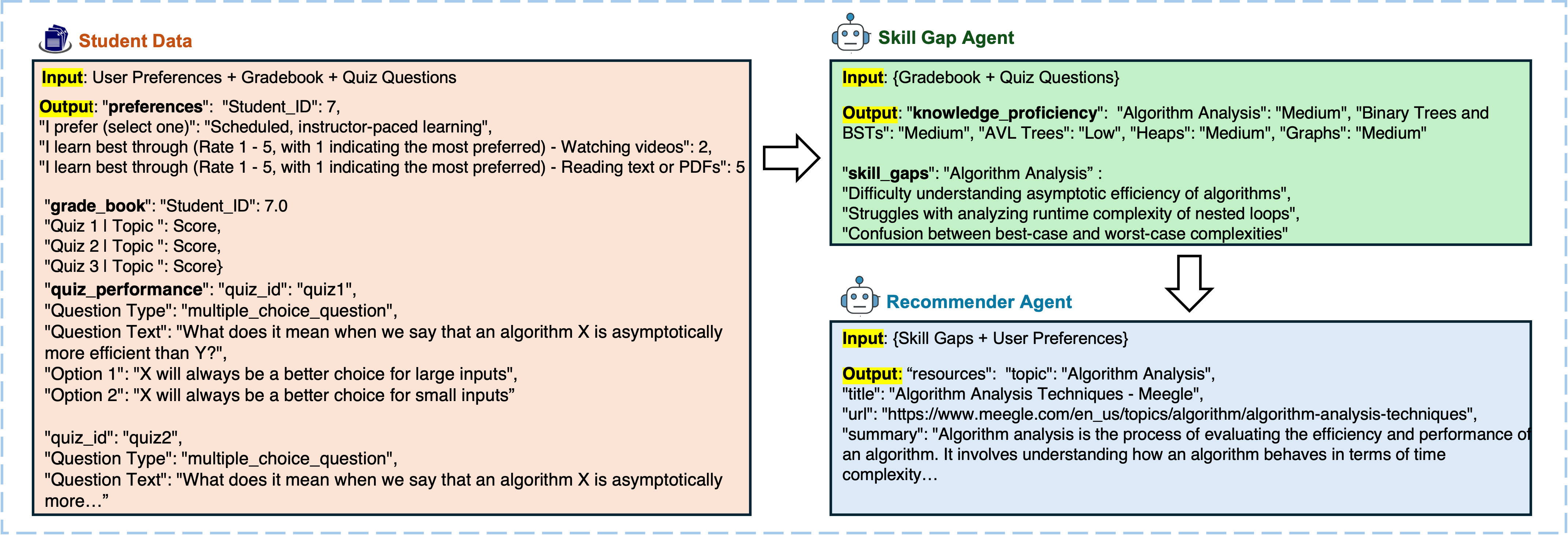

The proposed Multi-Agent System (MAS) uses a distributed architecture composed of specialized agents to support personalized learning. The agents operate autonomously but collaboratively, exchanging information to model each student’s knowledge and learning preferences. Each agent has a specific function, such as knowledge assessment, resource identification, or intervention planning. Agents communicate through a defined protocol, so learning pathways can adapt to real-time student performance and evolving needs. The goal is to replace static, one-size-fits-all instruction with a responsive environment tailored to each student’s profile.
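The source does not specify the communication protocol. As a minimal sketch, assuming a simple in-process publish/subscribe bus, collaboration between agents might look like the following; the agent names, topic strings, 0.6 mastery threshold, and resource catalog are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    sender: str
    topic: str
    payload: dict


class Bus:
    """Minimal publish/subscribe channel standing in for the inter-agent protocol."""

    def __init__(self) -> None:
        self.subscribers: dict[str, list[Callable[[Message], None]]] = {}
        self.log: list[Message] = []

    def subscribe(self, topic: str, handler: Callable[[Message], None]) -> None:
        self.subscribers.setdefault(topic, []).append(handler)

    def publish(self, msg: Message) -> None:
        self.log.append(msg)  # keep an audit trail of every exchange
        for handler in self.subscribers.get(msg.topic, []):
            handler(msg)


def wire_agents(bus: Bus, threshold: float = 0.6) -> None:
    """Register two hypothetical agents: a gap identifier and a resource recommender."""
    catalog = {"recursion": "recursion-tutorial", "hashing": "hash-table-lab", "fsm": "fsm-worksheet"}

    def gap_identifier(msg: Message) -> None:
        # Flag every skill whose estimated mastery falls below the threshold.
        gaps = sorted(s for s, p in msg.payload["mastery"].items() if p < threshold)
        bus.publish(Message("gap_identifier", "gaps", {"student": msg.payload["student"], "gaps": gaps}))

    def recommender(msg: Message) -> None:
        # Map each gap to a catalog resource, falling back to a generic review item.
        resources = [catalog.get(g, f"review:{g}") for g in msg.payload["gaps"]]
        bus.publish(Message("recommender", "recommendations",
                            {"student": msg.payload["student"], "resources": resources}))

    bus.subscribe("estimate", gap_identifier)
    bus.subscribe("gaps", recommender)


bus = Bus()
wire_agents(bus)
bus.publish(Message("estimator", "estimate",
                    {"student": "s1", "mastery": {"recursion": 0.4, "hashing": 0.8, "fsm": 0.55}}))
print(bus.log[-1].payload)  # {'student': 's1', 'resources': ['fsm-worksheet', 'recursion-tutorial']}
```

Because agents only see messages, each one can be replaced (for example, swapping the threshold rule for a learned model) without touching the others.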

Knowledge Estimation continuously assesses student understanding by analyzing interactions with learning materials and results on formative assessments. Rather than relying on infrequent, high-stakes tests, it uses a continuous stream of data to build a probabilistic model of each student’s knowledge state. Its output feeds the Resource Recommendation engine, which selects materials that address identified knowledge gaps, and drives targeted interventions (hints, explanations, or alternative practice problems) designed to correct specific misunderstandings. The system uses Bayesian Knowledge Tracing and Item Response Theory to model student proficiency and predict performance on unseen tasks.
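To make the Item Response Theory component concrete, here is a minimal sketch of the two-parameter logistic (2PL) model, one common IRT variant; the source does not say which variant the system uses. Item parameters are assumed to be already calibrated, and ability is estimated by a simple grid search rather than the numerical optimizers that production tools typically use.

```python
import math


def p_correct(theta: float, a: float, b: float) -> float:
    """Two-parameter logistic (2PL) IRT model.

    theta: learner ability; a: item discrimination; b: item difficulty.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def estimate_theta(responses: list[tuple[float, float, int]],
                   lo: float = -4.0, hi: float = 4.0, steps: int = 801) -> float:
    """Maximum-likelihood ability estimate by grid search over [lo, hi].

    responses holds (a, b, correct) triples with correct in {0, 1}. If every answer is
    right (or every answer is wrong) the likelihood has no interior maximum and the
    estimate lands on the grid boundary.
    """
    best_theta, best_ll = lo, -math.inf
    for i in range(steps):
        theta = lo + (hi - lo) * i / (steps - 1)
        ll = 0.0
        for a, b, correct in responses:
            p = p_correct(theta, a, b)
            ll += math.log(p) if correct else math.log(1.0 - p)
        if ll > best_ll:
            best_theta, best_ll = theta, ll
    return best_theta


# Hypothetical calibrated items: (discrimination, difficulty, observed correctness)
history = [(1.2, -1.0, 1), (1.0, 0.0, 1), (0.8, 0.5, 0), (1.5, 1.0, 0)]
theta_hat = estimate_theta(history)
# Estimated ability, then predicted P(correct) on an unseen item with a=1.1, b=0.3
print(round(theta_hat, 2), round(p_correct(theta_hat, 1.1, 0.3), 2))
```

Prediction on an unseen task is simply the item response function evaluated at the estimated ability.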

The system’s adaptive behavior comes from continuous feedback loops between the knowledge estimation, resource recommendation, and intervention modules. As knowledge estimation updates its picture of the student, resource recommendations shift toward the identified gaps, which limits time spent on material the student already understands. When estimation indicates persistent difficulty, the system triggers interventions such as hints or alternative explanations, so support arrives when and where it is needed. This iterative cycle of assessment, adaptation, and intervention yields a learning path tailored to each student.
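The loop described above can be sketched as a single update function. Everything here is an assumption for illustration: the moving-average estimator, the 0.8 mastery cutoff, and the rule that two consecutive errors trigger a hint are not taken from ALIGNAgent.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class LearnerState:
    mastery: dict = field(default_factory=dict)       # skill -> estimated mastery in [0, 1]
    wrong_streak: dict = field(default_factory=dict)  # skill -> consecutive wrong answers


def step(state: LearnerState, skill: str, correct: bool,
         alpha: float = 0.3, mastered: float = 0.8,
         hint_after: int = 2) -> Tuple[str, Optional[str]]:
    """One assess -> adapt -> intervene cycle under a hypothetical policy.

    Mastery is an exponential moving average of correctness, a deliberately simple
    stand-in for a real knowledge-tracing model such as BKT.
    """
    # Assess: update the mastery estimate and the run of consecutive errors.
    prev = state.mastery.get(skill, 0.5)
    state.mastery[skill] = (1 - alpha) * prev + alpha * (1.0 if correct else 0.0)
    state.wrong_streak[skill] = 0 if correct else state.wrong_streak.get(skill, 0) + 1

    # Intervene: persistent difficulty triggers a hint before anything else.
    if state.wrong_streak[skill] >= hint_after:
        return "intervene", f"hint:{skill}"
    # Adapt: skip material the learner already appears to know.
    if state.mastery[skill] >= mastered:
        return "advance", None
    return "practice", f"exercise:{skill}"


state = LearnerState()
for answer in [False, False, True, True, True, True]:
    print(step(state, "loops", answer))
# Actions in order: practice, intervene, practice, practice, practice, advance
```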

The Nuance of Cognitive Diagnosis

Cognitive Diagnosis Models (CDMs) go beyond traditional skill-gap identification: instead of asking only whether a student has mastered a skill, they aim to explain why the student is failing. Unlike methods that treat proficiency as a single global trait, CDMs estimate whether a student has mastered each of several discrete knowledge components or cognitive attributes, often summarized as a mastery profile or “knowledge state.” They do this with psychometric models, related to item response theory, that link observed response patterns to a Q-matrix specifying which attributes each item requires. The result is a more detailed profile of strengths and weaknesses that pinpoints specific deficits and supports targeted instruction and personalized learning pathways.
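As one concrete instance of a CDM, the sketch below uses the DINA (deterministic inputs, noisy “and” gate) model: a learner answers an item correctly with probability 1 - slip if they master every attribute the item requires, and with probability guess otherwise. The choice of DINA, the Q-matrix, and the slip and guess values are illustrative assumptions. The mastery profile is found by brute-force maximum likelihood over all 2^K profiles, which is feasible only for a handful of attributes.

```python
import math
from itertools import product


def dina_p(alpha, q_row, slip, guess):
    """P(correct) under DINA: eta = 1 only if every required attribute is mastered."""
    eta = all(a >= q for a, q in zip(alpha, q_row))
    return 1 - slip if eta else guess


def diagnose(responses, Q, slip=0.1, guess=0.2):
    """Return the binary attribute profile with the highest likelihood (uniform prior)."""
    K = len(Q[0])
    best, best_ll = None, -math.inf
    for alpha in product((0, 1), repeat=K):  # enumerate every possible mastery profile
        ll = 0.0
        for x, q_row in zip(responses, Q):
            p = dina_p(alpha, q_row, slip, guess)
            ll += math.log(p if x else 1 - p)
        if ll > best_ll:
            best, best_ll = alpha, ll
    return best


# Attributes: (loops, recursion, pointers); each row lists the attributes an item requires.
Q = [[1, 0, 0],
     [0, 1, 0],
     [1, 1, 0],
     [0, 0, 1]]
print(diagnose([1, 0, 0, 1], Q))  # (1, 0, 1): loops and pointers mastered, recursion not
```

The output is a diagnosis rather than a score: the two failed items share the recursion attribute, so the model attributes the failures to that specific gap.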

Deep Knowledge Tracing (DKT) and Bayesian Knowledge Tracing (BKT) both estimate a student’s mastery of specific skills over time. BKT, the older of the two, models each skill as a two-state hidden Markov model (the skill is either learned or not) governed by four parameters: the initial probability of knowing the skill, the probability of learning it at each practice opportunity, and the probabilities of guessing correctly without knowing it or slipping despite knowing it. Each observed response updates the probability of mastery through Bayes’ rule. DKT instead uses a recurrent neural network whose hidden state summarizes the whole interaction history and outputs, for every skill, the probability of answering the next item correctly, so it is not restricted to a binary knowledge state. Both methods use interaction data such as correct/incorrect responses to predict performance on unseen problems and to guide personalized learning pathways. They differ in complexity and data requirements: DKT generally needs larger datasets to train its network, whereas BKT fits a handful of interpretable parameters per skill.
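The BKT update fits in a few lines. This sketch follows the standard four-parameter formulation without forgetting; the parameter values are illustrative placeholders, not fitted estimates, and in practice they would be learned per skill from data.

```python
from dataclasses import dataclass


@dataclass
class BKTParams:
    p_init: float = 0.2    # P(L0): prior probability the skill is already known
    p_learn: float = 0.15  # P(T): chance of learning the skill at each practice opportunity
    p_guess: float = 0.2   # P(G): correct answer despite not knowing the skill
    p_slip: float = 0.1    # P(S): wrong answer despite knowing the skill


def predict_correct(p_known: float, prm: BKTParams) -> float:
    """Probability that the next response is correct given the current mastery estimate."""
    return p_known * (1 - prm.p_slip) + (1 - p_known) * prm.p_guess


def bkt_update(p_known: float, correct: bool, prm: BKTParams) -> float:
    """Bayesian posterior given one observation, followed by the learning transition."""
    if correct:
        num = p_known * (1 - prm.p_slip)
        den = num + (1 - p_known) * prm.p_guess
    else:
        num = p_known * prm.p_slip
        den = num + (1 - p_known) * (1 - prm.p_guess)
    posterior = num / den
    # Between opportunities an unlearned skill may become learned with probability p_learn.
    return posterior + (1 - posterior) * prm.p_learn


params = BKTParams()
p = params.p_init
for correct in [True, True, False, True]:
    print(f"P(correct next) = {predict_correct(p, params):.2f}", end="  ")
    p = bkt_update(p, correct, params)
    print(f"P(known) after {'correct' if correct else 'incorrect'} answer = {p:.2f}")
```

With these values a single correct answer raises P(known) from 0.20 to 0.60, which shows how strongly a low guess rate makes each correct response count as evidence.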

Within the Multi-Agent System, Deep Knowledge Tracing and Bayesian Knowledge Tracing support adaptive learning by continuously assessing student knowledge states and tailoring subsequent material accordingly. Instead of relying on static assessments, the framework updates a probabilistic model of each student’s understanding after every interaction. This lets the agents identify specific knowledge gaps, deliver targeted interventions such as remedial exercises or alternative learning pathways, and adjust the sequence and difficulty of content to each student’s progress.
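For comparison, a DKT-style model replaces BKT’s per-skill Bayesian update with a recurrent network over the full interaction history. The sketch below shows only the forward pass of a vanilla RNN with random, untrained weights, in pure Python. A real model would typically use an LSTM in a deep-learning framework and be trained with binary cross-entropy on the next response; the class name and layer sizes here are hypothetical.

```python
import math
import random


class TinyDKT:
    """Forward pass of a vanilla-RNN knowledge tracer in the style of DKT.

    Weights are random and untrained: this shows the data flow, not a usable model.
    """

    def __init__(self, n_skills: int, hidden: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> list:
            return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]

        self.n = n_skills
        self.W_hx = init(hidden, 2 * n_skills)  # input -> hidden
        self.W_hh = init(hidden, hidden)        # hidden -> hidden (recurrence)
        self.W_yh = init(n_skills, hidden)      # hidden -> per-skill output
        self.b_h = [0.0] * hidden
        self.b_y = [0.0] * n_skills

    def encode(self, skill: int, correct: bool) -> list:
        # One-hot over 2N slots: index `skill` for a wrong answer, `skill + N` for a right one.
        x = [0.0] * (2 * self.n)
        x[skill + self.n * int(correct)] = 1.0
        return x

    def run(self, interactions: list) -> list:
        """After each (skill, correct) pair, predict P(correct) for every skill at the next step."""
        h = [0.0] * len(self.b_h)
        predictions = []
        for skill, correct in interactions:
            x = self.encode(skill, correct)
            # h_t = tanh(W_hx x_t + W_hh h_{t-1} + b_h); the right side uses the previous h.
            h = [math.tanh(sum(w * v for w, v in zip(self.W_hx[i], x))
                           + sum(w * v for w, v in zip(self.W_hh[i], h))
                           + self.b_h[i])
                 for i in range(len(h))]
            # y_t = sigmoid(W_yh h_t + b_y): one probability per skill.
            y = [1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(self.W_yh[k], h)) + self.b_y[k])))
                 for k in range(self.n)]
            predictions.append(y)
        return predictions


model = TinyDKT(n_skills=3)
for probs in model.run([(0, True), (1, False), (0, True)]):
    print([round(p, 3) for p in probs])
```

Unlike BKT, a single interaction here can move the predictions for every skill at once, which is how DKT can pick up relationships between skills without being told about them.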

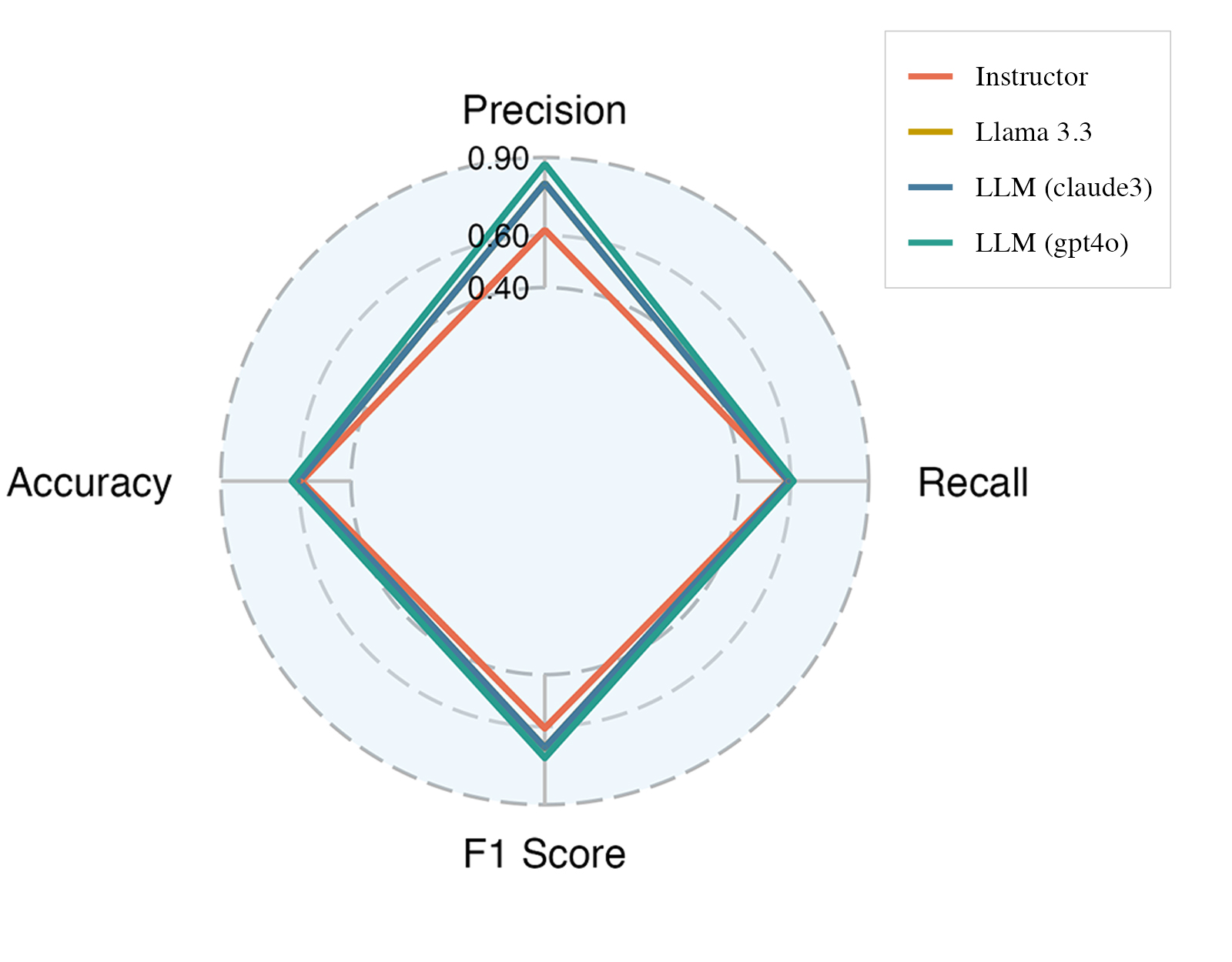

Demonstrating System Efficacy

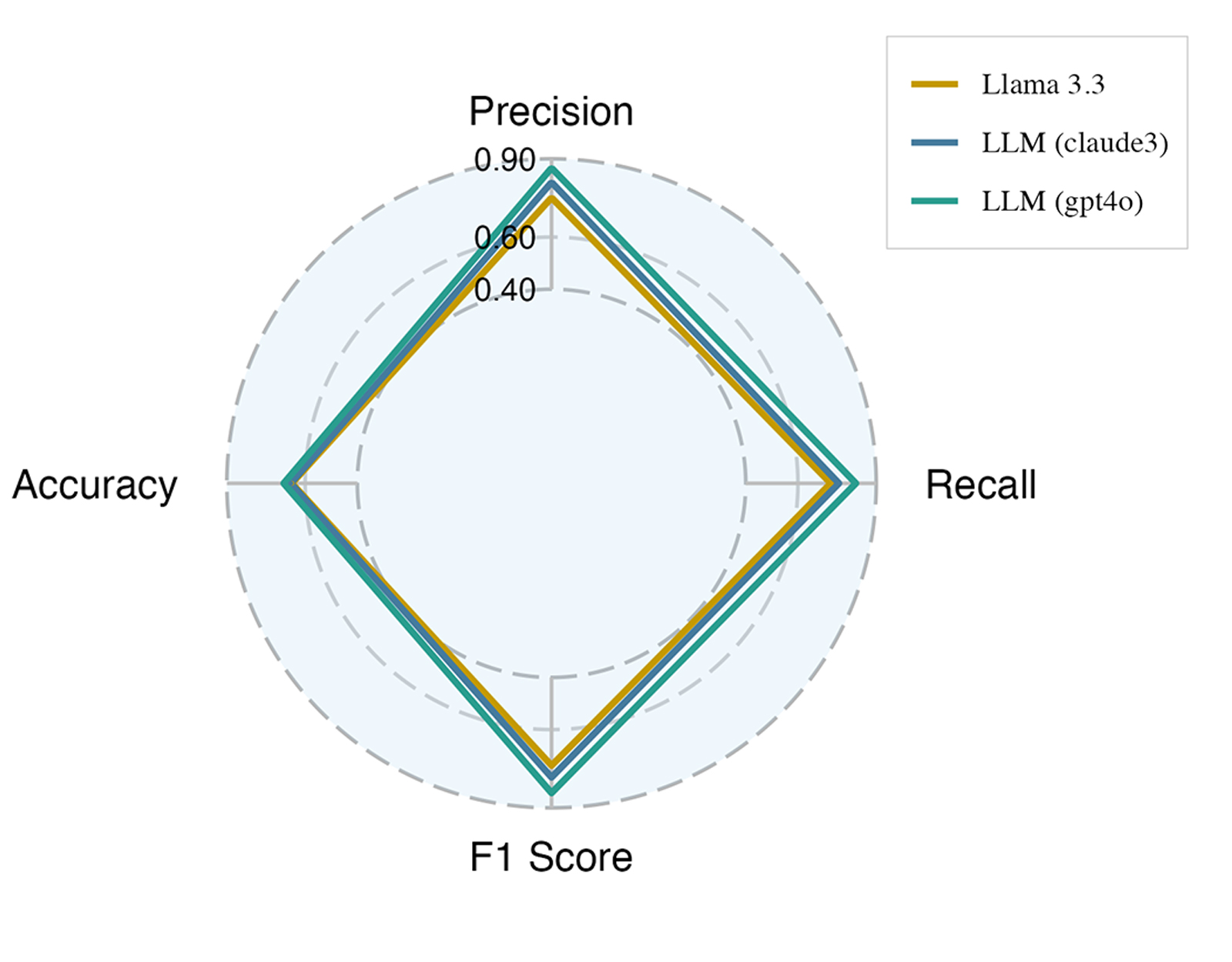

The Multi-Agent System’s efficacy is quantified with established performance metrics rather than simply asserted. Accuracy measures the overall correctness of the system’s assessments, and precision and recall add nuance. Precision is the proportion of proficiencies flagged by the system that are actually correct, while recall is the proportion of actual proficiencies the system manages to flag. The F1 score, the harmonic mean of precision and recall, gives a balanced summary that penalizes a system which achieves high precision at the cost of low recall, or vice versa. Tracking these metrics lets improvements be validated consistently and compared objectively, and makes the system’s strengths and limitations in gauging knowledge mastery explicit.
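These definitions translate directly into code. The sketch assumes each proficiency estimate is scored as a binary label (1 = proficient) against some reference judgment of mastery; the labels in the example are made up and are not data from the paper.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = judged proficient)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # proficient and flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # flagged but not proficient
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # proficient but missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy labels for eight skill judgments.
truth = [1, 1, 1, 0, 0, 1, 0, 1]
preds = [1, 1, 0, 0, 1, 1, 0, 1]
print({k: round(v, 3) for k, v in classification_metrics(truth, preds).items()})
# {'accuracy': 0.75, 'precision': 0.8, 'recall': 0.8, 'f1': 0.8}
```

Libraries such as scikit-learn provide equivalent functions; the hand-rolled version just makes the counts behind each metric visible.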

Beyond assessing knowledge, the multi-agent system is meant to build a deeper grasp of core computer science principles. Through personalized challenges, it reinforces fundamental concepts such as data structures, helping learners move beyond rote memorization to understand how these building blocks work. Its problem-solving scenarios call for algorithm analysis, prompting learners to consider efficiency and scalability. The same approach extends to sequential circuit design, where guidance through circuit construction and troubleshooting builds an intuitive understanding of digital logic and its real-world applications.

The ALIGNAgent framework shows a notable advance in automated knowledge proficiency estimation. Evaluations report precision between 0.87 and 0.90, meaning that most of the proficiency judgments it makes are correct. F1 scores, the harmonic mean of precision and recall, fall between 0.84 and 0.87, indicating that the framework keeps both false positives and false negatives in check rather than trading one for the other. This level of performance suggests the framework offers a reliable basis for tailoring educational content to each student’s needs.
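Because F1 is the harmonic mean of precision $P$ and recall $R$, recall can be recovered from any $(P, F_1)$ pair. The article reports only ranges, so pairing the ends of the two ranges as below is purely an illustrative assumption, not a reported result:

```latex
\begin{aligned}
F_1 &= \frac{2PR}{P + R}
\quad\Longrightarrow\quad
R = \frac{F_1 P}{2P - F_1} \\[4pt]
P = 0.87,\; F_1 = 0.84 &: \quad R = \frac{0.84 \times 0.87}{1.74 - 0.84} = \frac{0.7308}{0.90} \approx 0.81 \\[4pt]
P = 0.90,\; F_1 = 0.87 &: \quad R = \frac{0.87 \times 0.90}{1.80 - 0.87} = \frac{0.783}{0.93} \approx 0.84
\end{aligned}
```

Whatever the true pairing, an F1 below precision always means recall is lower still, since the harmonic mean lies between its two inputs.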

Toward a More Responsive Pedagogy

The system’s core innovation is its ability to move beyond standardized curricula and follow each student’s learning trajectory. Through continuous assessment and real-time analysis of performance, the Multi-Agent System adjusts difficulty, pace, and content presentation. This goes beyond offering different exercises: the system identifies knowledge gaps and learning preferences, then proactively offers targeted support and tailored challenges. By keeping students engaged at an appropriate level of difficulty, the framework aims to improve comprehension and build confidence, moving education away from a one-size-fits-all model toward a personalized one.

The precision of adaptive educational systems hinges on accurately gauging a student’s evolving understanding, a process known as knowledge tracing. Current research is moving beyond simple correct/incorrect signals to incorporate richer data from student interactions, including response times, error patterns, and the language of open-ended answers. Integrating Large Language Models (LLMs) could strengthen this further: an LLM can assess not only whether an answer is correct but also the reasoning behind it, surfacing gaps in understanding that would otherwise go unnoticed. Combining knowledge tracing with the contextual awareness of LLMs may let future systems tailor content and support more precisely, across a wider range of subjects and learning styles.
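One way to ask an LLM to judge reasoning rather than bare correctness is to send it the quiz history and require structured output that is validated before use. The sketch below is a hypothetical pattern, not ALIGNAgent’s prompt: the record fields, skill names, and JSON schema are assumptions, and the model call itself is replaced by a canned reply.

```python
import json

# Illustrative skill list; the real system's skill taxonomy is not specified here.
SKILLS = ["data structures", "algorithm analysis", "sequential circuits"]


def build_prompt(records: list, skills: list) -> str:
    """Assemble a proficiency-estimation prompt from quiz records (hypothetical format)."""
    history = "\n".join(
        f"- skill: {r['skill']} | question: {r['question']} | answer: {r['answer']} | correct: {r['correct']}"
        for r in records
    )
    return (
        "Rate the learner's proficiency in each skill from 0 to 1, judging the reasoning "
        "in each answer and not only its correctness.\n"
        f"Skills: {', '.join(skills)}\n"
        f"Quiz history:\n{history}\n"
        'Reply with JSON only: {"proficiency": {"<skill>": <number>}, "gaps": ["<short description>"]}'
    )


def parse_reply(reply: str, skills: list) -> dict:
    """Validate a model reply: every skill must be present and scored within [0, 1]."""
    data = json.loads(reply)
    proficiency = {s: float(data["proficiency"][s]) for s in skills}  # KeyError if a skill is missing
    if any(not 0.0 <= v <= 1.0 for v in proficiency.values()):
        raise ValueError("proficiency score out of range")
    return {"proficiency": proficiency, "gaps": [str(g) for g in data.get("gaps", [])]}


records = [{"skill": "algorithm analysis", "question": "Cost of binary search?",
            "answer": "O(n) because it checks each half", "correct": False}]
prompt = build_prompt(records, SKILLS)
# A canned reply stands in for the model call, which is left abstract here.
fake_reply = ('{"proficiency": {"data structures": 0.7, "algorithm analysis": 0.3, '
              '"sequential circuits": 0.5}, "gaps": ["confuses halving with linear scan"]}')
print(parse_reply(fake_reply, SKILLS)["gaps"])
```

Validating the reply before it reaches the recommender keeps a malformed or out-of-range model output from silently corrupting the learner model.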

The development of this multi-agent system is a notable step toward genuinely intelligent tutoring. Unlike educational software that takes a one-size-fits-all approach, the framework adapts to each student’s learning profile, supporting both immediate comprehension and the skills needed for continued, independent growth. By identifying knowledge gaps and adapting the pace and method of instruction, it encourages learners to take ownership of their education and cultivates curiosity and problem-solving. This personalized approach promises to move beyond rote memorization, improving student achievement and preparing learners for the demands of a rapidly changing world.

The pursuit of personalized learning, as demonstrated by ALIGNAgent, echoes a broader truth about complex systems: like individuals acquiring knowledge, they do not progress linearly but navigate gaps and refine their understanding over time. As Bertrand Russell observed, “The good life is one inspired by love and guided by knowledge.” The sentiment resonates with ALIGNAgent’s core function of identifying skill deficiencies and offering targeted resources. The framework does not try to force proficiency but to facilitate it, letting the learner progress through the curriculum at their own pace. Sometimes observing the process of knowledge acquisition, pinpointing gaps and offering gentle guidance, proves more valuable than trying to accelerate it artificially. The system learns, and in doing so, allows the learner to do the same.

What Lies Ahead?

The ALIGNAgent framework is demonstrably effective at estimating knowledge proficiency, but that effectiveness is unlikely to hold still. Improvements in knowledge tracing tend to age faster than expected, and each refinement can introduce new sources of error. The system’s reliance on large language models adds a dependency on externally evolving foundations, a shifting substrate on which personalized learning is built. Future iterations must account for this temporal instability, perhaps through mechanisms that self-diagnose model drift or that actively query learners to recalibrate internal representations.
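One simple form of drift self-diagnosis is a calibration check: compare the average predicted probability of a correct answer with the observed accuracy over a rolling window, and flag recalibration when the two diverge. This is a minimal sketch under assumed settings (window size, tolerance); it detects only calibration-in-the-large, not every kind of drift.

```python
from collections import deque


class DriftMonitor:
    """Flags calibration drift: mean predicted P(correct) vs. observed accuracy over a rolling window."""

    def __init__(self, window: int = 50, tolerance: float = 0.1) -> None:
        self.preds = deque(maxlen=window)     # recent predicted probabilities
        self.outcomes = deque(maxlen=window)  # recent observed outcomes as 0.0 / 1.0
        self.tolerance = tolerance

    def observe(self, predicted: float, correct: bool) -> bool:
        """Record one prediction/outcome pair; return True when recalibration looks warranted."""
        self.preds.append(predicted)
        self.outcomes.append(1.0 if correct else 0.0)
        if len(self.preds) < self.preds.maxlen:
            return False  # not enough evidence yet
        mean_pred = sum(self.preds) / len(self.preds)
        observed = sum(self.outcomes) / len(self.outcomes)
        return abs(mean_pred - observed) > self.tolerance


monitor = DriftMonitor(window=4, tolerance=0.1)
for outcome in [True, True, True, False, False, False]:
    print(monitor.observe(0.8, outcome))
# False, False, False, False, True, True
```

When the flag fires, the system could fall back to the learner-query strategy mentioned above before trusting its estimates again.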

A critical, yet largely unaddressed, limitation is the assumption that skill gaps are discrete, identifiable entities. The reality is messier: knowledge is a continuous landscape, and proficiency exists on gradients. Research should explore ways to represent and address these nuanced deficiencies, moving beyond simple gap identification toward a more holistic model of learner cognitive states. Rollback, reconstructing the pathway along which a learner’s understanding developed, remains a significant challenge that demands more than simply recommending earlier resources.

Ultimately, the true test of such systems will not be static improvements in assessment scores but adaptive resilience. A learning framework that degrades gracefully, acknowledging its own limitations and proactively mitigating them, will prove more valuable in the long run than one striving for unattainable perfection. The focus must shift from pinpointing deficits to cultivating a capacity for continuous self-improvement, in both the learner and the system.

Original article: https://arxiv.org/pdf/2601.15551.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-25 21:21