Author: Denis Avetisyan

Researchers are harnessing the power of theoretical physics and advanced flow models to build more robust and geometrically informed data generation systems.

This review details ‘GenAdS’, a framework leveraging the AdS/CFT correspondence and neural ordinary differential equations to enhance generative modeling through holographic encoding and non-Euclidean geometry.

Current generative modeling techniques often lack inherent geometric or physical principles to guide the learning process, potentially limiting their efficiency and interpretability. This paper, ‘Holographic generative flows with AdS/CFT’, introduces a novel framework, GenAdS, that leverages the AdS/CFT correspondence (a duality between gravity in anti-de Sitter space and conformal field theories) to augment flow matching algorithms with insights from theoretical physics. By representing data flows via holographic encoding and utilizing non-Euclidean geometry, GenAdS achieves faster convergence and improved data generation on benchmark datasets like MNIST. Could this approach unlock fundamentally new paradigms for generative modeling by seamlessly integrating the power of deep learning with the rich structure of quantum gravity?

Beyond Euclidean Constraints: A Generative Shift

Conventional generative models, such as Generative Adversarial Networks (GANs) and standard Normalizing Flows, often struggle to reproduce the intricate structure of high-dimensional data, with its complex dependencies and subtle variations. GANs are notorious for unstable training and for mode collapse, in which the generator produces only a narrow range of samples. Normalizing Flows train more stably, but they require invertible architectures with tractable Jacobian determinants, which restricts model design and makes computation more expensive as dimensionality grows. Both approaches typically need large datasets and substantial compute to reach acceptable results, which limits their use when data or compute is scarce. This difficulty in modeling complex distributions is a core challenge in generative modeling and motivates the search for more efficient and robust alternatives.

These costs compound. Training often runs long and consumes significant compute, and even prolonged optimization does not prevent mode collapse: the generator concentrates on a subset of plausible outputs and ignores large parts of the data distribution. The samples then lack the diversity and realism many applications need, which limits how well the model represents the underlying data and how useful it is in practice.

Generative AdS (GenAdS) takes a different route from GANs and standard Normalizing Flows. It uses the geometry of Anti-de Sitter (AdS) space, a spacetime of constant negative curvature, as the setting in which complex data distributions are learned. By combining AdS geometry with flow matching, GenAdS builds a continuous flow that maps samples from a simple initial distribution to data samples. Because flow matching is trained by regression rather than through an adversarial game, this design is meant to avoid the training instability behind mode collapse in GANs, and the continuous flow is intended to cover the entire data manifold. Together with the faster convergence the authors report, this points to a potentially more robust and efficient route to diverse, realistic samples.

Holographic Encoding and the Dynamics of Generation

GenAdS uses Holographic Encoding to represent data as geometric fields in Anti-de Sitter (AdS) spacetime. Each data point is mapped to a specific configuration of a field defined on the boundary of the AdS space. In the AdS/CFT correspondence, such boundary data determines a field throughout the bulk, which has one more dimension (the radial direction) than the boundary, so the encoding extends the data into the geometry rather than discarding it. This geometric representation matters because the subsequent processing steps, including Flow Matching, operate directly on the encoded fields within the AdS manifold and can exploit the properties of that space for learning and inference.
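To make the boundary-to-bulk idea concrete, here is a minimal sketch built on a standard AdS/CFT result rather than on the paper's own code. Assumptions not taken from the source: a scalar field in Euclidean Poincaré AdS_{d+1} with metric ds² = (dz² + dx²)/z², boundary at z = 0, and a mass chosen so that ν = √(d²/4 + m²) = 1/2. For that value the Bessel-function solution that stays regular in the interior reduces to φ(z, k) = φ₀(k) z^{(d-1)/2} e^{-|k|z}, so each boundary Fourier mode φ₀(k) decays into the bulk at a rate set by its wavenumber. The names `lift_to_bulk` and `bulk_slice` are hypothetical.

```python
import numpy as np

def lift_to_bulk(phi0_k, k, z, d):
    """Extend boundary Fourier modes phi0(k) to radial depth z in Euclidean AdS_{d+1}.

    Assumes a scalar field with nu = 1/2 (mass^2 = 1/4 - d^2/4 in AdS units), for which
    the interior-regular Bessel solution z^{d/2} K_{1/2}(|k| z), normalized so that
    phi -> z^{(d-1)/2} phi0 near the boundary, is exactly z^{(d-1)/2} exp(-|k| z).
    """
    return phi0_k * z ** ((d - 1) / 2) * np.exp(-np.abs(k) * z)

def bulk_slice(signal, z):
    """Field at depth z for a periodic 1D boundary signal (d = 1: massless scalar in AdS_2)."""
    k = 2 * np.pi * np.fft.fftfreq(signal.shape[0])   # angular wavenumbers, unit grid spacing
    phi0_k = np.fft.fft(signal)                         # boundary data in Fourier space
    return np.fft.ifft(lift_to_bulk(phi0_k, k, z, d=1)).real

rng = np.random.default_rng(0)
boundary = rng.standard_normal(64)
for depth in (0.0, 0.5, 2.0):
    # Deeper slices keep the mean (the k = 0 mode) but suppress short-wavelength detail.
    print(depth, bulk_slice(boundary, depth).std())
```

Read this way, deeper radial slices act as progressively stronger low-pass filters of the boundary data, which matches the usual holographic picture of the radial direction as a scale.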

Spectral Point Encoding represents data as a discrete set of coefficients within a Fourier basis, effectively transforming data points from their original spatial or temporal domain into the frequency domain. This conversion is achieved by evaluating the data at a specific set of spectral points, and then performing a Discrete Fourier Transform (DFT) to obtain the f(k) coefficients which represent the amplitude and phase of each frequency component k. Utilizing frequency-domain representation allows for several computational advantages, including efficient convolution operations and filtering, as well as reduced dimensionality through the truncation of high-frequency components with negligible energy. This approach is particularly beneficial for processing high-dimensional data, as it leverages the sparsity often present in the frequency spectrum, enabling faster and more memory-efficient computations compared to processing data directly in its original domain.
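A minimal NumPy sketch of this kind of spectral encoding follows. It assumes a square truncation window that keeps integer frequency indices with |k_x|, |k_y| ≤ `keep` (choose `keep` below half the image size so the window stays symmetric); the window shape, the `keep` parameter, and the function names are hypothetical, and the paper's actual choice of spectral points may differ.

```python
import numpy as np

def spectral_encode(image, keep):
    """Keep the 2D DFT coefficients f(k) whose integer frequency indices satisfy |kx|, |ky| <= keep."""
    f_k = np.fft.fft2(image)
    kx = np.fft.fftfreq(image.shape[0]) * image.shape[0]   # 0, 1, ..., -1 as integer-valued floats
    ky = np.fft.fftfreq(image.shape[1]) * image.shape[1]
    mask = (np.abs(kx)[:, None] <= keep) & (np.abs(ky)[None, :] <= keep)
    return f_k[mask], mask          # compact coefficient vector plus the positions it came from

def spectral_decode(coeffs, mask):
    """Scatter the kept coefficients back into a full spectrum and invert the DFT."""
    f_k = np.zeros(mask.shape, dtype=complex)
    f_k[mask] = coeffs
    return np.fft.ifft2(f_k).real

n = 28
grid = np.arange(n)
image = np.outer(np.sin(2 * np.pi * 3 * grid / n), np.cos(2 * np.pi * 2 * grid / n))
coeffs, mask = spectral_encode(image, keep=5)
print(coeffs.size)                                           # 121 of 784 coefficients
print(np.allclose(spectral_decode(coeffs, mask), image))     # True: the image is band-limited
```

The test image contains only frequencies ±3 and ±2, so 121 coefficients reconstruct it exactly; for real data, truncation discards whatever energy lies outside the window.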

Flow Matching defines an ordinary differential equation (ODE) whose time-dependent velocity field continuously deforms a simple, known distribution (typically a Gaussian) into the target data distribution. The model learns this velocity field by regressing on the velocities of prescribed sample paths between the two distributions; new samples are then generated by drawing from the known distribution and integrating the ODE toward the data end. In the GenAdS framework, the ODE acts on the geometric fields that encode the data, so the underlying data distribution is learned through the dynamics of these fields in AdS spacetime. Training needs neither an adversarial game, as in GANs, nor simulation of the ODE, only a regression loss, and this is a large part of the method's efficiency.
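The training-and-sampling loop can be illustrated on a one-dimensional toy problem. This is a generic flow matching sketch, not GenAdS: it works on raw scalars instead of holographically encoded fields, uses the linear path x_t = (1 - t) x_0 + t x_1 with independently drawn noise x_0 and data x_1 (t = 0 is noise, t = 1 is data), and replaces the neural network with an affine least-squares fit at each time step. The affine model suffices here only because, for a Gaussian source and a Gaussian target, the exact marginal velocity field is affine in x. All function names and parameters are hypothetical.

```python
import numpy as np

def fit_affine_velocity(x0, x1, t):
    """Least-squares fit of u(x, t) = w * x + c at a fixed time t (flow matching regression)."""
    xt = (1.0 - t) * x0 + t * x1               # point on the linear path at time t
    target = x1 - x0                            # conditional velocity d(x_t)/dt
    design = np.stack([xt, np.ones_like(xt)], axis=1)
    (w, c), *_ = np.linalg.lstsq(design, target, rcond=None)
    return w, c

def train_and_sample(mu, sigma, n_steps=100, n_train=200_000, n_samples=100_000, seed=0):
    rng = np.random.default_rng(seed)
    x0 = rng.standard_normal(n_train)                 # source samples: N(0, 1)
    x1 = mu + sigma * rng.standard_normal(n_train)    # toy "data": N(mu, sigma^2)
    dt = 1.0 / n_steps
    # "Training": one regression per Euler step, evaluated at the step midpoint.
    velocity = [fit_affine_velocity(x0, x1, (i + 0.5) * dt) for i in range(n_steps)]
    # Sampling: draw fresh noise and integrate dx/dt = u(x, t) from t = 0 to t = 1.
    x = rng.standard_normal(n_samples)
    for w, c in velocity:
        x = x + dt * (w * x + c)
    return x

samples = train_and_sample(mu=3.0, sigma=0.5)
print(samples.mean(), samples.std())   # close to 3.0 and 0.5
```

Note that the ODE is never simulated during training; integration happens only at sampling time, which is the property that separates flow matching from earlier continuous normalizing flows.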

Path Selection and Empirical Validation

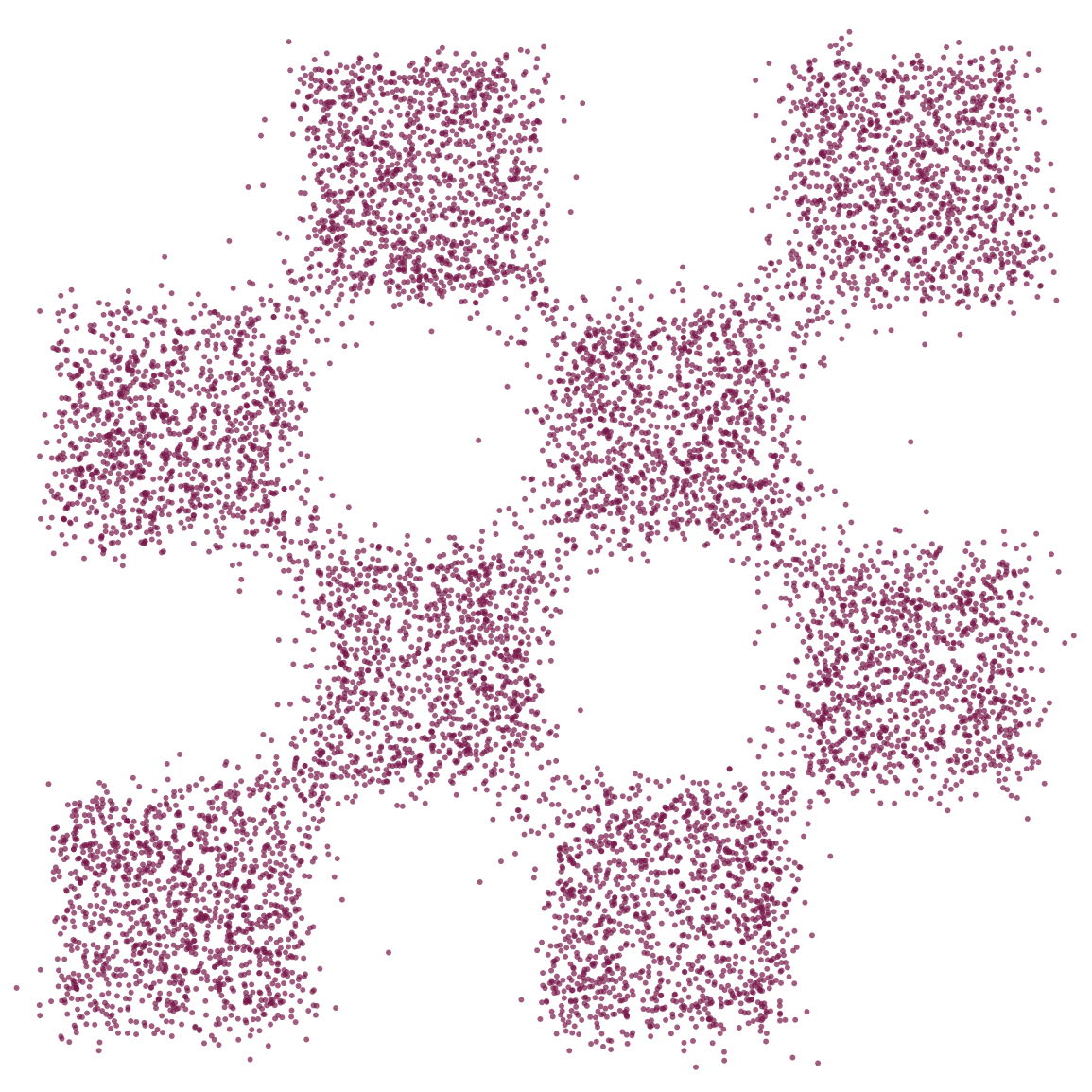

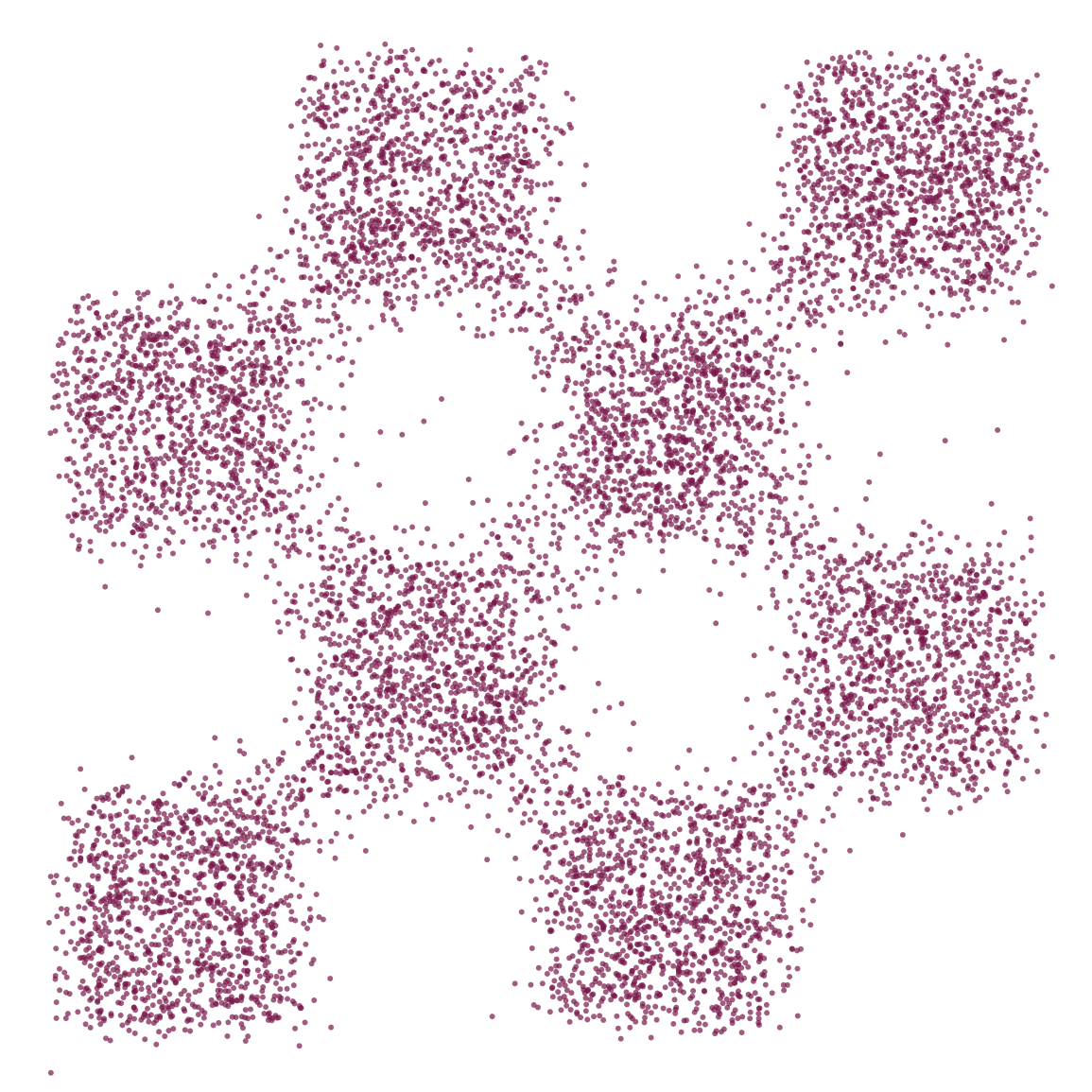

Within the flow matching framework, the generative model is shaped by the chosen path, the interpolation that carries samples from the noise distribution to the data distribution. Two paths were investigated: a Linear Path, which moves at a constant rate along a straight line, and a Hermite Path, which uses higher-order (Hermite) polynomial interpolation. Because Hermite interpolation also matches prescribed velocities at the endpoints, the Hermite Path gives more control over the generative process and may accelerate convergence during training. Each path determines the velocity field used to map noise to data, and therefore affects both the quality and the speed of sample generation.
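The two paths can be written down concretely. This sketch assumes the standard cubic Hermite basis; how GenAdS chooses the endpoint velocities `v0` and `v1` is not stated in the text, so they are left as free, hypothetical inputs. In flow matching, the velocity returned at time t is the regression target for the network.

```python
import numpy as np

def linear_path(x0, x1, t):
    """Position and velocity on the straight line from x0 (t = 0) to x1 (t = 1)."""
    return (1 - t) * x0 + t * x1, x1 - x0

def hermite_path(x0, x1, v0, v1, t):
    """Cubic Hermite path matching positions x0, x1 and velocities v0, v1 at t = 0 and t = 1."""
    # Cubic Hermite basis polynomials and their time derivatives
    h00, h10 = 2 * t**3 - 3 * t**2 + 1, t**3 - 2 * t**2 + t
    h01, h11 = -2 * t**3 + 3 * t**2, t**3 - t**2
    dh00, dh10 = 6 * t**2 - 6 * t, 3 * t**2 - 4 * t + 1
    dh01, dh11 = -6 * t**2 + 6 * t, 3 * t**2 - 2 * t
    x = h00 * x0 + h10 * v0 + h01 * x1 + h11 * v1
    v = dh00 * x0 + dh10 * v0 + dh01 * x1 + dh11 * v1
    return x, v

x0, x1 = np.array([0.0, 1.0]), np.array([2.0, -1.0])   # e.g. a noise sample and a data sample
v0, v1 = np.array([1.0, 0.0]), np.array([0.0, 3.0])    # hypothetical endpoint velocities
print(hermite_path(x0, x1, v0, v1, 0.5))
```

Setting `v0 = v1 = x1 - x0` reduces the Hermite path exactly to the linear one, so the linear path is a special case and the extra freedom lies entirely in the endpoint velocities.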

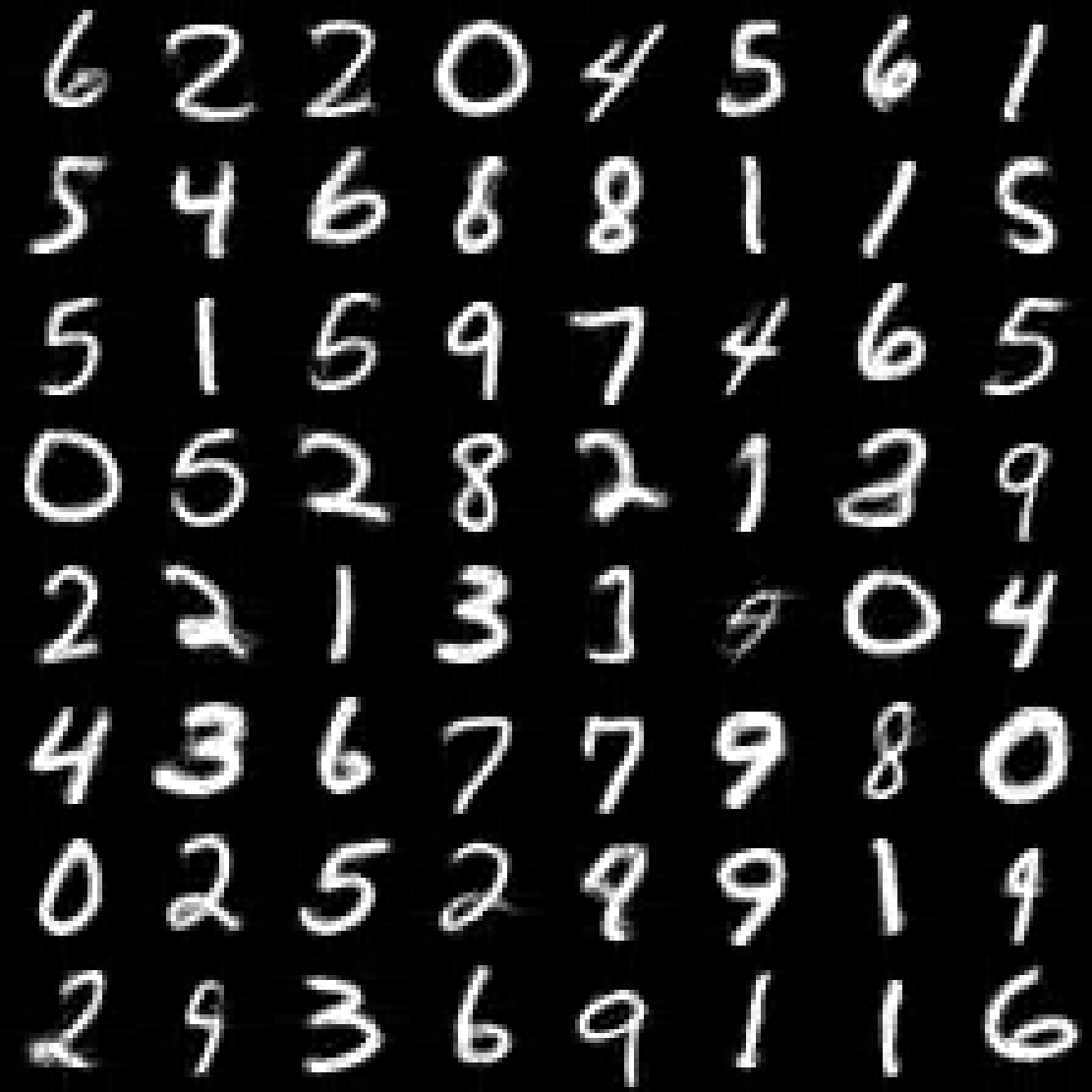

For empirical testing, GenAdS was paired with a Convolutional Neural Network (CNN) architecture. Models were trained and evaluated on two datasets: a synthetic CheckerboardDataset for initial performance assessment and the widely used MNISTDataset of handwritten digits as a standard benchmark. Using both a simple and a more complex data distribution makes it possible to compare model behavior across levels of difficulty, and performance metrics were collected on both.
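The text does not specify how the CheckerboardDataset is constructed. A common version of this 2D toy distribution, sketched below under that assumption, places probability mass uniformly on alternating squares of a grid; its sharp edges and disconnected support make it a quick check of whether a model covers every mode. The function name and parameters are hypothetical.

```python
import numpy as np

def sample_checkerboard(n, cells=4, seed=None):
    """Draw n points uniformly from the 'black' squares of a cells x cells board on [-cells/2, cells/2)^2.

    Assumes an even number of cells per side; a point is 'black' when floor(x) + floor(y) is even.
    """
    rng = np.random.default_rng(seed)
    half = cells // 2
    x = rng.uniform(-half, half, size=n)
    col = np.floor(x).astype(int)
    # Pick a row with the same parity as the column so that col + row is even.
    row = rng.integers(0, half, size=n) * 2 - half + (col % 2)
    y = row + rng.uniform(0.0, 1.0, size=n)
    return np.stack([x, y], axis=1)

points = sample_checkerboard(10_000, seed=0)
print(points[:3])
```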

Training on the CheckerboardDataset converged faster in the initial epochs than training on the MNISTDataset. Despite the different convergence rates, models on both datasets reached comparable Wasserstein Edit Distance (WED) values, indicating similar generative performance. Image quality on the MNISTDataset was additionally measured with the Fréchet Inception Distance (FID). Parameter counts were nearly identical across all trained models, regardless of path, ranging from 13,448,514 to 13,449,668, so differences between paths cannot be attributed to model size.
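FID compares the mean and covariance of real and generated images in the feature space of a pretrained Inception network: FID = ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}). The sketch below implements that formula with NumPy only. It assumes the features have already been extracted (the Inception model is not included), and the function names are hypothetical rather than taken from any FID library.

```python
import numpy as np

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between the Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    # Tr((S1 S2)^{1/2}) is the sum of square roots of the eigenvalues of S1 S2, which are
    # real and non-negative for symmetric positive semi-definite S1, S2 (clip round-off).
    eig = np.linalg.eigvals(sigma1 @ sigma2)
    tr_sqrt = np.sum(np.sqrt(np.clip(eig.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)

def fid_from_features(feats_real, feats_gen):
    """Rows are samples, columns are feature dimensions (e.g. Inception pool features)."""
    mu1, mu2 = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    s1 = np.cov(feats_real, rowvar=False)
    s2 = np.cov(feats_gen, rowvar=False)
    return frechet_distance(mu1, s1, mu2, s2)

# Identical covariances, means 5 apart: the distance is ||mu1 - mu2||^2 = 25.
print(frechet_distance(np.zeros(2), np.eye(2), np.array([3.0, 4.0]), np.eye(2)))
```

Lower is better, and identical feature sets give zero; reported FID values depend on the specific Inception weights and preprocessing, so this sketch is for intuition rather than for reproducing published numbers.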

Geometric Generative Modeling: Implications and Future Horizons

GenAdS is an attempt to combine machine learning with principles from theoretical physics. It draws on the AdS/CFT correspondence, a conjectured duality between gravity in Anti-de Sitter (AdS) space and conformal field theories (CFTs), to build a novel generative model. In this picture, data generation becomes analogous to exploring the geometry of an AdS space, with complex data distributions mapped to geometric properties. The reported results suggest that geometric principles, so far largely confined to fundamental physics, can serve as a useful design principle for generative models of complex and nuanced data, and they point toward using the language of geometry and gravity more broadly in artificial intelligence and data science.

GenAdS also makes use of hyperscaling-violating geometry (HSVGeometry), which broadens the kinds of structure it can represent. Whereas conventional generative models typically work in Euclidean spaces, HSVGeometry lets GenAdS model data with non-Euclidean properties, where distance and shape do not follow standard Euclidean rules. This could be relevant for data from complex systems whose correlations are not set by spatial proximity alone; candidate examples include turbulent flows, critical phenomena in physics, and high-dimensional financial data, although the experiments described here cover only the CheckerboardDataset and MNISTDataset. The key property of HSVGeometry is that it controls how correlations change with scale, which offers a mechanism for tuning the complexity and structure of generated outputs.
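For reference, hyperscaling-violating geometries are commonly written in the form below, with d spatial boundary dimensions, a dynamical exponent z, and a hyperscaling-violation exponent θ. This is the standard parametrization from the holography literature; whether GenAdS uses exactly this form, or a Euclidean slice of it, is an assumption. Here z is a scaling exponent, not a radial coordinate.

```latex
ds^2 = r^{-2(d-\theta)/d}\left(-r^{-2(z-1)}\,dt^2 + dr^2 + dx_i\,dx_i\right), \qquad i = 1,\dots,d

% Under t \to \lambda^{z} t,\; x_i \to \lambda x_i,\; r \to \lambda r, the line element is covariant, not invariant:
ds \;\to\; \lambda^{\theta/d}\, ds

% Setting z = 1 and \theta = 0 recovers AdS_{d+2} in Poincare coordinates:
ds^2 = \frac{-dt^2 + dr^2 + dx_i\,dx_i}{r^2}
```

The exponent θ measures the departure from scale invariance: distances pick up the factor λ^{θ/d} under rescaling, which is the precise sense in which the geometry modulates the relationship between scale and correlation.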

Future work on GenAdS aims to extend the framework to more intricate data types, such as video and audio. At the same time, its grounding in the AdS/CFT correspondence opens a path back to theoretical physics: the researchers intend to use GenAdS as a computational tool for studying strongly correlated systems and, potentially, for testing hypotheses related to quantum gravity, bridging machine learning and the frontiers of physics. This dual focus could advance generative modeling while offering a new approach to long-standing problems in theoretical science.

The pursuit of generative models, as demonstrated in this work with GenAdS, necessitates a holistic understanding of underlying structures. The paper’s innovative application of AdS/CFT correspondence to flow matching highlights the importance of geometric principles in shaping data generation. This echoes Grace Hopper’s sentiment: “It’s easier to ask forgiveness than it is to get permission.” Just as Hopper advocated for iterative progress over rigid adherence to established norms, GenAdS embraces a flexible framework, evolving the generative process by leveraging the inherent geometry and physical constraints, rather than attempting to impose a pre-defined structure. The model’s capacity to encode information within non-Euclidean spaces mirrors a willingness to explore unconventional paths toward efficient and meaningful data representation.

Beyond the Horizon

The presented framework, while demonstrating a compelling marriage of geometric intuition and generative power, ultimately highlights how little is truly understood about the underlying structure of these models. If the system survives on duct tape – patching together neural networks with borrowed physics – it’s probably overengineered. The true test isn’t replicating data distributions, but uncovering the minimal sufficient structure required for robust generalization. The current reliance on established flow matching techniques, while effective, feels akin to building a cathedral on a shack; the foundation is not yet commensurate with the ambition.

Future work must address the inherent limitations of translating between the continuous elegance of AdS/CFT and the discrete approximations of neural networks. Modularity, without a clear understanding of how components interact within the holographic encoding, is an illusion of control. The exploration of alternative geometries, beyond the readily accessible hyperbolic spaces, promises a richer landscape for data representation, but demands a corresponding increase in theoretical rigor.

The ultimate aspiration, however, extends beyond improved generative performance. This line of inquiry compels a reassessment of what constitutes ‘understanding’ in machine learning. Can physical principles genuinely constrain the solution space, or are they merely another set of inductive biases? The answer, likely, lies not in forcing a square peg into a round hole, but in recognizing that the most powerful models will emerge from structures where geometry, physics, and computation are inextricably linked.

Original article: https://arxiv.org/pdf/2601.22033.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-30 20:53