Author: Denis Avetisyan

Researchers have released a comprehensive suite of environments to rigorously evaluate algorithms designed for complex, large-scale multi-agent systems.

Bench-MFG provides standardized tools and metrics for assessing performance in stationary Mean Field Games, promoting reproducible research and robust algorithm development.

Despite recent advances in multi-agent reinforcement learning, a standardized evaluation framework for algorithms tackling large-scale systems remains a critical gap. To address this, we introduce Bench-MFG: A Benchmark Suite for Learning in Stationary Mean Field Games, a comprehensive toolkit designed to rigorously assess performance across a diverse set of MFG environments. This suite features a novel taxonomy of problem classes, a method for generating statistically robust instances (MF-Garnets), and a suite of benchmark algorithms, including a new exploitability-minimizing approach (MF-PSO). By providing standardized environments and metrics, can Bench-MFG accelerate progress and foster more reproducible research in this rapidly evolving field?

Navigating Complexity: The Rise of Mean Field Games

The complexity inherent in modeling systems comprised of a multitude of interacting agents-be it flocks of birds, schools of fish, or economic actors-quickly overwhelms computational resources. Each agent’s decision influences, and is influenced by, every other agent, creating a web of dependencies that scales exponentially with population size. This phenomenon, often referred to as the ‘curse of dimensionality’, renders traditional, agent-by-agent simulations intractable for even moderately large groups. Consequently, researchers face a critical challenge: how to accurately represent the collective dynamics of these systems without being limited by the prohibitive computational cost of tracking individual interactions. Capturing emergent behaviors, such as collective motion or market trends, necessitates innovative approaches that can effectively handle this inherent complexity and provide insights into the system’s overall behavior.

As the number of interacting agents in a modeled system increases – be it financial traders, self-driving cars, or animal flocks – computational demands escalate dramatically, a phenomenon known as the ‘curse of dimensionality’. This challenge arises because the state space grows exponentially with each additional agent; tracking and predicting the behavior of every individual and their interactions quickly becomes intractable. For instance, simulating even a moderately sized population requires considering N agents, each potentially influencing and being influenced by the others, leading to a computational complexity that scales poorly with N. Traditional methods, reliant on discrete representations of each agent’s state and interactions, find their processing power overwhelmed, rendering accurate predictions impossible beyond relatively small population sizes. This limitation motivated the search for approximation techniques capable of capturing collective behavior without explicitly modeling every individual interaction.

Mean Field Game (MFG) theory provides a compelling approach to understanding systems comprising a vast number of interacting agents by shifting from tracking individual behaviors to modeling the collective impact of the population. Instead of attempting to solve for each agent’s optimal strategy in isolation, MFG approximates the influence of others through an average, or “mean field,” effectively reducing the complexity of the problem. This continuum approximation allows researchers to represent the population’s overall behavior as a continuous density function, transforming a discrete, high-dimensional problem into a more tractable continuous one. Consequently, MFG enables the analysis of phenomena previously inaccessible due to computational limitations, offering insights into diverse scenarios ranging from financial markets and crowd dynamics to urban planning and even biological systems – all by focusing on how an individual perceives and reacts to the aggregated actions of the larger group.

The emergence of Mean Field Game theory as a dominant approach to modeling complex systems with numerous interacting agents is deeply rooted in the foundational work of several key researchers. Pioneering contributions from Huang, Lasry, and Lions in the late 20th and early 21st centuries established the mathematical framework necessary to transition from discrete, agent-based modeling to a continuum approximation. Their collaborative efforts demonstrated that under certain conditions, the behavior of a large population can be effectively described by the average, or ‘mean field’, exerted by all other players. This innovative perspective allowed for the derivation of tractable equations governing population dynamics, circumventing the computational intractability previously imposed by the ‘curse of dimensionality’. By framing the problem as a dynamic system influenced by this aggregate behavior, these researchers not only provided a rigorous theoretical basis for MFG, but also opened new avenues for analyzing scenarios ranging from traffic flow and financial markets to evolutionary biology and collective decision-making.

Decoding Collective Behavior: Algorithmic Foundations

Iterative methods are commonly employed to determine solutions for Mean Field Games (MFGs) due to the inherent complexity of the coupled system of equations. Fixed Point Iteration involves repeatedly applying a contraction mapping until convergence to a stable equilibrium, while Policy Iteration alternates between policy evaluation – determining the value function given a current policy – and policy improvement – updating the policy to maximize expected rewards. Both methods rely on approximations and discretizations of the continuous state and action spaces, and their convergence rates are influenced by factors such as the step size used in the iterations and the properties of the underlying MFG model. The computational cost associated with these iterative processes can be significant, particularly in high-dimensional problems, necessitating the use of efficient numerical schemes and parallelization techniques.

Iterative algorithms like Fixed Point Iteration and Policy Iteration, while easily understood in principle, exhibit computational complexity that scales poorly with problem dimensionality and the size of the state and action spaces. Specifically, each iteration may require solving optimization problems or evaluating expected values across these spaces, leading to a significant increase in processing time and memory requirements. The computational burden is exacerbated in scenarios involving continuous state or action spaces, necessitating the use of approximation techniques or high-performance computing resources. For large-scale MFGs, the number of iterations required for convergence can also contribute substantially to the overall computational cost, making real-time or near-real-time solutions challenging to achieve.

Online Mirror Descent and Fictitious Play represent iterative solution methods for Mean Field Games (MFGs) that are well-suited for dynamic and online learning scenarios. Unlike traditional methods requiring complete knowledge of the game and simultaneous updates, these algorithms allow for sequential learning and adaptation as new information becomes available. Online Mirror Descent employs a projection step utilizing Bregman divergences to ensure stability and handle non-Euclidean spaces, while Fictitious Play iteratively best-responds to the empirical distribution of other players’ strategies. Both methods are particularly advantageous in contexts where the environment or player populations are evolving, offering reduced computational complexity compared to solving a fully-defined, static MFG, and enabling real-time decision-making based on observed interactions.

The practical implementation of Mean Field Games (MFGs) hinges on computational efficiency due to the high dimensionality and complexity inherent in modeling large populations of interacting agents. While MFGs provide a theoretically sound framework for analyzing such systems, applying them to real-world problems – including traffic flow optimization, financial modeling, and resource allocation – demands algorithms capable of delivering solutions within reasonable timeframes and resource constraints. Inefficient solution methods can render even well-formulated MFG models unusable for timely decision-making or real-time control, necessitating the development and refinement of scalable algorithms and approximation techniques to bridge the gap between theoretical frameworks and practical applications.

Engineering Robustness: Optimizing for Efficiency

In competitive multi-agent environments, minimizing exploitability is critical for ensuring strategic resilience. Exploitability, in this context, refers to the degree to which an agent’s strategy can be predicted and subsequently countered by an opponent to achieve a favorable outcome. High exploitability indicates predictable behavior, allowing opponents to consistently capitalize on those patterns. Conversely, a low exploitability score signifies a strategy that is difficult to anticipate, forcing opponents to rely on less effective, generalized responses. Therefore, agents designed for competitive scenarios prioritize minimizing exploitability to maintain consistent performance against adaptive opponents and prevent predictable losses.

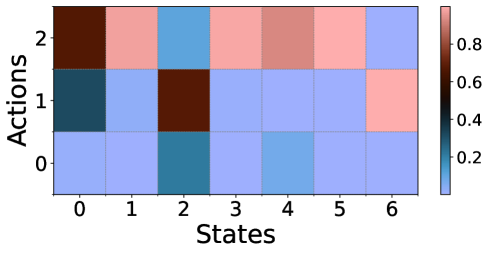

MF-PSO (Multi-Function Particle Swarm Optimization) is a black-box optimization technique developed for minimizing exploitability within Multi-Agent Reinforcement Learning (MARL) frameworks, specifically in the context of Multi-Function Games (MFGs). Unlike gradient-based methods, MF-PSO does not require knowledge of the underlying game dynamics or access to internal game states; it operates by treating the MFG environment as a non-differentiable function. This is achieved through a particle swarm approach where a population of policies is evolved, with each policy’s performance evaluated based on its resistance to exploitation by opponent strategies. The algorithm iteratively adjusts the population’s parameters, guided by the best-performing policies, to converge on strategies that are robust against predictable opponent behavior and thus minimize exploitability.

The implementation of the Bench-MFG suite, coupled with the MF-PSO optimization method, results in a demonstrated 2000x speedup when compared to established classical open-source algorithms for Multi-Agent Reinforcement Learning (MARL) tasks. This performance gain was achieved through a combination of optimized code architecture within Bench-MFG and the efficient black-box optimization capabilities of MF-PSO. Empirical results indicate a substantial reduction in computational time required to train and evaluate agents, enabling more extensive experimentation and faster iteration on MARL strategies, particularly in complex game environments.

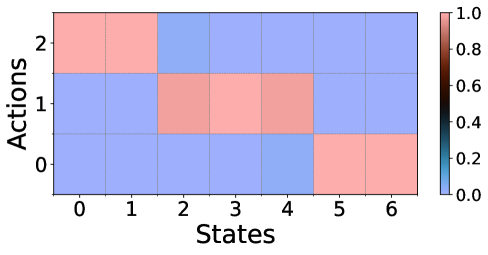

The Bench-MFG suite facilitates a granular comparison of multi-agent reinforcement learning algorithms by providing a standardized testing environment across five distinct game classes: No-Interaction, Contractive, Linear-Quadratic (LL), Potential, and Dynamics-Coupled games. This categorization allows researchers to assess algorithm performance – including metrics such as convergence speed and final reward – and, crucially, to quantify exploitability within each game structure. By providing consistent evaluation across these classes, Bench-MFG enables statistically significant comparisons, identifying which algorithms exhibit greater robustness and resistance to exploitation under specific game-theoretic conditions, and revealing performance trade-offs between different algorithmic approaches.

Expanding the Horizon: Applications and Impact

Mean Field Games (MFGs) move beyond traditional game theory by accommodating dynamic environments where the probability of an agent’s state transitioning changes based on the overall population distribution. This represents a significant departure from static game models, enabling the analysis of systems where agents’ actions collectively influence the landscape itself. Rather than assuming a fixed set of possibilities, MFGs allow transition probabilities to become state-dependent and population-aware – for example, in a foraging scenario, the availability of resources diminishes as more agents converge on the same location, altering the future prospects for all. This dynamic coupling introduces a feedback loop between individual behavior and the collective state, creating a richer and more realistic modeling framework suitable for complex systems exhibiting emergent behavior and strategic interactions at scale.

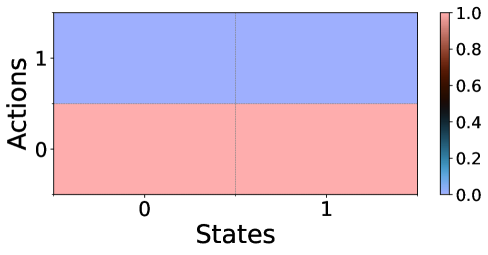

The versatility of Mean Field Games extends beyond simple interactions, incorporating specialized frameworks like No-Interaction MFGs and Contractive MFGs to precisely capture varying degrees of agent dependence. No-Interaction MFGs model scenarios where agents act independently, simplifying analysis and providing a baseline for comparison, while Contractive MFGs account for diminishing interaction effects as the population scales-a common feature in many real-world systems. This ability to modulate agent interdependence isn’t merely a theoretical refinement; it allows researchers to tailor the model’s complexity to the specific dynamics of the system under investigation, offering a powerful tool for analyzing scenarios ranging from completely independent actors to strongly coupled collectives and expanding the scope of MFG applications to a wider array of multi-agent problems.

The recent refinements to Mean Field Game (MFG) theory are dramatically broadening its practical reach, most notably into the domain of Multi-Agent Reinforcement Learning (MARL). Traditionally, MARL algorithms struggle with the curse of dimensionality as the number of agents increases; however, MFGs offer a powerful abstraction by representing the complex interactions between numerous agents with a single representative agent facing an average field. This simplification allows for scalable learning algorithms and facilitates the analysis of complex, decentralized systems. Beyond MARL, the flexibility of MFGs suggests potential applications in areas such as robotics swarms, economic modeling, and even social dynamics, where understanding and predicting collective behavior is paramount. Researchers are actively exploring how to leverage the MFG framework to address challenges in these diverse fields, paving the way for innovative solutions to complex, multi-agent problems.

Mean Field Games offer a compelling simplification of incredibly complex systems involving numerous interacting agents, effectively transforming a problem of immense dimensionality into one that is computationally tractable. This powerful abstraction allows researchers and engineers to model and analyze large-scale multi-agent systems-from robotic swarms and autonomous vehicles to financial markets and even social networks-with unprecedented detail. By focusing on the average behavior of agents rather than tracking each individual, MFGs facilitate the design of decentralized control strategies and the prediction of emergent phenomena. Consequently, this framework is not merely a theoretical tool, but a catalyst for innovation with potential applications extending far beyond its origins in game theory and economics, promising advancements in artificial intelligence, robotics, and various other scientific disciplines.

The development of Bench-MFG underscores a crucial tenet of systems design: structure dictates behavior. This benchmark suite isn’t merely a collection of environments; it’s a carefully constructed framework intended to reveal the strengths and weaknesses of various algorithms tackling stationary Mean Field Games. As Alan Turing observed, “Sometimes people who are unhappy tend to look at the world as hostile.” Similarly, without standardized evaluation tools like Bench-MFG, the multi-agent systems research landscape risks being dominated by algorithms that appear successful within limited contexts, obscuring genuine progress. The suite’s emphasis on exploitability minimization and reproducible results reflects a dedication to identifying robust solutions, moving beyond superficial performance gains to reveal underlying algorithmic qualities. It’s a clear example of how rigorous structure facilitates meaningful discovery.

The Road Ahead

Bench-MFG offers a standardized foundation, a necessary, if often overlooked, step. The suite itself does not solve the inherent difficulties of mean field game algorithms – it merely clarifies where those difficulties lie. A robust score on these benchmarks doesn’t guarantee generalizability, of course. Indeed, if an algorithm appears to excel across this suite, one should immediately suspect over-fitting to the specific structures embedded within the environments. The art, as always, is choosing what to sacrifice – computational efficiency for exploitability, perhaps, or simplicity for accuracy.

Future work will inevitably focus on expanding the suite’s coverage of game dynamics. Current benchmarks largely assume stationary distributions, a convenient simplification. However, real-world multi-agent systems are rarely static. Introducing time-varying environments, incomplete information, and more complex agent interactions will reveal the brittleness of existing approaches. Exploitability minimization, while a valuable metric, remains a moving target; a truly robust algorithm must anticipate, rather than merely react to, adversarial strategies.

Ultimately, the value of Bench-MFG rests not in providing definitive answers, but in sharpening the questions. The field requires a shift in emphasis: less focus on achieving state-of-the-art performance on isolated tasks, and more on understanding the fundamental limitations of learning in complex, competitive systems. If the system looks clever, it’s probably fragile.

Original article: https://arxiv.org/pdf/2602.12517.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- 2025 Crypto Wallets: Secure, Smart, and Surprisingly Simple!

- Gold Rate Forecast

- Wuchang Fallen Feathers Save File Location on PC

- Where to Change Hair Color in Where Winds Meet

- Brown Dust 2 Mirror Wars (PvP) Tier List – July 2025

- All weapons in Wuchang Fallen Feathers

- Top 15 Celebrities in Music Videos

- Here Are the Best TV Shows to Stream this Weekend on Paramount+, Including ‘48 Hours’

- Macaulay Culkin Finally Returns as Kevin in ‘Home Alone’ Revival

- HSR 3.7 breaks Hidden Passages, so here’s a workaround

2026-02-17 01:22