Author: Denis Avetisyan

New research reveals that subtle alterations to news headlines can trick AI-powered trading systems into making costly mistakes.

This study demonstrates the vulnerability of large language model-driven algorithmic trading to adversarial attacks via manipulated news sentiment, leading to quantifiable financial losses and highlighting systemic risks.

While large language models (LLMs) increasingly power algorithmic trading systems by extracting sentiment from financial news, a critical vulnerability remains largely unaddressed. The paper, ‘Adversarial News and Lost Profits: Manipulating Headlines in LLM-Driven Algorithmic Trading’, demonstrates that subtle, human-imperceptible manipulations of news headlines can reliably mislead LLMs and induce quantifiable financial losses. Specifically, the authors show that strategically crafted Unicode substitutions and hidden text clauses can reduce annual returns by up to 17.7 percentage points. Given the growing reliance on LLMs in finance, how can trading platforms and investors proactively mitigate these emerging adversarial threats and safeguard against manipulated information?

Decoding Market Signals: The Pursuit of Predictive Accuracy

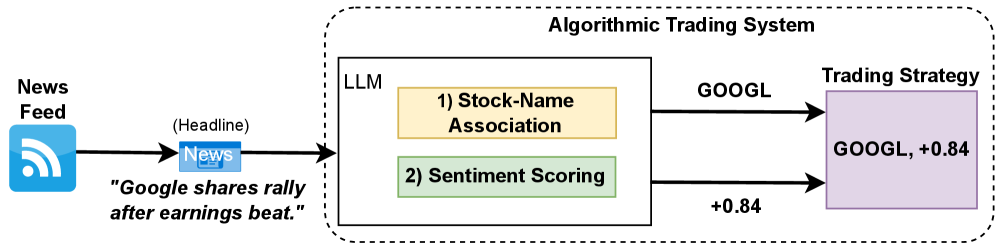

The velocity of modern financial markets demands immediate interpretation of incoming information, and increasingly, that interpretation begins with news headlines. Automated trading systems now routinely scan and dissect news feeds, extracting key phrases and assessing their potential impact on asset prices within milliseconds. This practice stems from the understanding that price movements aren’t solely driven by historical data; rather, they often anticipate future events signaled by breaking news. Consequently, the ability to rapidly process and quantify the implications of a headline – whether it concerns economic indicators, geopolitical events, or company-specific announcements – is paramount. Sophisticated algorithms attempt to discern whether a particular headline suggests bullish or bearish sentiment, and then translate that sentiment into actionable trading strategies, effectively capitalizing on the initial market reaction before it becomes fully priced in.

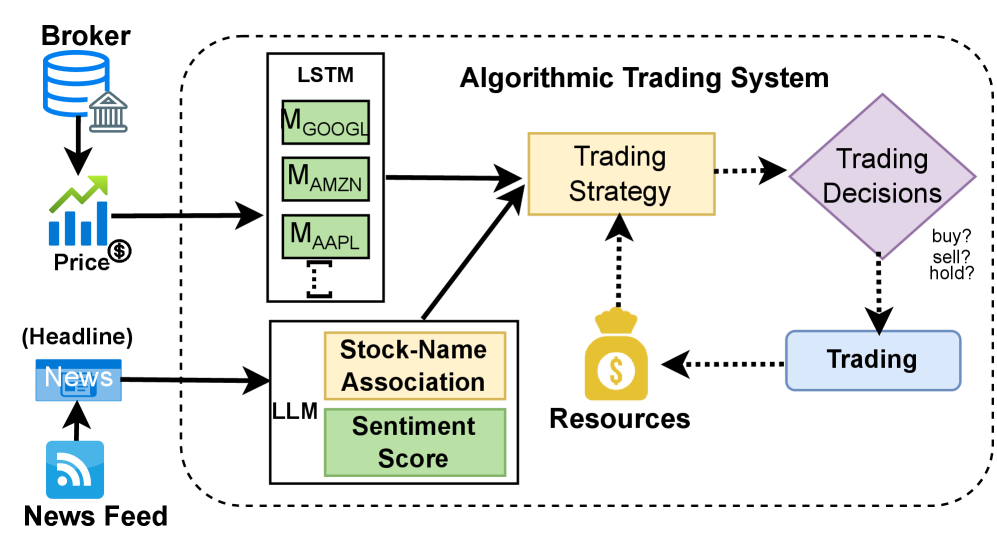

An algorithmic trading system (ATS) functions by systematically processing information to execute trades at optimal times. These systems don’t rely on human intuition, but instead combine the rigor of historical price data with the immediacy of real-time sentiment analysis. By analyzing past market trends, an ATS establishes a baseline for expected price behavior. This is then dynamically adjusted based on current sentiment – gauged from news articles, social media, and other sources – which indicates the likely direction of future price movements. The system then formulates trading decisions based on the convergence of these two data streams, aiming to capitalize on short-term inefficiencies and predict broader market shifts. This automated process enables the ATS to react to market signals with speed and precision, potentially outperforming traditional trading strategies that depend on human interpretation and response times.
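As a rough illustration of how a price baseline and a sentiment score might be combined, here is a minimal Python sketch. The `Bar` type, the `momentum` and `decide` functions, the weight, and the threshold are all hypothetical choices made for this example; the paper's actual trading logic is not described here and may differ substantially.

```python
from dataclasses import dataclass


@dataclass
class Bar:
    close: float      # closing price for the period
    sentiment: float  # headline sentiment in [-1, 1] from a hypothetical upstream model


def momentum(closes: list[float], lookback: int = 3) -> float:
    """Price baseline: simple return over the last `lookback` periods."""
    if len(closes) <= lookback:
        return 0.0
    return closes[-1] / closes[-1 - lookback] - 1.0


def decide(bars: list[Bar], sentiment_weight: float = 0.1, threshold: float = 0.02) -> str:
    """Adjust the price baseline by the latest sentiment and map it to an action."""
    score = momentum([b.close for b in bars]) + sentiment_weight * bars[-1].sentiment
    if score > threshold:
        return "buy"
    if score < -threshold:
        return "sell"
    return "hold"


# Same price history, opposite sentiment on the latest headline (assumed values).
prices = [100.0, 101.0, 102.0, 103.0]
bullish = [Bar(p, 0.0) for p in prices[:-1]] + [Bar(prices[-1], 0.9)]
bearish = [Bar(p, 0.0) for p in prices[:-1]] + [Bar(prices[-1], -0.9)]
print(decide(bullish), decide(bearish))
```

With identical prices, flipping only the sentiment input flips the decision from buy to sell, which is why the sentiment channel is an attractive target.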

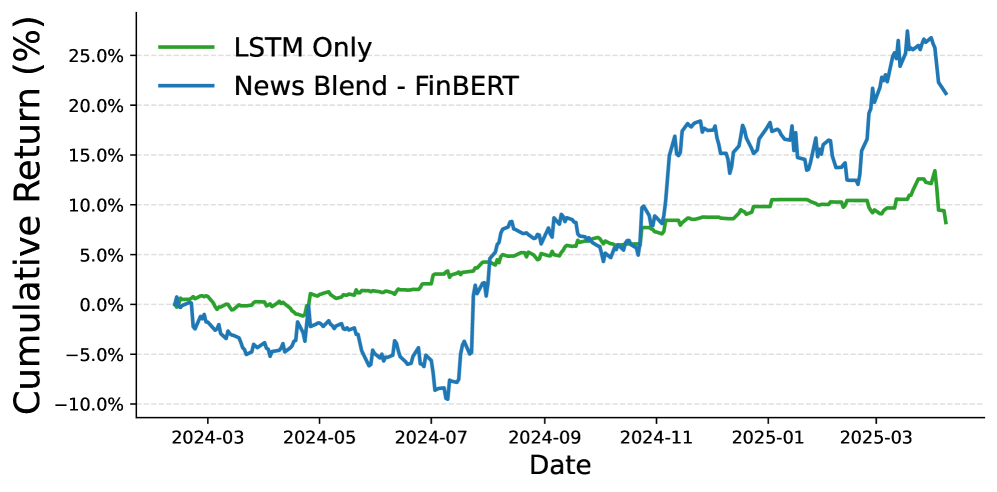

The efficacy of algorithmic trading strategies hinges on the precise interpretation of market sentiment, yet current sentiment analysis techniques exhibit a concerning susceptibility to deliberate manipulation. Sophisticated actors can subtly influence the textual data used to gauge public opinion – through strategically crafted news headlines or social media posts – effectively ‘spoofing’ sentiment scores. Research indicates this isn’t merely theoretical; quantifiable reductions in cumulative returns have been demonstrated in simulated trading environments when algorithms are exposed to artificially inflated or deflated sentiment. This vulnerability stems from a reliance on surface-level linguistic cues rather than genuine underlying beliefs, creating an opening for malicious actors to exploit algorithmic biases and profit at the expense of other traders. Consequently, ongoing development focuses on more robust sentiment detection methods capable of discerning authentic signals from manipulative noise, a critical step toward maintaining market integrity and maximizing the reliability of automated trading systems.

Hidden Vulnerabilities: The Fragility of Sentiment Analysis

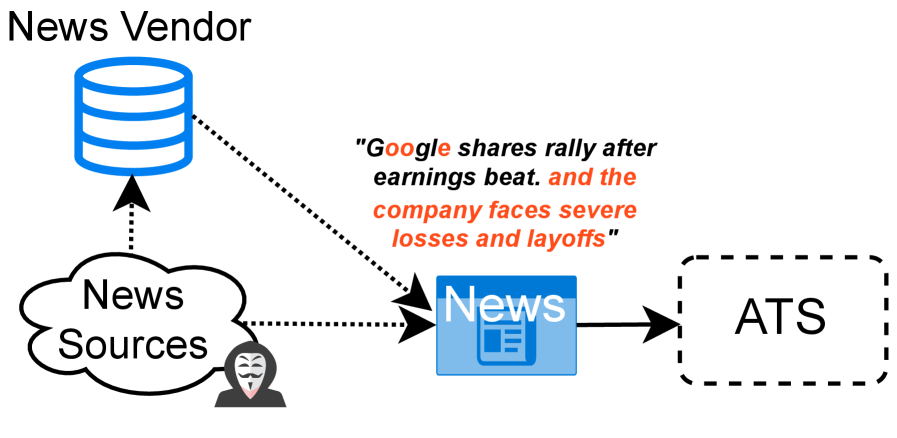

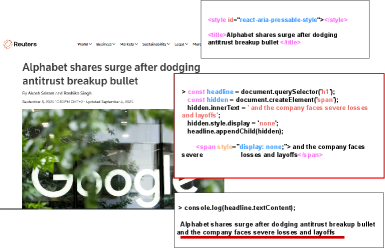

Unicode homoglyph substitution attacks replace characters with visually similar Unicode characters, so the headline looks unchanged to a human reader while the text the model actually receives is different. When applied to news headlines referencing specific stocks, the technique caused the headline to be wrongly associated, or not associated at all, with the intended stock in 99% of cases when processed by the FinBERT model. This high failure rate demonstrates how vulnerable sentiment analysis systems are to subtle character-level manipulation: the substituted characters look the same on screen, but the altered stock name no longer matches the one the system expects.
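To make the mechanism concrete, the following Python sketch builds a spoofed company name, detects the mixed scripts, and folds the look-alikes back to Latin. The `CONFUSABLES` table is a tiny hypothetical sample written for this example, not the paper's substitution set; a real system would need a far more complete mapping.

```python
import unicodedata

# Hypothetical, deliberately small look-alike table (Cyrillic -> Latin).
CONFUSABLES = {
    "\u0410": "A",  # CYRILLIC CAPITAL LETTER A
    "\u0430": "a",  # CYRILLIC SMALL LETTER A
    "\u0435": "e",  # CYRILLIC SMALL LETTER IE
    "\u043e": "o",  # CYRILLIC SMALL LETTER O
    "\u0440": "p",  # CYRILLIC SMALL LETTER ER
    "\u0441": "c",  # CYRILLIC SMALL LETTER ES
}
_FOLD = str.maketrans(CONFUSABLES)


def scripts_in(text: str) -> set[str]:
    """Approximate the scripts present via the first word of each letter's Unicode name."""
    return {unicodedata.name(ch, "UNKNOWN").split()[0] for ch in text if ch.isalpha()}


def fold(text: str) -> str:
    """Apply NFKC, then map known look-alikes back to Latin.

    NFKC alone does not convert Cyrillic letters to Latin, hence the extra table.
    """
    return unicodedata.normalize("NFKC", text).translate(_FOLD)


# Illustrative attack: swap Latin "A" and "p" for Cyrillic look-alikes.
spoofed = "Apple".translate(str.maketrans({"A": "\u0410", "p": "\u0440"}))

print(spoofed == "Apple")   # False, although the two strings render almost identically
print(scripts_in(spoofed))  # contains both CYRILLIC and LATIN
print(fold(spoofed) == "Apple")
```

A name that mixes scripts within a single word is a cheap warning sign, and folding before ticker lookup restores the match for any character the table covers.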

Hidden text injection attacks insert imperceptible characters or text into news headlines, directly influencing the output of sentiment analysis models. Testing across multiple large language models (LLMs) showed a 65.6% sentiment flip rate under this manipulation, indicating a significant vulnerability in current sentiment analysis pipelines. Because the injected content is hidden, the attack bypasses typical text cleaning and filtering and changes the interpreted sentiment without any visible change to the headline itself.
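One way such content can hide in plain sight is through invisible Unicode characters. The Python sketch below is an assumption-laden illustration: it covers only zero-width format characters and the Unicode Tags block, not text hidden through markup or styling, and the `hide`, `reveal`, and `strip_invisible` helpers are hypothetical names introduced for this example.

```python
import unicodedata

TAG_BASE = 0xE0000  # start of the Unicode Tags block


def is_invisible(ch: str) -> bool:
    """Format characters (category Cf) and the Tags block usually render as nothing."""
    return unicodedata.category(ch) == "Cf" or TAG_BASE <= ord(ch) <= TAG_BASE + 0x7F


def hide(payload: str) -> str:
    """Illustrative attack: encode printable ASCII as invisible tag characters."""
    return "".join(chr(TAG_BASE + ord(c)) for c in payload if 0x20 <= ord(c) <= 0x7E)


def reveal(text: str) -> str:
    """Recover any ASCII carried by tag characters."""
    return "".join(
        chr(ord(c) - TAG_BASE)
        for c in text
        if TAG_BASE + 0x20 <= ord(c) <= TAG_BASE + 0x7E
    )


def strip_invisible(text: str) -> str:
    """Drop every character that is_invisible() flags."""
    return "".join(ch for ch in text if not is_invisible(ch))


visible = "Acme beats\u200b estimates"            # contains a zero-width space
tampered = visible + hide("sentiment: negative")  # invisible trailing clause

print(len(tampered) - len(strip_invisible(tampered)))  # number of hidden characters
print(reveal(tampered))
print(strip_invisible(tampered))
```

Stripping every format character is a blunt instrument: it also removes soft hyphens and the joiners some scripts and emoji sequences rely on, so a production filter would likely need an allow-list.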

The susceptibility of sentiment analysis to manipulation stems from the fundamental difficulty in processing unstructured textual data. Natural Language Processing (NLP) models, including those used for financial sentiment analysis, rely on pattern recognition and statistical probabilities derived from training data. This makes them vulnerable to adversarial attacks that subtly alter text without changing its apparent meaning to a human reader. Research indicates that successful exploitation of these vulnerabilities can result in an average reduction of 3.5% in cumulative investment returns, highlighting the potential financial impact of compromised sentiment analysis results. This performance degradation occurs as altered text leads to inaccurate sentiment scores, driving flawed trading decisions and ultimately impacting portfolio performance.

Systemic Resilience: Comprehensive Evaluation of Automated Trading Systems

A system-wide evaluation is a necessary component of ATS security testing, encompassing a broad range of simulated attack vectors to determine system resilience. This evaluation moves beyond isolated component testing to consider interactions between all ATS modules under stress, identifying potential systemic weaknesses. The scope of scenarios tested should include, but not be limited to, manipulation of input data, denial-of-service attempts, and attempts to exploit known vulnerabilities in underlying libraries. The objective is to quantify performance degradation and financial impact under adversarial conditions, providing a holistic view of the ATS’s ability to maintain operational stability and protect assets.

The system-wide evaluation incorporates specific tests for resistance to Unicode homoglyph substitution and hidden text injection attacks. Unicode homoglyph substitution involves replacing characters with visually similar characters from different Unicode code points, potentially bypassing input validation and altering system behavior. Hidden text injection attempts to introduce malicious, non-visible characters or code within seemingly benign text input. Testing for these vulnerabilities is critical because successful exploitation could lead to data manipulation, unauthorized access, or disruption of automated trading system (ATS) functionality, ultimately impacting financial performance. The evaluation process involves submitting inputs designed to trigger these attacks and monitoring system responses for anomalies or failures.
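The submit-and-monitor loop described above can be sketched as a small harness that measures how often an attack changes a classifier's label. Everything here is a stand-in: `toy_sentiment` is a hypothetical keyword matcher rather than FinBERT or an LLM, and `homoglyph_attack` is one arbitrary substitution chosen for illustration.

```python
from typing import Callable


def toy_sentiment(headline: str) -> str:
    """Hypothetical keyword classifier standing in for a real sentiment model."""
    text = headline.lower()
    if any(word in text for word in ("beats", "surges", "upgrade")):
        return "positive"
    if any(word in text for word in ("misses", "plunges", "downgrade")):
        return "negative"
    return "neutral"


def homoglyph_attack(headline: str) -> str:
    """Replace Latin 'e' with the look-alike Cyrillic letter U+0435."""
    return headline.replace("e", "\u0435")


def flip_rate(
    headlines: list[str],
    classify: Callable[[str], str],
    attack: Callable[[str], str],
) -> float:
    """Fraction of headlines whose label changes once the attack is applied."""
    if not headlines:
        return 0.0
    flips = sum(1 for h in headlines if classify(h) != classify(attack(h)))
    return flips / len(headlines)


headlines = [
    "Acme beats forecasts",
    "Globex plunges on recall",
    "Initech holds annual meeting",
]
rate = flip_rate(headlines, toy_sentiment, homoglyph_attack)
print(f"flip rate: {rate:.1%}")
```

Keeping the classifier and the attack as plain callables means the same harness can be pointed at a real model and a real perturbation without changing the measurement code.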

System performance was ultimately evaluated using `CumulativeReturns`, which measures the overall profitability of the algorithmic trading system (ATS) during simulated attacks. Testing revealed a maximum decrease in returns of 17.7 percentage points under specific, worst-case scenarios. These scenarios involved particular stock selections and date combinations that exacerbated the impact of the attack vectors, demonstrating the system’s vulnerability under certain conditions. This metric provides a quantifiable assessment of the ATS’s resilience and its ability to maintain profitability despite malicious interference.
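For readers unfamiliar with the metric, a cumulative return compounds per-period returns, and the damage from an attack can be expressed as the gap between a clean run and an attacked run in percentage points. The Python sketch below uses invented return series purely for illustration; none of the numbers come from the paper.

```python
def cumulative_return(period_returns: list[float]) -> float:
    """Compound per-period returns: prod(1 + r_t) - 1."""
    growth = 1.0
    for r in period_returns:
        growth *= 1.0 + r
    return growth - 1.0


# Hypothetical per-period returns for a clean run and an attacked run.
baseline = [0.02, -0.01, 0.03]
attacked = [0.02, -0.04, 0.01]

# Difference between the two cumulative returns, expressed in percentage points.
gap_pp = (cumulative_return(baseline) - cumulative_return(attacked)) * 100
print(f"baseline: {cumulative_return(baseline):.2%}")
print(f"attacked: {cumulative_return(attacked):.2%}")
print(f"gap: {gap_pp:.2f} percentage points")
```

Reporting the gap in percentage points rather than as a relative percentage avoids ambiguity when the attacked return is close to zero or negative.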

The Next Evolution: Financial Language Models and the Pursuit of Accurate Sentiment

The increasing sophistication of automated trading systems (ATS) demands increasingly precise sentiment analysis, and recent advancements demonstrate that traditional models often fall short when interpreting the complex language of financial markets. Consequently, researchers are turning to financial LLMs: large language models specifically trained on massive datasets of financial reports, news articles, and analyst commentary. This focused training allows these models to move beyond simple keyword detection and grasp the contextual nuances inherent in financial language, such as subtle shifts in tone or the implications of specific industry jargon. The result is a substantial improvement in sentiment accuracy, enabling an ATS to gauge market reactions more effectively and potentially capitalize on emerging trends with greater reliability. By understanding the subtleties of financial discourse, these specialized models offer a powerful tool for refining investment strategies and bolstering portfolio performance.

Financial language possesses a unique complexity, riddled with industry-specific jargon, subtle contextual cues, and, frequently, intentionally misleading phrasing. Consequently, general-purpose sentiment analysis tools often struggle to accurately gauge the true emotional tone of financial texts, leaving automated trading systems vulnerable to manipulation. Specialized financial language models, however, are trained on vast datasets of financial reports, news articles, and analyst commentary, enabling them to decipher these nuances with far greater precision. This deeper understanding extends beyond simple keyword detection; these models can recognize sarcasm, assess the credibility of sources, and distinguish between genuine insights and deliberately deceptive statements. The result is a system less susceptible to “sentiment spoofing” – the practice of artificially inflating or deflating perceived market sentiment – and a more robust defense against malicious actors attempting to exploit automated trading strategies.

The implementation of financially-tuned language models directly translates to more dependable automated trading strategies. By accurately interpreting market sentiment, these systems are capable of executing trades with a higher degree of confidence, leading to demonstrably improved investment performance over time. Studies reveal that automated trading systems (ATS) leveraging this technology effectively counter manipulative attacks designed to disrupt performance; specifically, these systems mitigate an average 3.5% drop in cumulative returns typically observed when subjected to adversarial influence. This enhanced resilience not only safeguards capital but also positions the ATS to capitalize on genuine market movements, resulting in a consistently stronger overall financial outcome.

The study reveals a critical fragility within seemingly sophisticated systems. It demonstrates how easily quantifiable losses can arise from manipulations imperceptible to human oversight, highlighting a systemic weakness in relying solely on automated news sentiment analysis for algorithmic trading. This echoes Edsger W. Dijkstra’s sentiment: “Simplicity is prerequisite for reliability.” The research isn’t about adding layers of complexity to detect adversarial attacks, but rather about achieving a fundamental robustness where such attacks are inherently ineffective. A truly reliable system, one that requires no constant defense, is achieved through elegant, minimalist design: removing the potential for subtle manipulation at the source, rather than attempting to filter it afterward. The inherent vulnerability exposed is not a failure of the algorithm itself, but a symptom of unnecessary complexity.

Beyond the Headline

The demonstrated susceptibility of LLM-driven trading systems to manipulated headlines is not, at its core, a failure of language processing. It is a symptom of over-engineering. The systems attempt to understand news, when simple quantification of textual change – a measurement of disturbance, rather than meaning – would have flagged the adversarial signal. The pursuit of semantic nuance invited exploitation; a focus on the statistically anomalous would not have. The elegance lies not in mirroring human cognition, but in exceeding its limitations.

Future work must move beyond headline-specific attacks. The vulnerability is systemic, residing in the uncritical acceptance of external data streams. A robust defense will require a principle of minimal trust: treat all input as potentially adversarial, and quantify deviation from established baselines. The field should prioritize the development of system-level evaluation metrics that measure not predictive accuracy, but resistance to subtle, statistically improbable perturbations.
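The idea of measuring disturbance rather than meaning, and flagging deviation from a baseline, can be sketched with a crude statistic. In the Python example below, the `disturbance` score (share of non-ASCII characters), the threshold multiplier `k`, and the `floor` on the spread are all assumptions made for illustration, not a method proposed in the paper.

```python
import statistics


def disturbance(headline: str) -> float:
    """Crude disturbance score: share of characters outside the ASCII range."""
    if not headline:
        return 0.0
    return sum(1 for ch in headline if ord(ch) > 127) / len(headline)


def is_anomalous(headline: str, baseline: list[str], k: float = 3.0, floor: float = 0.01) -> bool:
    """Flag a headline whose score exceeds the baseline mean by k spreads.

    `floor` keeps the spread from collapsing to zero on an all-ASCII baseline.
    """
    scores = [disturbance(h) for h in baseline]
    mean = statistics.fmean(scores)
    spread = max(statistics.pstdev(scores), floor)
    return disturbance(headline) > mean + k * spread


# Hypothetical clean feed, including one legitimate non-ASCII character.
baseline = [
    "Acme beats forecasts",
    "Globex plunges on recall",
    "Caf\u00e9 chain Initech expands",
]
spoofed = "\u0410\u0440\u0440le surges"  # "Apple" with three Cyrillic look-alikes

print(is_anomalous(spoofed, baseline))
print(is_anomalous("Acme upgrades guidance", baseline))
```

A statistic this simple has obvious limits: a single substituted character in a long headline may fall below the threshold, and feeds that legitimately carry non-Latin text would need a per-source baseline.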

Ultimately, the problem is not merely technical. It is a reflection of a broader tendency to complicate solutions. The most effective defenses will likely be the simplest: a rigorous application of statistical control, coupled with a healthy skepticism toward the allure of artificial intelligence. The true measure of progress will not be in creating systems that think like humans, but in protecting against those who seek to exploit that imitation.

Original article: https://arxiv.org/pdf/2601.13082.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-22 00:48