Author: Denis Avetisyan

Researchers demonstrate that providing artificial intelligence with structural guidance dramatically improves its ability to automatically prove complex mathematical theorems, even with limited computing power.

Enforcing tactic skeletons at inference time enhances sample efficiency in neural theorem proving with large language models.

Despite recent advances in neural theorem proving driven by large language models and reinforcement learning, a surprising gap remains between model capability and practical performance. This work, ‘Structured Hints for Sample-Efficient Lean Theorem Proving’, investigates whether even highly-trained provers can benefit from simple structural guidance during inference. We demonstrate that enforcing a fixed schedule of tactic skeletons significantly improves proof success-achieving a 43% relative improvement on the miniF2F benchmark with the same computational budget-suggesting that structural priors remain underexploited. Does this indicate that scaling model size alone is insufficient, and that complementary approaches focusing on inference-time guidance are crucial for achieving robust and efficient automated reasoning?

Deconstructing Complexity: The Challenge of Automated Verification

The increasing complexity of modern systems – from software and hardware to biological and financial models – demands rigorous verification, a task where automated theorem proving offers a potentially transformative solution. However, despite decades of research, achieving robust and reliable automated verification remains a substantial challenge in artificial intelligence. The core difficulty lies in the sheer scale of the search space; proving even moderately complex theorems requires navigating a combinatorial explosion of possible inference steps. While humans intuitively prune irrelevant paths, current AI systems often struggle to do so efficiently, leading to impractically long computation times or failure to find a proof even when one exists. This limitation hinders the widespread adoption of automated theorem proving in critical applications where correctness and reliability are paramount, necessitating continued innovation in areas like heuristic search, proof strategy learning, and the development of more expressive and decidable logical frameworks.

The pursuit of automated theorem proving faces a fundamental hurdle: the rapid increase in computational complexity as problem size grows. Each step in a logical proof branches into multiple possibilities, leading to a “combinatorial explosion” where the number of potential proof paths quickly becomes unmanageable for even powerful computers. Consequently, traditional methods often require substantial human intervention – experts must guide the system, pruning unproductive branches and suggesting promising avenues of exploration. This reliance on human insight limits the scalability and true autonomy of these systems, hindering their ability to verify increasingly complex software, hardware, and mathematical conjectures without significant, ongoing assistance. The challenge lies not simply in finding a proof, but in navigating a vast and ever-expanding search space to efficiently locate one, or conclusively determine its absence.

Bridging Logic and Learning: Neural Theorem Proving

Neural Theorem Proving (NTP) integrates the capabilities of Large Language Models (LLMs) with the rigor of formal proof assistants, such as Lean, to facilitate automated theorem proving. Traditional automated provers often struggle with complex problems, while LLMs, despite their reasoning abilities, lack the formal verification necessary for mathematical certainty. NTP bridges this gap by leveraging LLMs to suggest proof steps within a formal system like Lean, which then verifies the logical validity of those steps. This approach allows the LLM to explore a vast search space of potential proofs, guided by the constraints of the formal system and benefiting from existing, formally verified mathematical knowledge bases like Mathlib. The combination aims to automate aspects of proof discovery, potentially assisting mathematicians in tackling challenging problems and verifying complex mathematical arguments.

The Structured Query is a critical input for Large Language Model-assisted proof generation, consisting of two primary elements: a formal theorem statement expressed in a logic suitable for the proof assistant, and a ‘Tactic Skeleton’. This skeleton provides a partial proof script, outlining initial tactics and strategies to guide the model’s search for a complete proof. By predefining a sequence of tactics, the Structured Query constrains the model’s exploration, improving efficiency and focusing its reasoning on relevant proof steps. The theorem statement defines the goal, while the tactic skeleton serves as a starting point, reducing the search space and increasing the likelihood of generating a valid and complete proof within the formal system.

The Generation Process within Neural Theorem Proving functions by iteratively completing a proof outline, or ‘Tactic Skeleton’, within the Lean interactive theorem prover. This involves the Large Language Model predicting subsequent tactic applications based on the current proof state, the theorem statement, and the existing skeleton. Crucially, the process leverages the extensive Mathlib library, a formally verified repository of mathematical definitions and theorems, to access pre-proven results and accelerate proof construction. The model can query Mathlib for relevant lemmas and definitions, incorporating them into the proof as needed, and benefiting from its rigorous verification standards to ensure the overall proof’s validity. The completed proof is then checked by Lean’s kernel for logical correctness.

Resourceful Reasoning: Optimizing Proof Search

The computational limitations inherent in automated theorem proving, referred to as a low-inference budget, directly constrain the scope of the search space explored by a model. This restriction stems from the finite nature of processing power and memory available during proof construction. Each inference step – applying a logical rule to derive a new statement – consumes computational resources. A limited budget necessitates strategic exploration; exhaustive search is impractical. Consequently, the model must prioritize promising proof paths and prune less likely avenues to remain within resource constraints, potentially sacrificing completeness for efficiency. The severity of this limitation is directly proportional to the complexity of the theorem and the expressiveness of the underlying logical system.

The Intermediate Representation (IR) functions as a structured, formalized representation of the theorem proving problem, providing explicit guidance to the generation process. By defining a constrained search space based on logical forms and dependencies, the IR reduces the computational burden of exploring irrelevant or invalid proof steps. This structural guidance allows the model to focus its inference budget on promising paths, effectively streamlining the generation process and improving the efficiency of proof search. The IR facilitates decomposition of the proof task into smaller, manageable sub-problems, each representing a specific logical inference or transformation.

A fixed prompt schedule is utilized during proof search to systematically explore varied proof strategies within computational constraints. This involves employing a pre-defined sequence of prompts, each representing a ‘tactic skeleton’ – a partially instantiated proof step. By iteratively applying these diverse prompts, the system investigates multiple potential proof paths without requiring extensive free-form generation at each step. The fixed schedule ensures a breadth-first exploration of the proof space, allowing the system to discover solutions that might be missed by a more narrowly focused, or randomly initialized, search. The prompts are designed to guide the model toward valid logical inferences, while the schedule dictates the order in which these inferences are attempted.

Measuring Progress: Benchmarking and Failure Analysis

The field of automated theorem proving benefits greatly from robust evaluation metrics, and the ‘miniF2F Benchmark’ addresses this need by offering a consistently defined, standardized environment for assessing the capabilities of neural provers. This benchmark suite comprises a collection of formal theorems, allowing researchers to objectively compare different approaches to automated reasoning without the confounding variables of varying problem sets or evaluation criteria. By providing a common ground for experimentation, the miniF2F benchmark facilitates reproducible research and accelerates progress in the development of more powerful and reliable neural theorem provers, ultimately driving advancements in areas such as formal verification and artificial intelligence.

Evaluations conducted using the miniF2F benchmark reveal a significant advancement in automated theorem proving capabilities. The developed approach successfully proved 21.72% of the benchmark’s theorems, marking a substantial 43% relative improvement over the established baseline of 15.16%. This enhanced performance demonstrates a notable leap in the system’s ability to navigate complex logical challenges and arrive at valid proofs, suggesting a more robust and efficient proving methodology. The benchmark provides a standardized platform for comparing different provers, and this result highlights the effectiveness of the techniques employed in this study for tackling formal verification problems.

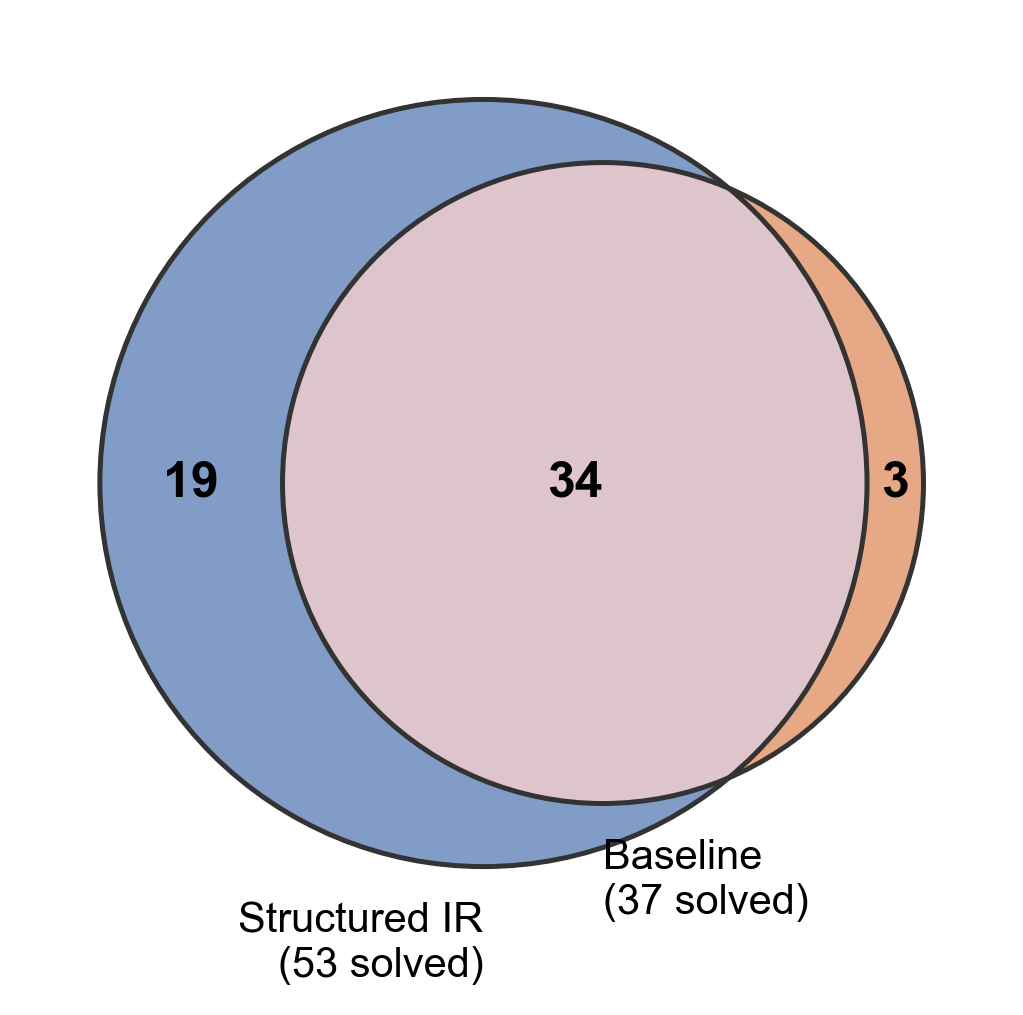

The implementation of a structured intermediate representation (IR) demonstrably expanded the capacity of the neural prover to successfully verify theorems; it achieved solutions for 19 unique theorems where the baseline system only managed 3. This significant increase highlights the effectiveness of the structured IR in enabling more complex logical reasoning. By organizing and presenting the problem in a more accessible format, the system was able to navigate the proof search space more efficiently, ultimately leading to a substantially higher rate of successful theorem verification and illustrating a key advancement in automated theorem proving capabilities.

Rigorous statistical analysis, employing a McNemar test, substantiated the observed gains in theorem proving performance. The resulting p-value of less than 0.001, obtained from a two-tailed test, provides strong evidence against the null hypothesis – that any improvement stemmed from random chance. This low p-value establishes a high degree of confidence in the reliability of the approach, indicating that the observed 43% relative improvement in pass rate on the miniF2F benchmark is statistically significant and not merely a product of variability. The test confirms that the structured intermediate representation demonstrably enhances the neural prover’s ability to solve theorems, offering a robust and dependable advancement in automated formal verification.

Beyond Verification: Towards Neuro-Symbolic Intelligence

A significant advancement lies in the burgeoning field of Neuro-Symbolic Reasoning, an approach that seeks to bridge the gap between the strengths of neural networks and formal verification techniques. Traditionally, neural networks excel at pattern recognition and flexible learning from data, but often lack the ability to provide guarantees about the correctness of their outputs. Conversely, formal verification offers rigorous proof of correctness, but struggles with the complexities and ambiguities of real-world data. This work addresses this limitation by integrating these two paradigms, allowing AI systems to not only learn solutions but also verify them with mathematical precision. By combining the adaptability of neural networks with the reliability of formal methods, researchers aim to create AI that is both intelligent and trustworthy, capable of solving complex problems while providing demonstrable assurance of their solutions’ validity – a crucial step towards deploying AI in critical applications.

Continued advancements in neuro-symbolic reasoning hinge on refining the computational methods used to navigate the vast landscape of mathematical possibilities. Current research prioritizes the development of more efficient search algorithms, aiming to drastically reduce the time and resources required to identify valid proofs or solutions. This involves exploring novel techniques in areas like heuristic search and constraint satisfaction. Simultaneously, efforts are underway to expand the scope of accessible mathematical knowledge by creating larger and more comprehensive mathematical libraries. These libraries will serve as foundational resources, enabling AI systems to draw upon a broader range of established theorems, definitions, and problem-solving strategies – ultimately boosting both the speed and reliability of automated reasoning processes. \forall x \in \mathbb{R}, x^2 \geq 0

A central ambition driving current artificial intelligence research is the development of systems capable of not simply finding solutions, but of demonstrably proving their correctness. This pursuit of verifiable AI transcends traditional problem-solving; it aims to instill a level of trustworthiness currently absent in many neural network-based approaches. Such systems would leverage formal methods-mathematical proofs and logical reasoning-to guarantee the reliability of their outputs, a crucial step for deployment in high-stakes domains like autonomous vehicles, medical diagnosis, and financial modeling. The ability to rigorously verify solutions addresses concerns about the ‘black box’ nature of deep learning, offering transparency and accountability, and ultimately fostering greater confidence in AI’s decision-making processes. This isn’t merely about achieving accuracy; it’s about building AI that can explain why its answers are correct, ensuring both performance and peace of mind.

The research presented dismantles a prevailing assumption: that sheer computational scale is the primary driver of progress in neural theorem proving. It posits a challenge to this notion by introducing structural priors – tactic skeletons – and demonstrating a significant performance boost even with limited resources. This echoes Bertrand Russell’s sentiment: “The difficulty lies not so much in developing new ideas as in escaping from old ones.” The paper doesn’t simply build a larger model; it fundamentally re-evaluates how a neural theorem prover should approach problem-solving, escaping the reliance on brute force and instead prioritizing informed, structured exploration of the proof space. This deliberate constraint, this ‘breaking’ of the scale-equals-success rule, yields surprisingly robust results, proving that intelligent guidance is often more valuable than raw computational power.

What’s Next?

The insistence on scale as the primary driver of progress in neural theorem proving feels increasingly… convenient. This work suggests a different sort of leverage-not simply more model, but a smarter constraint of the search space. Imposing structure, these ‘tactic skeletons’, isn’t about hand-holding the system to a solution; it’s about acknowledging that even intelligence benefits from a well-defined disassembly. The question now isn’t whether large language models can prove theorems, but what fundamental assumptions about problem-solving are baked into their architecture – and how brutally those assumptions are exposed when faced with formal rigor.

A predictable limitation remains: these skeletons are, themselves, hand-crafted. The real challenge isn’t achieving a marginal gain with a pre-defined structure, but automating the discovery of useful priors. Could a system learn not just to apply tactics, but to invent them? That requires a shift from treating theorem proving as a pattern completion exercise to a genuine exploration of logical space-a space where, one suspects, current models are still largely blind.

Furthermore, the constraints imposed here-computational limits-aren’t artificial. They reflect the practical realities of formal verification. Scaling to ever-larger models is a technological imperative, yes, but it sidesteps the more interesting question: can a genuinely efficient theorem prover emerge, one that prioritizes elegance and insight over brute-force computation? Perhaps the click of truth isn’t a sound of scale, but of reduction.

Original article: https://arxiv.org/pdf/2601.16172.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- 2025 Crypto Wallets: Secure, Smart, and Surprisingly Simple!

- Gold Rate Forecast

- Here Are the Best TV Shows to Stream this Weekend on Paramount+, Including ‘48 Hours’

- Where to Change Hair Color in Where Winds Meet

- 20 Films Where the Opening Credits Play Over a Single Continuous Shot

- Top gainers and losers

- 50 Serial Killer Movies That Will Keep You Up All Night

- Top 15 Celebrities in Music Videos

- Top 15 Movie Cougars

- Top 20 Extremely Short Anime Series

2026-01-25 12:57