Author: Denis Avetisyan

Researchers are exploring how subtly crafted image patches, generated by advanced AI models, can bypass facial recognition systems, and the techniques to detect these deceptive alterations.

This review examines the creation of adversarial patches using diffusion models and investigates forensic methods, including perceptual hashing, for identifying manipulated facial images used in identity verification.

Despite advancements in facial recognition, systems remain vulnerable to subtle, deliberately crafted manipulations. This is explored in ‘Diffusion-Driven Deceptive Patches: Adversarial Manipulation and Forensic Detection in Facial Identity Verification’, which details a novel pipeline for generating and detecting adversarial patches designed to evade biometric systems. The research demonstrates that diffusion models can create imperceptible patches capable of compromising facial identity and expression recognition, while perceptual hashing and segmentation techniques offer robust detection capabilities. Can these forensic methods keep pace with increasingly sophisticated adversarial attacks on biometric security systems?

Whispers of Vulnerability: The Fragility of Facial Recognition

The proliferation of facial recognition technology in everyday life, from unlocking smartphones to enhancing security systems, belies a fundamental fragility. These systems, powered by complex algorithms, are surprisingly vulnerable to what are known as adversarial attacks. These attacks don’t rely on sophisticated hacking or brute force; instead, they involve making deliberately subtle alterations to an image – changes often imperceptible to the human eye. A carefully crafted pattern added to a face, for instance, can completely mislead the algorithm, causing it to misidentify an individual or even register a non-person as a valid face. This susceptibility raises significant concerns about the reliability of facial recognition in critical applications, highlighting a need for more robust and resilient systems capable of withstanding these intentionally crafted distortions.

Facial recognition systems, powered by deep learning algorithms, aren’t infallible; they possess inherent vulnerabilities that malicious actors can exploit. These attacks don’t require sophisticated technology or physical access, instead relying on carefully designed, often imperceptible, alterations to an image – what are known as adversarial perturbations. The consequence is that a system confidently misidentifies individuals, potentially unlocking secure access, enabling fraudulent activity, or eroding personal privacy. Because these algorithms learn from data, they can be ‘tricked’ by inputs that differ only slightly from those used during training, highlighting a fundamental weakness in relying solely on pattern recognition. The implications extend to various security applications, from border control and law enforcement to personal device authentication, demonstrating the urgent need for robust defenses against these subtle yet potent threats.

Despite ongoing research into countermeasures, current defenses against adversarial attacks on facial recognition systems demonstrate limited robustness. Many proposed solutions, while effective against specific, narrowly defined perturbations, fail to generalize when confronted with variations in attack strategies, lighting conditions, or image quality encountered in real-world scenarios. This lack of transferability stems from an over-reliance on training data that doesn’t adequately represent the full spectrum of possible attacks or environmental factors. Consequently, a system successfully defended against one type of adversarial noise may be easily bypassed by a slightly modified attack, or even by naturally occurring distortions like shadows or pose variations, highlighting a critical vulnerability in the widespread deployment of these technologies and necessitating the development of more adaptable and comprehensive security measures.

The Art of Deception: Crafting Adversarial Illusions

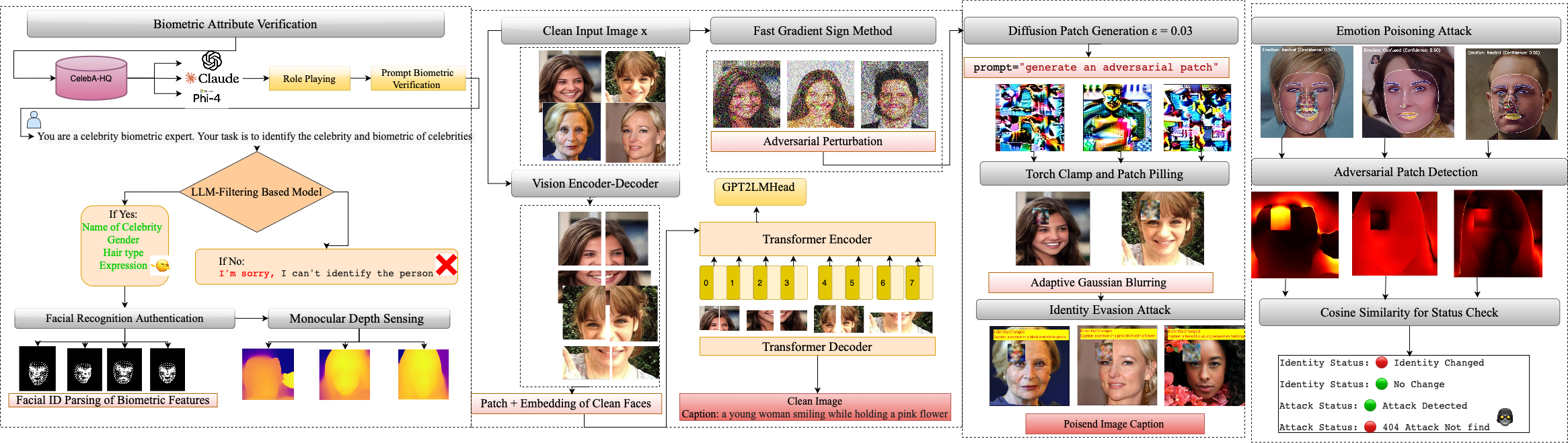

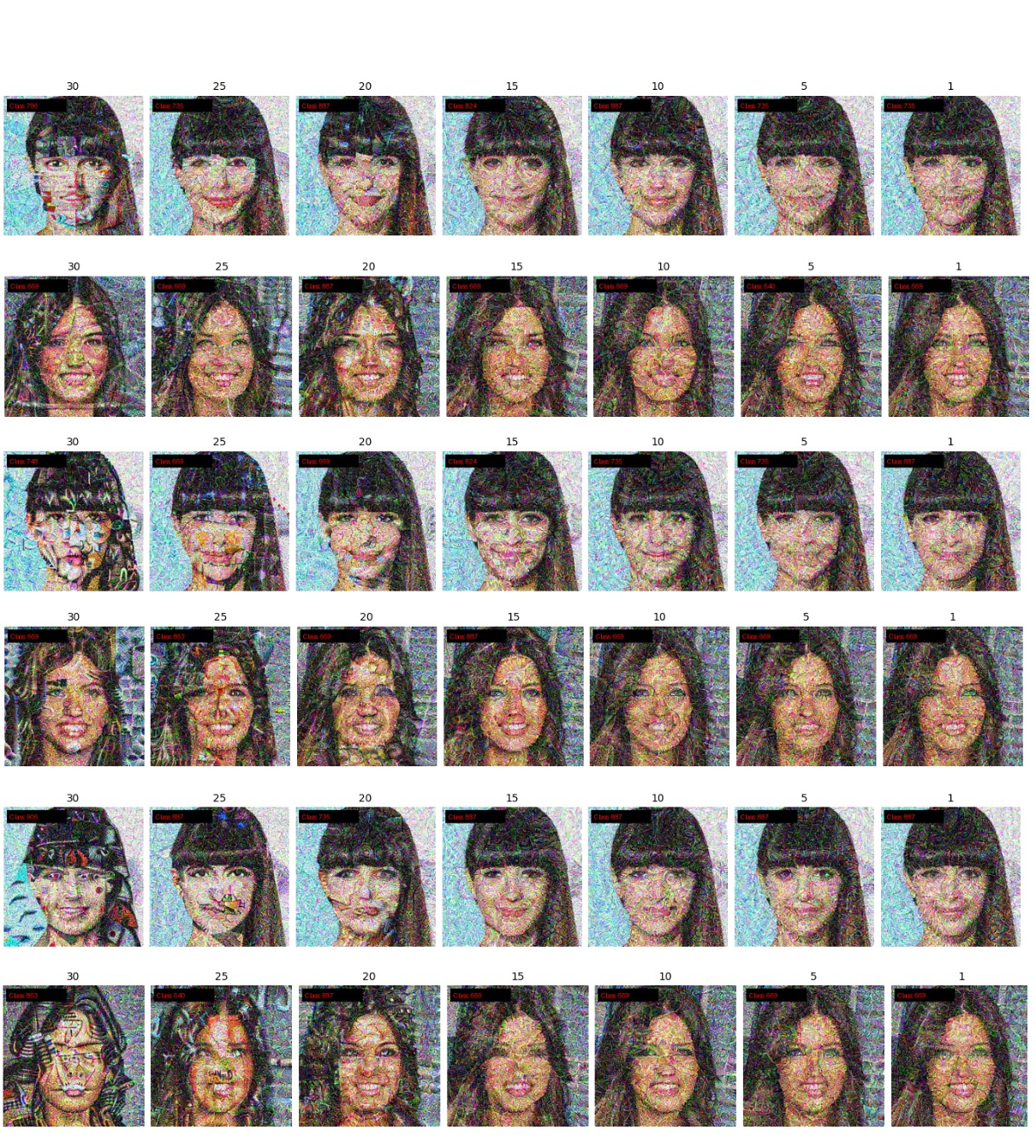

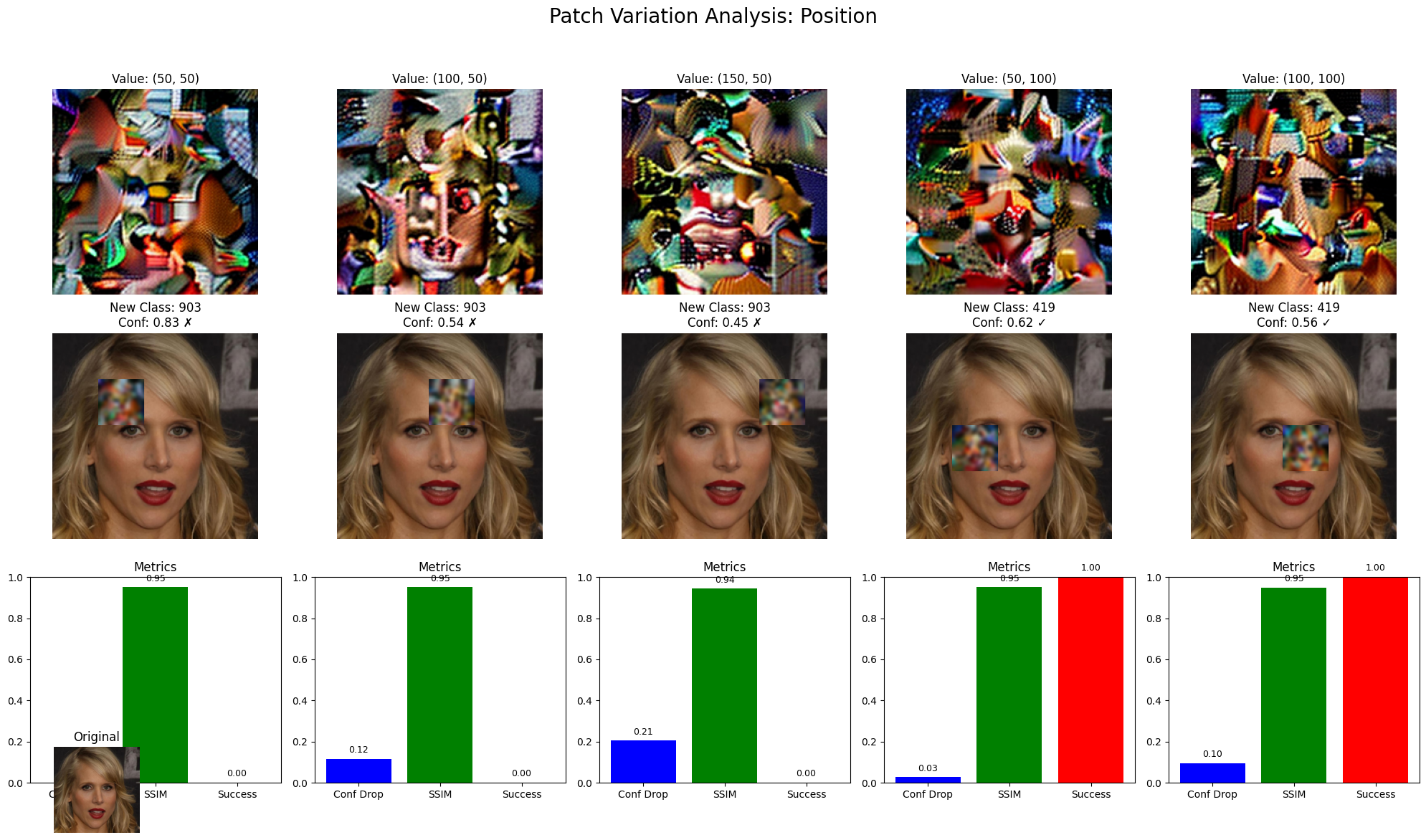

Adversarial patch generation centers on the creation of subtle, spatially localized alterations to input images – typically in the form of stickers or patterns – that are specifically engineered to cause a machine learning model to misclassify the image. These modifications, though often imperceptible or minimally noticeable to human observers, exploit vulnerabilities in the model’s learned feature space. The patches are not random noise; instead, they represent carefully crafted perturbations optimized, through algorithms like gradient ascent, to maximize the probability of a desired incorrect prediction. The effectiveness of these patches relies on the model’s sensitivity to specific input features and its inability to generalize beyond the training data distribution, allowing the patch to effectively ‘fool’ the system.
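The gradient-ascent idea described above can be sketched on a toy model. The snippet below is a minimal illustration, not the paper's pipeline: it assumes a hypothetical linear-softmax classifier with weight matrix `W`, pixel values in [0, 1], and a boolean `mask` marking where the patch may be placed. The function names are illustrative.

```python
import numpy as np

def softmax(z):
    z = z - z.max()              # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def target_prob(W, image, target):
    """Probability the toy linear-softmax model assigns to `target`."""
    return softmax(W @ image.ravel())[target]

def optimize_patch(W, image, mask, target, steps=300, lr=0.05):
    """Gradient ascent on log p(target) that only changes pixels where mask is True."""
    x = image.astype(float).copy()
    for _ in range(steps):
        p = softmax(W @ x.ravel())
        # Gradient of log p[target] w.r.t. the input for a linear-softmax model.
        grad = (W[target] - p @ W).reshape(x.shape)
        # Update only the patch region and keep pixels in the valid range.
        x = np.where(mask, np.clip(x + lr * grad, 0.0, 1.0), x)
    return x
```

Restricting the update to the masked region is what makes this a patch rather than a whole-image perturbation. A real attack would replace the linear model with a face recognition network and obtain the gradient by automatic differentiation.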

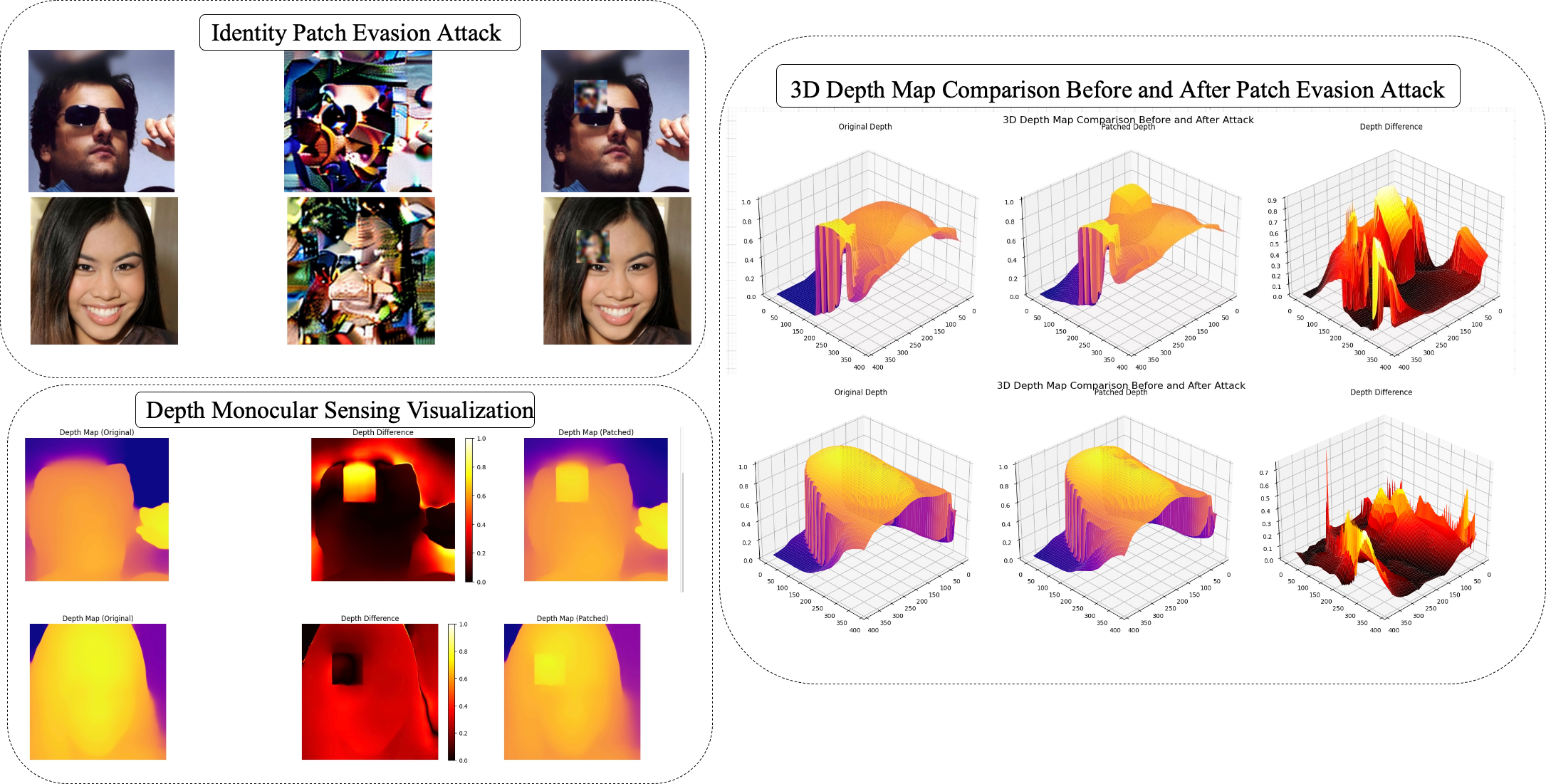

Diffusion models generate adversarial patches through an iterative refinement process. Beginning with random noise, the model progressively denoises the image under the guidance of a loss function that rewards patch perturbations leading to the target misclassification. This loss steers each refinement step, optimizing the patch to maximize the probability of a specific, incorrect prediction by the target neural network. The process involves repeatedly applying a forward diffusion process (adding noise) and a reverse diffusion process (removing noise based on learned parameters) until a visually coherent and highly effective adversarial patch is generated. This contrasts with purely gradient-based methods, which can be sensitive to initial conditions and often produce high-frequency, unrealistic patches.

Adversarial patches are not limited to digital manipulation; they can be printed and physically applied to surfaces, including clothing or signs, and can continue to fool the target model in real-world conditions. This physical realizability presents a substantial security risk for facial recognition systems deployed in public spaces, as the patches require no specialized equipment to deploy and can remain effective under varying lighting and viewing angles. The durability of modern printing techniques and materials further exacerbates the threat, allowing for persistent and widespread disruption of these systems. Furthermore, the patches do not need to cover a face completely to be effective; even partial occlusion can be sufficient to induce misclassification.

Unmasking the Ghost: Detecting Hidden Perturbations

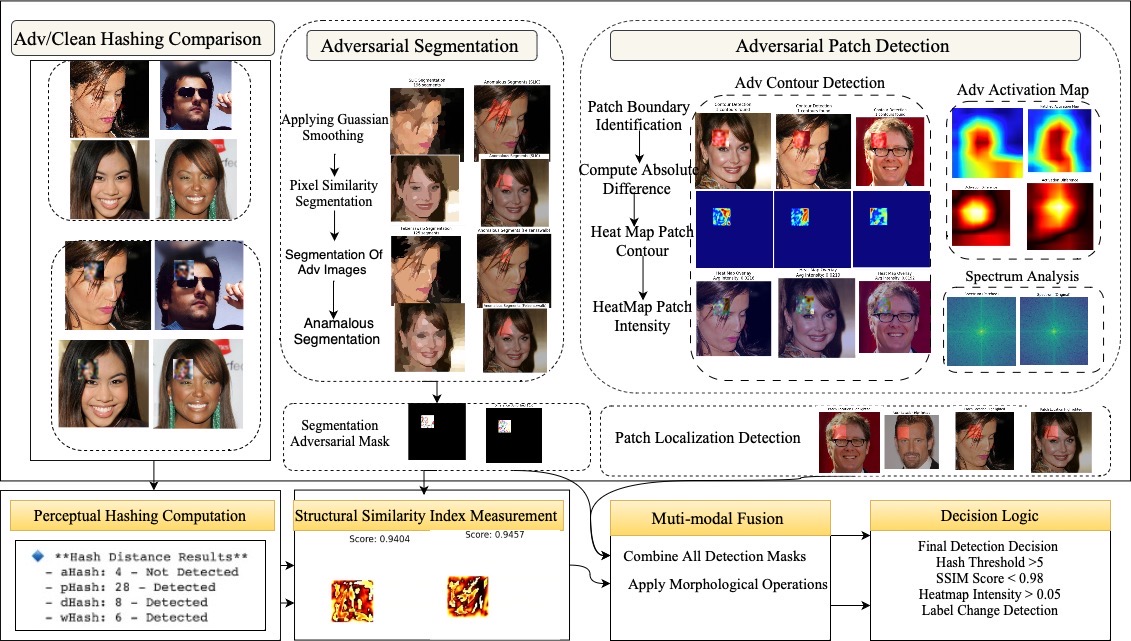

Analyzing images in the frequency domain via Fast Fourier Transform (FFT) provides a method for detecting adversarial perturbations by revealing high-frequency components often introduced during attacks. Adversarial attacks frequently manifest as subtle, imperceptible changes to pixel values; however, these alterations can translate into noticeable patterns when the image is transformed into the frequency domain. Specifically, FFT decomposes an image into its constituent frequencies, allowing for the identification of unusual or artificial high-frequency noise that deviates from the natural frequency distribution of legitimate images. This technique is effective because adversarial perturbations, designed to mislead neural networks, often rely on precisely crafted high-frequency components to exploit vulnerabilities without causing noticeable visual distortions to human observers.
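One simple way to turn this idea into a number is to measure how much of an image's spectral energy lies above some radial frequency. The sketch below assumes a 2-D grayscale array and a hypothetical `cutoff` of 0.25 cycles per pixel; both the function name and the cutoff are illustrative choices, not values taken from the paper.

```python
import numpy as np

def high_freq_energy_ratio(gray, cutoff=0.25):
    """Fraction of spectral energy beyond `cutoff` cycles/pixel (Nyquist is 0.5 per axis)."""
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    power = np.abs(spectrum) ** 2
    h, w = gray.shape
    # Frequency coordinates matching the shifted spectrum layout.
    fy = np.fft.fftshift(np.fft.fftfreq(h))[:, None]
    fx = np.fft.fftshift(np.fft.fftfreq(w))[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2)
    return power[radius > cutoff].sum() / power.sum()
```

In practice the ratio for a suspect image would be compared against the range observed on known-clean images, since natural photographs also contain some high-frequency detail.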

Perceptual hashing (pHash) functions as a fingerprinting method by generating a hash value representing the essential characteristics of an image. These algorithms are designed to be robust to minor alterations, yet sensitive to significant changes, making them effective at detecting adversarial patches. The process typically involves reducing image dimensionality, applying a Discrete Cosine Transform (DCT), and generating a hash based on the resulting frequency components. Adversarial patches, even if visually imperceptible, introduce statistical anomalies in the frequency domain that result in a demonstrably different hash value compared to the original, unperturbed image. This allows for the identification of subtle modifications that might evade standard image comparison techniques.
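The three steps just listed (downsample, DCT, threshold) can be sketched in a few lines. This is a simplified variant written for illustration, assuming a grayscale array whose height and width are multiples of `size`; it is not the implementation used in the paper or in any particular hashing library.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis as an (n, n) matrix."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0] /= np.sqrt(2.0)
    return m

def phash(gray, size=32, keep=8):
    """Boolean hash of keep*keep bits from the low-frequency DCT block."""
    h, w = gray.shape
    # Block-average downsample to size x size (assumes h, w are multiples of size).
    small = gray.reshape(size, h // size, size, w // size).mean(axis=(1, 3))
    d = dct_matrix(size)
    coeffs = d @ small @ d.T                 # 2-D DCT
    low = coeffs[:keep, :keep].ravel()       # keep the lowest frequencies
    median = np.median(low[1:])              # exclude the DC term from the median
    return low > median

def hamming(a, b):
    """Number of differing bits between two hashes."""
    return int(np.count_nonzero(a != b))
```

Two images are then compared by the Hamming distance between their hashes; a distance above a chosen threshold flags the pair as different. The threshold is application-specific and would need to be tuned on real data.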

The MiDaS model, a depth estimation network, is used to identify geometric inconsistencies introduced by adversarial manipulations. Adversarial attacks often distort the apparent 3D structure of a scene, creating anomalies that show up in the estimated depth map. The framework uses the Structural Similarity Index Measure (SSIM) to compare the depth maps generated from the original and the potentially perturbed image. A low SSIM score between the two maps indicates the presence of an adversarial perturbation; the reported detection rate during testing was 100%. This SSIM-based analysis provides a quantifiable metric for identifying subtle geometric distortions imperceptible to the human eye.
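The comparison step can be illustrated without running a depth network. The sketch below assumes two depth maps are already available as arrays normalized to [0, 1] (MiDaS inference is omitted), uses a single-window SSIM rather than the usual sliding-window form, and applies a hypothetical threshold of 0.9. None of these choices come from the paper.

```python
import numpy as np

def global_ssim(a, b, data_range=1.0):
    """Single-window SSIM computed over the whole arrays."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return num / den

def flag_depth_mismatch(depth_ref, depth_test, threshold=0.9):
    """True when the two depth maps are less similar than `threshold`."""
    return bool(global_ssim(depth_ref, depth_test) < threshold)
```

A production detector would more likely use a windowed SSIM from an image-processing library and calibrate the threshold on clean image pairs.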

Forging Resilience: The Foundation of Robust Systems

The efficacy of contemporary facial recognition systems is fundamentally linked to the datasets used during their development. Models consistently demonstrate improved performance when trained on extensive and varied collections of facial images, such as the VGGFace2 and CelebA datasets. These large-scale resources provide the necessary breadth of examples, encompassing diverse ethnicities, ages, poses, and lighting conditions, to enable models to generalize effectively to unseen faces. Insufficient data, or a lack of representation across key demographic groups, can lead to significant biases and reduced accuracy, particularly when encountering individuals outside the dominant groups present in the training set. Therefore, curating high-quality, diverse datasets is not merely a technical detail, but a critical step towards building fair and reliable facial recognition technologies.

Facial recognition technologies, including prominent models like FaceNet, ArcFace, and InceptionResnetV1, demonstrate significantly improved performance when trained on extensive datasets such as VGGFace2 and CelebA. These large-scale collections, which exhibit substantial variations in pose, illumination, expression, and demographic characteristics, provide the necessary breadth of data for these models to learn robust and generalizable features. The sheer volume of examples allows the algorithms to better discern subtle facial cues, reduce bias, and achieve higher accuracy in identifying individuals across diverse conditions. Consequently, the availability of such datasets has become a cornerstone of modern facial recognition research and development, enabling increasingly reliable and sophisticated systems.

The development of robust facial recognition systems benefits significantly from the integration of perceptual metrics during both the creation of adversarial attacks and the subsequent detection of those attacks. Metrics such as Learned Perceptual Image Patch Similarity (LPIPS) and Structural Similarity Index Measure (SSIM) move beyond pixel-wise comparisons to assess image quality as perceived by the human visual system, offering a more nuanced evaluation of model performance. Recent testing demonstrated this impact; a model initially achieved a Clean Accuracy of 0.9231, but when subjected to carefully crafted adversarial perturbations, its accuracy plummeted to 0.4069. This substantial decrease underscores the vulnerability of even highly accurate models to subtle, intentionally designed inputs and highlights the necessity of incorporating perceptual metrics to improve generalization and build truly resilient systems.
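To put the two accuracy figures above in perspective, the drop can be expressed in absolute and relative terms. The helper below is purely illustrative arithmetic on the numbers quoted in the text.

```python
def accuracy_drop(clean, adversarial):
    """Return (absolute drop, drop relative to clean accuracy)."""
    absolute = clean - adversarial
    relative = absolute / clean
    return absolute, relative

absolute, relative = accuracy_drop(0.9231, 0.4069)
print(f"absolute drop: {absolute:.4f}, relative drop: {relative:.1%}")
```

The absolute drop is about 0.516, meaning the attacked model loses roughly 56% of its clean accuracy.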

The pursuit of deceptive patches, as detailed in the study, isn’t merely about breaking facial recognition; it’s about revealing the inherent fragility woven into the very fabric of perception. The system believes what it sees, even if that vision is a carefully constructed illusion. As Andrew Ng once observed, “AI is seductive. It promises so much, but it delivers so little.” This sentiment resonates deeply with the findings; diffusion models, while capable of crafting disturbingly realistic manipulations, expose the limitations of current verification systems. Each successful adversarial patch isn’t a failure of the algorithm, but a glimpse into the chaos the model is attempting, and ultimately failing, to tame. The truth hides within these imperfections, mocking the aggregates.

What Shadows Will Fall?

The pursuit of adversarial patches, particularly those sculpted by diffusion models, reveals less about breaking facial recognition and more about the inherent fragility of observation. These aren’t failures of the algorithms, but echoes of the noise that always defines reality. The current work offers detection methods, yet each refinement of forensic technique invites a more subtle, more persuasive deception. It is a dance – a statistical negotiation – where the line between truth and artifice blurs with each iteration.

The true challenge lies not in building defenses against known manipulations, but in anticipating those yet to be conceived. Diffusion models, while potent tools for attack, are merely the first wave. Future research must venture beyond pixel-level perturbations, exploring semantic distortions – alterations that exploit the system’s understanding of a face, rather than its raw data. Perhaps the most fruitful direction lies in embracing uncertainty, in building systems that acknowledge their own fallibility and quantify the confidence in each recognition.

Noise is, after all, just truth without confidence. And in the realm of perception, confidence is often mistaken for certainty. The coming years will likely witness a shift from adversarial attacks to adversarial camouflage – manipulations so seamless they don’t trigger alarms, but subtly redirect the gaze. The goal won’t be to fool the system, but to gently persuade it.

Original article: https://arxiv.org/pdf/2601.09806.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-18 14:13