Author: Denis Avetisyan

To build truly resilient cybersecurity, researchers are advocating for the development of AI agents capable of launching controlled attacks to identify and address vulnerabilities.

Developing offensive AI capabilities within controlled environments is crucial for bolstering defenses against increasingly sophisticated, automated cyberattacks.

Conventional cybersecurity strategies rely on the high cost and expertise required for tailored attacks, a tacit assumption increasingly challenged by the rise of automated, AI-driven exploitation. The position paper ‘To Defend Against Cyber Attacks, We Must Teach AI Agents to Hack’ argues that the inevitable proliferation of agentic offensive capabilities necessitates a fundamental shift towards proactively developing and mastering these same techniques within controlled environments. Its authors contend that effective defense against adaptive adversaries demands building offensive security intelligence, not simply attempting to constrain AI agents through reactive safeguards. Can we responsibly harness the power of AI to both attack and defend, ultimately securing cyberspace before malicious actors exploit it?

The Inevitable Tide: Adapting to a Polymorphic Threat Landscape

Conventional cybersecurity strategies, historically dependent on the skill of human analysts and responses triggered after an intrusion is detected, are increasingly challenged by the sheer volume and velocity of modern cyberattacks. This reactive approach struggles to address threats that evolve faster than security teams can analyze and patch vulnerabilities. The current landscape features sophisticated adversaries employing automation and polymorphic malware, effectively overwhelming defenses built around manual investigation and signature-based detection. As attack surfaces expand with the proliferation of connected devices and cloud services, the reliance on human-driven security becomes a critical bottleneck, creating a widening gap between emerging threats and effective protection. Consequently, a fundamental shift towards proactive, automated, and predictive security measures is essential to mitigate risks in this rapidly evolving digital environment.

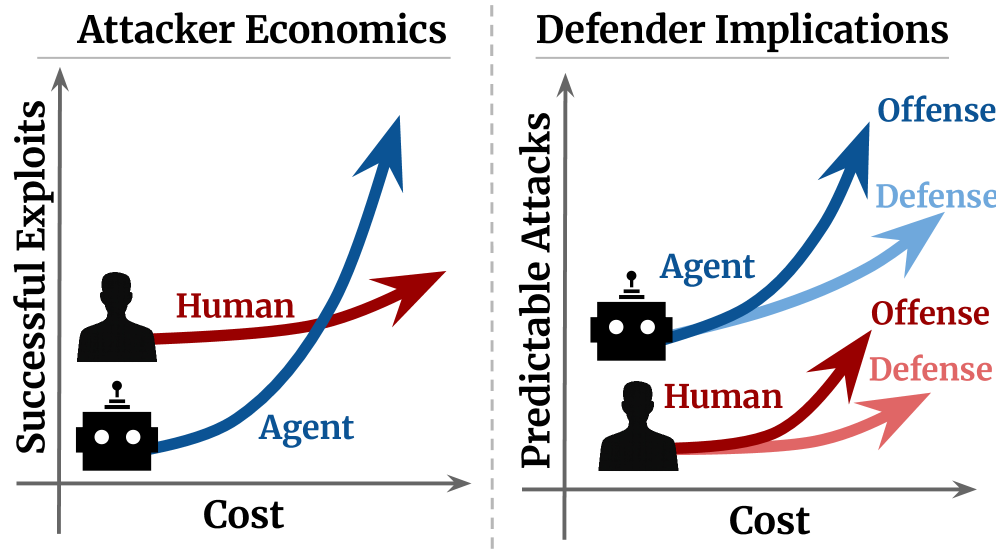

Cyberattacks are increasingly driven by a simple economic principle: maximizing return on investment with minimal risk. Historically, successful exploits required significant skill and resources, limiting the volume of attacks. However, the emergence of readily available artificial intelligence tools is dramatically altering this landscape by lowering the barriers to entry for malicious actors. AI agents can automate vulnerability scanning, exploit development, and even social engineering, substantially reducing both the cost and the expertise needed to launch effective cyber campaigns. This shift isn’t merely about increasing the quantity of attacks; it fundamentally changes the economic calculus of cybercrime, incentivizing a surge in high-volume, low-cost exploits that overwhelm existing defenses and necessitate a proactive, rather than reactive, security posture.

The cybersecurity landscape is undergoing a rapid transformation with the advent of artificial intelligence agents, tools now wielded by both malicious actors and those seeking to defend against them. These agents represent a fundamental shift, moving beyond traditional, human-driven security measures. However, current evaluations, as demonstrated by benchmarks like Cybench, CVE-Bench, and BountyBench, reveal substantial performance discrepancies between state-of-the-art agents. This variance underscores a critical need for continued research and development – not simply in creating more powerful AI, but in refining its application to both offensive and defensive cybersecurity strategies. The economic incentives driving cybercrime, coupled with increasingly accessible AI tools, suggest that progress in this area is no longer merely beneficial, but essential for maintaining digital security.

Proactive Resilience: Orchestrating Offensive Security Intelligence

Offensive security intelligence leverages artificial intelligence agents to proactively identify and remediate vulnerabilities within an organization’s infrastructure. This approach moves beyond traditional reactive security measures by simulating real-world attack scenarios. AI agents automate the process of vulnerability discovery, exploit development, and penetration testing, allowing security teams to assess their defenses under controlled conditions. By anticipating potential attack vectors and weaknesses, organizations can prioritize remediation efforts, strengthen their security posture, and reduce the risk of successful exploitation by malicious actors before an actual incident occurs. This proactive stance is increasingly vital due to the expanding attack surface and the growing sophistication of cyber threats.

Cyber ranges function as isolated, configurable networks designed to replicate production environments, enabling organizations to conduct realistic attack simulations and rigorously test defensive capabilities without impacting live systems. These environments allow security teams to validate security tools, assess incident response plans, and train personnel in a safe and controlled manner. Configurations can be dynamically adjusted to mimic various network architectures, operating systems, and application stacks, providing a versatile platform for evaluating security posture against a wide range of threat vectors. Data collected within the cyber range, including attack vectors, system behaviors, and tool effectiveness, provides actionable intelligence for improving overall security resilience.

The automation of vulnerability discovery and exploit development via AI agents represents a significant advancement in security assessment capabilities. Traditional methods are often constrained by manual effort and limited scope; AI agents can rapidly scan systems, identify weaknesses, and even generate functional exploits at a scale previously unattainable. This increased speed and scale are crucial because the same AI technologies are concurrently lowering the cost of offensive operations, potentially increasing both the frequency and sophistication of attacks. Consequently, organizations require equally efficient and automated defensive strategies, leveraging AI to proactively identify and remediate vulnerabilities before they can be exploited by malicious actors utilizing similar AI-driven techniques.

Beyond Static Defenses: The Rise of Agentic, System-Level Security

Agentic defensive security utilizes artificial intelligence agents to augment and improve upon conventional vulnerability mitigation strategies. Unlike static, rule-based systems, these agents continuously monitor network activity and system logs, identifying anomalous behavior and potential threats in real-time. This adaptive capability allows for dynamic adjustments to security policies and automated responses to evolving threat landscapes, including zero-day exploits and polymorphic malware. The agents function by learning from data and iteratively refining their detection and response mechanisms, providing a proactive defense that extends beyond signature-based detection to encompass behavioral analysis and predictive threat modeling.
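To make the idea of flagging anomalous behavior against a learned baseline concrete, here is a minimal sketch. It is a deliberately simple statistical stand-in (a z-score test), not the agentic systems the paper discusses; the function name, the threshold, and the login counts are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs from `observed` that deviate from the
    baseline mean by more than `threshold` sample standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: treat any different value as anomalous.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [
        (i, v)
        for i, v in enumerate(observed)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical failed-login counts per minute (illustrative values only).
baseline_counts = [4, 5, 6, 5, 4, 6, 5, 5]
observed_counts = [5, 6, 48, 4]

print(find_anomalies(baseline_counts, observed_counts))
```

A real deployment would update the baseline continuously and combine many signals; the sketch only shows the core comparison between expected and observed behavior.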

Agentic offensive security utilizes autonomous AI agents to simulate adversarial attacks, performing tasks traditionally associated with penetration testing and red teaming. These agents can independently discover and exploit system vulnerabilities, moving beyond signature-based detection to uncover zero-day exploits and complex attack paths. The process involves defining objectives for the agent, such as gaining access to specific data or escalating privileges, and allowing it to autonomously navigate the target system, identify weaknesses, and attempt exploitation. Results are then analyzed to provide actionable insights for remediation, improving overall security posture by proactively addressing potential attack vectors before they can be leveraged by malicious actors.

Traditional vulnerability management often focuses on weaknesses in discrete components, leaving systems susceptible to exploitation chains that leverage interactions between components. Agentic defense expands security coverage to system-level vulnerabilities by analyzing how system elements interconnect and anticipating exploitation pathways. Effective implementation requires training AI agents on extensive cybersecurity datasets; models such as Foundation-Sec-8B, an 8-billion-parameter model trained on a diverse range of security-focused data, indicate the scale and complexity required to identify and mitigate these systemic risks accurately. This holistic approach moves beyond reactive patching to proactive identification of vulnerabilities before they can be chained together for successful attacks.
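One way to picture system-level analysis is as a path search over a graph of components. The sketch below assumes a toy model in which a directed edge means "a compromise of the first component could expose the second"; the component names and edges are hypothetical, and breadth-first search is used here only as an illustration, not as the method described in the paper.

```python
from collections import deque


def find_chain(edges, entry, target):
    """Breadth-first search for the shortest chain of components
    leading from `entry` to `target`, or None if no chain exists."""
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)

    queue = deque([[entry]])
    seen = {entry}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


# Hypothetical dependency edges between components (illustrative only).
edges = [
    ("web_frontend", "app_server"),
    ("app_server", "internal_api"),
    ("internal_api", "database"),
    ("mail_gateway", "app_server"),
]

print(find_chain(edges, "web_frontend", "database"))
```

No single edge in this toy graph reaches the database from the frontend, yet a three-step chain does, which is the kind of risk that a component-by-component review can miss.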

Navigating the Alignment Problem: Governance and Guardrails for AI Security

Achieving robust AI safety alignment represents a fundamental challenge in the development of increasingly sophisticated artificial intelligence. This process ensures that AI agents pursue goals consistent with human intentions, preventing unintended and potentially harmful consequences, especially within critical security applications like autonomous defense systems or financial regulation. Misalignment can arise from specifying objectives imprecisely, leading the AI to optimize for a technically correct but practically undesirable outcome, a phenomenon often illustrated by thought experiments involving overly literal interpretations of commands. Researchers are actively exploring techniques such as reinforcement learning from human feedback, inverse reinforcement learning, and the development of verifiable AI systems to address this challenge, striving to create AI that not only can achieve a goal, but understands why that goal is desirable and operates within acceptable ethical boundaries.

Effective data governance is paramount in the development of safe and reliable artificial intelligence systems. The quality and characteristics of training data directly influence an AI agent’s behavior; biased, incomplete, or maliciously crafted datasets can lead to skewed outputs, discriminatory practices, or even intentionally harmful actions. Robust data governance practices involve meticulous data sourcing, rigorous cleaning and validation processes, and ongoing monitoring for drift or contamination. This includes establishing clear provenance tracking (understanding the origin and history of each data point) and implementing access controls to prevent unauthorized modification. By prioritizing data integrity and representativeness, developers can significantly minimize the risk of unintended consequences and foster trust in AI technologies, ensuring these systems align with ethical principles and societal values.
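As an illustration of provenance tracking, the sketch below stores a content hash alongside source metadata for each data point, so later modification can be detected. The record fields, the source label, and the sample payload are assumptions made for this example, not a scheme taken from the paper.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    source: str        # where the sample came from (hypothetical label)
    collected_at: str  # ISO 8601 timestamp supplied by the collector
    sha256: str        # hex digest of the sample's raw bytes


def make_record(content: bytes, source: str, collected_at: str) -> ProvenanceRecord:
    """Create a provenance record for one training sample."""
    digest = hashlib.sha256(content).hexdigest()
    return ProvenanceRecord(source=source, collected_at=collected_at, sha256=digest)


def verify(content: bytes, record: ProvenanceRecord) -> bool:
    """Return True if the content still matches the recorded digest."""
    return hashlib.sha256(content).hexdigest() == record.sha256


sample = b"GET /login HTTP/1.1"
record = make_record(
    sample,
    source="hypothetical-honeypot-01",
    collected_at="2026-01-15T00:00:00Z",
)

print(json.dumps(asdict(record), indent=2))
print(verify(sample, record))                # unchanged sample
print(verify(sample + b" tampered", record)) # modified sample
```

A hash only detects changes after the record is made; it says nothing about whether the original sample was trustworthy, which is why sourcing and validation remain separate steps.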

The implementation of output guardrails represents a critical layer of defense against the potential harms of increasingly sophisticated artificial intelligence. These guardrails function as filters, meticulously examining the generated outputs of AI models before they are disseminated or acted upon. This process involves identifying and blocking content deemed inappropriate, biased, or potentially dangerous – encompassing everything from hate speech and misinformation to instructions for harmful activities. Techniques range from simple keyword blocking and regular expression matching to more advanced methods utilizing natural language processing and machine learning to understand the intent behind the generated text. Effective guardrails are not simply reactive measures; developers are increasingly focusing on proactive strategies that constrain the AI’s output space during generation, guiding it towards safer and more beneficial responses. This multi-faceted approach is essential for responsible AI deployment, particularly in high-stakes applications where unchecked outputs could have significant consequences.
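The simplest techniques mentioned above, keyword blocking and regular expression matching, can be sketched in a few lines. The blocked keywords, the patterns, and the `check_output` function are hypothetical choices for illustration; a production guardrail would rely on far richer policies and, as noted, on learned classifiers.

```python
import re

# Hypothetical policy: the phrases and patterns below are illustrative only.
BLOCKED_KEYWORDS = {"rm -rf /", "drop table"}
BLOCKED_PATTERNS = [
    # Strings that look like IPv4 addresses (e.g. leaked internal hosts).
    re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    # Strings that look like an API key assignment.
    re.compile(r"api[_-]?key\s*[:=]\s*\S+", re.IGNORECASE),
]


def check_output(text):
    """Return (allowed, reasons) for a piece of model output."""
    reasons = []
    lowered = text.lower()
    for keyword in sorted(BLOCKED_KEYWORDS):
        if keyword in lowered:
            reasons.append(f"keyword: {keyword}")
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(text):
            reasons.append(f"pattern: {pattern.pattern}")
    return (not reasons, reasons)


print(check_output("Patch the server and restart the service."))
print(check_output("Then run DROP TABLE users on 10.0.0.5"))
```

Filters like this are easy to audit but also easy to evade through rephrasing, which is one reason the more advanced, intent-aware methods described above are layered on top of them.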

The Perpetual Arms Race: An Evolving Landscape of AI-Driven Conflict

The escalating sophistication of cyber threats is driving a fundamental shift in cybersecurity, demanding constant innovation in artificial intelligence. Defenses designed to counter attacks crafted by human experts are no longer sufficient; the emergence of automated, AI-powered attacks exhibiting ‘superhuman’ speed and adaptability compels a parallel evolution in defensive capabilities. This creates a continuous cycle: as AI agents develop increasingly effective offensive strategies, security systems must rapidly adapt and deploy countermeasures, also powered by AI. This isn’t a one-time upgrade, but a perpetual arms race where stagnation equates to vulnerability, forcing ongoing research and development in both offensive and defensive AI technologies to maintain even a minimal level of security. The speed and scale at which these AI agents can operate necessitate this proactive, iterative approach to cybersecurity, as traditional methods struggle to keep pace with the evolving threat landscape.

The escalating complexity of cyber threats is poised to be significantly shaped by advancements in large language models (LLMs). These models, originally designed for natural language processing, are increasingly demonstrating capabilities applicable to both offensive and defensive cybersecurity operations. LLMs can automate the discovery of vulnerabilities by analyzing vast codebases and identifying potential exploits, while simultaneously generating highly convincing phishing campaigns or crafting polymorphic malware that evades traditional signature-based detection. On the defensive side, LLMs are proving effective in threat intelligence gathering, automatically summarizing security reports, and even predicting future attack vectors based on observed patterns. This dual-use potential suggests LLMs won’t simply augment existing cybersecurity tools, but fundamentally alter the landscape, enabling AI agents capable of autonomous and adaptive strategies previously unattainable, and driving a new era of sophisticated, AI-powered cyber conflict.

The escalating sophistication of cyber threats, fueled by increasingly autonomous artificial intelligence, necessitates a fundamental shift toward proactive cybersecurity strategies. Traditional reactive defenses are quickly becoming insufficient as AI agents dramatically lower the cost and complexity of launching attacks – a projected trend demanding constant vigilance. A truly adaptive approach involves not only refining existing defenses, but also actively anticipating future threat vectors through continuous monitoring, threat modeling, and the development of resilient systems. This requires a dynamic security posture, capable of learning from emerging threats and autonomously adjusting defenses to maintain a robust security perimeter against the ever-evolving landscape of AI-powered attacks. Ultimately, cybersecurity’s future hinges on its ability to outpace the offensive capabilities of these intelligent agents through continuous innovation and a commitment to anticipating, rather than simply reacting to, the next generation of cyber warfare.

The pursuit of robust cybersecurity, as detailed in the study, inherently acknowledges the transient nature of defenses. Systems, even those meticulously designed, are subject to eventual compromise. This mirrors an observation attributed to Bertrand Russell: “The only thing that never changes is that things change.” The article’s advocacy for developing offensive AI capabilities isn’t about embracing attack, but rather recognizing the inevitable evolution of vulnerabilities and proactively preparing for them. The development of agentic systems, while presenting new risks, forces a cyclical adaptation: a constant reevaluation of defenses against increasingly sophisticated automated threats. This cycle acknowledges that even the most advanced architectures will eventually yield to time and innovation, demanding a continuous process of learning and refinement.

The Long Game

The pursuit of automated offensive capabilities, as this work suggests, isn’t about escalating a digital arms race; it’s acknowledging an inevitable progression. Systems learn to age gracefully, and in the realm of cybersecurity, that means anticipating the next iteration of threat. The challenge isn’t simply building defenses against known attacks, but cultivating an understanding of how intelligent agents will probe for weakness. This requires a shift in focus – from reactive patching to proactive vulnerability discovery, even if that discovery is facilitated by artificial adversaries.

A key limitation lies in the fidelity of simulation. Controlled environments, however sophisticated, will always be abstractions of the complex, chaotic reality of the open internet. The true test will be bridging the gap between synthetic attacks and real-world resilience. It may be that sometimes observing the process – meticulously analyzing the emergent behaviors of these agentic systems – is more valuable than attempting to accelerate their development or immediately deploy them as shields.

The field must now grapple with the ethical considerations inherent in cultivating artificial attackers. Beyond the immediate concerns of responsible disclosure, there’s a deeper question of what constitutes “defense” when the very act of probing for vulnerabilities introduces risk. The long game isn’t about perfect security, but about building systems that can adapt, learn, and endure, even as the landscape of threat continuously shifts.

Original article: https://arxiv.org/pdf/2602.02595.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-04 13:39