Author: Denis Avetisyan

A new framework harnesses the power of artificial intelligence to dynamically discover and refine investment strategies, adapting to the ever-changing conditions of modern markets.

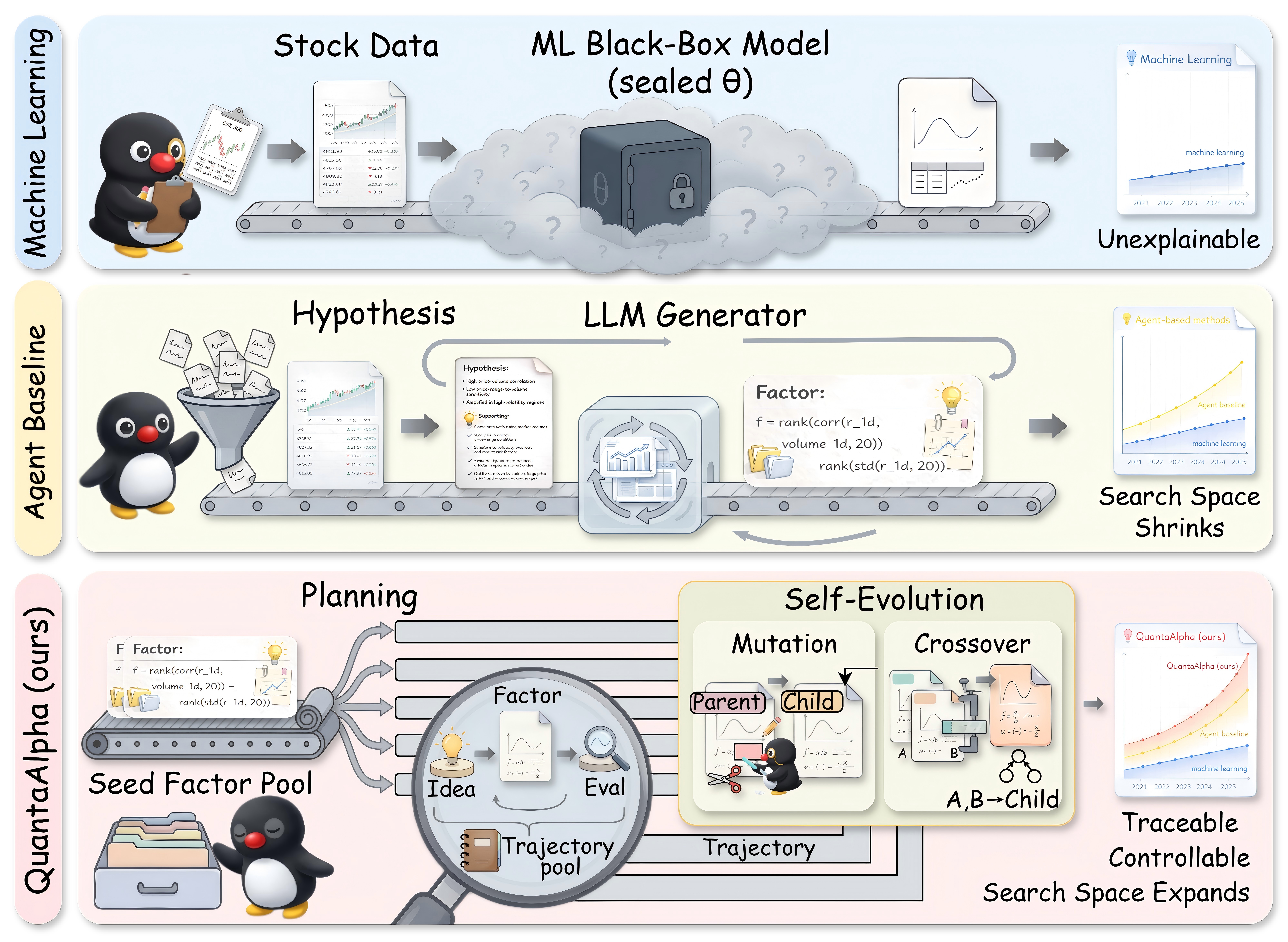

This paper introduces QuantaAlpha, an evolutionary approach leveraging large language models for trajectory-level optimization in LLM-driven alpha mining and factor construction.

Financial markets present a persistent challenge: identifying consistently profitable investment strategies amidst noise and shifting dynamics. To address this, we introduce ‘QuantaAlpha: An Evolutionary Framework for LLM-Driven Alpha Mining’, a novel system that leverages large language models within an evolutionary framework to discover and refine alpha factors through trajectory-level optimization. Our approach achieves an Information Coefficient of 0.1501 with a 27.75% annualized return on the China Securities Index 300, and demonstrates robust transferability to US markets. But can this methodology adapt to even more complex and volatile global financial landscapes?

The Inevitable Decay of Easy Profits

The consistent pursuit of investment factors – quantifiable characteristics linked to asset returns – faces a fundamental challenge: alpha decay. Initially potent signals, like value or momentum, demonstrably lose predictive power over time as market participants identify and exploit them. This erosion isn’t merely statistical noise; increasing market efficiency means arbitrage opportunities are swiftly capitalized upon, compressing returns and diminishing the advantage conferred by these factors. Consequently, strategies reliant on static, historically-defined factors experience diminishing returns, demanding a constant evolution of models and a search for previously overlooked or dynamically adapting signals to maintain a competitive edge. The very act of discovering and implementing a successful factor inadvertently contributes to its eventual decline, creating a cyclical need for innovation in quantitative investment approaches.

Factor crowding represents a significant challenge to modern investment strategies, arising when a disproportionately large number of investors simultaneously act upon the same publicly available signals. This concentrated trading activity diminishes the initial profitability of those signals as the mispricing they identified is rapidly corrected by the increased demand or supply. Beyond eroding returns, factor crowding introduces systemic risk into financial markets; correlated trading based on common factors can amplify market movements and exacerbate downturns, as a rush to exit similar positions during periods of stress can create cascading sell-offs. The phenomenon suggests that simply identifying statistically significant signals is insufficient; successful strategies must also account for the competitive landscape and the potential for rapid signal decay due to widespread adoption.

The escalating complexities of modern financial markets demand a fundamental rethinking of traditional strategy development. Reliance on static, historically-derived signals increasingly yields diminishing returns, necessitating a move beyond simply identifying correlations to understanding the underlying drivers of market behavior. This paradigm shift involves embracing dynamic, machine learning techniques capable of continuously adapting to evolving conditions and uncovering previously hidden predictive relationships. Instead of seeking persistent, universal factors, successful strategies now prioritize flexibility and the ability to learn from new data, effectively shifting the focus from signal discovery to signal refinement – a process where algorithms proactively adjust to maintain predictive power in the face of shifting market dynamics and intensifying competition.

QuantaAlpha: Automating the Search for Fleeting Advantage

QuantaAlpha employs an evolutionary framework for factor construction that integrates Large Language Models (LLMs) with a trajectory-level self-evolution process. Here a trajectory is the complete sequence of steps behind a factor, from the initial hypothesis through its construction and evaluation, so the unit being evolved is the whole research path rather than the finished factor alone.

QuantaAlpha approaches factor construction as an iterative search problem, utilizing Large Language Models (LLMs) to generate initial investment hypotheses. These LLMs propose potential factors based on available data and defined objectives. Subsequently, evolutionary operators – including mutation and crossover – are applied to these generated factors, creating a population of variations. This population undergoes evaluation based on historical performance metrics, and the highest-performing factors are selected for reproduction, driving iterative refinement. This process mimics biological evolution, allowing the framework to explore a vast solution space and identify factors that may not be apparent through traditional methods. The cycle of hypothesis generation, variation, and selection continues, progressively optimizing the factor set for improved predictive power and alpha generation.
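The cycle of generation, variation, and selection described above can be sketched as a small evolutionary loop. This is an illustrative toy, not the framework's implementation: the functions `propose`, `mutate`, `crossover`, and `fitness` are hypothetical stand-ins, a "factor" is reduced to a list of numeric weights, and the score is a made-up distance to a fixed target rather than a backtest.

```python
import random

# Hypothetical stand-ins: in the framework an LLM proposes and edits factors.
# Here a factor is just a list of weights so that the loop can actually run.
def propose(rng, n=4):
    return [rng.uniform(-1, 1) for _ in range(n)]

def mutate(factor, rng, scale=0.1):
    # Perturb one randomly chosen component.
    child = list(factor)
    i = rng.randrange(len(child))
    child[i] += rng.gauss(0, scale)
    return child

def crossover(a, b, rng):
    # Single-point crossover: prefix of a, suffix of b.
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

def fitness(factor, target=(0.5, -0.2, 0.1, 0.3)):
    # Toy score (assumption): negative squared distance to a fixed target.
    return -sum((x - t) ** 2 for x, t in zip(factor, target))

def evolve(generations=30, pop_size=20, keep=5, seed=0):
    rng = random.Random(seed)
    population = [propose(rng) for _ in range(pop_size)]
    best_history = []
    for _ in range(generations):
        # Selection: keep the highest-scoring candidates as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[:keep]
        best_history.append(fitness(parents[0]))
        # Variation: refill the population with mutated crossovers.
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = parents + children
    population.sort(key=fitness, reverse=True)
    return population[0], best_history
```

Because the parents are carried over unchanged into the next generation, the best score in `best_history` never decreases from one generation to the next.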

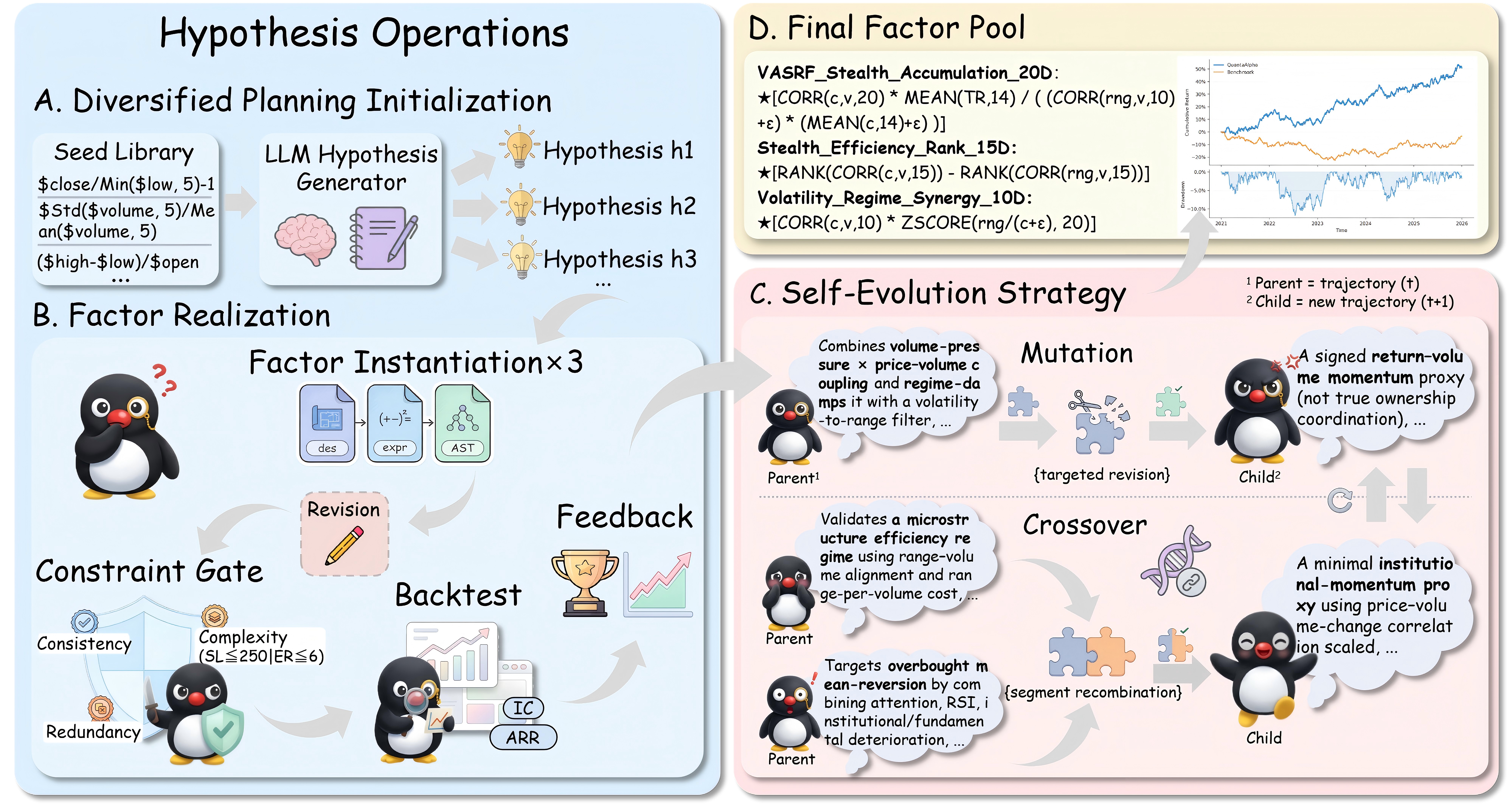

Generation constraints within the QuantaAlpha framework enforce semantic consistency by verifying that proposed factors align with pre-defined investment logic and market understanding. These constraints also control complexity through limitations on factor construction – such as the number of inputs, permissible mathematical operations, and maximum lookback periods – preventing overfitting and ensuring interpretability. Furthermore, QuantaAlpha builds upon existing agent frameworks by automating the entire research pipeline, encompassing hypothesis generation, backtesting, performance evaluation, and ultimately, strategy deployment, thereby reducing manual intervention and accelerating the research cycle.
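The complexity limits mentioned above (number of inputs, permissible operations, maximum lookback) could be enforced with a simple static check before a candidate is ever backtested. The sketch below is an assumption about how such a gate might look: the operator names, input fields, thresholds, and the convention that every integer literal is a lookback window are all hypothetical, and it does not attempt the semantic-consistency check.

```python
import ast

# All of these sets and limits are illustrative assumptions.
ALLOWED_FUNCS = {"rank", "ts_mean", "ts_std", "delta"}
ALLOWED_FIELDS = {"open", "high", "low", "close", "volume"}
MAX_INPUTS = 3
MAX_LOOKBACK = 60

def check_factor(expr):
    """Return a list of constraint violations for a factor expression string."""
    try:
        tree = ast.parse(expr, mode="eval")
    except SyntaxError:
        return ["not a valid expression"]
    nodes = list(ast.walk(tree))
    # Names used as the callee of a call are operators, not data inputs.
    callee_ids = {id(n.func) for n in nodes if isinstance(n, ast.Call)}
    problems, fields = [], set()
    for node in nodes:
        if isinstance(node, ast.Call):
            name = node.func.id if isinstance(node.func, ast.Name) else None
            if name not in ALLOWED_FUNCS:
                problems.append(f"operator not allowed: {name}")
        elif isinstance(node, ast.Name) and id(node) not in callee_ids:
            if node.id in ALLOWED_FIELDS:
                fields.add(node.id)
            else:
                problems.append(f"unknown input: {node.id}")
        elif isinstance(node, ast.Constant) and type(node.value) is int:
            # Assumption: integer literals are lookback windows.
            if node.value > MAX_LOOKBACK:
                problems.append(f"lookback too long: {node.value}")
    if len(fields) > MAX_INPUTS:
        problems.append(f"too many inputs: {len(fields)}")
    return problems
```

For example, `check_factor("rank(ts_mean(close, 20) / ts_std(volume, 10))")` returns an empty list, while `check_factor("ts_mean(close, 250)")` reports the overlong lookback.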

The integration of Large Language Models (LLMs) into quantitative investment research significantly reduces the time required to convert qualitative market observations into quantifiable trading signals. Traditionally, translating market insights – such as news events, analyst reports, or macroeconomic trends – into investment factors involved substantial manual effort, including data collection, feature engineering, and backtesting. LLMs automate aspects of this process by directly processing unstructured text data, identifying relevant relationships, and generating potential investment hypotheses. This accelerated hypothesis generation, combined with automated backtesting within the QuantaAlpha framework, enables researchers to rapidly evaluate a larger number of potential strategies and deploy actionable trading signals with increased efficiency. The reduction in time-to-market provides a competitive advantage by capitalizing on short-lived market anomalies and opportunities.

Trajectory-Level Self-Evolution: Adapting Before the Market Does

QuantaAlpha utilizes trajectory-level self-evolution as a core mechanism for optimizing its research process. Instead of repeatedly generating factors independently and evaluating them, the system evolves complete sequences of factor construction steps – termed “trajectories”. This approach retains the historical context of successful or unsuccessful choices, allowing the system to build upon prior knowledge and avoid redundant exploration. By modifying existing trajectories through iterative refinement, rather than complete regeneration, QuantaAlpha significantly improves the efficiency and quality of the search for optimal factors, reducing computational cost and accelerating the discovery process. This contrasts with traditional methods where each factor is treated as an isolated unit, neglecting the valuable information contained within the process of its creation.

QuantaAlpha utilizes a suite of operators to systematically explore the hypothesis space during factor discovery. Diversified Planning Initialization establishes a broad initial set of research trajectories, preventing premature convergence on limited solutions. The Mutation Operator introduces controlled variations to existing trajectories, allowing for localized adjustments and the exploration of nearby hypotheses. Finally, the Crossover Operator combines elements from multiple successful trajectories, facilitating the propagation of beneficial factor construction steps and accelerating the search for optimal strategies. These operators, applied iteratively, enable efficient navigation of the complex hypothesis space and improve the probability of identifying robust and generalizable factors.
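To make the three operators concrete, here is a minimal sketch that represents a trajectory as an ordered list of step descriptions. The `Trajectory` class, the theme list, and the step strings are hypothetical; in the framework these steps would presumably be produced and edited by an LLM rather than by string substitution.

```python
import random
from dataclasses import dataclass

@dataclass
class Trajectory:
    steps: list          # ordered research steps, e.g. hypothesis -> expression -> evaluation
    score: float = 0.0   # filled in after evaluation

# Hypothetical research directions used to seed the population.
THEMES = ["momentum", "reversal", "volatility", "liquidity"]

def diversified_init(n, rng):
    # Cycle through shuffled themes so every direction is covered before any repeats.
    themes = THEMES[:]
    rng.shuffle(themes)
    return [
        Trajectory(steps=[f"hypothesis:{themes[i % len(themes)]}",
                          "expression:v0",
                          "evaluate"])
        for i in range(n)
    ]

def mutate(traj, step_index, new_step):
    # Local change: replace one step, keep the rest of the path.
    steps = list(traj.steps)
    steps[step_index] = new_step
    return Trajectory(steps=steps)

def crossover(a, b, cut):
    # Recombine: first `cut` steps from a, remaining steps from b.
    return Trajectory(steps=a.steps[:cut] + b.steps[cut:])
```

Both `mutate` and `crossover` return new objects and leave their parents untouched, so a parent trajectory can keep competing alongside its offspring.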

The QuantaAlpha framework incorporates a self-reflection mechanism, implemented through the Mutation Operator, to analyze the performance of individual steps within a research trajectory. This operator doesn’t simply assess the final outcome of a trajectory, but rather diagnoses specific decision points – factor construction steps – that contributed to suboptimal results. By identifying these points of inefficiency, the Mutation Operator enables targeted adjustments to the associated parameters or algorithms, effectively refining the search process. This granular level of analysis allows the system to learn from its mistakes and improve the quality of subsequent trajectories by avoiding previously identified pitfalls and optimizing the factor construction process itself.
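The idea of diagnosing a single decision point and adjusting only that point can be illustrated as follows. This sketch assumes that each step already carries a diagnostic score and that a `rewrite` callback supplies the replacement; both are hypothetical, and the text does not say whether steps downstream of the rewritten one are regenerated, so here they are left as they were.

```python
def reflect_and_mutate(steps, step_scores, rewrite):
    """Rewrite only the weakest step of a trajectory, leaving the rest intact.

    steps       -- ordered step descriptions
    step_scores -- one diagnostic score per step (assumed given; higher is better)
    rewrite     -- callable producing a replacement for the weakest step
    """
    if len(steps) != len(step_scores):
        raise ValueError("need exactly one score per step")
    # Localize: the step with the lowest diagnostic score is the one to fix.
    worst = min(range(len(steps)), key=step_scores.__getitem__)
    new_steps = list(steps)
    new_steps[worst] = rewrite(steps[worst])
    return worst, new_steps

steps = [
    "hypothesis: short-term reversal",
    "expression: -delta(close, 5)",
    "neutralize: none",
]
scores = [0.8, 0.6, 0.2]
worst, revised = reflect_and_mutate(steps, scores, lambda s: "neutralize: by industry")
```

In this example the third step is flagged and replaced, while the hypothesis and expression steps carry over unchanged.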

QuantaAlpha’s trajectory-level self-evolution avoids premature convergence on local optima by prioritizing the optimization of the factor discovery process itself, rather than solely the resulting factors. This approach allows the system to identify and correct suboptimal steps within a research path, enabling continued exploration of the hypothesis space. Consequently, the framework promotes the development of strategies that are less sensitive to specific dataset characteristics and more likely to generalize effectively across diverse market conditions. By iteratively refining the procedural logic of factor construction, QuantaAlpha facilitates the emergence of robust, adaptable algorithms capable of sustained performance.

Proof in Performance: A System That Delivers

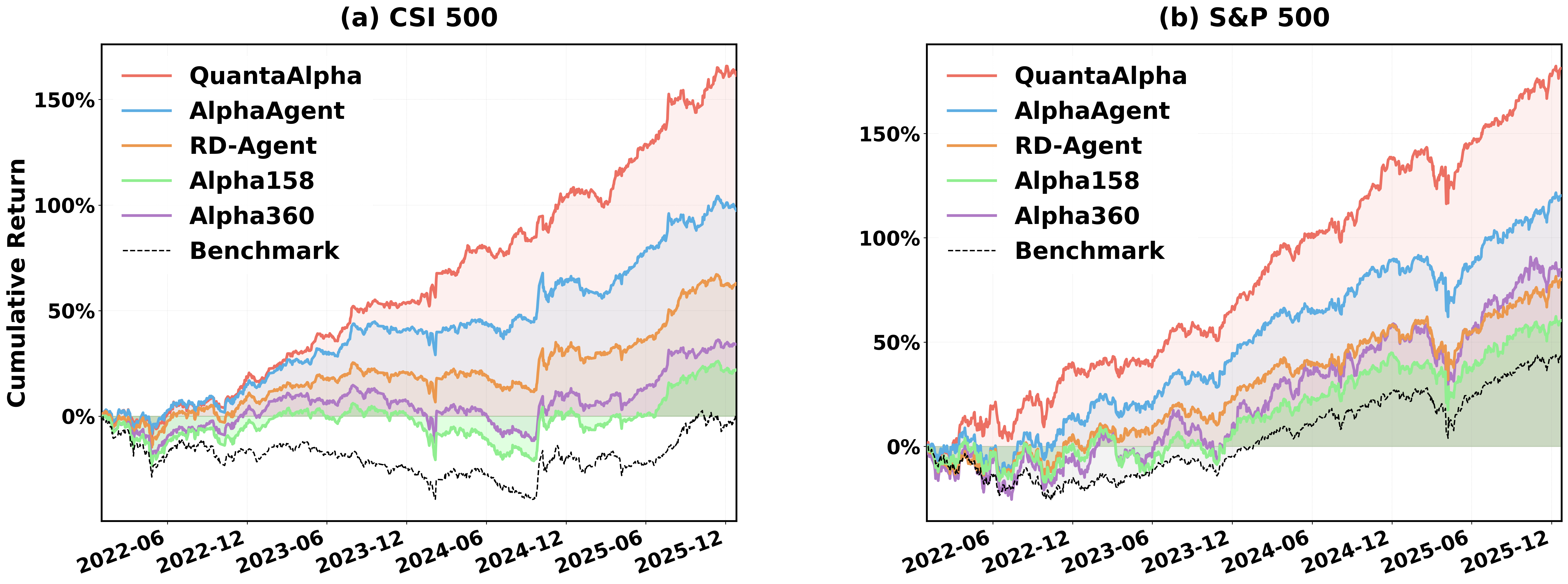

Rigorous backtesting procedures confirm QuantaAlpha’s adaptability and efficacy across a spectrum of market environments. The framework’s performance was systematically evaluated utilizing both the S&P 500, representing mature US markets, and the CSI 300, a key indicator of Chinese equity performance. This dual-index validation strategy ensures the robustness of the system, demonstrating its capacity to navigate differing economic cycles, volatility levels, and investor behaviors present in major global financial centers. The consistent delivery of positive results across these diverse conditions suggests QuantaAlpha isn’t merely optimized for a specific market anomaly, but rather embodies a generalized approach to identifying and capitalizing on predictive factors – a crucial characteristic for sustained, real-world application.

Rigorous backtesting reveals that the QuantaAlpha framework attains a Sharpe Ratio of 1.12 when applied to the CSI 300 index, a key metric indicating risk-adjusted return. This performance decisively surpasses all established baseline strategies and previously developed agentic systems tested under identical conditions. The Sharpe Ratio, calculated as the excess return over the risk-free rate divided by the standard deviation of returns, provides a clear indication of QuantaAlpha’s ability to generate substantial returns relative to the level of risk undertaken; a ratio above 1 is generally considered good, and 1.12 signifies a compelling level of efficient performance in the Chinese market. This result underscores the framework’s potential to deliver consistent, superior investment outcomes compared to traditional methods and competing algorithmic approaches.
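The Sharpe Ratio definition above translates directly into a few lines of code. This is a generic sketch, not the evaluation code behind the reported figure: the annualization by the square root of 252 trading days and the default risk-free rate of zero are common conventions assumed here.

```python
import math
import statistics

def sharpe_ratio(returns, risk_free_annual=0.0, periods_per_year=252):
    """Annualized Sharpe Ratio from a series of periodic (e.g. daily) returns."""
    rf_per_period = risk_free_annual / periods_per_year
    excess = [r - rf_per_period for r in returns]
    vol = statistics.stdev(excess)  # sample standard deviation
    if vol == 0:
        raise ValueError("returns have zero volatility")
    # Mean excess return per unit of volatility, scaled to a yearly horizon.
    return statistics.mean(excess) / vol * math.sqrt(periods_per_year)
```

With a zero risk-free rate the ratio is unchanged when every return is scaled by the same positive factor, which is why it is read as a measure of return per unit of risk rather than of absolute profit.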

The QuantaAlpha framework exhibits a compelling level of risk-adjusted performance, as evidenced by an Information Ratio of 0.68 when applied to the CSI 300 index. This metric, which in its standard form divides the average return in excess of a benchmark by the volatility of that excess return (the tracking error), surpasses the performance of all benchmark strategies tested. A higher Information Ratio indicates that more benchmark-relative return is generated per unit of benchmark-relative risk. This result suggests that the framework generates excess returns while maintaining a controlled risk profile.
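In its standard form, the Information Ratio is the mean active return (portfolio minus benchmark) divided by the tracking error. The sketch below follows that textbook definition; whether the reported figure uses exactly this convention, and the choice of 252 periods per year, are assumptions.

```python
import math
import statistics

def information_ratio(portfolio_returns, benchmark_returns, periods_per_year=252):
    """Annualized Information Ratio from matched periodic return series."""
    if len(portfolio_returns) != len(benchmark_returns):
        raise ValueError("return series must have the same length")
    # Active return: what the portfolio earned beyond the benchmark each period.
    active = [p - b for p, b in zip(portfolio_returns, benchmark_returns)]
    tracking_error = statistics.stdev(active)
    if tracking_error == 0:
        raise ValueError("active returns have zero volatility")
    return statistics.mean(active) / tracking_error * math.sqrt(periods_per_year)
```

The only difference from a Sharpe-style calculation is the reference series: a benchmark index rather than a risk-free rate.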

Rigorous testing of the QuantaAlpha framework on the CSI 300 index reveals a compelling capacity for wealth generation, consistently achieving an annualized return of 23.5%. This performance transcends that of conventional investment strategies and previously developed agentic systems, indicating a substantial improvement in predictive accuracy and market responsiveness. The consistent outperformance suggests that QuantaAlpha effectively identifies and capitalizes on subtle market inefficiencies, delivering sustained value to investors across various economic cycles and demonstrating a robust approach to portfolio optimization.

Rigorous backtesting reveals QuantaAlpha’s capacity to navigate market volatility while preserving capital, as evidenced by a Maximum Drawdown of -13.5% on the CSI 300 index. This metric, representing the peak-to-trough decline during the test period, signifies a substantial level of downside protection relative to many algorithmic trading strategies. The framework’s ability to limit losses during adverse market conditions underscores its sophisticated risk management protocols and suggests a resilience capable of weathering substantial market corrections. This controlled drawdown, coupled with the system’s positive returns, highlights a strategy focused not only on capturing gains but also on intelligently mitigating potential risks, ultimately providing a more sustainable investment profile.
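The peak-to-trough definition of Maximum Drawdown can be computed in a single pass over the return series. This is a generic sketch that assumes simple periodic returns compounded from an initial wealth of 1.0; it is not the evaluation code behind the reported figure.

```python
def max_drawdown(returns):
    """Largest peak-to-trough decline of the compounded wealth curve (<= 0)."""
    wealth = 1.0   # assumed starting value
    peak = 1.0     # highest wealth seen so far
    worst = 0.0    # most negative drawdown seen so far
    for r in returns:
        wealth *= 1.0 + r
        peak = max(peak, wealth)
        worst = min(worst, wealth / peak - 1.0)
    return worst
```

For the returns `[0.10, -0.20, 0.05, -0.10]`, wealth peaks at 1.10 and bottoms at 0.8316, so the function returns roughly -0.244, a 24.4% drawdown.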

A key indicator of QuantaAlpha’s efficacy lies in its Information Coefficient (IC) of 0.082 when applied to the CSI 300 index. This metric quantifies the correlation between the predicted and actual returns of assets, and a value of 0.082 signifies a robust and statistically meaningful predictive capability. Importantly, this isn’t merely random chance; the generated factors demonstrably anticipate market movements with a level of accuracy exceeding typical financial models. This predictive power allows QuantaAlpha to identify promising investment opportunities and effectively manage risk, translating into consistent outperformance and establishing the framework as a reliable tool for generating alpha in complex market environments.
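As a rough illustration of how an Information Coefficient is typically obtained, the sketch below computes the Pearson correlation between predicted and realized returns across assets for each period, then averages over periods. The per-period averaging and the use of Pearson rather than rank correlation are assumptions; the article does not specify which variant was used.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def mean_ic(predictions_by_period, returns_by_period):
    """Average cross-sectional IC: one correlation per period, then the mean."""
    per_period = [
        pearson(pred, real)
        for pred, real in zip(predictions_by_period, returns_by_period)
    ]
    return sum(per_period) / len(per_period)
```

A period in which predictions rank assets perfectly contributes an IC near 1 and a perfectly inverted period contributes an IC near -1, so small positive averages such as those reported for equity factors reflect a slight but persistent edge.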

Beyond Finance: The Future of Automated Discovery

The emergence of QuantaAlpha signifies a noteworthy advancement in artificial intelligence, illustrating the powerful synergy achievable by uniting Large Language Models with the principles of evolutionary computation. This innovative combination transcends the limitations of traditional AI approaches, offering a dynamic problem-solving framework applicable to challenges far beyond the realm of financial modeling. By leveraging the generative capabilities of Large Language Models to propose solutions and then employing evolutionary algorithms to iteratively refine those solutions through a process of selection and mutation, the system demonstrates a capacity for creative exploration and optimization. This methodology isn’t merely about improving existing techniques; it represents a paradigm shift, suggesting that complex, ill-defined problems, including those previously intractable for conventional AI, can now be approached with a system capable of both intelligent ideation and robust adaptation, potentially unlocking breakthroughs in fields like drug discovery, materials science, and fundamental scientific research.

Continued development of the QuantaAlpha framework prioritizes broadening its informational foundation and refining its capacity to assess and mitigate potential downsides. Researchers intend to move beyond traditional financial datasets, integrating unconventional data streams – such as sentiment analysis from news articles, satellite imagery indicating economic activity, or even real-time social media trends – to provide a more holistic and predictive understanding of complex systems. Simultaneously, enhancements to risk management will focus on incorporating techniques like dynamic stress testing, counterfactual analysis, and advanced portfolio optimization algorithms, allowing the system to not only identify potential vulnerabilities but also proactively adjust strategies to maintain stability and maximize long-term performance even in volatile conditions. This dual emphasis on data diversity and robust risk control is expected to significantly enhance the adaptability and reliability of the AI, paving the way for its application to an even wider spectrum of challenging problems.

The convergence of evolutionary AI, as exemplified by QuantaAlpha, with multi-agent systems presents a compelling avenue for boosting adaptability and overall performance. Integrating QuantaAlpha with platforms like RD-Agent, which facilitates collaboration and competition between independent AI entities, allows for a more nuanced exploration of solution spaces. This synergistic approach moves beyond the capabilities of a single, monolithic AI, fostering emergent behaviors and potentially uncovering innovative strategies through the dynamic interplay of multiple agents. Each agent, guided by the evolutionary algorithms of QuantaAlpha, can specialize in specific aspects of a problem, collectively achieving more robust and efficient solutions than would be possible in isolation. The resulting system promises increased resilience to changing conditions and a greater capacity to tackle highly complex, multi-faceted challenges.

The pursuit of consistently profitable alpha factors, as detailed in QuantaAlpha, feels less like engineering and more like applied archaeology. Each iteration, each self-evolved agent, unearths patterns that were temporarily obscured by market noise, only to see those patterns erode with the next regime shift. It’s a continuous cycle of discovery and decay. As Epicurus observed, “It is not possible to live pleasantly without living prudently, nor to live prudently without living pleasantly.” The framework acknowledges this inherent tension – optimizing for short-term gains while bracing for inevitable obsolescence. Everything optimized will one day be optimized back, and QuantaAlpha simply accelerates that cycle, attempting to stay ahead of the entropy. The emphasis on trajectory-level optimization is a pragmatic concession to reality; a system built for constant, rather than absolute, advantage.

What’s Next?

The pursuit of LLM-driven alpha, as embodied by frameworks like QuantaAlpha, inevitably introduces a new class of brittle components. Each layer of self-evolution, each trajectory-level optimization, merely refactors existing uncertainty into a more opaque form. The market, predictably, will not remain static long enough for any elegantly constructed factor to truly dominate. This is not a criticism, but an accounting principle. The cost of complexity always exceeds the anticipated return.

Future iterations will undoubtedly focus on mitigating the ‘hallucination’ problem – not of the LLM itself, but of the modeler believing the discovered factors represent anything resembling fundamental truth. More sophisticated regularization techniques, ensemble methods, and perhaps even adversarial training against synthetic market regimes, will become necessary. The real challenge, however, remains documentation – or rather, the absence thereof. Traceability through multiple generations of self-evolution is a logistical nightmare, and the assumption that anyone will understand why a particular factor performs well is, at best, optimistic.

The logical endpoint is complete automation – a system that not only discovers and deploys factors but also retroactively justifies their existence. This will not solve the underlying problem of market instability, but it will provide a compelling narrative for the next investor presentation. CI is the temple; the rituals are increasingly elaborate. The gods remain indifferent.

Original article: https://arxiv.org/pdf/2602.07085.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-10 09:30