Author: Denis Avetisyan

Researchers have developed a multi-agent artificial intelligence system to automatically detect and categorize foreign interference attempts on social media platforms.

This paper presents an agentic operationalization of the DISARM framework for Foreign Information Manipulation and Interference (FIMI) investigation, using a multi-agent system that maps observed behaviors onto DISARM tactics, techniques, and procedures (TTPs).

Despite increasing recognition of the threat posed by foreign information manipulation and interference (FIMI), scalable analysis remains a significant challenge for allied partners. This paper, ‘An Agentic Operationalization of DISARM for FIMI Investigation on Social Media’, introduces a novel multi-agent AI pipeline designed to operationalize the DISARM framework for automated detection and categorization of manipulative behaviors on social media. Our approach effectively scales the predominantly manual work of FIMI analysis by mapping observed tactics to standard DISARM taxonomies in a transparent manner. Will this agentic operationalization provide a critical step toward enhanced situational awareness and improved data interoperability in the face of evolving information operations?

The Rising Tide of Deception: Understanding Modern Information Warfare

Foreign Information Manipulation and Interference (FIMI) poses a growing threat to democratic societies and national security. Once largely the preserve of state-sponsored actors, these campaigns now involve a wider range of entities and increasingly sophisticated techniques. Beyond simple disinformation, modern FIMI encompasses coordinated inauthentic behavior, the amplification of divisive narratives, and the exploitation of cognitive biases to erode public trust and polarize communities. The volume of information circulating online, combined with the speed at which these campaigns spread (often through automated bots and strategically timed content releases), strains traditional detection and countermeasure strategies and calls for a proactive, adaptive defense. The aim of such campaigns is not merely to contest facts but to undermine citizens' ability to tell truth from falsehood and to participate meaningfully in civic life.

The scale and speed of contemporary FIMI campaigns are outpacing human analysis. Investigative teams once dissected disinformation narratives by hand, tracing their origins and assessing their impact; against a constant stream of fabricated content and coordinated inauthentic behavior, that process is no longer practical. This growth in volume and velocity calls for automated detection and analysis. Such systems use natural language processing, machine learning, and network analysis to identify patterns, flag suspicious activity, and prioritize threats, freeing security professionals to focus on nuanced investigations and strategic countermeasures. Without these tools, the ability to respond to sophisticated FIMI operations is severely limited, leaving democratic institutions and national security increasingly exposed.

An Agentic Pipeline for Dissecting Influence Operations

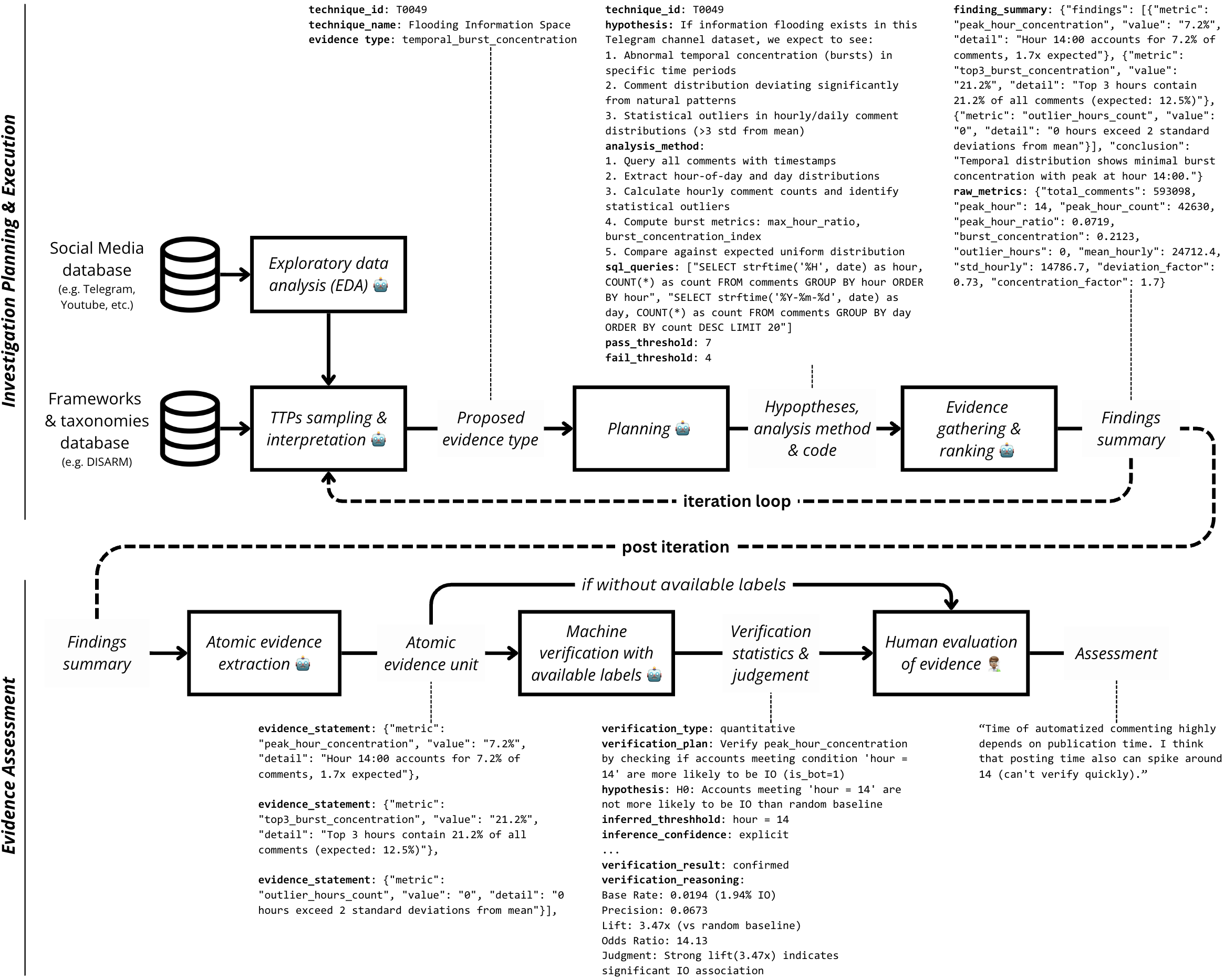

A specialized Multi-Agent Pipeline was developed to identify manipulative behaviors in social media data and categorize them according to the Tactics, Techniques, and Procedures (TTPs) of the DISARM Framework. It follows an agentic AI approach: several autonomous agents work together to detect behaviors and map them onto specific DISARM TTPs. Rather than relying on keyword matching, the agents analyze context and intent when categorizing manipulative actions. The result is a structured, repeatable analysis of influence operations and a standardized way to identify and describe deceptive strategies.
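To make "mapping a behavior onto a TTP" concrete, the sketch below models a single finding as a small record that must carry both a DISARM technique ID and the evidence behind it. The `Finding` name, its fields, and the ID pattern (`T` plus four digits, with an optional three-digit sub-technique suffix) are assumptions for illustration, not the paper's data model.

```python
import re
from dataclasses import dataclass

# Assumed DISARM-style ID: "T" + four digits, optional ".NNN" sub-technique suffix.
DISARM_TECHNIQUE_ID = re.compile(r"T\d{4}(?:\.\d{3})?")


@dataclass(frozen=True)
class Finding:
    """One observed behavior mapped onto a DISARM technique (hypothetical schema)."""

    technique_id: str                   # DISARM technique the behavior is mapped to
    description: str                    # what the agent observed, in plain language
    evidence_ids: tuple[str, ...] = ()  # post/account identifiers backing the claim

    def __post_init__(self) -> None:
        # Reject anything that does not look like a DISARM technique ID.
        if not DISARM_TECHNIQUE_ID.fullmatch(self.technique_id):
            raise ValueError(f"not a DISARM-style technique ID: {self.technique_id!r}")
        # A categorization without concrete evidence cannot be audited later.
        if not self.evidence_ids:
            raise ValueError("a finding must cite at least one piece of evidence")


finding = Finding("T0000", "Near-identical replies posted within minutes", ("post:1", "post:2"))
```

Rejecting evidence-free findings at construction time is one way to keep categorization auditable; `T0000` is a placeholder, not a real technique mapping.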

The pipeline uses a Technique-Guided Agent Architecture that turns the DISARM Framework into a structured, executable workflow. Each agent is tasked with finding evidence for specific DISARM TTPs and investigates potential influence operations by applying those technique definitions. Operationalizing DISARM this way keeps analysis consistent across investigation cycles, reduces subjective interpretation, and yields repeatable, auditable results.
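A technique-guided architecture can be sketched as one agent per DISARM technique, each given that technique's definition and asked the same fixed question of every post. This is a minimal sketch assuming a plain text-in, text-out model call: `ask_model` is a hypothetical stand-in for the LLM (no real client API is shown), and `Technique`, `run_technique_agent`, and `run_pipeline` are illustrative names.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for an LLM call: prompt text in, reply text out.
ModelFn = Callable[[str], str]


@dataclass(frozen=True)
class Technique:
    technique_id: str  # DISARM technique ID
    definition: str    # technique description the agent is told to look for


def run_technique_agent(technique: Technique, posts: list[str], ask_model: ModelFn) -> list[int]:
    """Ask the model, post by post, whether each post shows this one technique.

    Returns the indices of flagged posts. The prompt template is fixed per
    technique, so repeated runs ask exactly the same questions.
    """
    flagged = []
    for i, post in enumerate(posts):
        prompt = (
            f"DISARM technique {technique.technique_id}: {technique.definition}\n"
            f"Post: {post}\n"
            "Answer YES if the post is evidence of this technique, otherwise NO."
        )
        if ask_model(prompt).strip().upper().startswith("YES"):
            flagged.append(i)
    return flagged


def run_pipeline(techniques: list[Technique], posts: list[str], ask_model: ModelFn) -> dict[str, list[int]]:
    """Dispatch one agent per technique; collect flagged post indices by technique ID."""
    return {t.technique_id: run_technique_agent(t, posts, ask_model) for t in techniques}
```

A production agent would batch posts and return evidence alongside each verdict; fixing the prompt template per technique is the design choice that makes repeated runs comparable.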

The pipeline uses the Claude Opus 4.5 large language model for processing and analysis. All evidence gathered during execution, together with the verification outputs produced at each stage, is stored persistently in a SQL database. The database's ACID properties preserve data integrity, and the design can scale with growing data volumes and investigation complexity. One complete run of the pipeline, from data ingestion to final TTP mapping, currently takes 35 minutes of API runtime and costs USD 11.40 at current Claude Opus 4.5 pricing.
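The article states only that evidence and per-stage verification outputs are kept in a SQL database with ACID guarantees. The sketch below assumes SQLite and a hypothetical two-table layout (`evidence`, `verification`), and shows how wrapping each stage in a single transaction means a failed stage leaves nothing half-written.

```python
import sqlite3

# Hypothetical schema: one row per piece of evidence, one verification row per evidence row.
SCHEMA = """
CREATE TABLE IF NOT EXISTS evidence (
    id           INTEGER PRIMARY KEY,
    stage        TEXT NOT NULL,      -- pipeline stage that produced the record
    technique_id TEXT,               -- DISARM technique, if already mapped
    content      TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS verification (
    id          INTEGER PRIMARY KEY,
    evidence_id INTEGER NOT NULL REFERENCES evidence(id),
    passed      INTEGER NOT NULL CHECK (passed IN (0, 1)),
    notes       TEXT
);
"""


def record_stage(conn: sqlite3.Connection, stage: str, items: list[tuple]) -> None:
    """Store one stage's evidence and verification results atomically.

    `items` holds (technique_id, content, passed, notes) tuples. Using the
    connection as a context manager commits on success and rolls back on
    any exception, so a partially written stage never persists.
    """
    with conn:
        for technique_id, content, passed, notes in items:
            cur = conn.execute(
                "INSERT INTO evidence (stage, technique_id, content) VALUES (?, ?, ?)",
                (stage, technique_id, content),
            )
            conn.execute(
                "INSERT INTO verification (evidence_id, passed, notes) VALUES (?, ?, ?)",
                (cur.lastrowid, int(passed), notes),
            )


conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript(SCHEMA)
```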

Validating the Pipeline Against Real-World Deception

The Multi-Agent Pipeline was validated on two real-world datasets of coordinated inauthentic behavior. The first covers activity on X (formerly Twitter) from a Chinese influence campaign targeting Guo Wengui; the second covers a Russian campaign on Telegram aimed at influencing Moldovan elections. Because both are documented instances of state-sponsored disinformation and manipulation, they provide a basis for evaluating how well the pipeline identifies and categorizes the relevant tactics, techniques, and procedures (TTPs).

Across 14 autonomous iterations on these datasets, the pipeline achieved a 50% technique pass rate, meaning that roughly half of its technique assignments held up. The metric counts how often behaviors identified by the pipeline were successfully attributed to documented DISARM TTPs, so it gives a quantitative measure of the system's analytical accuracy and of its alignment with established influence-operation methodology.
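How a pass rate is aggregated matters. The article does not say whether the 50% figure pools all technique assignments across the 14 iterations or averages per-iteration rates, so this sketch computes both; the function names and toy data are illustrative, not results from the paper.

```python
from statistics import mean


def pooled_pass_rate(iterations: list[list[bool]]) -> float:
    """Passed assignments divided by all assignments, across every iteration."""
    results = [passed for run in iterations for passed in run]
    if not results:
        raise ValueError("no technique assignments to score")
    return sum(results) / len(results)


def mean_iteration_pass_rate(iterations: list[list[bool]]) -> float:
    """Average of each iteration's own pass rate (empty iterations are skipped)."""
    rates = [sum(run) / len(run) for run in iterations if run]
    if not rates:
        raise ValueError("no technique assignments to score")
    return mean(rates)


# Toy data, not from the paper: each inner list holds one iteration's verdicts.
toy = [[True], [False, False, True]]
```

With this toy data the pooled rate is 0.5 while the mean per-iteration rate is about 0.67, because the pooled figure weights iterations by how many assignments they made.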

On the Telegram dataset, the pipeline flagged more than 30 bot accounts that human analysts had not previously identified, suggesting it can surface activity that manual review misses. Because it is built on agentic AI rather than predefined signatures or rules, the pipeline is designed to investigate complex, open-ended datasets and to adapt to new and evolving bot tactics. This adaptability matters for catching novel manipulative behaviors that would otherwise evade detection.

Implications for Modern Warfare and a Unified Defense

In the context of Multi-Domain Operations, the agent-based DISARM operationalization promises three gains. First, rapid processing and synthesis of diverse data streams gives a more comprehensive picture of the operational environment and thus better situational awareness. Second, because DISARM supplies a shared vocabulary, findings can move between systems and personnel, breaking down data silos and encouraging information sharing. Third, this supports more effective Human-Machine Teaming, in which operators combine human judgment with AI-driven analysis for better decisions and coordinated action. The intended result is a more agile, responsive force, better equipped for the challenges of modern warfare.

The system shortens the decision cycle for threat response by accelerating the first two stages of the OODA loop (Observe, Orient, Decide, Act). Observation and orientation traditionally suffer delays in data collection, analysis, and interpretation; the automated pipeline processes information quickly and delivers a condensed, contextualized picture of the situation. The gain is not only speed: faster orientation lets operators act proactively rather than reactively and time their countermeasures better, shifting the emphasis from analyzing past events toward anticipating threats before they fully materialize, a vital capability in dynamic, multi-domain operational environments.

DISARM's standardized outputs support data fusion against increasingly complex transnational information threats. By expressing disparate information streams in a common format, the system can integrate with existing defense networks and analytical tools. Beyond technical compatibility, this creates a shared operational picture to which multiple agencies and international partners can contribute and from which all of them benefit. The resulting situational awareness speeds threat detection, clarifies attribution, and enables a more coordinated response to disinformation campaigns and malicious cyber activity than isolated analyses allow.
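One possible vehicle for exchanging DISARM-tagged findings is STIX 2.1, in which a technique can be represented as an `attack-pattern` object. The sketch below builds a minimal such object and wraps it in a bundle; using STIX at all, and the `"DISARM"` `source_name`, are assumptions rather than details from the paper.

```python
import json
import uuid
from datetime import datetime, timezone


def _stix_timestamp() -> str:
    """Current UTC time in STIX format with millisecond precision, e.g. 2026-01-22T22:44:00.123Z."""
    return datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"


def to_stix_attack_pattern(technique_id: str, name: str) -> dict:
    """Wrap a DISARM technique as a minimal STIX 2.1 attack-pattern object.

    The "DISARM" source_name is an assumption; check it against the
    identifiers in whatever shared STIX bundle partners actually exchange.
    """
    now = _stix_timestamp()
    return {
        "type": "attack-pattern",
        "spec_version": "2.1",
        "id": f"attack-pattern--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "external_references": [{"source_name": "DISARM", "external_id": technique_id}],
    }


def to_bundle(objects: list[dict]) -> str:
    """Serialize objects as a STIX 2.1 bundle (bundles carry no spec_version in 2.1)."""
    return json.dumps(
        {"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": objects},
        indent=2,
    )
```

In a real exchange, partners would reuse the attack-pattern IDs from a shared DISARM bundle rather than minting a fresh UUID on each export, so that the same technique deduplicates across sources.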

The pursuit of malicious actors in the information landscape, as this agentic operationalization of DISARM illustrates, rewards a relentless focus on essentials. A remark attributed to Carl Friedrich Gauss, “Few things are more deceptive than a simple appearance,” captures the core challenge of Foreign Information Manipulation and Interference (FIMI) investigation. The multi-agent system strips away superficial characteristics, the ‘simple appearance’, to reveal the tactics, techniques, and procedures of those seeking to disrupt or mislead. By distilling complex social media data into actionable intelligence, it favors clarity over exhaustive detail in the pursuit of truth, in the spirit of Gauss's own preference for elegant simplicity.

What Remains

The automation of detection is not, itself, detection. This work offers a procedural scaffolding, a means to categorize activity, but assigns no inherent malice. The system highlights patterns; interpretation remains the domain of analysis – a necessary, and likely permanent, constraint. The simplification inherent in categorization always risks obscuring nuance, a trade-off that demands continued scrutiny.

Future iterations should address the inherent asymmetry of the problem. Interference adapts; signatures calcify. A truly robust system must not merely detect known tactics, but anticipate their evolution – a predictive capacity currently beyond the reach of algorithmic formalism. The focus should shift from identifying ‘what is’ to modelling ‘what could be.’

Ultimately, the value of such systems lies not in replacing human judgment but in easing its burden. The true metric of success will not be precision or recall, but the space afforded for more considered, contextual analysis. Raising the signal-to-noise ratio is a virtue, but silence, after all, is not always golden.

Original article: https://arxiv.org/pdf/2601.15109.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-22 22:44