Author: Denis Avetisyan

A new approach leverages deep learning to directly analyze x86-64 machine code, offering a streamlined path to identifying security flaws.

This review demonstrates that deep learning models can achieve vulnerability detection performance comparable to that of traditional static analysis techniques operating on assembly language.

Despite advances in binary analysis, vulnerability detection often relies on disassembled code, a step that can lose information and adds complexity to the models and the pipeline around them. This paper, ‘Deep Learning-based Binary Analysis for Vulnerability Detection in x86-64 Machine Code’, explores a more direct approach and shows that deep learning models can effectively identify vulnerabilities from raw machine code. The results show that graph-based models outperform sequential ones, highlighting the importance of control flow, and suggest that machine code retains enough information for accurate detection. Could this direct analysis pave the way for simpler, more efficient, and more robust binary security tools?

The Evolving Landscape of Vulnerability Detection

The escalating complexity of modern software presents a significant challenge to traditional vulnerability detection methods. Manual code review demands considerable time and expertise, struggles to keep pace with the volume and intricacy of contemporary codebases, and remains susceptible to human error: a single overlooked flaw can create a serious security risk. Dynamic testing, though valuable, can only reveal vulnerabilities that are actually triggered during execution, so it leaves much of a modern application's vast state space unexplored, including flaws hidden in complex interactions. These techniques are not obsolete, but relied on alone they increasingly allow critical vulnerabilities to remain undetected until they are exploited.

The field is therefore moving toward automated vulnerability detection systems that can analyze large codebases quickly and at scale. These approaches use techniques such as machine learning, static analysis, and fuzzing to identify weaknesses before they can be exploited, shifting the emphasis from reactive patching to prevention. The goal is not only efficiency but a more resilient software ecosystem that can withstand increasingly sophisticated attacks, which in turn demands continued innovation in automated detection methods and scalable analysis platforms.

Deep Learning: A New Paradigm for Binary Analysis

Deep learning approaches to vulnerability detection exploit the capacity of neural networks to identify complex patterns in large datasets of machine code. Traditional signature-based methods require explicit definitions of known vulnerabilities, which makes them ineffective against zero-day exploits and polymorphic malware. Deep learning models, by contrast, are trained on large corpora of vulnerable and non-vulnerable code, which lets them generalize and detect previously unseen instances of a vulnerability based on learned characteristics. Their effectiveness depends heavily on the size and diversity of the training data: larger, more varied datasets generally improve the model's ability to separate vulnerable from safe code and help reduce false positive rates. This data-driven approach automates much of vulnerability discovery and reduces the reliance on manual reverse engineering and penetration testing.

Deep learning models utilize embedding techniques to transform binary programs into numerical vector representations, allowing algorithms to process and analyze machine code effectively. These embeddings capture semantic information by mapping similar code fragments – such as those implementing common cryptographic algorithms or exhibiting vulnerable patterns – to nearby points in a high-dimensional vector space. The process involves training the model on large datasets of binaries, enabling it to learn relationships between code structure, function, and potential security implications. Different embedding strategies exist, including those based on instruction sequences, control flow graphs, and API call sequences, each designed to emphasize specific aspects of program behavior relevant to vulnerability detection. The resulting vector representations facilitate the identification of anomalies and patterns indicative of security flaws without requiring explicit signature matching or complex static analysis.
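To make the idea of an embedding concrete, the following Python sketch maps normalized instruction tokens to vectors, mean-pools them into one vector per code fragment, and compares fragments by cosine similarity. It is an illustration under stated assumptions, not the paper's model: the token vocabulary is hypothetical, and the vectors are random placeholders for the weights a real model would learn during training.

```python
import math
import random


def build_embedding_table(vocab, dim, seed=0):
    # Random vectors stand in for the weights a trained model would learn.
    rng = random.Random(seed)
    return {tok: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for tok in vocab}


def embed_fragment(tokens, table):
    # Look up each token's vector and mean-pool them into one fragment vector.
    vectors = [table[tok] for tok in tokens]
    dim = len(vectors[0])
    return [sum(vec[i] for vec in vectors) / len(vectors) for i in range(dim)]


def cosine_similarity(a, b):
    # Fragments that land close together in the vector space score near 1.0.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Hypothetical vocabulary of normalized instruction tokens.
vocab = ["mov", "add", "cmp", "jl", "call", "ret", "REG", "IMM", "MEM"]
table = build_embedding_table(vocab, dim=8)

frag_a = ["mov", "REG", "IMM", "add", "REG", "REG", "ret"]
frag_b = ["add", "REG", "REG", "mov", "REG", "IMM", "ret"]  # same tokens, reordered
frag_c = ["cmp", "REG", "MEM", "jl", "IMM", "call", "IMM"]

vec_a, vec_b, vec_c = (embed_fragment(f, table) for f in (frag_a, frag_b, frag_c))
print(cosine_similarity(vec_a, vec_b), cosine_similarity(vec_a, vec_c))
```

Because mean pooling ignores order, `frag_a` and `frag_b` receive the same vector (cosine similarity of 1.0 up to floating-point rounding). Instruction order and control structure are exactly the information that sequence- and graph-based encoders try to preserve.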

Binary programs can be presented to deep learning models in several ways, and the choice affects both performance and analytical capability. Instruction sequences, such as those produced by disassembly, treat the program as a linear stream of commands; this simplifies the input but can lose information about control flow. Graph-based control flow representations instead model the program as a directed graph whose nodes are basic blocks and whose edges are control transfers. This explicitly captures program structure and dependencies, which helps when analyzing complex logic, but it requires handling graphs of varying size and raises computational cost. The choice between these representations, and others such as call graphs or data flow graphs, depends on the security task and the characteristics of the target binary.
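The sketch below shows how a linear instruction stream can be turned into a control flow graph: it finds the "leaders" that start basic blocks (the first instruction, every branch target, and every instruction following a branch or return), groups instructions into blocks, and adds jump and fall-through edges. The `(address, mnemonic, target)` tuple format and the small set of mnemonics are simplifying assumptions; a real tool must first decode the bytes and must handle indirect jumps, calls, and many more branch instructions.

```python
CONDITIONAL = {"je", "jne", "jl", "jg"}
UNCONDITIONAL = {"jmp"}
BRANCHES = CONDITIONAL | UNCONDITIONAL
TERMINATORS = {"ret"}


def build_cfg(instructions):
    """Split (address, mnemonic, target) tuples, sorted by address, into basic blocks."""
    # 1. Leaders: first instruction, branch targets, and instructions after a branch/ret.
    leaders = {instructions[0][0]}
    for i, (_, mnem, target) in enumerate(instructions):
        if mnem in BRANCHES:
            leaders.add(target)
        if (mnem in BRANCHES or mnem in TERMINATORS) and i + 1 < len(instructions):
            leaders.add(instructions[i + 1][0])

    # 2. Each block runs from one leader up to (not including) the next leader.
    blocks = {}
    current = None
    for insn in instructions:
        if insn[0] in leaders:
            current = insn[0]
            blocks[current] = []
        blocks[current].append(insn)

    # 3. Edges: jump edge to the target, fall-through edge to the next block in layout order.
    edges = set()
    starts = sorted(blocks)
    for idx, start in enumerate(starts):
        _, last_mnem, last_target = blocks[start][-1]
        if last_mnem in BRANCHES:
            edges.add((start, last_target))
        falls_through = last_mnem not in UNCONDITIONAL and last_mnem not in TERMINATORS
        if falls_through and idx + 1 < len(starts):
            edges.add((start, starts[idx + 1]))
    return blocks, edges


# Toy function: if (x < y) x -= y; else x += y; return x.  Addresses are illustrative.
program = [
    (0x00, "mov", None),
    (0x03, "cmp", None),
    (0x06, "jl", 0x0E),
    (0x08, "add", None),
    (0x0B, "jmp", 0x11),
    (0x0E, "sub", None),
    (0x11, "ret", None),
]
blocks, edges = build_cfg(program)
print(sorted(blocks), sorted(edges))  # [0, 8, 14, 17] [(0, 8), (0, 14), (8, 17), (14, 17)]
```

The result is the familiar diamond shape of an if/else: the entry block branches two ways and both paths rejoin at the return block. A purely sequential view of the same seven instructions does not make this structure explicit.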

Architectures in Action: Graph and Sequential Approaches

Bin2Vec uses Graph Convolutional Networks (GCNs) to generate vector embeddings of binary programs. These embeddings are derived from the program’s control flow graph (CFG), in which nodes are basic blocks and edges denote possible control transfers. A GCN operates directly on this graph, repeatedly aggregating feature information from each node's neighbors to learn a context-aware representation of every node. The embedding for the whole program is then typically obtained by pooling these node embeddings. This lets Bin2Vec capture structural relationships in the code, such as function calls and control dependencies, without symbolic execution. Recovering the CFG from a binary still requires decoding instructions to locate branch targets, but the GCN itself consumes only the resulting graph and its node features.
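The core GCN operation fits in a few lines. The sketch below uses the widely used normalized propagation rule of Kipf and Welling, H' = ReLU(Â H W) with Â = D^(-1/2) (A + I) D^(-1/2), where D is the degree matrix of A + I, followed by mean pooling to obtain one vector per program. Several details are assumptions for illustration, not Bin2Vec's published configuration: the CFG edges are treated as undirected, the two node features per basic block (instruction count and a "contains a call" flag) are hypothetical, and the weight matrix is the identity so the result can be checked by hand.

```python
import math


def matmul(a, b):
    # Plain-Python matrix product of an (n x k) and a (k x m) list-of-lists.
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(num_nodes, edges):
    # A + I with CFG edges symmetrized, then scaled as D^-1/2 (A + I) D^-1/2.
    a = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for src, dst in edges:
        a[src][dst] = a[dst][src] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_nodes)]
            for i in range(num_nodes)]


def gcn_layer(a_hat, h, w):
    # Each basic block aggregates its neighbours' features, then applies W and ReLU.
    return [[max(0.0, x) for x in row] for row in matmul(matmul(a_hat, h), w)]


def mean_pool(h):
    # Readout: average the node embeddings into one program-level vector.
    return [sum(row[j] for row in h) / len(h) for j in range(len(h[0]))]


# Diamond-shaped CFG: block 0 branches to 1 or 2, and both rejoin at block 3.
edges = [(0, 1), (0, 2), (1, 3), (2, 3)]
features = [[3.0, 0.0], [2.0, 0.0], [1.0, 1.0], [1.0, 0.0]]  # [instructions, has_call]
identity = [[1.0, 0.0], [0.0, 1.0]]

a_hat = normalized_adjacency(4, edges)
node_embeddings = gcn_layer(a_hat, features, identity)
graph_vector = mean_pool(node_embeddings)
print(graph_vector)
```

With these inputs every node has degree 3, so each block's new features are the average over itself and its two neighbours, and the program vector comes out at approximately [1.75, 0.25]. A trained model would learn W and stack several layers so that each embedding reflects a wider neighbourhood of the graph.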

Asm2Vec applies deep learning, specifically recurrent neural networks (RNNs) and transformers, to assembly code treated as sequential data. The instructions are processed as an ordered stream, so the model can learn patterns and dependencies that depend on the order in which instructions appear. The input consists of assembly instructions, usually converted to numerical tokens in a preprocessing step. By processing this sequence, Asm2Vec aims to capture a program's behavior as expressed through its linear instruction flow, supporting tasks such as malware detection and vulnerability analysis based on observed code patterns.
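A sequential encoder consumes the token stream one step at a time and carries a hidden state forward. The sketch below is a minimal Elman-style recurrent cell, h_t = tanh(W_x x_t + W_h h_(t-1) + b), written in plain Python. It illustrates the general recurrent technique, not Asm2Vec's actual architecture; the random weights are placeholders for trained ones, and the token ids are assumed to come from an earlier tokenization step.

```python
import math
import random


def init_rnn(vocab_size, hidden, seed=0):
    # Random placeholder weights; a real model learns these by backpropagation.
    rng = random.Random(seed)

    def rand_matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "embed": rand_matrix(vocab_size, hidden),  # token id -> input vector x_t
        "w_x": rand_matrix(hidden, hidden),
        "w_h": rand_matrix(hidden, hidden),
        "b": [0.0] * hidden,
    }


def rnn_encode(token_ids, params):
    # Fold the sequence into a final hidden state; order matters at every step.
    size = len(params["b"])
    h = [0.0] * size
    for tok in token_ids:
        x = params["embed"][tok]
        h = [
            math.tanh(
                sum(params["w_x"][i][j] * x[j] for j in range(size))
                + sum(params["w_h"][i][j] * h[j] for j in range(size))
                + params["b"][i]
            )
            for i in range(size)
        ]
    return h


params = init_rnn(vocab_size=10, hidden=4)
sequence = [2, 3, 4, 5, 3, 6]  # hypothetical token ids for one function
h_forward = rnn_encode(sequence, params)
h_reversed = rnn_encode(sequence[::-1], params)
print(h_forward, h_reversed)
```

Unlike an order-insensitive average of token vectors, reversing the sequence changes the final state. That sensitivity to instruction order is what lets sequential models pick up on patterns such as a comparison followed by a specific branch.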

Bin2Vec and Asm2Vec can both be used for vulnerability detection, but they analyze programs on fundamentally different principles. Bin2Vec applies graph convolutional networks to the control flow graph (CFG) of a binary, emphasizing vulnerabilities that arise from structural relationships and dependencies within the code. Asm2Vec applies deep learning to sequential assembly code, emphasizing patterns and anomalies in the linear instruction stream. Bin2Vec may therefore be better suited to vulnerabilities that depend on how different code blocks interact, while Asm2Vec may be better at flagging issues tied to specific instruction sequences. The choice between the two depends on the type of vulnerability being targeted and the characteristics of the program under analysis.

Tokenization, in the context of deep learning for binary analysis, breaks raw assembly code into discrete units, or tokens. This preprocessing step is essential because deep learning models operate on numerical data; tokenization converts symbolic assembly instructions into a numerical representation. Common strategies represent each instruction mnemonic and operand as an integer index within a predefined vocabulary; immediate values and addresses are often normalized to a shared placeholder first, because giving every distinct constant its own index would make the vocabulary very large. The resulting sequence of integers then serves as input to models such as Recurrent Neural Networks (RNNs) or Transformers, which learn patterns and relationships within the program’s instruction stream. Tokenization choices strongly affect model performance: good tokenization reduces the complexity of the input and helps the model generalize across code samples.
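A minimal tokenizer along these lines is sketched below. It assumes Intel-syntax disassembly text as input (the paper's own preprocessing is not described here), keeps mnemonics and register names as tokens, replaces immediates and addresses with an `IMM` placeholder and memory operands with `MEM`, and maps tokens to integer ids with reserved `<pad>` and `<unk>` entries. The function names are hypothetical.

```python
import re

NUMBER = re.compile(r"^-?(0x[0-9a-f]+|\d+)$")


def tokenize_instruction(line):
    # "mov eax, 0x10" -> ["mov", "eax", "IMM"]
    mnemonic, _, operand_text = line.strip().lower().partition(" ")
    tokens = [mnemonic]
    for operand in operand_text.split(","):
        operand = operand.strip()
        if not operand:
            continue
        if NUMBER.match(operand):
            tokens.append("IMM")    # immediates and absolute addresses
        elif "[" in operand:
            tokens.append("MEM")    # memory operands such as dword ptr [rbp-0x8]
        else:
            tokens.append(operand)  # register names are kept as-is
    return tokens


def build_vocab(token_lists):
    # Ids 0 and 1 are reserved for padding and out-of-vocabulary tokens.
    vocab = {"<pad>": 0, "<unk>": 1}
    for tokens in token_lists:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens, vocab):
    # Map tokens to ids, falling back to <unk> for anything unseen.
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokens]


listing = ["mov eax, 0x10", "add eax, dword ptr [rbp-0x8]", "cmp eax, 100", "jl 0x401020"]
token_lists = [tokenize_instruction(line) for line in listing]
vocab = build_vocab(token_lists)
print(token_lists)
print(encode(["xor", "eax", "eax"], vocab))  # [1, 3, 3]: "xor" is unseen, "eax" has id 3
```

Collapsing constants keeps the vocabulary small, at the cost of discarding values (such as buffer sizes) that can matter for some vulnerability classes; this is one of the trade-offs the paragraph alludes to.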

Detecting Vulnerability Patterns with Deep Learning

Recent work shows that deep learning models can pinpoint prevalent software vulnerabilities directly from machine code. Trained on datasets such as FormAI-v2, these models can identify critical error types, including integer overflows, where a calculation exceeds the range of its data type; null pointer dereferences, where a program accesses memory through a null pointer; and array bound violations, where a program accesses data outside the defined limits of an array. Automated detection of this kind offers a promising way to improve software security by recognizing these weaknesses early in the development process, potentially reducing the risk of exploitation and improving overall system resilience.
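Integer overflow is easiest to see in the arithmetic itself. The Python sketch below emulates the wrap-around of 32-bit register arithmetic on x86-64, both signed (two's-complement) addition and unsigned multiplication, and shows how an overflowed size calculation can produce a far smaller allocation size than intended. It illustrates the vulnerability class only; the helper names are hypothetical and are not part of FormAI-v2 or the paper's tooling.

```python
MASK32 = 0xFFFFFFFF


def add_int32(a, b):
    # Keep the low 32 bits, then reinterpret bit 31 as the sign (two's complement).
    result = (a + b) & MASK32
    return result - (1 << 32) if result & (1 << 31) else result


def mul_uint32(a, b):
    # Unsigned 32-bit multiply: bits above 32 are silently discarded.
    return (a * b) & MASK32


def overflows_int32(a, b):
    # True when the mathematically exact sum does not fit in a signed 32-bit int.
    return not (-(1 << 31) <= a + b <= (1 << 31) - 1)


print(add_int32(2_147_483_647, 1))        # INT32_MAX + 1 wraps to -2147483648
print(overflows_int32(2_147_483_647, 1))  # True

# Classic allocation bug: element_count * 4 wraps, so the buffer is tiny.
element_count = 0x4000_0001
print(mul_uint32(element_count, 4))       # 4 bytes instead of about 4 GiB
```

The last case shows why integer overflows often lead to memory-safety bugs: a buffer sized with the wrapped value is then written with `element_count` elements, overrunning its bounds.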

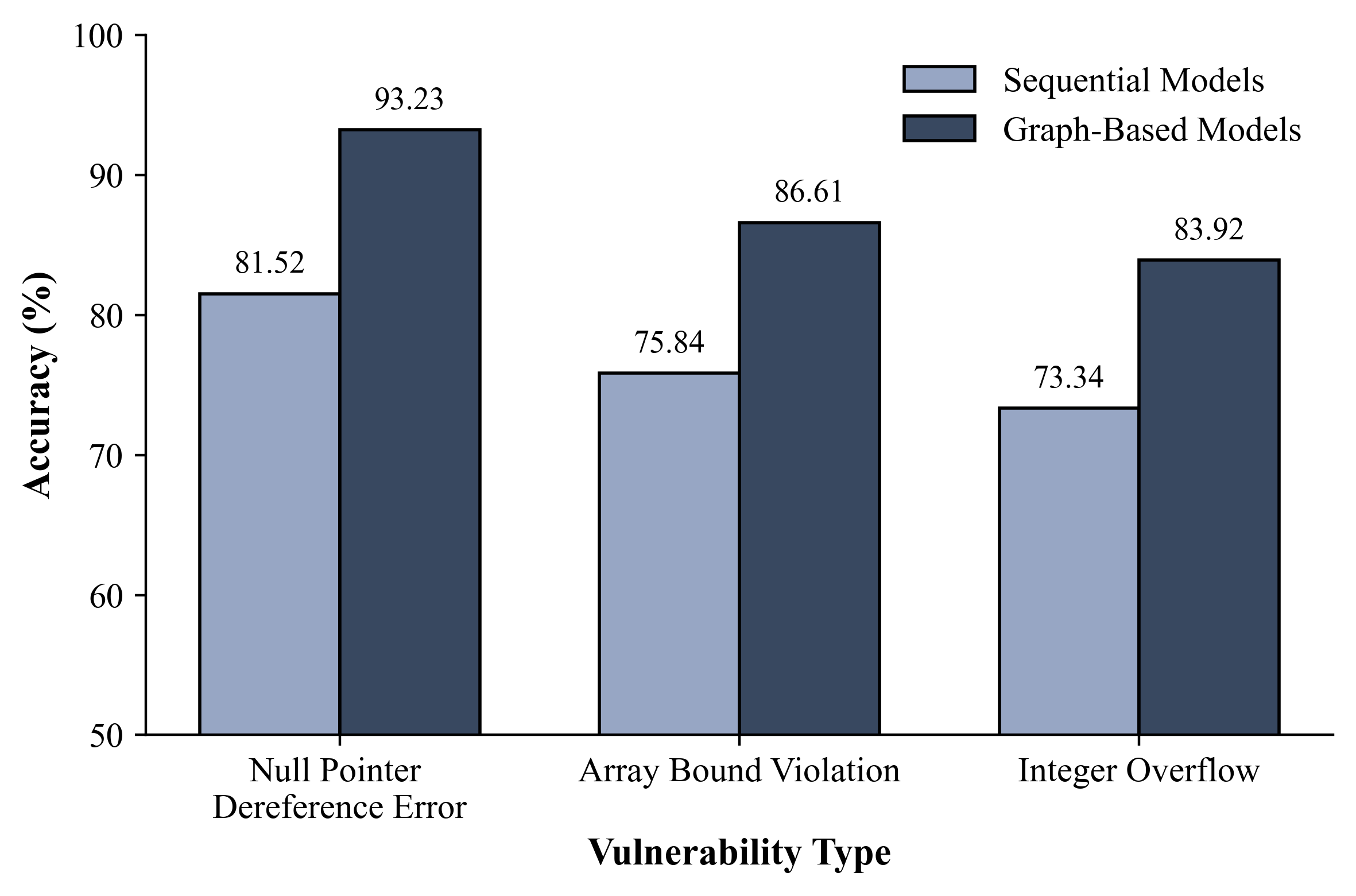

The study's results indicate that deep learning models reach accuracy between 80 and 90 percent in identifying a range of Common Weakness Enumerations (CWEs). This is notable because it rivals established vulnerability detection techniques that depend on detailed analysis of assembly language. Comparable accuracy suggests that deep learning is a promising alternative that could automate parts of code review that previously required significant manual effort and specialized expertise. By learning patterns directly from machine code, these models pinpoint vulnerabilities with effectiveness approaching that of traditional, more complex analytical methods.
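A headline figure such as "80 to 90 percent" can refer to different metrics, and precision and accuracy can diverge sharply. The sketch below computes the standard metrics from a detector's confusion counts. The counts are invented for illustration and are not results from the paper; they show how, when vulnerable samples are rare, accuracy can sit well above precision and recall for the same detector.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)            # flagged samples that are truly vulnerable
    recall = tp / (tp + fn)               # vulnerable samples that were flagged
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "accuracy": accuracy, "f1": f1}


# Hypothetical test set: 100 vulnerable and 900 safe functions.
metrics = classification_metrics(tp=80, fp=20, tn=880, fn=20)
print(metrics)
```

Here precision and recall are both 0.8 while accuracy is 0.96, which is why a reported score is only interpretable alongside the metric it refers to and the class balance of the test set.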

Performance varied across vulnerability types. The models were highly accurate at identifying null pointer dereferences, likely because memory access violations leave distinct patterns in the code, whereas integer overflows proved harder to detect. This suggests that approaches relying mainly on static code features may not capture the complex data dependencies that lead to integer overflow errors. Further research on data flow analysis, which tracks how values are used and transformed throughout a program, is therefore crucial for improving detection of this particularly insidious vulnerability and for making deep learning-based vulnerability detection more robust.
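To illustrate what tracking data flow means here, the sketch below runs a forward taint analysis over a toy register-level program. Values derived from untrusted input are marked tainted, tainted values that pass through arithmetic are tracked separately, and an allocation whose size argument carries such a value is reported as a possible integer-overflow site. The three-field instruction format and the `call_malloc` pseudo-instruction are hypothetical simplifications; a real analysis of x86-64 code must also model memory, flags, partial registers, and the register effects of calls. The size argument is read from `rdi`, the first integer-argument register in the System V x86-64 calling convention.

```python
ARITHMETIC = {"add", "imul", "shl"}


def find_overflow_candidates(program, input_regs):
    """Return indices of allocation calls whose size depends on input-derived arithmetic."""
    tainted = set(input_regs)  # registers holding values derived from untrusted input
    arith = set()              # tainted registers whose value passed through arithmetic
    findings = []
    for idx, (op, dest, srcs) in enumerate(program):
        if op == "call_malloc":
            # System V x86-64: the first integer argument (the size) is passed in rdi.
            if "rdi" in arith:
                findings.append(idx)
            continue  # simplification: register clobbering by the call is ignored
        src_tainted = any(s in tainted for s in srcs)
        src_arith = any(s in arith for s in srcs)
        # Taint and arithmetic status flow from the sources into the destination.
        if src_tainted:
            tainted.add(dest)
        else:
            tainted.discard(dest)
        if src_tainted and (op in ARITHMETIC or src_arith):
            arith.add(dest)
        else:
            arith.discard(dest)
    return findings


# (operation, destination register, source registers); immediates are omitted.
program = [
    ("mov", "rax", ["rdi"]),    # rax = count, taken from untrusted input in rdi
    ("imul", "rax", ["rax"]),   # rax = count * 4
    ("mov", "rdi", ["rax"]),    # size argument for the allocation
    ("call_malloc", None, []),  # flagged: size derives from input-driven arithmetic
    ("mov", "rdi", []),         # rdi = constant size
    ("call_malloc", None, []),  # not flagged
]
print(find_overflow_candidates(program, input_regs={"rdi"}))  # [3]
```

The point of the example is that the dangerous property is not visible in any single instruction: it emerges only by following the value from its source, through the multiplication, into the allocation call.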

Deep learning models can also analyze machine code directly, without the complex semantic representations traditionally used in vulnerability detection. Working on raw x86-64 instructions, they achieve accuracy consistently between 80 and 90 percent across the CWEs studied, which is competitive with methods that rely on detailed assembly language analysis. This direct approach simplifies the analysis pipeline and could enable faster, more scalable vulnerability discovery. It also suggests that patterns indicative of flaws are, to a significant degree, discernible in the low-level instruction sequences themselves rather than requiring higher-level contextual understanding. Skipping intermediate semantic representations is a notable advance that streamlines the process and opens new avenues for automated code analysis.
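One common way to feed raw machine code to a model without a disassembler is to treat each byte as a token, giving a vocabulary of 256 byte values plus a few special ids. The sketch below assumes that byte-level encoding, a fixed input length, and a single padding id of 256; the paper's exact input format may differ, and the function name is hypothetical. The example bytes are a standard x86-64 function prologue and epilogue.

```python
PAD_ID = 256  # byte values occupy ids 0-255; id 256 is reserved for padding


def bytes_to_token_ids(code, max_len):
    # Truncate to max_len bytes, then right-pad so every sample has the same length.
    ids = list(code[:max_len])
    return ids + [PAD_ID] * (max_len - len(ids))


# push rbp; mov rbp, rsp; pop rbp; ret
function_bytes = bytes.fromhex("554889e55dc3")
print(bytes_to_token_ids(function_bytes, max_len=8))  # [85, 72, 137, 229, 93, 195, 256, 256]
```

Because x86-64 instructions vary in length (from 1 to 15 bytes), this encoding does not mark instruction boundaries; the model has to infer them from the byte patterns.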

The pursuit of vulnerability detection, as demonstrated in this research, echoes a fundamental principle of system design: structure dictates behavior. Detecting flaws directly from machine code, without assembly-level analysis, is a move toward simpler, more elegant solutions, in line with a philosophy that prioritizes clarity and minimal complexity. As Brian Kernighan put it, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” The remark highlights the value of straightforward, understandable systems, much like the approach of applying deep learning directly to machine code: a system whose behavior is readily observable and therefore more easily secured.

What Lies Ahead?

The demonstration that deep learning can operate effectively on raw machine code, rather than requiring translation to assembly, feels less like a breakthrough and more like a return to first principles. The field has, for a time, complicated matters with layers of abstraction. But the real question persists: what are systems actually optimizing for? Performance gains in vulnerability detection are easily quantified, yet the underlying goal isn’t simply to flag more flaws, but to reduce actual exploitable attack surfaces. The current work suggests a path toward streamlining binary analysis, but it does not, in itself, define what constitutes a ‘better’ analysis.

Simplicity is not minimalism; it is the discipline of distinguishing the essential from the accidental. The success of these models invites further inquiry into which features of machine code truly signal vulnerability. Are these models learning to identify patterns analogous to those a skilled reverse engineer would recognize, or are they exploiting statistical correlations that happen to coincide with flaws? Understanding this distinction is crucial.

Future research should move beyond simply achieving high accuracy on benchmark datasets. The focus needs to shift toward robustness, specifically the ability to generalize to unseen code and to resist adversarial examples crafted to evade detection. Moreover, integrating these techniques with formal methods, which aim to prove the absence of specific classes of vulnerabilities, remains a significant, and perhaps necessary, challenge.

Original article: https://arxiv.org/pdf/2601.09157.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-15 15:44