Author: Denis Avetisyan

New research demonstrates how independent AI agents can coordinate effectively in local energy grids, achieving performance comparable to centralized control systems.

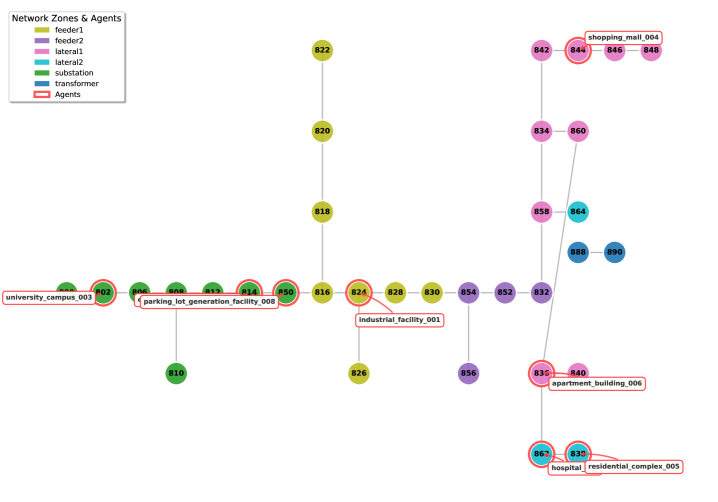

A multi-agent reinforcement learning framework, leveraging stigmergic signaling, enables implicit cooperation and near-optimal operation in decentralized local energy markets using the IEEE 34-bus topology.

Achieving optimal coordination in complex systems often necessitates centralized control, yet centralization introduces vulnerabilities and privacy concerns. ‘Harnessing Implicit Cooperation: A Multi-Agent Reinforcement Learning Approach Towards Decentralized Local Energy Markets’ addresses this tension by proposing a framework for decentralized energy markets in which agents approximate optimal coordination without explicit communication. Using multi-agent reinforcement learning with stigmergic signaling, the research demonstrates near-optimal performance, achieving 91.7% of a centralized benchmark, while also improving grid stability and reducing variance by 31%. Could this approach unlock a new paradigm for robust, privacy-preserving decentralized control in other complex infrastructure networks?

The Looming Challenge of Coordination in a Distributed World

The contemporary power grid is undergoing a significant transformation, shifting from a model of large, centralized power plants to one increasingly populated by distributed energy resources (DERs). These DERs – encompassing solar photovoltaic systems, wind turbines, battery storage, and even controllable appliances – are proliferating at both residential and commercial scales. While this decentralization promises greater resilience and access to renewable energy, it simultaneously introduces formidable coordination challenges. Managing the intermittent output of renewable sources, ensuring grid stability with fluctuating supply and demand, and optimizing energy flow across a vastly more complex network require sophisticated control mechanisms. The sheer number of DERs, each operating independently yet interconnected within the grid, creates a combinatorial explosion of possible states, making traditional, centralized control approaches increasingly inadequate and necessitating innovative solutions for real-time monitoring, communication, and intelligent control.

Conventional grid management relies on centralized systems, in which large control centers make decisions and dispatch power, but these architectures are increasingly strained by the influx of distributed energy resources. The volume of data from millions of solar panels, wind turbines, and energy storage units overwhelms these systems, creating bottlenecks and hindering real-time responsiveness. Scaling centralized approaches to accommodate further growth in distributed generation is also costly and technically challenging. A single point of control struggles to process the dynamic, localized changes occurring across the grid, which diminishes the potential benefits of a more decentralized and resilient energy future. This inflexibility creates a need for solutions that can harness distributed resources while maintaining grid stability and reliability.

Grid stability hinges on the seamless integration of increasingly diverse energy sources, and effective coordination mechanisms are paramount to achieving this. Renewable energy, while vital for a sustainable future, introduces inherent variability – sunlight fluctuates, wind speeds change – demanding a responsive and adaptive grid. Without precise coordination, these fluctuations can cascade into imbalances, potentially leading to blackouts or damaging infrastructure. Advanced coordination strategies, encompassing real-time data analytics, predictive modeling, and automated control systems, are therefore not merely beneficial, but essential for unlocking the full potential of renewable energy and ensuring a reliable power supply. These systems must intelligently balance supply and demand, manage energy storage, and optimize resource allocation across a distributed network, effectively transforming the grid from a static, centralized entity into a dynamic, resilient ecosystem.

Navigating Complexity: Multi-Agent Learning as a Solution

Multi-Agent Reinforcement Learning (MARL) addresses coordination challenges in systems composed of multiple autonomous agents operating without a central controller. Traditional reinforcement learning (RL) methods are designed for single-agent scenarios and often fail when applied to multi-agent systems: because every other agent is also learning, the environment looks non-stationary from any one agent's perspective. MARL algorithms let each agent learn a policy by interacting with an environment that includes the other agents, which can give rise to emergent cooperative behaviors. Common techniques include independent learners, centralized training with decentralized execution, and communication protocols that let agents share information and coordinate their actions. Because control stays decentralized, MARL is particularly well-suited to complex systems where centralized control is impractical or undesirable.
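To make the non-stationarity point concrete, here is a minimal sketch of independent, stateless Q-learners in a two-action coordination game. The payoff matrix, learning rate, exploration rate, and function name are illustrative assumptions, not values or code from the paper; each agent keeps a private Q-table and simply treats the other agent as part of its environment.

```python
import random

def train_independent_learners(payoff, episodes=5000, alpha=0.1, epsilon=0.1, seed=0):
    """Two independent, stateless Q-learners in a repeated matrix game (illustrative).

    payoff[a0][a1] is the shared reward when agent 0 plays a0 and agent 1 plays a1.
    """
    rng = random.Random(seed)
    n_actions = len(payoff)
    q = [[0.0] * n_actions for _ in range(2)]  # one private Q-table per agent
    for _ in range(episodes):
        actions = []
        for q_i in q:
            if rng.random() < epsilon:          # explore
                actions.append(rng.randrange(n_actions))
            else:                               # exploit own estimates only
                actions.append(max(range(n_actions), key=q_i.__getitem__))
        reward = payoff[actions[0]][actions[1]]
        for q_i, a in zip(q, actions):
            # Each agent sees only its own action and the reward. The other agent's
            # changing policy is hidden inside the "environment", which is what
            # makes the learning problem non-stationary.
            q_i[a] += alpha * (reward - q_i[a])
    return q

q = train_independent_learners([[10.0, 0.0], [0.0, 5.0]])
greedy = [row.index(max(row)) for row in q]
```

In runs like this the two agents typically settle on the same action, but nothing in the independent update guarantees that they find the better equilibrium; that gap is one motivation for the more structured training schemes discussed next.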

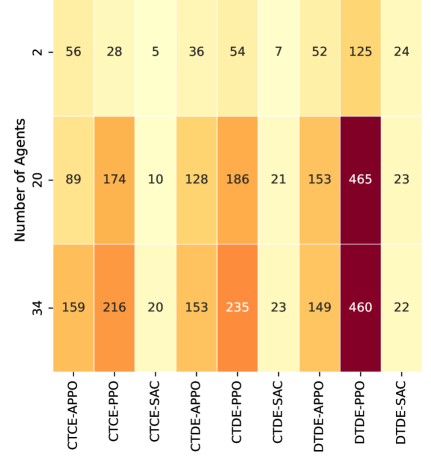

Two primary training paradigms are utilized in multi-agent reinforcement learning: centralized training with decentralized execution (CTDE) and decentralized training with decentralized execution (DTDE). CTDE involves training agents using a centralized controller with access to global state information, after which each agent independently executes the learned policy based on its local observations. In contrast, DTDE trains each agent independently, utilizing only locally observed information throughout both the training and execution phases. This distinction impacts computational complexity, communication requirements, and the ability to address non-stationarity inherent in multi-agent systems.
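A small sketch can make the CTDE/DTDE distinction concrete, assuming an actor-critic setup in which a critic is used only during training. The `Step` container, the feature layout, and the agent ids (`bus1`, `bus2`) are hypothetical; the paper's actual network inputs are not described here.

```python
from dataclasses import dataclass

@dataclass
class Step:
    obs: dict[str, list[float]]   # agent id -> local observation (hypothetical layout)
    actions: dict[str, float]     # agent id -> action taken

def critic_input(step: Step, agent_id: str, paradigm: str) -> list[float]:
    """Features a critic may use during *training* under each paradigm."""
    if paradigm == "CTDE":
        ids = sorted(step.obs)  # fixed ordering keeps the global vector consistent
        global_obs = [x for i in ids for x in step.obs[i]]
        joint_actions = [step.actions[i] for i in ids]
        return global_obs + joint_actions       # global view, training only
    if paradigm == "DTDE":
        return list(step.obs[agent_id]) + [step.actions[agent_id]]  # local view only
    raise ValueError(f"unknown paradigm: {paradigm}")

def actor_input(step: Step, agent_id: str) -> list[float]:
    """Execution is decentralized in both paradigms: the policy sees local data only."""
    return list(step.obs[agent_id])
```

The only difference between the two paradigms in this sketch is what the training-time critic may see; at execution time both policies consume the same local observation.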

Decentralized Training with Decentralized Execution (DTDE) is particularly well-suited to power grid coordination because it matches the grid’s inherently distributed architecture. Unlike approaches that require a central controller for training or operation, DTDE allows each grid agent (representing elements such as substations, distributed generators, or controllable loads) to learn and act independently, using only its local observations, including shared grid signals, rather than direct messages from other agents. This enhances robustness by eliminating single points of failure and improves scalability, since computational demands are dispersed across the network rather than concentrated in one location. The resulting policies can be executed directly in the decentralized grid without additional coordination infrastructure or communication overhead, which simplifies deployment and reduces latency.

Refining the System: Enhancing Learning Stability and Efficiency

APPO (Asynchronous Proximal Policy Optimization) is an actor-learner variant of PPO designed for high-throughput training, which the paper applies to multi-agent reinforcement learning. Actors interact with the environment in parallel and stream their experience to a central learner, which updates the policy; this increases data throughput and speeds up convergence compared with synchronous, single-process training. Because many actors contribute experience, each update draws on a broader mix of samples, reducing the correlation between consecutive experiences. Like PPO, APPO uses a clipped surrogate objective to keep each policy update close to the previous policy, preventing drastic changes that can destabilize learning in complex multi-agent systems.
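The clipped surrogate objective mentioned above can be written in a few lines. This is a minimal sketch of the standard PPO-style form, assuming per-sample probability ratios and advantage estimates are already available; APPO implementations typically pair this kind of objective with V-trace targets, and whether the paper uses exactly this variant is an assumption.

```python
def clipped_surrogate(ratios, advantages, clip_eps=0.2):
    """Mean PPO-style clipped surrogate objective (to be maximized).

    ratios[i]     = pi_new(a_i | s_i) / pi_old(a_i | s_i)
    advantages[i] = advantage estimate for sample i
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms,
        # so moving the ratio outside [1 - eps, 1 + eps] cannot increase the objective.
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)

clipped_surrogate([1.5, 0.5], [2.0, -1.0])
```

For the call above, the first sample's gain is capped at 1.2 × 2.0 and the second sample keeps the more pessimistic clipped term, giving a mean of about 0.8. In a real learner, the gradient of this quantity with respect to the policy parameters is what drives the update.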

V-Trace is an off-policy correction, introduced with the IMPALA actor-learner architecture, that handles policy lag in asynchronous training such as APPO. Policy lag occurs when the learner trains on experience generated by an older version of the policy, creating a mismatch between the data and the policy being optimized. V-Trace corrects for this by weighting each step with the importance ratio between the target policy (the policy being updated) and the behavior policy (the policy that generated the data), truncating the ratios at fixed thresholds so that stale or unlikely actions cannot dominate the update. This lets the learner use slightly outdated experience without the high variance of untruncated importance sampling, improving the stability and efficiency of learning in complex multi-agent systems.
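The truncated-importance-weight idea can be shown with a short sketch of the V-trace target computation for a single trajectory, using the standard backward recursion. Variable names and default thresholds are illustrative; a production learner would vectorize this over batches.

```python
import math

def vtrace_targets(rewards, values, bootstrap_value, log_rhos,
                   gamma=0.99, rho_bar=1.0, c_bar=1.0):
    """V-trace value targets v_s for one trajectory.

    rewards[t], values[t] = V(x_t) under the current critic
    bootstrap_value       = V(x_T) for the state after the last step
    log_rhos[t]           = log pi(a_t|x_t) - log mu(a_t|x_t)  (target vs behaviour policy)
    """
    rhos = [math.exp(lr) for lr in log_rhos]
    clipped_rhos = [min(rho_bar, r) for r in rhos]  # truncated importance weights
    cs = [min(c_bar, r) for r in rhos]              # trace-cutting coefficients
    next_values = list(values[1:]) + [bootstrap_value]
    deltas = [rho * (r + gamma * v_next - v)
              for rho, r, v_next, v in zip(clipped_rhos, rewards, next_values, values)]
    # Backward recursion: v_s - V(x_s) = delta_s + gamma * c_s * (v_{s+1} - V(x_{s+1}))
    corrections = [0.0] * len(rewards)
    acc = 0.0
    for t in reversed(range(len(rewards))):
        acc = deltas[t] + gamma * cs[t] * acc
        corrections[t] = acc
    return [v + c for v, c in zip(values, corrections)]
```

When the behaviour and target policies coincide (all log-ratios zero) and the thresholds are at least 1, the targets reduce to ordinary n-step bootstrapped returns; very large ratios from stale data are simply capped, which is what keeps the variance in check.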

Population-Based Training (PBT) is a hyperparameter optimization technique that the framework uses to improve agent performance and robustness. Unlike manual tuning or grid search, PBT trains a population of agents in parallel, each with slightly different hyperparameters. Periodically, poorly performing members are replaced by copies of better-performing ones (exploit), and the copied hyperparameters are then randomly perturbed (explore). The population thus adapts its hyperparameters during training rather than fixing them in advance, which can make learning more effective and more stable across diverse environmental conditions. In effect, PBT automates hyperparameter tuning and reduces the need for extensive manual experimentation.
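The exploit/explore cycle can be sketched as a single function over a population. The member layout (`score`, `weights`, `hparams`), the replacement fraction, and the perturbation factors are hypothetical choices for illustration, not the paper's configuration.

```python
import copy
import random

def pbt_exploit_explore(population, frac=0.25, factors=(0.8, 1.2), rng=None):
    """One exploit/explore round over a population of training members.

    Each member is a dict with "score", "weights" and "hparams" keys (hypothetical).
    Keep frac <= 0.5 so the top and bottom groups do not overlap.
    """
    rng = rng or random.Random(0)
    ranked = sorted(population, key=lambda m: m["score"], reverse=True)
    n = max(1, int(len(ranked) * frac))
    top, bottom = ranked[:n], ranked[-n:]
    for member in bottom:
        donor = rng.choice(top)
        # Exploit: inherit the better member's learned parameters...
        member["weights"] = copy.deepcopy(donor["weights"])
        # ...explore: perturb each inherited hyperparameter by a random factor.
        member["hparams"] = {k: v * rng.choice(factors) for k, v in donor["hparams"].items()}
    return population

pop = [{"score": s, "weights": [s], "hparams": {"lr": s / 10}} for s in (1.0, 2.0, 3.0, 4.0)]
pbt_exploit_explore(pop)
```

After this round the worst member (score 1.0) carries the best member's weights and a learning rate of 0.4 scaled by 0.8 or 1.2, while the top performers are left untouched.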

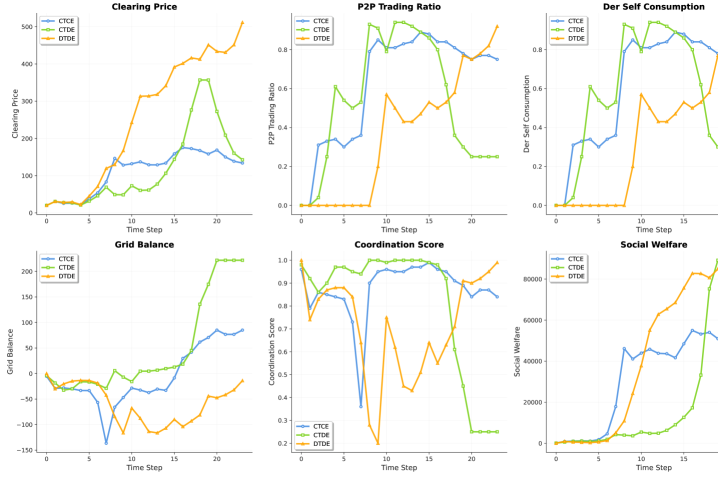

Implementing Decentralized Training with Decentralized Execution (DTDE) with the Asynchronous Proximal Policy Optimization (APPO) algorithm, further refined through V-Trace and Population-Based Training (PBT), yields substantial improvements in multi-agent reinforcement learning performance within a simulated grid environment. Specifically, this combined approach achieves 91.7% of the coordination quality attainable by a centralized benchmark, indicating efficient and stable decentralized execution. The result shows that the integrated system can approximate optimal collaborative behavior without relying on a central coordinating agent.

Towards Resilience: The Promise of Optimized Grid Operation

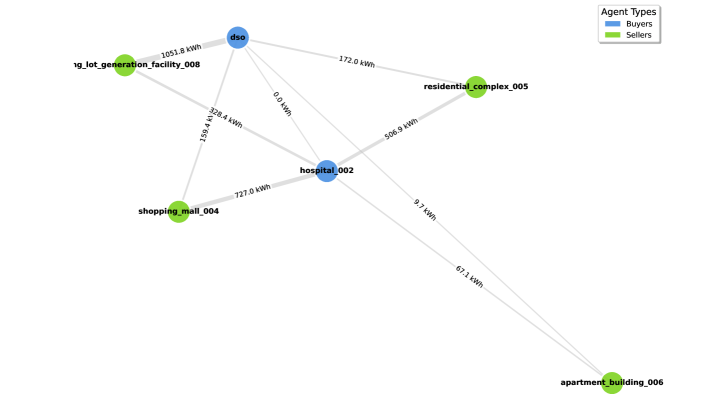

Robust coordination within a decentralized energy grid isn’t necessarily achieved through explicit communication, but rather through implicit cooperation fostered by what are known as stigmergic Key Performance Indicators (KPIs). This principle, inspired by the behavior of social insects like ants, involves agents responding to modifications in their environment – in this case, changes in grid conditions signaled by KPIs – without direct instruction from a central authority. These signals act as a form of indirect communication, allowing agents to self-organize and adjust their behavior to maintain grid stability. The system effectively leverages the collective intelligence of numerous independent entities, each reacting to the same environmental cues, which leads to emergent, coordinated behavior and a resilient power network capable of adapting to fluctuating supply and demand.
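The stigmergic idea can be illustrated with a hand-written feedback rule; this is an assumption for illustration, not the learned policy from the paper. A single KPI, here the net imbalance in kWh, is published to the shared environment, and each battery-like agent nudges its own setpoint in response. No agent ever messages another. The function name, step size, and capacities are hypothetical.

```python
def stigmergic_rounds(base_imbalance, capacities, step=0.1, rounds=30):
    """Agents react only to a shared imbalance KPI (illustrative rule).

    base_imbalance: demand minus supply in kWh before agents act (>0 means deficit)
    capacities:     max charge/discharge per agent in kWh (hypothetical)
    """
    setpoints = [0.0] * len(capacities)  # >0 discharge (adds supply), <0 charge
    history = []
    for _ in range(rounds):
        # The published KPI is the only information any agent receives.
        kpi = base_imbalance - sum(setpoints)
        history.append(kpi)
        # Each agent independently adjusts toward closing the gap, within its limits.
        setpoints = [max(-cap, min(cap, sp + step * kpi))
                     for sp, cap in zip(setpoints, capacities)]
    final = base_imbalance - sum(setpoints)
    return setpoints, history, final

setpoints, history, final = stigmergic_rounds(20.0, [10.0] * 4)
```

With four agents the imbalance shrinks by a factor of (1 − 4 × 0.1) each round, so the 20 kWh deficit is essentially closed and the load is shared evenly. The same arithmetic shows the fragility of a fixed rule: once the number of agents times the step size exceeds 2, the agents overreact and the loop diverges, which is one reason learned, adaptive responses are attractive.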

Maintaining a stable power grid requires constant equilibrium between electricity supply and demand, and this system pursues that balance through coordinated agent behavior. The quality of the balance is measured by the Grid Balance Index, which reflects how consistently the grid maintains power flow. In simulation, the decentralized, stigmergic approach improves this index substantially and consistently keeps deviations from supply-demand balance small. This is not merely about preventing blackouts, but about creating a resilient grid that can absorb fluctuations from renewable energy sources and varying consumer needs, ensuring a reliable and efficient energy supply for all users.
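The article does not define the Grid Balance Index, so the following is only a hypothetical stand-in showing how per-interval balance is commonly summarized: the net surplus or deficit in each interval, its average, its typical magnitude, and its spread (the kind of quantity behind the ±kWh figures reported for the IEEE 34-bus experiments).

```python
from statistics import fmean, pstdev

def balance_stats(supply_kwh, demand_kwh):
    """Summarize per-interval net balance (supply minus demand), in kWh.

    Hypothetical stand-in; the paper's Grid Balance Index may be defined differently.
    """
    if len(supply_kwh) != len(demand_kwh):
        raise ValueError("series must have equal length")
    net = [s - d for s, d in zip(supply_kwh, demand_kwh)]
    return {
        "mean_net": fmean(net),                 # systematic surplus (+) or deficit (-)
        "mean_abs": fmean(abs(x) for x in net),  # typical size of the mismatch
        "std": pstdev(net),                      # volatility, as in a "±X kWh" figure
    }

balance_stats([110, 90, 110, 90], [100, 100, 100, 100])
```

The example alternates a 10 kWh surplus and deficit: the mean imbalance is zero, yet the spread is 10 kWh, which is why a volatility measure, not just the average, is needed to judge stability.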

The architecture aims not merely to integrate decentralized energy resources but to optimize them for collective benefit. By enabling implicit cooperation among grid agents, the approach moves beyond supply-demand balancing toward maximizing social welfare. Resources such as solar panels, wind turbines, and energy storage respond dynamically to real-time conditions and participate in a collective optimization process, unlocking more of their economic potential. This can translate into higher revenue for resource owners, lower energy costs for consumers, and a more resilient, efficient, and valuable energy infrastructure than traditional, centralized energy economies allow.
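Social welfare in a market is commonly measured as total surplus: buyers' valuations minus sellers' costs, summed over the trades that actually clear. The article does not describe the paper's clearing rule, so this is a minimal sketch of a hypothetical uniform-price double auction, with the price set at the midpoint of the last matched bid and ask (an assumed convention). Prices are per kWh and quantities in kWh.

```python
def clear_market(bids, asks):
    """Match buyer bids and seller asks; return (traded kWh, price, welfare).

    bids: (max price, kWh) from buyers; asks: (min price, kWh) from sellers.
    """
    bids = sorted(([p, q] for p, q in bids), key=lambda b: -b[0])  # highest value first
    asks = sorted(([p, q] for p, q in asks), key=lambda a: a[0])   # cheapest first
    i = j = 0
    traded, welfare = 0.0, 0.0
    last_bid = last_ask = None
    while i < len(bids) and j < len(asks) and bids[i][0] >= asks[j][0]:
        q = min(bids[i][1], asks[j][1])
        traded += q
        welfare += (bids[i][0] - asks[j][0]) * q  # buyer value minus seller cost
        last_bid, last_ask = bids[i][0], asks[j][0]
        bids[i][1] -= q
        asks[j][1] -= q
        if bids[i][1] == 0:
            i += 1
        if asks[j][1] == 0:
            j += 1
    price = None if last_bid is None else (last_bid + last_ask) / 2  # assumed midpoint rule
    return traded, price, welfare

clear_market([(0.30, 5), (0.20, 5)], [(0.10, 4), (0.25, 6)])
```

Here 5 kWh clear at 0.275 per kWh with a total surplus of 0.85. The price only splits that surplus between buyers and sellers; total welfare depends on which trades clear, which is what coordinated agents can influence.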

Testing in a simulated IEEE 34-Bus Test Feeder environment confirms the practical benefits of this decentralized coordination strategy. The Decentralized Training with Decentralized Execution (DTDE) approach achieves a markedly more stable grid balance, with a standard deviation of ±39.1 kWh compared with ±93.5 kWh under Centralized Training with Decentralized Execution (CTDE). Beyond stability, the Asynchronous Proximal Policy Optimization (APPO) algorithm showed performance that scaled linearly as the number of participating agents increased, suggesting it can adapt to large-scale deployment. The gap between APPO-DTDE and the fully centralized APPO-CTCE (centralized training, centralized execution) baseline was only 8.3%, so removing central coordination costs relatively little in overall performance.

The pursuit of decentralized control, as demonstrated in this study of local energy markets, often runs into limits that stem from incomplete information and the illusion of predictability. By achieving near-optimal coordination through implicit cooperation, this research subtly underscores a humbling truth. As Richard Feynman once observed, “The first principle is that you must not fool yourself – and you are the easiest person to fool.” The agents, operating on local observations and stigmergic signals, do not need a comprehensive model; they adapt. It is an elegant system, and a quiet reminder that theory is, at best, a convenient tool for getting lost gracefully, especially in complex systems where perfect control remains an unattainable fantasy.

Beyond the Horizon

The pursuit of decentralized control, as demonstrated by this work, often feels like constructing increasingly elaborate clockwork mechanisms, hoping for emergent order. It is a comforting illusion. The system presented – agents negotiating energy distribution through stigmergic signaling – achieves impressive coordination. Yet, the very success highlights a deeper fragility. The ‘near-optimal’ performance is benchmarked against centralized control, a phantom already fading from practical reach. What happens when the assumptions underpinning the factorial design (the neat IEEE 34-bus topology, the predictable agent behaviors) begin to warp? Sometimes matter behaves as if laughing at the laws imposed upon it.

Future work will inevitably explore scaling these multi-agent systems. But a more profound challenge lies in embracing genuine heterogeneity. Real energy markets are not collections of identical agents. They are chaotic assemblages of prosumers, regulators, and failing infrastructure. Simplified models – these ‘pocket black holes’ of abstraction – can only reveal so much. The true abyss lies in attempting to model the unmodelable: the irrationality, the emergent crises, the cascading failures that define complex systems.

Perhaps the most fruitful avenue lies not in striving for ever-more-realistic simulations (diving into the abyss rarely yields useful maps) but in designing systems robust enough to tolerate imperfection. A system that anticipates its own limitations, that gracefully degrades rather than collapses, may ultimately prove more valuable than one that chases an unattainable ideal of optimality. The question isn’t whether these agents can achieve perfect coordination, but whether they can survive the inevitable discord.

Original article: https://arxiv.org/pdf/2602.16062.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 15:44