Using AI to bring back the voices of actors from the past is causing a lot of discussion in the entertainment world. Some fans love seeing beloved characters return, but others feel these AI recreations lack the genuine emotion of a real performance. Studios say they use this technology to keep popular series consistent. As the technology gets better, Hollywood is increasingly focused on the legal and ethical issues, particularly regarding actors’ rights and what happens to their voices after they’re gone.

‘The Mandalorian’ (2019–)

In the season two finale, Mark Hamill reappeared as a younger Luke Skywalker thanks to visual effects and artificial voice technology. The production team used Respeecher to recreate his voice from his original trilogy days. This raised questions among fans about whether it’s right to use digital copies of actors instead of their actual performances. The technology built a performance using old recordings, so Hamill didn’t have to record any new dialogue.

‘The Book of Boba Fett’ (2021–2022)

Seeing Luke Skywalker back on screen was interesting, especially because of how they did it. The production leaned heavily on AI to recreate his younger voice, drawing on audio from old interviews and footage. The tech is impressive, but to me the voice didn’t quite feel like Luke; it lacked the subtle emotional depth you get from a real performance. It struck me as another example of studios recreating characters with technology instead of finding new actors to take on those iconic roles.

‘Obi-Wan Kenobi’ (2022)

James Earl Jones, the iconic voice of Darth Vader, stepped back from the role and gave Lucasfilm permission to use artificial intelligence to continue the character’s voice. The company used AI to recreate his distinctive, intimidating tone from the original films. Though his family supported this decision, it sparked a debate about what this means for voice actors in big franchises. This was one of the first times a famous performer had officially allowed their voice to be digitally replicated for future use.

‘Top Gun: Maverick’ (2022)

As a huge fan, I was thrilled to see Val Kilmer return as Iceman! It was really moving, especially knowing he had battled throat cancer and lost his natural voice. The filmmakers worked with the AI company Sonantic, which built a digital version of his voice using old footage. It wasn’t just a voice replacement, though: it really sounded like him, and it allowed for some incredibly touching scenes with Tom Cruise’s Maverick. It felt like a respectful and compassionate way to let him keep playing a character he had loved for so long, and a lot of fans felt the same way.

‘Alien: Romulus’ (2024)

The film included a character named Rook who looked and sounded like the late actor Ian Holm. Filmmakers used AI and facial scanning technology to recreate the likeness Holm had as the android Ash in the original 1979 ‘Alien’. While the Holm estate approved the recreation, many viewers were upset by it. They felt uneasy about digitally bringing back an actor who had passed away years before the film was made.

‘Rogue One: A Star Wars Story’ (2016)

As a fan, it was incredible to see Grand Moff Tarkin return in the film, especially considering Peter Cushing passed away years before. They used a combination of an actor, Guy Henry, physically portraying him, and some really advanced technology to recreate Cushing’s voice and likeness. Tarkin’s appearance was a big part of the story, but it also sparked a lot of debate about whether it’s right to digitally recreate actors after they’ve passed away. It felt like a turning point for the industry, setting a precedent for how posthumous digital doubles might be used in future films.

‘Ghostbusters: Afterlife’ (2021)

In the final scene of the movie, the character Egon Spengler appeared as a ghost to help the new heroes. Because actor Harold Ramis had passed away, the filmmakers used a combination of stand-ins and computer effects to bring him back on screen. They paid close attention to both his appearance and voice to accurately represent the original character in ‘Ghostbusters: Afterlife’. This decision was meant to give fans a satisfying ending, but it also sparked debate about the ethics of digitally recreating deceased actors in films.

‘The Flash’ (2023)

As a huge movie fan, I was really excited to see ‘The Flash,’ especially when I heard they were bringing back some classic Supermen! They used CGI to include Christopher Reeve and George Reeves, and it was cool seeing those versions of the character again. But honestly, the effect wasn’t quite right, and a lot of people – myself included – felt a little uneasy about it. It got me thinking about how far we should go with bringing back actors digitally when they’re no longer around to approve it. It’s a tricky situation, and this movie really brought that debate to the forefront.

‘Road House’ (2024)

R. Lance Hill, the screenwriter of the original ‘Road House’, filed a lawsuit over the remake of the classic action film, citing the studio’s use of artificial intelligence during production. The complaint stated that the studio used AI to recreate actors’ voices and complete the film during the SAG-AFTRA strike. Although the studio argued the AI was only used for temporary audio and not in the final version, the situation caused widespread concern within the entertainment industry. This case is now a key example of the growing legal issues surrounding AI and the protection of actors’ rights.

‘Indiana Jones and the Dial of Destiny’ (2023)

The film began with a digitally de-aged Harrison Ford, showing him as a younger Indiana Jones. To make the effect convincing, the sound team used artificial intelligence to recreate his voice from the early 1980s. They did this by enhancing old recordings and adjusting the tone to remove any signs of aging in his voice. The result was a smooth and natural transition, letting the movie revisit the classic feel of the original films.

‘Coming 2 America’ (2021)

James Earl Jones reprised his role as King Jaffe Joffer in the sequel to ‘Coming to America’ after many years. Because of his age, filmmakers used digital technology to help preserve the power and quality of his famous voice. This allowed him to convincingly portray the king once again, and it created a seamless connection between the original movie and ‘Coming 2 America’ for viewers.

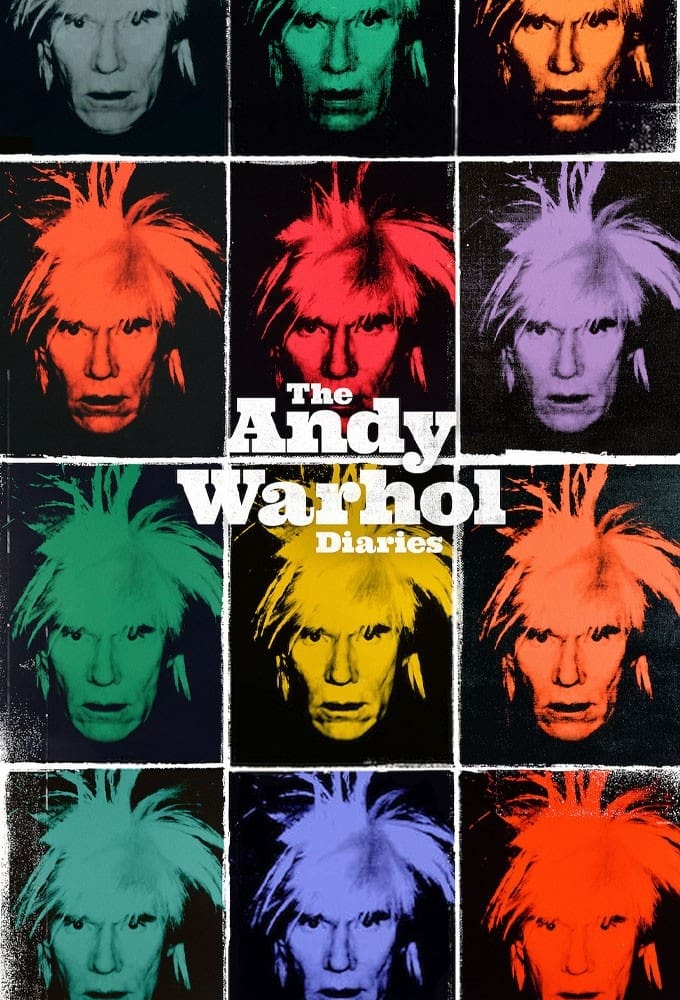

‘The Andy Warhol Diaries’ (2022)

The documentary series ‘The Andy Warhol Diaries’ used artificial intelligence to recreate Andy Warhol’s voice, allowing him to ‘read’ his personal journals. The filmmakers trained software on existing recordings to mimic his unique, monotone speaking style. While the creators believed this technology let Warhol share his story directly, many critics debated whether it’s ethical to use AI to recreate the voice of someone who has passed away. The series demonstrates AI’s power to make history feel intimate, but it also raises important ethical questions.

‘Here’ (2024)

The movie ‘Here’ used cutting-edge AI to make Tom Hanks and Robin Wright appear younger throughout the story, showing them at different ages. The technology allowed the actors to play the roles themselves, with AI digitally adjusting their looks and voices in real time. This was done to tell a multi-generational story without needing different actors for each time period. The film’s heavy use of AI marks a significant change in how established actors are used in filmmaking today.

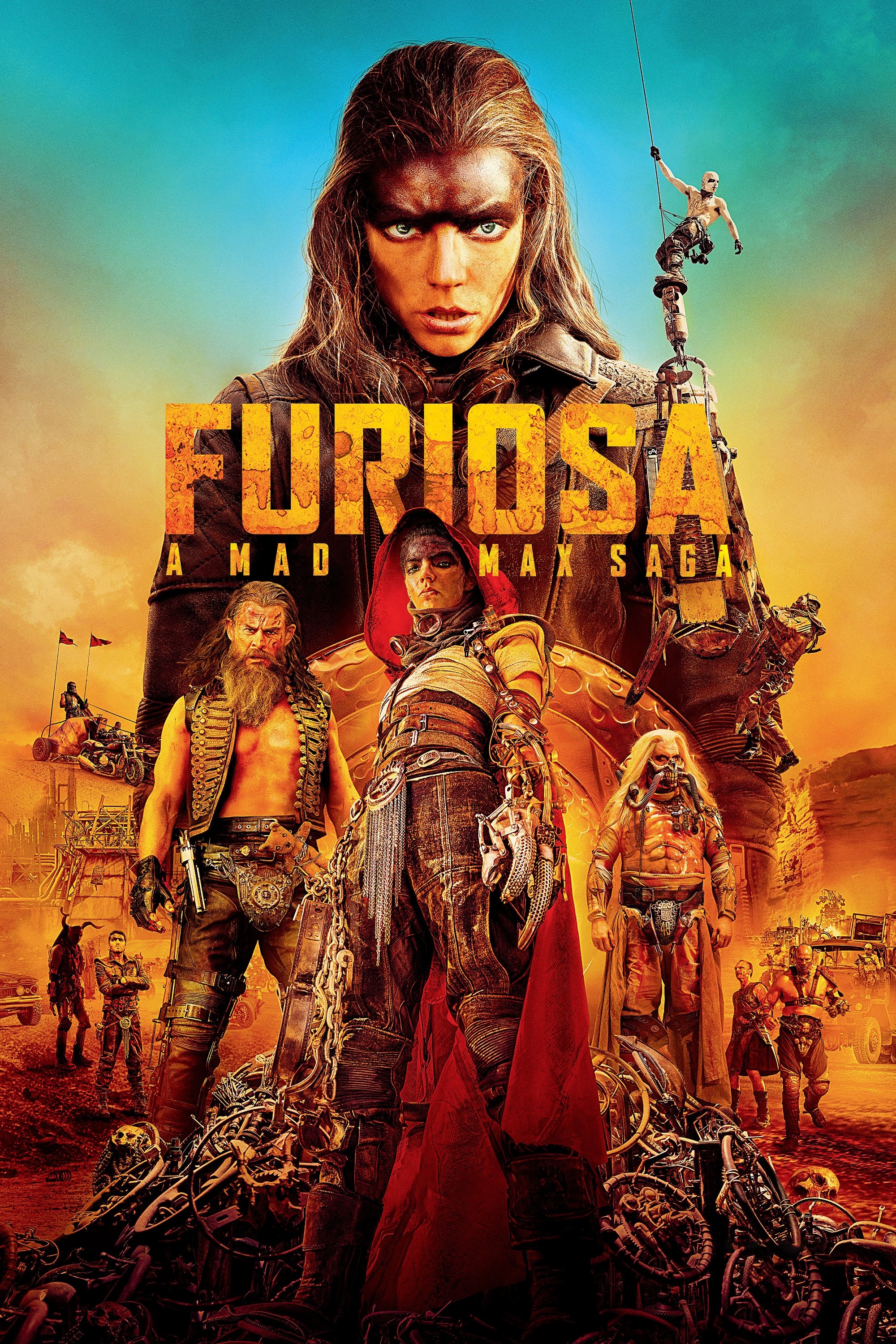

‘Furiosa: A Mad Max Saga’ (2024)

As a huge cinema fan, I was really impressed by something I learned about ‘Furiosa: A Mad Max Saga’. Director George Miller used some clever AI technology to make Furiosa’s voice sound consistent throughout the film, even as she aged. They blended the voices of the young actress, Alyla Browne, with Anya Taylor-Joy’s, so it felt like the same person growing up. It wasn’t about making something fake, but about creating a really immersive experience and showing how AI can actually help with artistic storytelling – it was seamless and really added to the film!

‘Star Wars: The Rise of Skywalker’ (2019)

Making the final film in the saga was difficult after Carrie Fisher, who played General Leia Organa, passed away. The filmmakers honored her memory by using previously recorded footage and advanced digital technology to include her character in ‘The Rise of Skywalker’. They combined this with existing audio and voice actors to create her last scenes, a tribute that also raised questions about how far digital recreations should go.

Please share your thoughts on the use of AI voices for legacy characters in the comments.

2026-01-26 02:23