Author: Denis Avetisyan

A new study explores how analyzing the emotional tone of financial news using advanced AI can improve the accuracy of stock price forecasting.

This review evaluates the efficacy of large language models for financial sentiment analysis and its impact on time-series stock price prediction using transformer networks and ensemble methods.

Predicting stock price movements remains a significant challenge despite advances in financial modeling and machine learning. This paper, ‘Impact of LLMs news Sentiment Analysis on Stock Price Movement Prediction’, investigates the potential of large language models (LLMs) to enhance stock forecasting through sentiment analysis of financial news. Results demonstrate that incorporating LLM-derived sentiment features can modestly improve prediction accuracy across several time-series architectures, with DeBERTa and ensemble models yielding the strongest performance. Could these findings unlock more robust and nuanced approaches to quantitative finance and investment strategies?

The Illusion of Prediction: Why We Chase Sentiment

The pursuit of consistently predicting stock price fluctuations has long captivated and challenged financial analysts, yet conventional methodologies frequently demonstrate limited efficacy. Historical data analysis, while valuable, often fails to account for the unpredictable influence of news events, investor psychology, and broader economic shifts. Statistical models, reliant on past performance, struggle to adapt to novel circumstances or anticipate sudden market corrections. Consequently, a significant gap persists between predictive accuracy and real-world investment success, prompting exploration into alternative data sources and analytical techniques that can better capture the complex dynamics driving stock valuations. This ongoing difficulty underscores the need for innovative approaches capable of identifying subtle signals and incorporating a more holistic understanding of market forces.

The integration of qualitative data, sourced from financial news and assessed through sentiment analysis, presents a compelling approach to improve the predictive power of quantitative financial models. Historically, these models have relied heavily on numerical data – price, volume, and economic indicators – often overlooking the subtle, yet impactful, influence of public perception and market psychology. Sentiment analysis techniques now allow for the automated extraction of subjective information from news articles, social media, and analyst reports, quantifying the overall tone – bullish, bearish, or neutral – surrounding specific assets. By incorporating this ‘sentiment score’ as a variable alongside traditional metrics, models can potentially capture shifts in investor confidence and anticipate market movements that might otherwise be missed, offering a more holistic and responsive prediction capability.

Recent advancements in Large Language Models (LLMs) have fundamentally reshaped the field of financial sentiment analysis. Prior techniques often relied on simplistic keyword spotting or rule-based systems, struggling with the subtleties of language like sarcasm, irony, or contextual nuance. LLMs, however, possess an unprecedented ability to parse and interpret the complexities of financial text – news articles, analyst reports, social media posts – identifying not just the presence of positive or negative terms, but why a particular statement conveys a specific sentiment. This deeper understanding allows for a more accurate assessment of market mood and its potential impact on asset prices, moving beyond superficial indicators to capture the underlying drivers of investor behavior. The result is a significant leap in predictive power, promising more robust financial models and potentially, more informed investment strategies.

Fine-Tuning the Noise: Specialized Language Models

General-purpose language models often lack the specialized vocabulary and contextual understanding required for accurate sentiment analysis of financial text. Models such as FinBERT, RoBERTa, and DeBERTa address this limitation through fine-tuning on large datasets of financial news, filings, and reports. This process adapts the models’ parameters to better recognize financial terminology, understand nuanced language specific to economic events, and ultimately improve sentiment prediction accuracy compared to models trained on general text corpora. The resulting models demonstrate enhanced performance in tasks like classifying the sentiment of earnings calls or predicting market reactions to news events.

Transformer architectures, utilized by models like FinBERT and RoBERTa, address limitations of prior recurrent and convolutional neural networks in natural language processing. These architectures employ self-attention mechanisms, allowing the model to weigh the importance of different words in a sentence when determining context. This is achieved through parallel processing of the input sequence, unlike sequential processing in RNNs, enabling the capture of long-range dependencies critical for understanding financial text. The self-attention layers compute relationships between all words, creating contextualized word embeddings that better represent the meaning of each term within the specific financial context of a news article, thereby improving sentiment analysis accuracy.

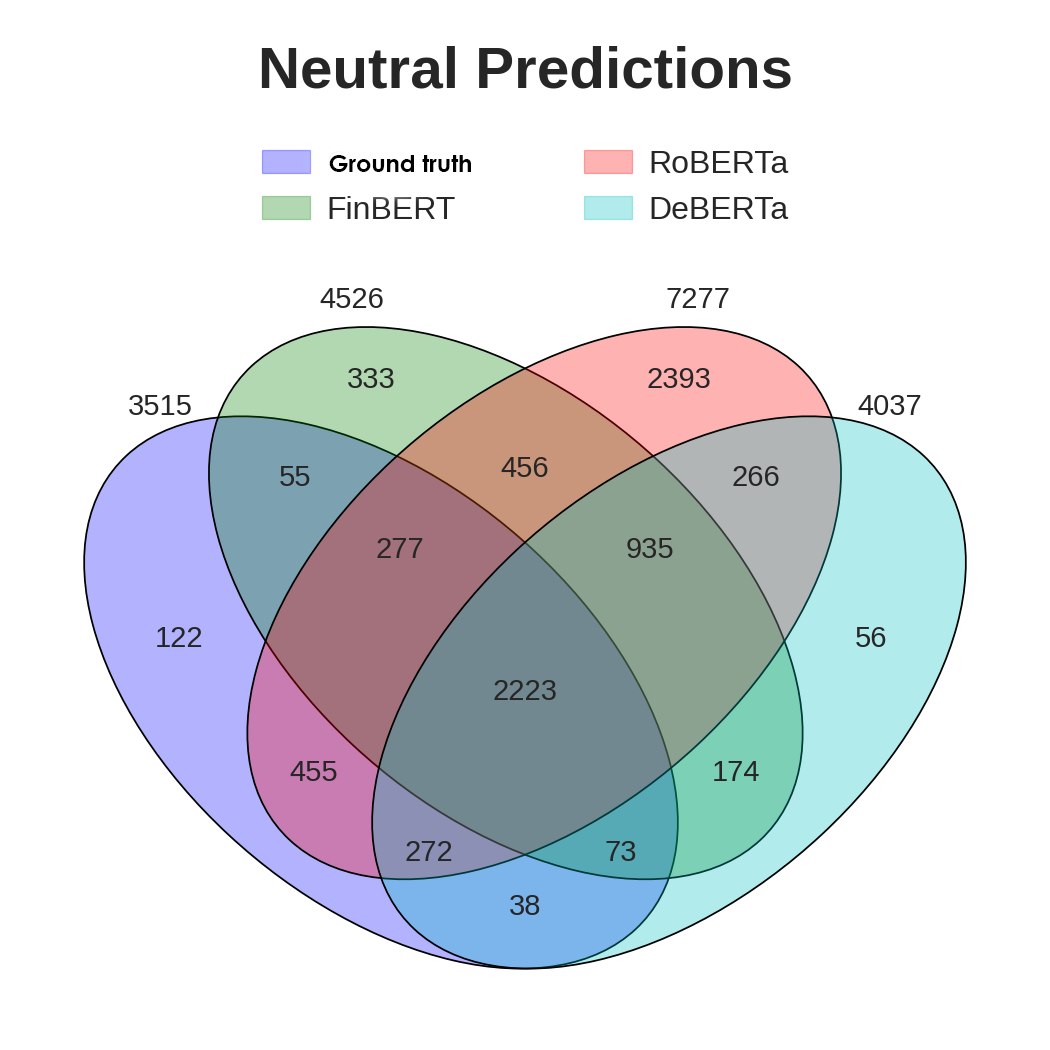

In this study, the DeBERTa model achieved approximately 75% accuracy in predicting financial sentiment. Further performance gains were observed when utilizing an ensemble model; combining the outputs of FinBERT, RoBERTa, and DeBERTa resulted in an approximately 80% accuracy rate. This indicates that while DeBERTa performed best as a single model, aggregating predictions from multiple fine-tuned transformer models improved overall sentiment prediction accuracy in the context of financial text analysis.

The Illusion of Control: Ensemble Models and Their Limits

Ensemble models function by aggregating the predictions of multiple base models – such as Logistic Regression, Random Forest, Support Vector Machines (SVMs), and deep learning architectures – to produce a more accurate and stable final prediction. This approach mitigates the risk of relying on a single algorithm that may be susceptible to specific data characteristics or noise. By combining diverse models, ensemble methods reduce variance and bias, leading to improved generalization performance on unseen data. The robustness of ensemble models stems from the error correction process; individual model errors are often compensated for by the strengths of other models within the ensemble, resulting in a more reliable prediction overall.

Ensemble models for financial prediction rely on supervised learning using labeled datasets that correlate news sentiment with subsequent stock performance. Datasets like SEntFiN 1.0 provide examples of financial news articles paired with observed stock movements, allowing algorithms to learn the association between textual sentiment-positive, negative, or neutral-and directional price changes. The training process involves algorithms identifying patterns within the text that statistically correlate with increases or decreases in stock prices, enabling the model to predict future movements based on the sentiment expressed in new articles. Accurate labeling of these datasets is crucial for model performance, as the learned relationship is directly dependent on the quality of the training data.

An ensemble Support Vector Machine (SVM) model, leveraging the FinBERT, RoBERTa, and DeBERTa transformer architectures, attained approximately 80% accuracy in predicting stock movement trends. This performance represents a substantial improvement over a Long Short-Term Memory (LSTM) model, which achieved 57.47% accuracy on the same predictive task. The higher accuracy of the SVM ensemble demonstrates the benefit of combining multiple, diverse language models to capture complex relationships within financial news data and improve the reliability of stock movement predictions.

Measuring the Inevitable: Performance Metrics and Their Limitations

Performance evaluation of predictive models utilizes a suite of quantitative metrics tailored to the model’s task. For classification problems, Accuracy represents the ratio of correct predictions to total predictions, while the F1-score provides a balanced measure of precision and recall. The Area Under the Receiver Operating Characteristic curve (AUC) assesses the model’s ability to distinguish between classes. Regression models are evaluated using metrics such as Root Mean Squared Error (RMSE), which measures the average magnitude of errors, Mean Absolute Error (MAE), representing the average absolute difference between predicted and actual values, and R2, also known as the coefficient of determination, indicating the proportion of variance in the dependent variable that is predictable from the independent variables. These metrics collectively provide a comprehensive assessment of model performance, allowing for comparative analysis and optimization.

The selected performance metrics – Accuracy, F1-score, AUC, RMSE, MAE, and R2 – facilitate a holistic assessment of model performance across distinct predictive tasks. Accuracy and F1-score are primarily utilized for classification problems, quantifying correct predictions and the balance between precision and recall, respectively. Area Under the Curve (AUC) further evaluates the discriminatory power of classification models. Conversely, Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and R2 are specifically designed for regression tasks, measuring the average magnitude of errors and the proportion of variance explained by the model. Utilizing this combination of metrics ensures a comprehensive understanding of model strengths and weaknesses, regardless of whether the task involves categorizing data or predicting continuous values.

The ensemble model demonstrated strong performance in sentiment prediction, achieving approximately 80% across four key metrics: accuracy, precision, recall, and F1-score. Accuracy represents the overall correctness of predictions, while precision measures the proportion of correctly identified positive sentiments out of all predicted positive sentiments. Recall indicates the proportion of actual positive sentiments that were correctly identified, and the F1-score is the harmonic mean of precision and recall, providing a balanced measure of the model’s performance. This consistent performance across these metrics suggests the model effectively identifies and classifies sentiment with a high degree of reliability.

Chasing Shadows: The Future of Sentiment-Driven Finance

Recent advancements in financial forecasting leverage the power of sophisticated language models, coupled with ensemble methods, to potentially unlock more accurate stock price predictions. These models analyze vast quantities of textual data – news articles, social media posts, financial reports – discerning subtle shifts in market sentiment that often precede price movements. By combining the nuanced understanding of language with the robustness of ensemble techniques – which aggregate predictions from multiple models – researchers aim to mitigate the risks associated with relying on any single predictive algorithm. This integrated approach doesn’t simply identify what is being said, but rather how it’s being said – the emotional tone, the level of confidence, and the underlying intent – offering a more holistic and potentially profitable assessment of market trends. The combination offers a promising pathway towards more informed and data-driven investment decisions, surpassing the limitations of traditional quantitative analysis.

Predictive accuracy in sentiment-driven finance hinges on ongoing advancements across several key areas of machine learning. Researchers are actively exploring novel data representation techniques, moving beyond simple word counts to capture nuanced contextual information and semantic relationships within financial text. Simultaneously, investigations into model architecture-including hybrid approaches that combine the strengths of recurrent neural networks, transformers, and ensemble methods-aim to improve the ability to discern patterns and trends. Crucially, feature engineering-the careful selection and transformation of input variables-remains a vital component, with efforts focused on identifying and incorporating non-textual data, such as macroeconomic indicators and trading volume, to create more robust and reliable predictive models. These combined efforts promise to unlock increasingly sophisticated insights from financial data, ultimately leading to more precise and profitable investment strategies.

The advent of sentiment-driven finance promises a fundamental shift in how investment strategies are developed and executed. By processing and interpreting vast streams of textual data – news articles, social media posts, and financial reports – these technologies move beyond traditional quantitative analysis to incorporate the often-overlooked influence of public perception. This allows for the identification of subtle shifts in market sentiment, potentially predicting stock price movements before they are reflected in trading volume. Consequently, investors can refine their portfolios, mitigate risk, and capitalize on emerging opportunities with a level of precision previously unattainable, moving toward a more data-driven and responsive financial landscape. The technology isn’t intended to replace established methods, but rather to augment them with a powerful new layer of insight, fostering more informed and potentially more profitable decision-making.

The pursuit of predictive accuracy, as demonstrated by this work on LLMs and stock movements, inevitably courts obsolescence. It’s a Sisyphean task; each refinement, each layer of abstraction added to the forecasting model, simply establishes a new baseline for future errors. As Thomas Kuhn observed, “the map is not the territory.” This paper meticulously constructs a map-a sentiment-driven model-but the territory of the market will always shift, rendering even the most sophisticated algorithms incomplete. The promise of improved prediction, while momentarily compelling, is ultimately a temporary reprieve before the next wave of unpredictable data arrives, demanding yet another architectural overhaul. It’s a beautiful, fragile structure built on sand – and production will find a way to expose the flaws.

What’s Next?

The predictable enthusiasm for applying yet another ‘revolutionary’ architecture to financial forecasting is… familiar. This work demonstrates, unsurprisingly, that more data – even data distilled through the opaque logic of a large language model – can nudge prediction accuracy. The real question, perpetually unanswered, is whether such improvements translate to actual, risk-adjusted profit after transaction costs. One suspects the backtests are always conducted on data that hasn’t yet learned to hate the model.

Future iterations will undoubtedly explore increasingly elaborate LLM architectures and ensemble techniques. A more pressing concern is robustness. These models are trained on news – a medium inherently prone to bias, sensationalism, and carefully constructed narratives. Expect production deployments to reveal a disconcerting tendency to mistake marketing copy for market signals. Better one well-understood, slightly inaccurate statistical model than a hundred black boxes confidently hallucinating alpha.

The inevitable push toward ‘real-time’ sentiment analysis will be particularly interesting. Parsing the subtle nuances of financial news at scale is a fool’s errand. The market doesn’t react to sentiment; it reacts to trades. The focus should shift from predicting what people feel about a stock to understanding why they are buying or selling. That, however, requires data that is far more difficult – and far less glamorous – to obtain.

Original article: https://arxiv.org/pdf/2602.00086.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- 2025 Crypto Wallets: Secure, Smart, and Surprisingly Simple!

- Gold Rate Forecast

- Brown Dust 2 Mirror Wars (PvP) Tier List – July 2025

- Banks & Shadows: A 2026 Outlook

- The 10 Most Beautiful Women in the World for 2026, According to the Golden Ratio

- HSR 3.7 story ending explained: What happened to the Chrysos Heirs?

- ETH PREDICTION. ETH cryptocurrency

- 9 Video Games That Reshaped Our Moral Lens

- The Best Actors Who Have Played Hamlet, Ranked

- Gay Actors Who Are Notoriously Private About Their Lives

2026-02-03 22:36