Author: Denis Avetisyan

A new approach, AdvSynGNN, boosts the performance and resilience of graph neural networks on complex, real-world datasets.

AdvSynGNN employs adversarial synthesis and self-corrective propagation to enhance robustness against heterophily and improve scalability.

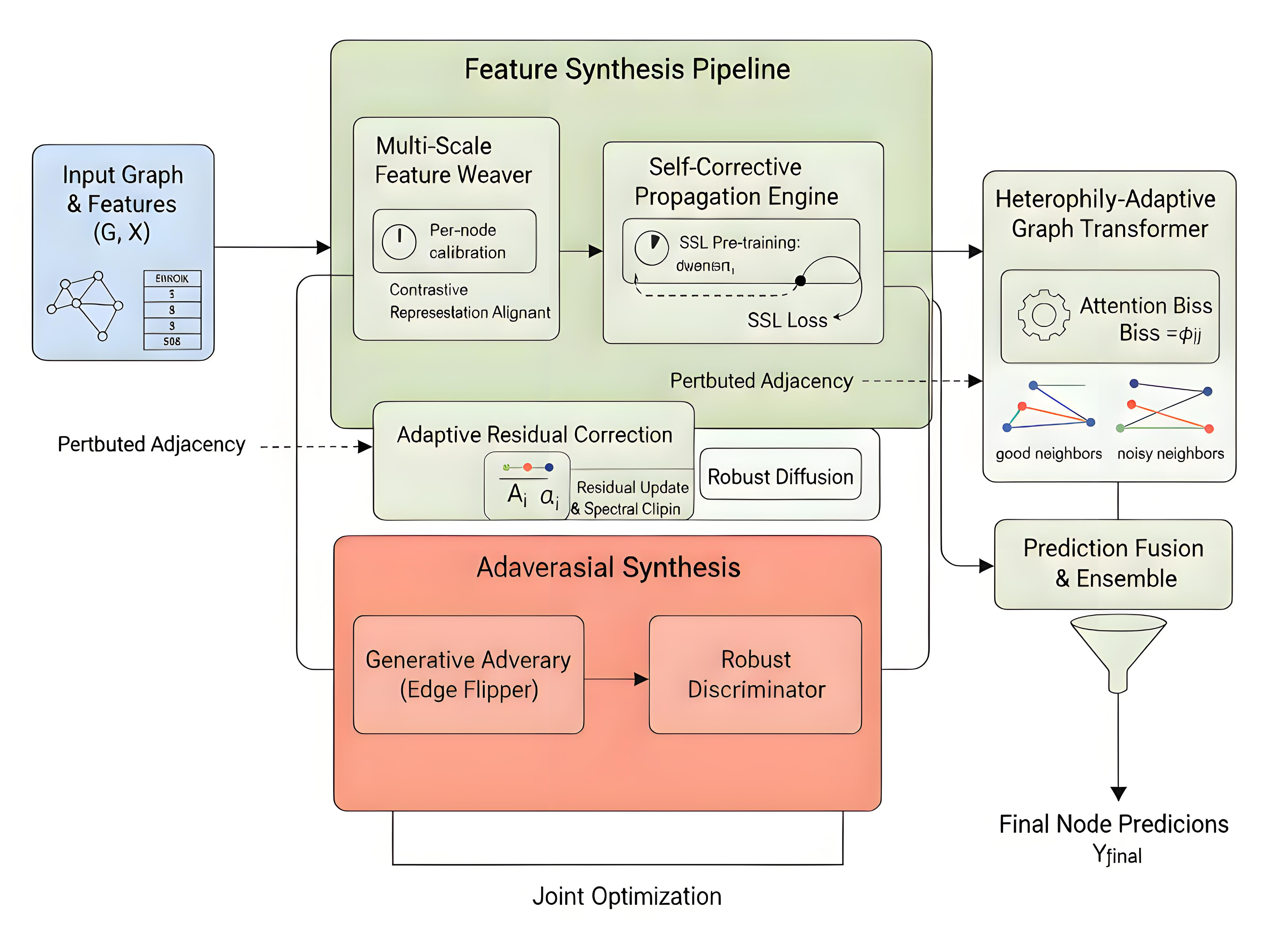

Despite the growing success of graph neural networks, their performance remains vulnerable to noisy or non-homophilous graph structures. To address these limitations, we introduce AdvSynGNN: Structure-Adaptive Graph Neural Nets via Adversarial Synthesis and Self-Corrective Propagation, a novel framework that enhances robustness through multi-resolution structural encoding, adversarial training, and adaptive propagation. Specifically, AdvSynGNN leverages an adversarial process to synthesize robust initializations and refine node representations via a self-corrective scheme guided by per-node confidence. By effectively mitigating the effects of structural noise and heterophily, can this approach unlock more reliable and scalable graph learning in complex real-world applications?

Deconstructing the Graph: Fragility as a Feature

Graph Neural Networks (GNNs) have shown strong results across many domains, but they often struggle on real-world datasets. Most GNNs implicitly assume clean, complete data, whereas practical graphs are frequently noisy and incomplete: edges go missing, node attributes are inaccurate, and irrelevant connections creep in. Because a GNN computes each node's representation by aggregating information from its neighbors, such defects feed directly into the learned representations and degrade predictions. Robust methods for handling these imperfections are therefore essential if graph learning is to deliver in practice, where data quality is rarely ideal.

This sensitivity is pronounced. Studies of adversarial attacks on GNNs have shown that adding or removing a small number of edges, or injecting slight noise into node features, can sharply reduce predictive accuracy. The root cause is an assumption built into most GNN architectures: that the graph's structure and feature distributions stay the same between training and deployment. Real-world graphs rarely behave this way. Nodes are added and removed, connections change, and attributes drift over time. Because a GNN learns patterns tied to the specific topology and feature landscape it was trained on, a mismatch at inference time undermines its ability to generalize. This limits the usefulness of GNNs in dynamic settings such as social networks, transportation systems, and financial markets, which makes addressing this sensitivity a priority.

Overcoming these vulnerabilities is a prerequisite for deploying graph learning widely. Many applications demand adaptability: consider social networks, where connections and user attributes change constantly, or critical infrastructure, where sensors may fail. Without robust methods for handling such perturbations, the predictive power of GNNs diminishes. Research on model resilience is therefore not merely academic; it is a necessary step toward reliable graph-based insights in fields ranging from drug discovery and materials science to fraud detection and recommendation systems, and toward greater trust in what graph learning can deliver.

Forging Resilience: Adversarial Propagation as a Defense

Adversarial Propagation improves robustness by deliberately injecting targeted structural noise into the graph during training. Perturbations are applied to the adjacency matrix to simulate real-world imperfections, such as spurious or missing edges, as well as adversarial attacks. These disturbances act as stress tests that expose weaknesses in the model's feature extraction, and repeated exposure pushes the model toward representations that are less sensitive to structural variation. Training on a distribution of slightly altered graphs, rather than a single fixed one, helps the model generalize to unseen, noisy, or corrupted graphs and makes it more resilient to adversarial manipulation.
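The structural perturbation step can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the AdvSynGNN implementation: the function name `perturb_adjacency` and its `flip_rate` parameter are hypothetical, and uniformly random edge flips stand in for the targeted, adversarially synthesized perturbations the paper describes. The sketch only shows what "perturbing the adjacency matrix" means for an undirected graph.

```python
import numpy as np


def perturb_adjacency(adj: np.ndarray, flip_rate: float, rng: np.random.Generator) -> np.ndarray:
    """Return a copy of a symmetric 0/1 adjacency matrix with a fraction of node pairs toggled.

    Uniformly random flips are a stand-in (assumption) for the targeted,
    adversarially synthesized perturbations described in the text.
    """
    n = adj.shape[0]
    rows, cols = np.triu_indices(n, k=1)          # candidate pairs i < j, no self-loops
    n_flips = int(round(flip_rate * rows.size))   # number of pairs to toggle
    chosen = rng.choice(rows.size, size=n_flips, replace=False)
    r, c = rows[chosen], cols[chosen]
    perturbed = adj.copy()
    perturbed[r, c] = 1 - perturbed[r, c]         # add a missing edge or drop an existing one
    perturbed[c, r] = perturbed[r, c]             # mirror the change to keep the graph undirected
    return perturbed


# Usage: in training, a fresh perturbed graph would be drawn at every step.
rng = np.random.default_rng(0)
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]])
A_noisy = perturb_adjacency(A, flip_rate=0.5, rng=rng)   # toggles 3 of the 6 possible pairs
```

Sampling a new perturbation per step, rather than fixing one corrupted graph, is what exposes the model to a distribution of altered structures. A genuinely adversarial variant would choose the pairs to flip by how much they increase the training loss instead of at random.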

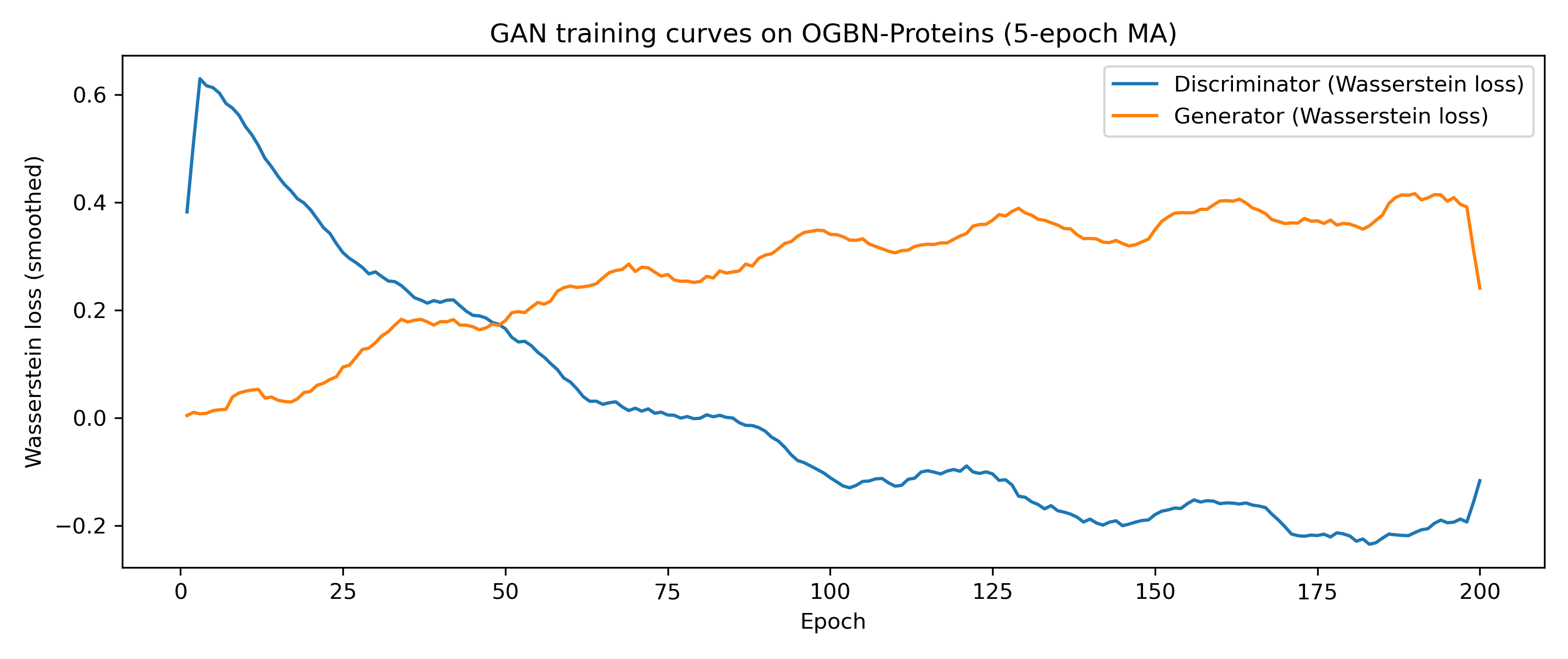

Stable adversarial training, which effective adversarial propagation depends on, is achieved with a gradient penalty and the Wasserstein distance. The gradient penalty softly enforces a Lipschitz constraint on the discriminator (often called the critic in this setting), typically by penalizing input gradients whose norm deviates from 1; this prevents excessively steep gradients that destabilize training and lead to vanishing or exploding updates. The Wasserstein distance, also known as the Earth Mover's Distance, provides a smoother and more informative gradient signal than cross-entropy-based losses, especially when the distributions of real and generated data barely overlap. That smoother signal reduces the risk of mode collapse, in which the generator produces only a narrow range of outputs, because the generator keeps receiving useful gradients instead of facing a saturated, overconfident discriminator. Together, the two techniques make adversarial training more stable and more likely to converge, which in turn strengthens the model's resilience to adversarial perturbations.
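To make the gradient penalty and Wasserstein estimate concrete, here is a sketch of the standard WGAN-GP critic loss in NumPy. It is the generic technique, not necessarily the exact loss used in AdvSynGNN. The tiny tanh critic and all names (`critic`, `critic_input_grad`, `wgan_gp_critic_loss`, `lam`) are hypothetical; the input gradient is derived by hand only because NumPy has no automatic differentiation, whereas a real implementation would use an autodiff framework. Treating "real" and "fake" samples as clean versus synthesized node embeddings is also an assumption.

```python
import numpy as np


def critic(x, W1, b1, w2):
    """Tiny one-hidden-layer critic f(x) = w2 . tanh(W1 x + b1), applied to each row of x."""
    return np.tanh(x @ W1.T + b1) @ w2


def critic_input_grad(x, W1, b1, w2):
    """Analytic gradient of f with respect to each input row (NumPy has no autodiff)."""
    h = np.tanh(x @ W1.T + b1)
    return ((1.0 - h**2) * w2) @ W1


def wgan_gp_critic_loss(real, fake, params, lam=10.0, rng=None):
    """Critic loss = -(E[f(real)] - E[f(fake)]) + lam * E[(||grad f(x_hat)||_2 - 1)^2]."""
    if rng is None:
        rng = np.random.default_rng()
    w_estimate = critic(real, *params).mean() - critic(fake, *params).mean()
    eps = rng.uniform(size=(real.shape[0], 1))        # one mixing weight per sample
    x_hat = eps * real + (1.0 - eps) * fake           # points on lines between real and fake samples
    grad_norm = np.linalg.norm(critic_input_grad(x_hat, *params), axis=1)
    penalty = np.mean((grad_norm - 1.0) ** 2)         # soft 1-Lipschitz constraint
    return -w_estimate + lam * penalty, w_estimate, penalty


rng = np.random.default_rng(0)
d, hidden = 3, 8
params = (rng.normal(size=(hidden, d)), rng.normal(size=hidden), rng.normal(size=hidden))
real = rng.normal(loc=1.0, size=(64, d))   # e.g. embeddings from the clean graph (assumption)
fake = rng.normal(loc=0.0, size=(64, d))   # e.g. embeddings from synthesized inputs (assumption)
loss, w_est, gp = wgan_gp_critic_loss(real, fake, params, rng=rng)
```

The critic minimizes `loss`, which maximizes the Wasserstein estimate `w_est` while the penalty keeps its gradients near unit norm on points between the two distributions. The weight of 10 for `lam` follows common WGAN-GP practice and is not taken from the paper.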

Conventional GNN training treats the input graph as ground truth: a static, reliable structure. Adversarial propagation departs from this passive acceptance. By perturbing the graph during training to simulate noisy edges, missing connections, or deliberate manipulation, it acts as an active defense, forcing the model to learn representations that do not depend solely on the structure as originally given. The result is a model that continues to generalize when the input graph deviates from its original form, which improves performance in practical, imperfect environments.

Beyond Homophily: Adaptive Calibration and Heterophily

The Adaptive Signal Calibration (ASC) mechanism dynamically adjusts the magnitude of residual corrections during training through a learned scaling factor applied to the network's residual connections. By attenuating or amplifying these residuals, ASC limits the impact of substantial structural changes in the input graph: it keeps error signals from growing excessively large or vanishing when the graph is highly dynamic, which stabilizes learning and preserves performance even when relationships between nodes change significantly. As a result, the network can keep propagating information with its signal intact under structural shifts that would destabilize a standard GNN.
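A learned scale on a residual correction can be sketched as follows. This is an illustration under explicit assumptions, not the paper's ASC: the parameter `theta`, the function `calibrated_residual_step`, and the optional per-node `confidence` weighting (loosely inspired by the "per-node confidence" mentioned in the abstract) are all hypothetical choices made for the example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def calibrated_residual_step(h, update, theta, confidence=None):
    """Apply a scaled residual correction: h + alpha * (update - h).

    theta      : hypothetical learnable scalar; the sigmoid keeps alpha in (0, 1),
                 so each node moves only part of the way toward the update.
    confidence : optional per-node scores in [0, 1]; more confident nodes get
                 smaller corrections (assumption, loosely following the per-node
                 confidence mentioned in the abstract).
    """
    alpha = sigmoid(theta)
    if confidence is not None:
        alpha = alpha * (1.0 - confidence)[:, None]   # shape (n_nodes, 1), broadcast over features
    return h + alpha * (update - h)


h = np.array([[1.0, 0.0],
              [0.0, 1.0]])           # current node representations
update = np.array([[3.0, 0.0],
                   [0.0, -1.0]])     # e.g. the output of one propagation step
out = calibrated_residual_step(h, update, theta=0.0)            # alpha = 0.5 for every node
out_conf = calibrated_residual_step(h, update, theta=0.0,
                                    confidence=np.array([1.0, 0.0]))  # node 0 stays unchanged
```

Because alpha lies in (0, 1), each output row is a convex combination of the old representation and the update, so its norm cannot exceed the larger of the two. This sketch therefore only attenuates; a scale such as `2 * sigmoid(theta)` would also allow amplification, at the cost of losing that bound.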

Within ASC, Propagated Residuals target the accumulation of errors during GNN training. These residuals, computed as the difference between successive layer outputs, are propagated back through the network, enabling gradient-based correction of accumulated errors. Alongside them, Spectral Control normalizes the weight matrices to bound their spectral norm (largest singular value), so that no layer can amplify its input by more than a fixed factor; repeated amplification across layers is a common source of instability and signal degradation. Together, the two help ensure that corrections are applied effectively while the propagated signals remain stable, even in deep architectures and on complex graph structures.
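Bounding the spectral norm of a weight matrix is commonly done with power iteration. The sketch below estimates the largest singular value and rescales the matrix only when it exceeds a cap. The function names and the `max_sigma` cap are assumptions for illustration; the paper's exact Spectral Control procedure may differ. Standard spectral normalization for GANs, for instance, always divides by the estimate and runs a single iteration per training step while reusing the previous vector.

```python
import numpy as np


def spectral_norm_estimate(W, n_iters=50, seed=0):
    """Estimate the largest singular value of W by power iteration."""
    rng = np.random.default_rng(seed)
    v = rng.normal(size=W.shape[1])
    v /= np.linalg.norm(v)
    for _ in range(n_iters):
        u = W @ v
        u /= np.linalg.norm(u)      # left singular vector estimate
        v = W.T @ u
        v /= np.linalg.norm(v)      # right singular vector estimate
    return float(u @ W @ v)         # estimate of sigma_max


def spectrally_normalize(W, max_sigma=1.0, n_iters=50):
    """Rescale W so its spectral norm is at most max_sigma; leave it alone if already below."""
    sigma = spectral_norm_estimate(W, n_iters)
    return W / max(1.0, sigma / max_sigma)


# Build a test matrix with known singular values 4, 2, 1.
rng = np.random.default_rng(1)
Q1, _ = np.linalg.qr(rng.normal(size=(5, 3)))   # 5x3, orthonormal columns
Q2, _ = np.linalg.qr(rng.normal(size=(3, 3)))   # 3x3 orthogonal
W = Q1 @ np.diag([4.0, 2.0, 1.0]) @ Q2.T
sigma = spectral_norm_estimate(W)      # close to 4.0
W_sn = spectrally_normalize(W)         # largest singular value close to 1.0
```

Capping rather than always dividing leaves well-behaved layers untouched. Note that the bound concerns the largest singular value only: it prevents a layer from amplifying signals, but it does not stop a layer from shrinking them.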

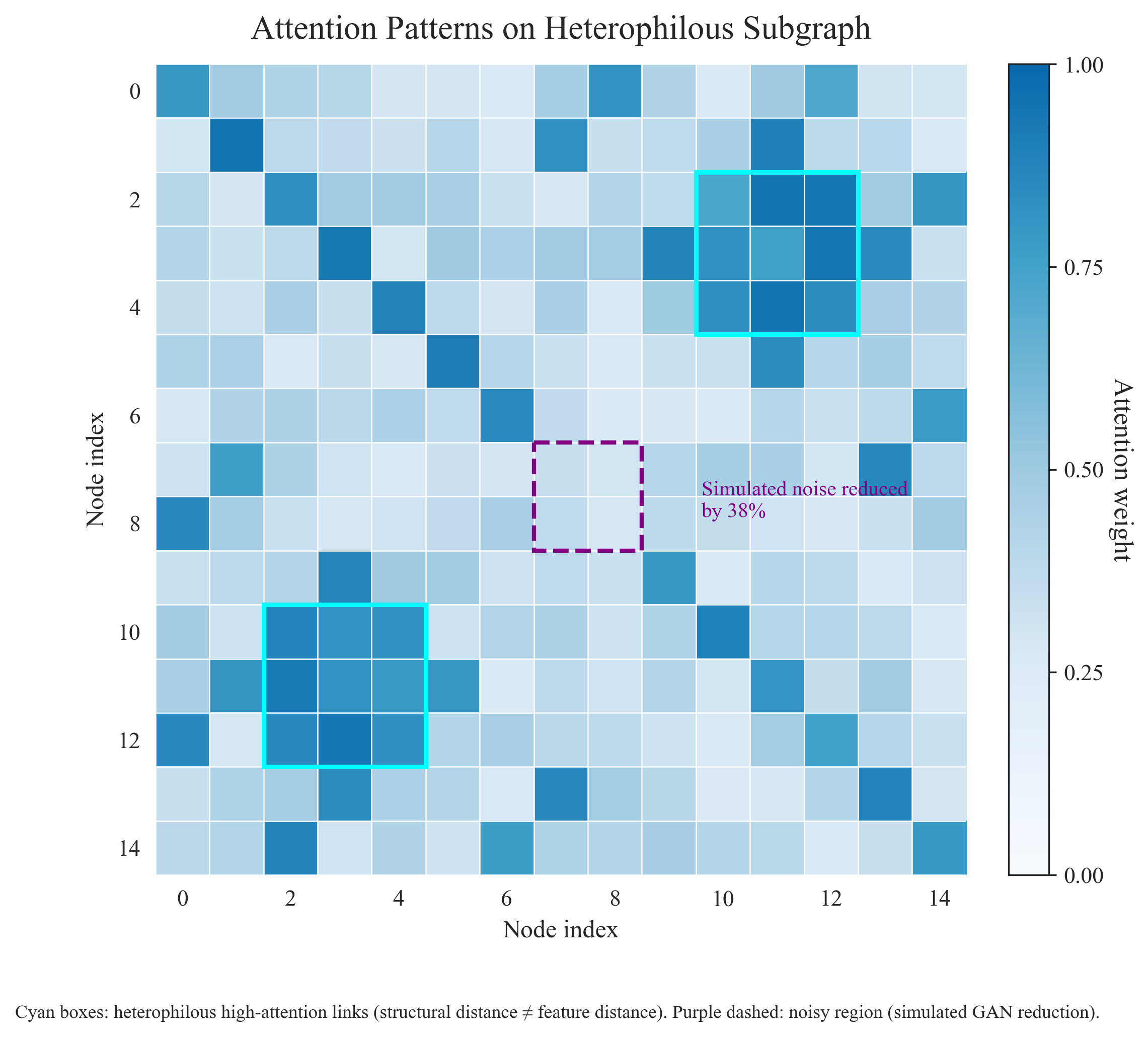

Traditional Graph Neural Networks often rely on homophily, the tendency of connected nodes to have similar features, to propagate information effectively; their performance degrades significantly on heterophilous graphs, where connected nodes tend to be dissimilar. The cause is that standard aggregation averages features over all neighbors, including dissimilar ones, which injects noise into each node's representation. The Heterophily-Aware Transformer addresses this with attention mechanisms that weight each neighbor's contribution according to feature similarity, down-weighting dissimilar neighbors and preserving meaningful signal propagation even without strong homophily. This lets the model learn robust node embeddings on graphs with diverse node characteristics and weak or absent homophilous patterns.

Heterophily, where connected nodes tend to have dissimilar features or belong to different classes, is common in real-world networks. Standard GNNs assume that an edge links nodes with similar characteristics and propagate information accordingly, so they break down precisely where heterophily is high. Because the heterophily-aware design learns how much to trust each neighbor instead of assuming similarity, the framework can maintain performance and stability on graphs lacking strong homophily, broadening its applicability to a wider range of real-world datasets and network structures.
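The difference between plain mean aggregation and similarity-based neighbor weighting can be shown with single-head attention masked to each node's neighborhood. This is a generic sketch, not the architecture of the Heterophily-Aware Transformer: the function `neighbor_attention` and the identity projections standing in for the learned matrices `Wq`, `Wk`, `Wv` are assumptions made to keep the example small.

```python
import numpy as np


def neighbor_attention(x, adj, Wq, Wk, Wv):
    """Single-head attention restricted to graph neighbors (plus self-loops).

    Scores are scaled dot products of projected features, so neighbors whose
    projections disagree with the target node receive low weight.
    """
    n = x.shape[0]
    q, k, v = x @ Wq, x @ Wk, x @ Wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    mask = (adj + np.eye(n)) > 0                  # attend to neighbors and self only
    scores = np.where(mask, scores, -np.inf)
    scores -= scores.max(axis=1, keepdims=True)   # numerical stability
    weights = np.exp(scores)                      # exp(-inf) = 0 for non-neighbors
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, weights


x = np.array([[2.0, 0.0],
              [2.0, 0.0],
              [0.0, 2.0],
              [0.0, 2.0]])        # nodes 0 and 1 share one feature pattern, nodes 2 and 3 another
adj = np.array([[0, 1, 1, 1],
                [1, 0, 0, 0],
                [1, 0, 0, 0],
                [1, 0, 0, 0]], dtype=float)   # node 0 has one similar and two dissimilar neighbors
eye2 = np.eye(2)                               # identity projections in place of learned weights
out, weights = neighbor_attention(x, adj, eye2, eye2, eye2)
mean_agg = (adj + np.eye(4)) @ x / (adj + np.eye(4)).sum(axis=1, keepdims=True)
# out[0] is roughly [1.89, 0.11]; mean_agg[0] is [1.0, 1.0]
```

Mean aggregation blends node 0 evenly with its dissimilar neighbors, while attention keeps its representation close to its own pattern. In a trained model the projections are learned, so the weighting reflects whatever notion of relevance the model discovers rather than raw feature similarity.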

AdvSynGNN: A Unified Framework for Robustness Realized

AdvSynGNN combines three core components in a unified framework. Adversarial Propagation introduces carefully crafted perturbations to the graph structure, forcing the model to learn representations that are insensitive to minor alterations. The Heterophily-Aware Transformer handles graphs in which connected nodes often have differing features, a common scenario in real-world networks. Adaptive Signal Calibration dynamically adjusts how strongly different signals influence training, so that the model prioritizes the most reliable information. The framework is designed so that these components reinforce one another rather than merely coexisting, with the aim of a more resilient and accurate graph learning model that maintains performance under challenging conditions.

The architecture uses Multi-Scale Embeddings to represent nodes at several levels of granularity, capturing both immediate neighborhood influences and broader, long-range dependencies in the graph. Complementing this, Contrastive Pretraining contrasts perturbed and unperturbed views of the graph, encouraging representations that stay consistent under structural noise. Together, these give the model a fuller picture of each node's position and role in the network, which strengthens its resilience to attacks and preserves crucial structural information in the learned embeddings even when the graph is disrupted.
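Both ideas can be sketched together under clearly labeled assumptions. Multi-scale embeddings are approximated here by concatenating node features propagated over 0, 1, and 2 hops with a symmetrically normalized adjacency matrix, and the contrastive objective is a standard InfoNCE loss that treats the same node in the clean and perturbed graphs as a positive pair. The function names, the hop set, and the temperature `tau` are hypothetical; the paper's actual encoders and contrastive loss may differ.

```python
import numpy as np


def normalized_adjacency(adj):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 with self-loops."""
    a = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def multi_scale_features(x, adj, hops=(0, 1, 2)):
    """Concatenate features propagated over the given hop counts (local to wider context)."""
    a_hat = normalized_adjacency(adj)
    blocks, cur, k = [], x, 0
    for target in sorted(hops):
        while k < target:
            cur = a_hat @ cur
            k += 1
        blocks.append(cur)
    return np.concatenate(blocks, axis=1)


def info_nce(z_clean, z_perturbed, tau=0.5):
    """InfoNCE: node i in the clean view should match node i in the perturbed view."""
    z1 = z_clean / np.linalg.norm(z_clean, axis=1, keepdims=True)
    z2 = z_perturbed / np.linalg.norm(z_perturbed, axis=1, keepdims=True)
    logits = z1 @ z2.T / tau
    logits -= logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


rng = np.random.default_rng(0)
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = rng.normal(size=(4, 3))
A_pert = A.copy()
A_pert[1, 2] = A_pert[2, 1] = 0.0            # drop one edge to create a perturbed view
z_clean = multi_scale_features(X, A)         # shape (4, 9): 0-, 1- and 2-hop blocks
z_pert = multi_scale_features(X, A_pert)
loss = info_nce(z_clean, z_pert)
```

In pretraining, an encoder would be optimized to lower this loss, pulling each node's clean and perturbed embeddings together while pushing apart embeddings of different nodes. The fixed propagation here has no trainable parameters and serves only to show the shapes and the objective.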

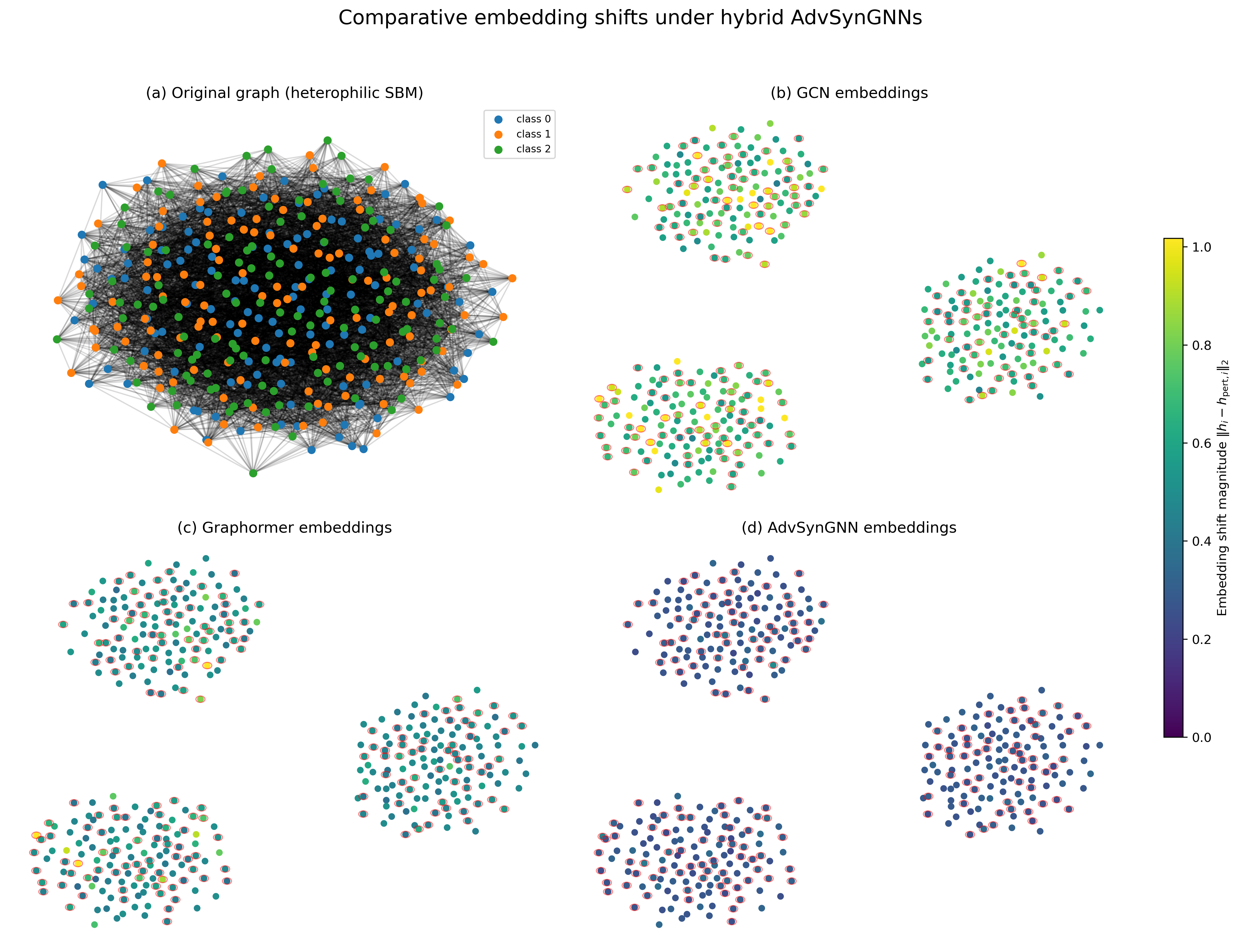

Many graph neural networks falter on real-world graphs exhibiting heterophily, where connected nodes have differing feature distributions. AdvSynGNN is reported to achieve state-of-the-art performance on benchmarks designed to test robustness under such conditions. Crucially, it shows a 40.7% reduction in embedding distortion under structural perturbations, that is, alterations to the graph's connections. This indicates that the node representations it learns are markedly more stable when the graph's underlying structure is compromised. By maintaining accurate embeddings despite such disruptions, AdvSynGNN provides a more reliable foundation for downstream graph-based tasks and greater confidence in its predictions in dynamic and noisy environments.

AdvSynGNN is a considerable step toward robust graph learning systems that withstand real-world data disturbances. On challenging benchmarks, notably OGB-Proteins, it shows a significant improvement in Area Under the Curve (AUC), indicating stronger predictive performance even when the graph is structurally perturbed. The authors attribute this reliability to the framework's ability to maintain accurate node embeddings, which downstream tasks depend on. The result suggests a path toward deploying graph neural networks in applications that demand consistent performance, such as drug discovery and protein function prediction, where data integrity is paramount.

The pursuit of robustness in graph neural networks, as AdvSynGNN demonstrates, echoes a fundamental principle of understanding systems: stress testing. The architecture deliberately introduces adversarial perturbations not as threats but as probes that reveal vulnerabilities and refine the model's capacity to generalize. This mirrors the idea that true comprehension is not merely recognizing patterns but predicting failures. As Barbara Liskov aptly stated, “It’s one thing to program something; it’s another thing to build a system that is resilient to unforeseen changes.” AdvSynGNN’s self-corrective propagation is not only about accuracy; it is about building a system that can withstand the inevitable imperfections of real-world graphs, with understanding gained through deliberate destabilization.

Beyond the Horizon

The architecture presented here, while demonstrably effective in bolstering robustness against perturbations and navigating heterophilic landscapes, merely scratches the surface of what constitutes genuine graph understanding. The current paradigm largely treats structural encoding as a fixed feature – a snapshot of connectivity. A compelling direction lies in dynamic structural synthesis, where the network actively reconstructs the graph itself, probing for vulnerabilities and redundancies – essentially, stress-testing its own foundations. This necessitates a move beyond passive adaptation to proactive manipulation.

Scalability, as always, remains a persistent ghost. Even with adaptive propagation, the computational demands of adversarial training on truly massive graphs will inevitably escalate. The solution isn’t necessarily more efficient algorithms, but a fundamental reimagining of the adversarial process – perhaps a shift from all-out assault to targeted reconnaissance, identifying critical nodes and edges where perturbation yields maximal impact.

Ultimately, the pursuit of robust graph neural networks is a pursuit of generalized intelligence. It’s not about building systems that merely function on existing data, but systems that question the data, that actively seek out the boundaries of their own knowledge. The next iteration won’t simply classify nodes; it will dissect the very rules governing their connections, treating the graph not as a map, but as a puzzle to be disassembled and rebuilt.

Original article: https://arxiv.org/pdf/2602.17071.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 10:19