Author: Denis Avetisyan

New research explores how understanding the unique communication styles of older adults can lead to more effective and accessible AI-powered technology support.

This review examines the potential of foundation models and synthetic data to create age-inclusive digital technology experiences for older adults.

Despite increasing digital access, older adults often face unique challenges articulating technology-related issues, hindering effective support. This research, detailed in ‘Empowering Older Adults in Digital Technology Use with Foundation Models’, investigates these communication barriers and explores how foundation models can bridge them. Our findings demonstrate that AI-driven paraphrasing of older adults’ queries significantly improves solution accuracy and understanding, facilitated by a novel synthetic dataset designed to reflect their specific support requests. Could this approach pave the way for more equitable and accessible AI systems that genuinely empower aging populations in a digital world?

Decoding the Digital Divide: Why Support Systems Fail

Older adults increasingly rely on technology for essential tasks, yet current support systems frequently struggle to meet their needs, leading to frustration and limited adoption. This isn’t necessarily a matter of technical skill, but rather a breakdown in communication; support often assumes a level of digital fluency or a specific understanding of system logic that doesn’t align with how older adults approach problem-solving. Traditional troubleshooting relies heavily on jargon and abstract concepts, while effective support requires translating technical language into everyday terms and acknowledging the user’s perspective. Studies indicate that mismatched communication styles can hinder comprehension, increase errors, and ultimately prevent older adults from fully benefiting from digital tools, highlighting the need for more user-centered and empathetically designed support strategies.

The effective use of digital technology often stumbles not on a lack of technical skill, but on a fundamental disconnect between what a user intends to accomplish and how a system interprets that request. This challenge is particularly acute for older adults, where age-related cognitive shifts – such as decreased working memory or processing speed – can widen the gap between intention and execution. Subtle difficulties in formulating precise queries, remembering multi-step procedures, or adapting to unexpected system responses can quickly lead to frustration and abandonment. Consequently, seemingly simple tasks become significantly more demanding, highlighting the need for interfaces and support systems designed to proactively anticipate user needs and accommodate variations in cognitive function, rather than assuming a uniform level of digital literacy and cognitive agility.

Effective interaction with digital tools isn’t simply about technical skill, but fundamentally about how a user thinks the system works – their internal Mental Model. These models, built from prior experiences and expectations, often diverge from the actual logic of software and devices. For older adults, age-related changes in cognitive processing can amplify these discrepancies, leading to misunderstandings and frustration. A user might, for example, believe clicking an icon will achieve a different outcome than the system intends, or struggle to conceptualize information presented in a non-intuitive way. Consequently, even seemingly simple tasks can become challenging when a user’s Mental Model clashes with the system’s design, highlighting the importance of interfaces that align with established user expectations and accommodate diverse cognitive approaches.

AI as a Lever: Automating Empathy in Tech Support

Large Language Models (LLMs) present a significant opportunity to enhance technology support operations through automation. These AI systems are trained on massive datasets of text and code, enabling them to understand and generate human-like responses to complex technical inquiries. This capability facilitates the development of virtual assistants and chatbots capable of resolving common issues, triaging tickets, and providing step-by-step guidance, thereby reducing the workload on human support agents. LLMs can also analyze support requests to identify trends, predict potential problems, and proactively offer solutions, ultimately improving overall service quality and customer satisfaction. The application of LLMs extends beyond simple question answering to include tasks such as code generation for troubleshooting, documentation summarization, and automated report creation.

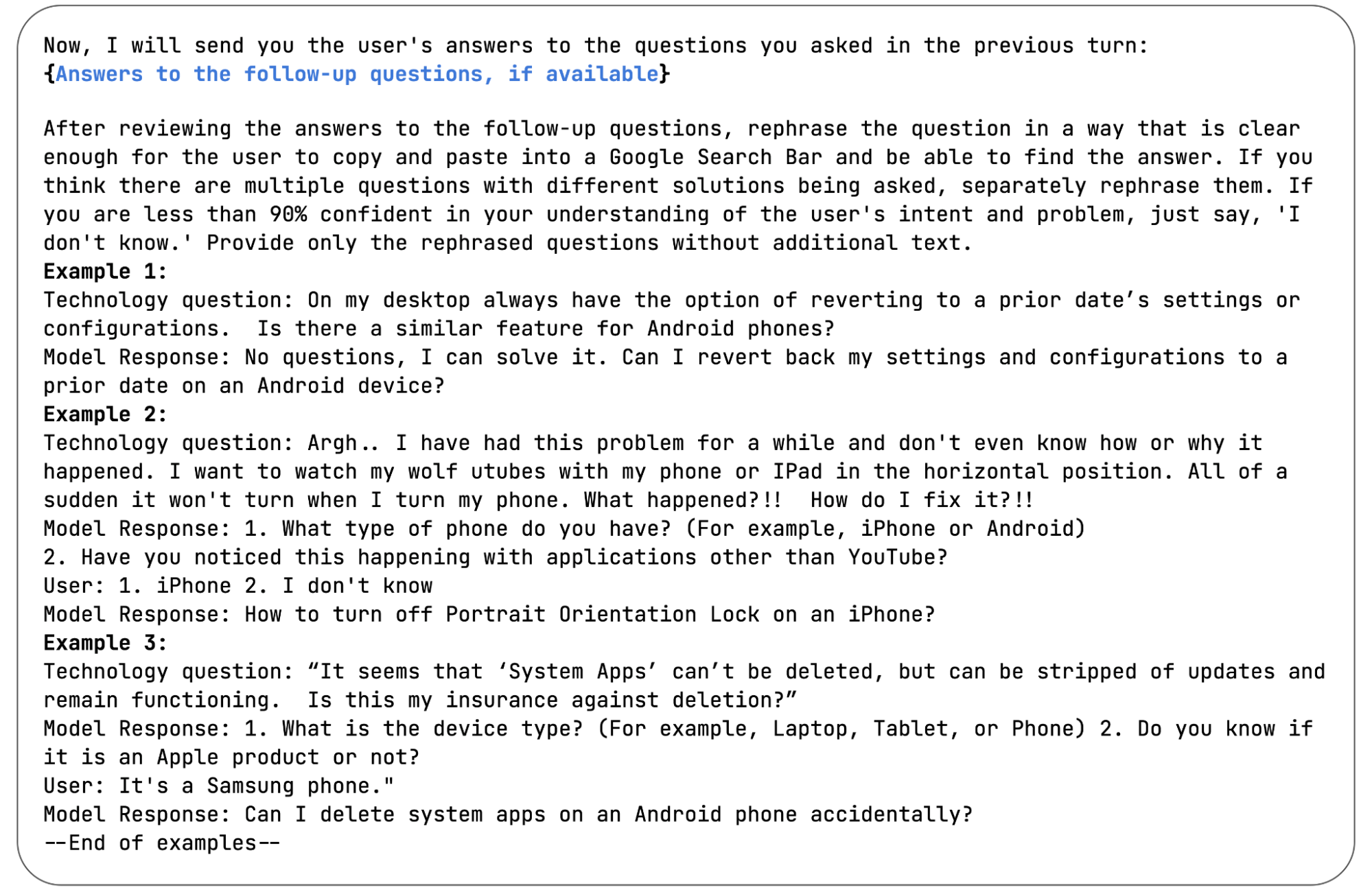

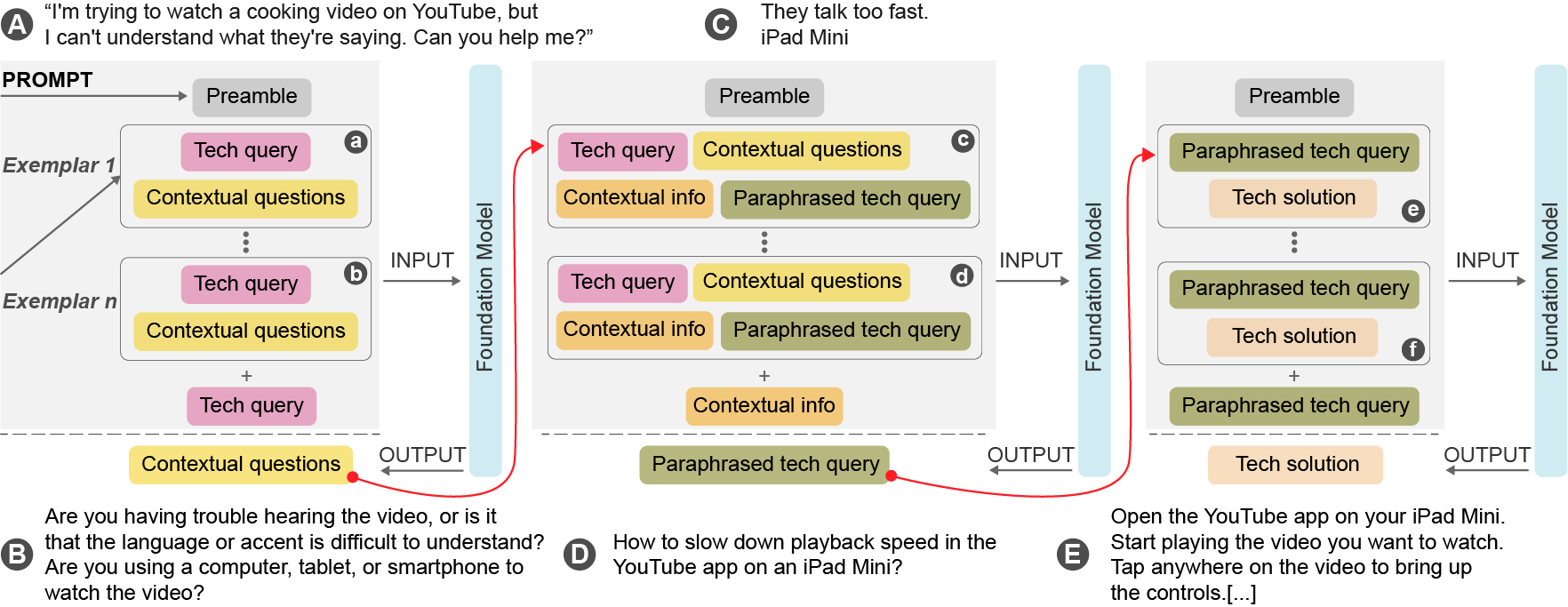

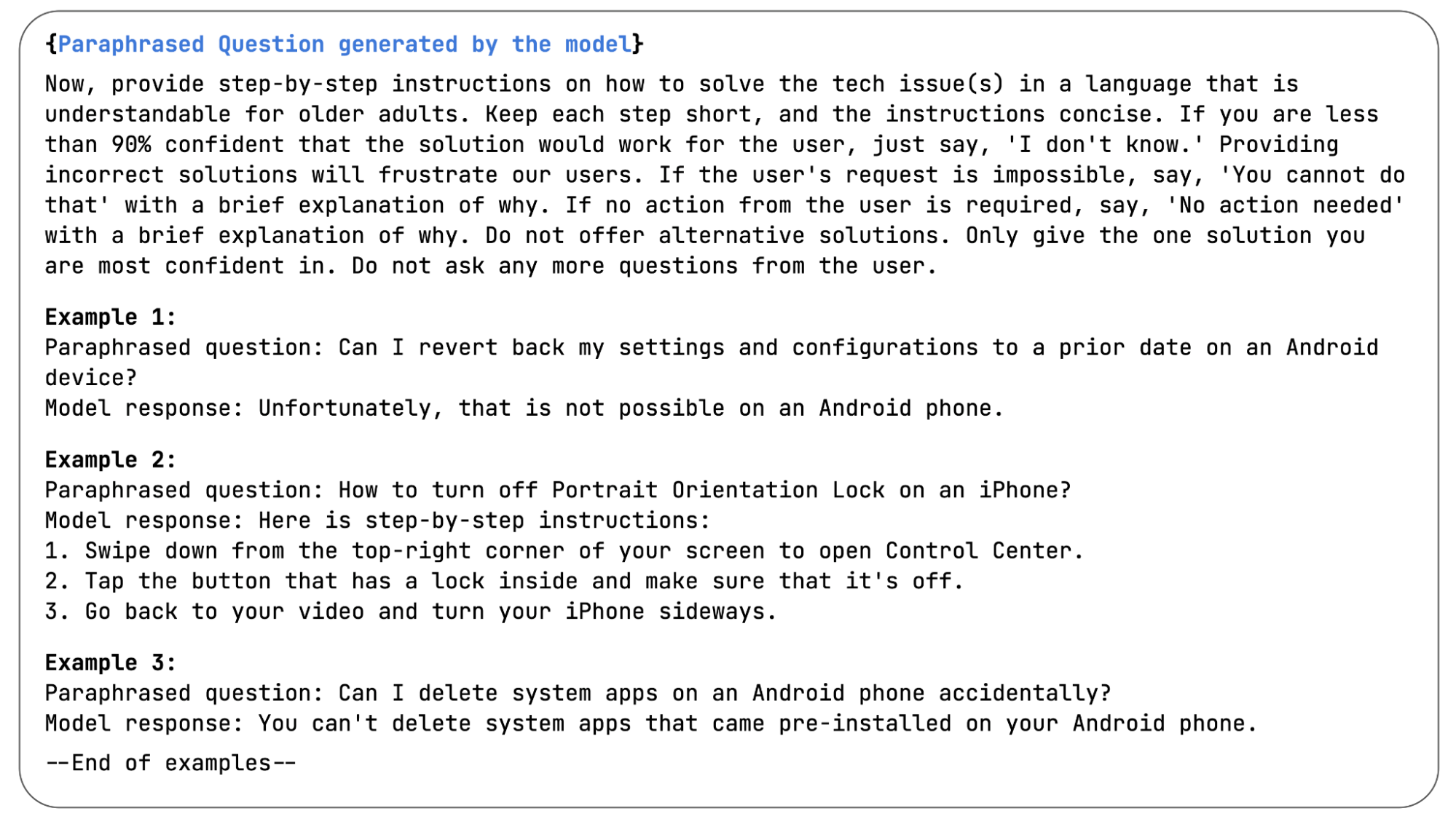

Few-shot prompt chaining is a technique that leverages the ability of Large Language Models (LLMs) to generalize from a limited number of examples. Rather than requiring substantial datasets for training, this method constructs a sequence of prompts, where the output of one prompt becomes the input for the next. Each prompt in the chain is designed to progressively refine the model’s understanding of a specific task. This approach significantly reduces the need for extensive, labeled training data, allowing for rapid adaptation to new or niche support requests by providing only a few illustrative examples within the initial prompts. The LLM then uses these examples to infer the desired behavior and apply it to subsequent, unseen inputs, effectively ‘learning’ the task on-the-fly.
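To make the chaining idea concrete, here is a minimal sketch in Python. It is not the pipeline from the paper: the example queries, the prompt wording, and the names `build_paraphrase_prompt`, `build_solution_prompt`, `run_chain`, and `fake_llm` are all assumptions made for illustration. The model is passed in as a plain callable, so any real client could be substituted for the offline stand-in.

```python
from typing import Callable, List, Tuple

# Hypothetical few-shot examples (original request, clearer paraphrase).
# These are illustrative only and are not taken from the OATS dataset.
FEW_SHOT_EXAMPLES: List[Tuple[str, str]] = [
    ("my pictures went away on the phone",
     "Photos are missing from the phone's gallery app."),
    ("the internet thing stopped",
     "The device has lost its Wi-Fi connection."),
]


def build_paraphrase_prompt(query: str) -> str:
    """Step 1 prompt: show a few examples, then ask for a rewrite."""
    lines = ["Rewrite each support request as a clear, specific problem statement.", ""]
    for original, paraphrase in FEW_SHOT_EXAMPLES:
        lines.append(f"Request: {original}")
        lines.append(f"Rewritten: {paraphrase}")
        lines.append("")
    lines.append(f"Request: {query}")
    lines.append("Rewritten:")
    return "\n".join(lines)


def build_solution_prompt(paraphrased: str) -> str:
    """Step 2 prompt: ask for steps, using the output of step 1 as input."""
    return (
        "Give short, numbered steps that solve this problem.\n"
        f"Problem: {paraphrased}\n"
        "Steps:"
    )


def run_chain(query: str, llm: Callable[[str], str]) -> str:
    """Chain two prompts: the paraphrase from step 1 feeds step 2."""
    paraphrased = llm(build_paraphrase_prompt(query)).strip()
    return llm(build_solution_prompt(paraphrased)).strip()


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    if prompt.rstrip().endswith("Rewritten:"):
        return "The email app does not open."
    return "1. Restart the device.\n2. Open the email app again."


if __name__ == "__main__":
    print(run_chain("my mail won't come up", fake_llm))
```

Keeping the model behind a callable makes each link of the chain easy to inspect and test on its own, which helps when checking whether the paraphrasing step or the solution step is responsible for a poor answer.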

GPT-4o functions as the core language model powering our technology support solution, facilitating more human-like conversational interactions. Evaluations show that solutions were accurate 69% of the time when generated from GPT-4o-paraphrased queries, compared with 35% for the original user queries. This improvement stems from the model’s ability to interpret intent and rephrase requests, reducing ambiguity and enhancing the precision of responses delivered to users. The model’s architecture allows for nuanced understanding of complex technical issues, leading to more effective and efficient support resolutions.

OATS & OS-ATLAS: Benchmarking AI for the Silver Generation

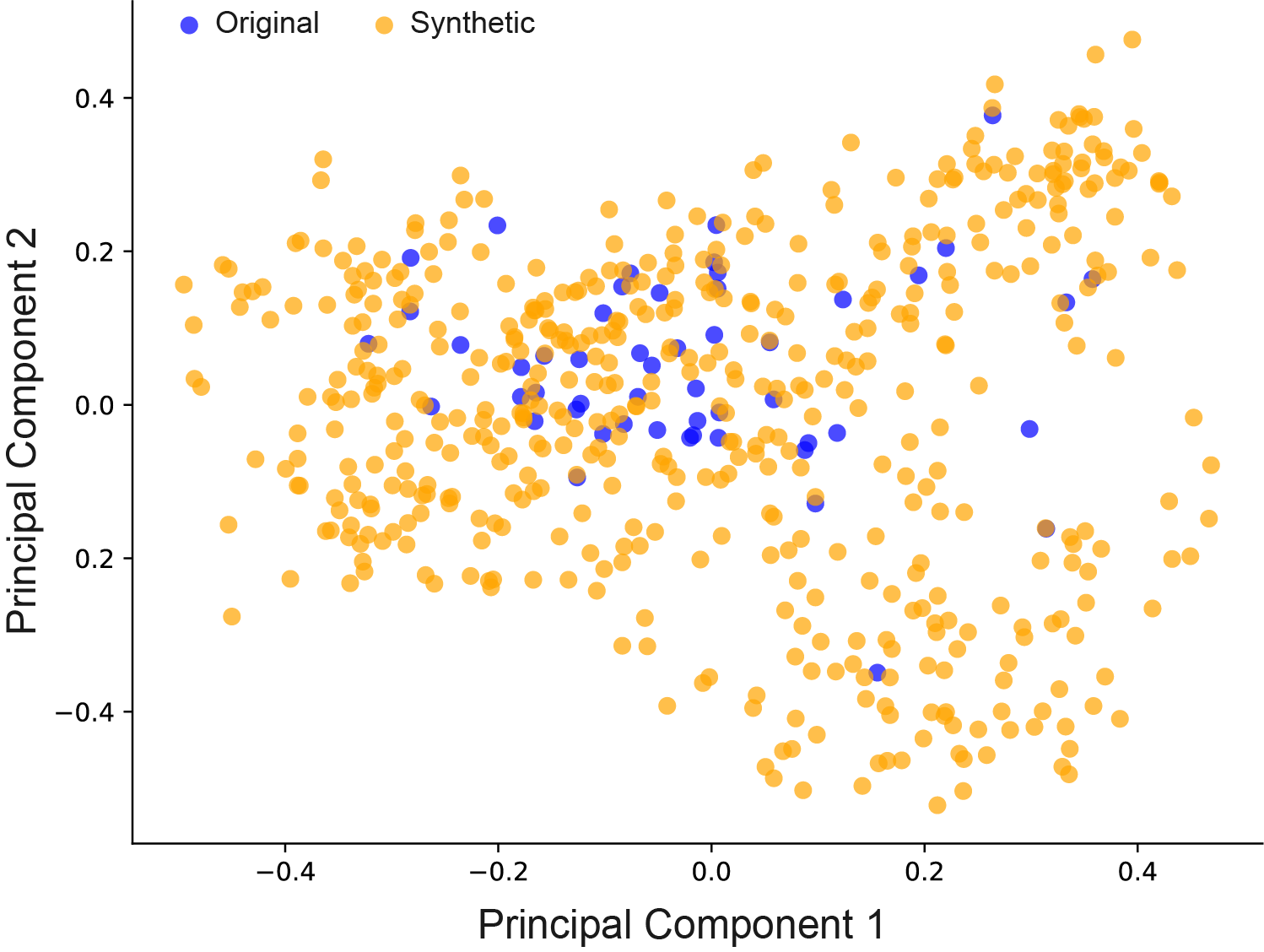

The OATS (Older Adult Tech Support) Dataset was created to specifically evaluate the performance of AI systems addressing the technical support needs of older adults. This synthetic dataset differs from existing resources by focusing on realistic scenarios and challenges commonly encountered by this demographic, including issues with smartphones, tablets, and smart home devices. The dataset was generated to include a diverse range of queries, reflecting variations in user technical literacy and phrasing, and is designed to facilitate a more nuanced assessment of AI response quality beyond general chatbot benchmarks. The creation of OATS addresses a gap in existing evaluation resources which often lack representation of the unique support requirements of older users.

Unlike general-purpose datasets, OATS focuses on realistic scenarios derived from the tech support queries this demographic commonly raises. These cover device setup, application usage, and troubleshooting, with an emphasis on the phrasing and complexity of language used by older adults. The dataset’s construction involved identifying frequently encountered problems and formulating queries that reflect the typical ways older users articulate these issues, thereby providing a more representative benchmark for evaluating AI performance in this critical support area.

OS-ATLAS is a GUI action model designed to establish a consistent and objective method for evaluating the quality of AI responses to tech support queries. This model functions as a benchmark against which AI performance can be measured, specifically assessing both the completeness (whether all necessary steps are included) and the accuracy of the suggested actions. In comparative testing on the OATS dataset, our AI approach achieved a ROUGE-L score of 0.72, compared with 0.30 for OS-ATLAS itself on the same metric. This difference indicates a significant improvement in the AI’s ability to generate effective and comprehensive solutions.
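For readers unfamiliar with ROUGE-L, the sketch below shows one common way to compute it: as an F1 score over the longest common subsequence (LCS) of tokens shared by a reference answer and a candidate answer. The source does not say which ROUGE-L variant or tokenization was used, so the lowercased whitespace tokenization and the F1 form here are assumptions, and the function names are illustrative.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference: str, candidate: str) -> float:
    """ROUGE-L as the F1 of LCS-based precision and recall.

    Assumes lowercased whitespace tokenization, which may differ
    from the setup used in the paper.
    """
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = "open settings then tap wifi"
    candidate = "open settings and tap wifi"
    print(round(rouge_l_f1(reference, candidate), 2))
```

Because the score rewards tokens that appear in the same order as in the reference, it is a reasonable fit for step-by-step instructions, where the sequence of actions matters as much as the words themselves.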

The Perils of Precision: Unmasking Communication Bottlenecks

Large language models, despite their advanced capabilities, frequently encounter communication barriers stemming from imbalances in information delivery. Research indicates a tendency toward excessive verbosity, where responses contain unnecessary detail that obscures core information. These models also struggle with both over-specification, providing extraneous, hyper-detailed instructions, and under-specification, failing to include crucial contextual details. Either imbalance hinders effective communication, as users may become overwhelmed by superfluous information or frustrated by responses lacking essential clarity. The resulting ambiguity impacts problem-solving, demonstrating that simply generating text does not equate to clear and actionable communication.

The propensity of large language models to generate overly verbose or insufficiently detailed responses directly impacts user experience, often leading to confusion and frustration. This negative correlation is particularly pronounced when the model’s ability to articulate actionable steps – its ‘Action Verbal Fluency’ – diminishes. A decline in this fluency doesn’t simply mean more abstract explanations; it manifests as responses that lack the necessary precision for users to successfully implement suggested solutions. Consequently, individuals may struggle to interpret the AI’s output, requiring significant effort to translate generalized advice into concrete actions, or abandoning the interaction altogether due to the perceived lack of utility and clarity.

Analysis reveals that incomplete responses from artificial intelligence systems significantly impede problem-solving, a phenomenon linked to communication barriers. Evaluations using BLEU, a metric originally developed for assessing the quality of machine-translated text, showed the AI consistently paraphrased queries with high fidelity, achieving a score of 0.95 against 0.85 for the OS-ATLAS system. Crucially, this enhanced clarity translated to practical results: the AI generated accurate solutions 69% of the time from paraphrased queries, a substantial improvement over the 35% accuracy rate observed when responding to original, potentially ambiguous, queries. These findings underscore the importance of complete and well-defined responses in maximizing the effectiveness of AI-driven problem-solving.
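As a rough illustration of what a BLEU score measures, the sketch below computes a simplified sentence-level BLEU: clipped n-gram precision, combined by geometric mean and scaled by a brevity penalty. It uses a single reference, no smoothing, and lowercased whitespace tokenization. These choices, the function names, and the `max_n` parameter are assumptions for illustration, and the evaluation behind the reported scores may have been configured differently.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(reference: str, candidate: str, max_n: int = 4) -> float:
    """Simplified single-reference BLEU without smoothing.

    Returns 0.0 if any n-gram order up to max_n has no match.
    """
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count at its count in the reference.
        matched = sum(min(count, ref_counts[gram])
                      for gram, count in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0
        log_precisions.append(math.log(matched / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    if len(cand) >= len(ref):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    reference = "the wifi is not working"
    candidate = "the wifi is not connecting"
    print(round(bleu(reference, candidate, max_n=2), 3))
```

Because BLEU rewards surface overlap, a high score for a paraphrase indicates that it stays close to the wording of the reference. It does not show on its own that the paraphrase is easier to act on, which is why the accuracy comparison above is the more practical signal.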

The pursuit of genuinely helpful AI, as detailed in this research, isn’t about flawless execution, but about anticipating the nuances of human interaction. It acknowledges that established systems often fall short because they’re built on assumptions, not observation. This resonates deeply with Tim Berners-Lee’s sentiment: “The Web is more a social creation than a technical one.” The article’s focus on synthetic data, designed to mirror the natural language patterns of older adults seeking tech support, exemplifies this principle. By deliberately challenging the limitations of existing datasets – and, by extension, the AI they produce – the research aims to build a more inclusive digital world, one interaction at a time. It’s a recognition that true innovation requires questioning the foundations upon which systems are built.

Uncharted Territories

The pursuit of age-inclusive AI, as illuminated by this work, reveals a fundamental truth: simplification is often a constraint, not a solution. To model assistance for older adults using foundation models is not merely a technical exercise in natural language processing, but an attempt to reverse-engineer the very architecture of help-seeking behavior. The synthetic dataset, while a valuable step, merely maps the observable patterns; the unspoken assumptions and contextual nuances remain a shadowed landscape. This raises the question: how much of ‘natural’ communication is actually a learned performance, and how much is a deeply embedded cognitive strategy?

Future iterations should embrace the inherent messiness of real-world interaction. The current focus on clarity, while well-intentioned, risks stripping away the very cues that allow humans to detect misunderstanding and adapt. Perhaps the most fruitful path lies not in predicting what an older adult will ask, but in building systems that are exquisitely sensitive to how they ask it – the hesitations, the self-corrections, the indirect requests.

Ultimately, this research isn’t about building better tools; it’s about understanding a fundamental human vulnerability – the moment of technological dependence. And it is in that vulnerability that the most interesting challenges, and the most profound insights, reside. The true test will not be whether these systems work, but whether they subtly alter the relationship between a person and the technology they rely upon.

Original article: https://arxiv.org/pdf/2601.10018.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-18 00:45