Author: Denis Avetisyan

Researchers have developed a novel model, Dualformer, that analyzes time series data in both the time and frequency domains to significantly improve long-term prediction accuracy.

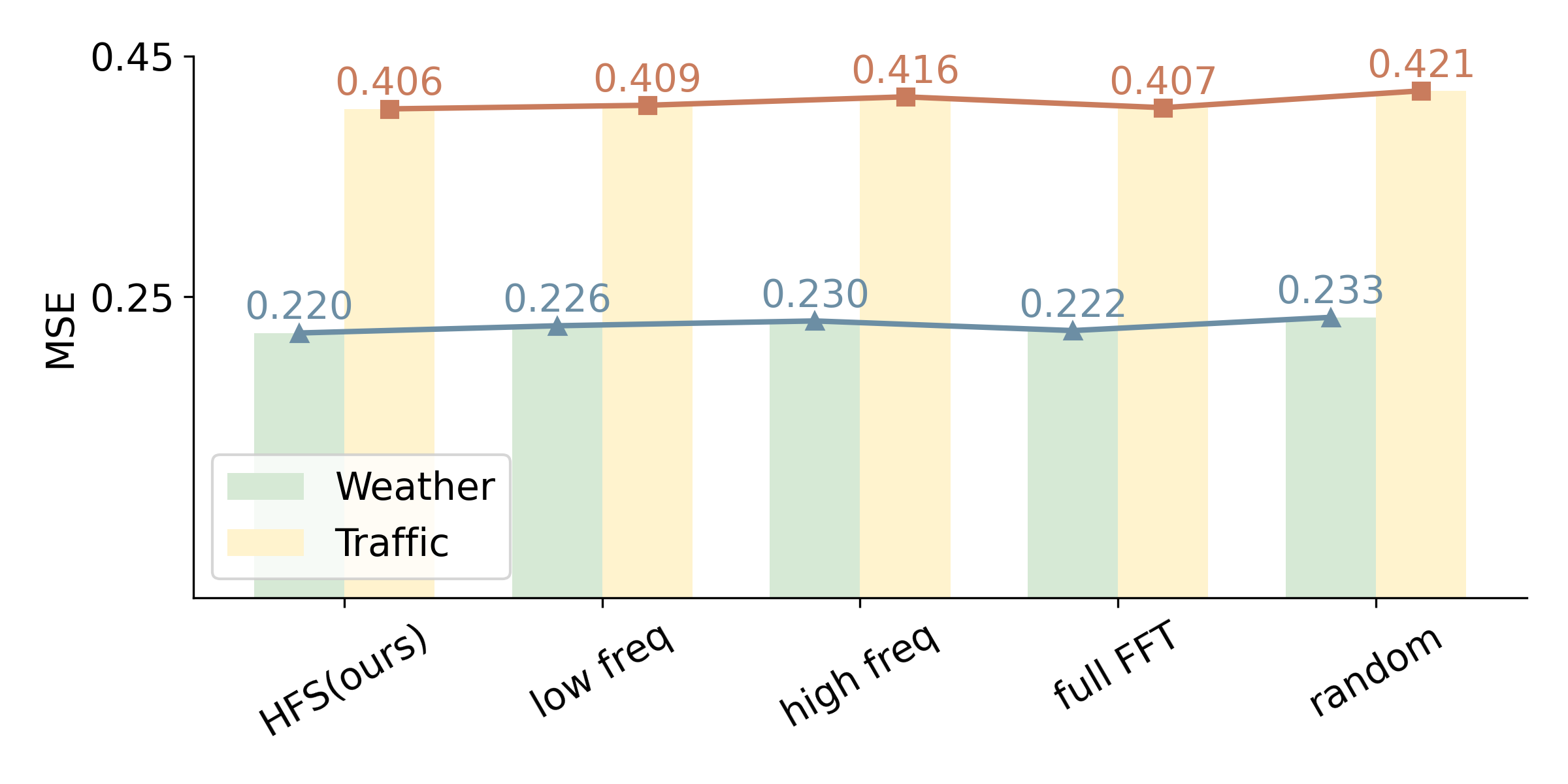

Dualformer leverages hierarchical frequency sampling to address inherent biases in Transformer models, achieving state-of-the-art results on standard time series benchmarks.

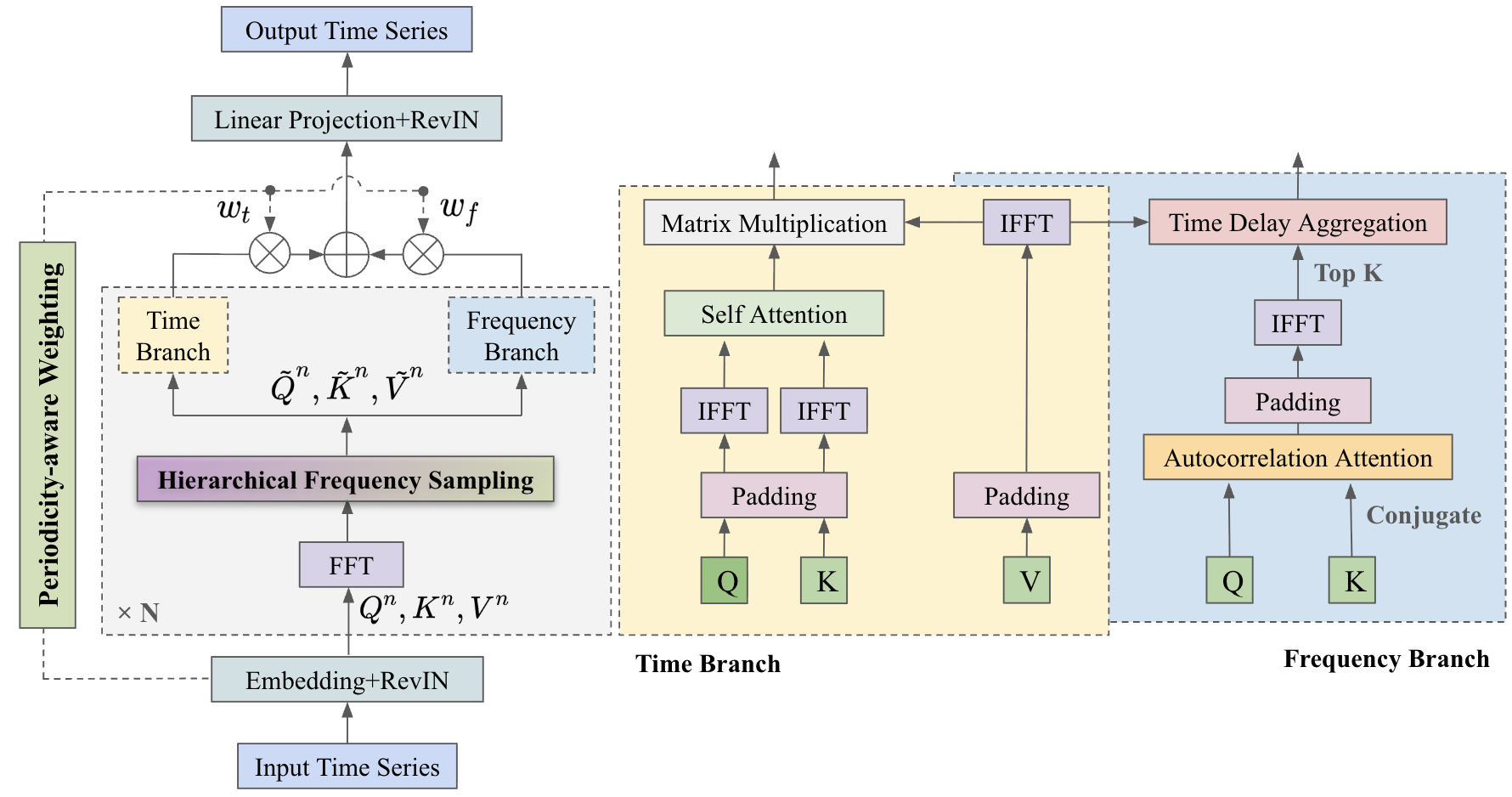

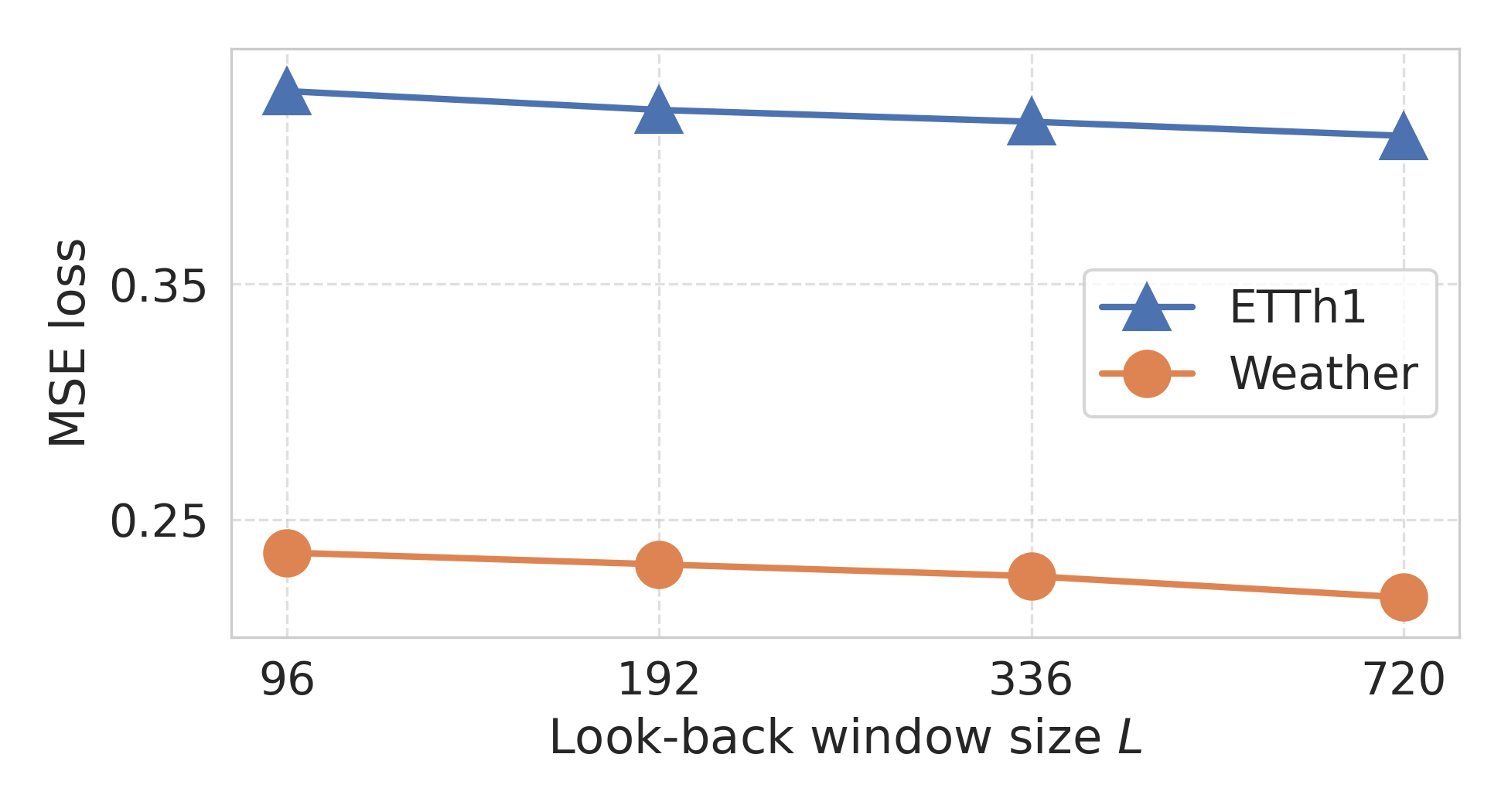

Despite the promise of Transformer-based models for long-term time series forecasting, their inherent low-pass filtering effect can limit performance by attenuating crucial high-frequency information. To address this, we introduce ‘Dualformer: Time-Frequency Dual Domain Learning for Long-term Time Series Forecasting’, a novel framework that explicitly models temporal dynamics in both time and frequency domains via a dual-branch architecture and hierarchical frequency sampling. This approach preserves high-frequency details while capturing long-range dependencies, enabling more accurate and robust forecasts, particularly for complex or weakly periodic data. Can this dual-domain learning strategy unlock new capabilities in time series analysis and forecasting beyond traditional methods?

The Inevitable Horizon of Prediction

The ability to accurately predict trends extending far into the future – long-term time series forecasting – underpins critical decision-making across numerous fields, from economic planning and resource management to climate modeling and epidemiological projections. However, achieving this predictive power is remarkably difficult due to the inherent complexities embedded within real-world data; these include non-stationarity, seasonality, the presence of outliers, and, crucially, the intricate, often chaotic, interplay of numerous influencing factors. While short-term forecasts can often leverage recent patterns, extending predictions further requires modeling these complex relationships and anticipating shifts in underlying dynamics, a task that quickly becomes computationally expensive and susceptible to error as the forecast horizon lengthens. Consequently, despite advancements in statistical modeling and machine learning, reliable long-term forecasting remains a substantial scientific and practical challenge.

Conventional time series forecasting techniques, such as autoregressive integrated moving average (ARIMA) and exponential smoothing, frequently exhibit diminished accuracy when predicting values far into the future. This performance decline stems from their limited capacity to effectively model long-range dependencies within the data – subtle relationships spanning extended periods. These methods typically excel at capturing immediate, short-term patterns, but struggle to propagate information across numerous time steps, causing errors to accumulate as the forecast horizon lengthens. Consequently, predictions for distant future values become increasingly unreliable, highlighting the need for more sophisticated approaches capable of retaining and utilizing information from the distant past to inform present and future estimates. The inherent difficulty in capturing these dependencies represents a fundamental challenge in long-term forecasting, prompting research into techniques like recurrent neural networks and transformers designed to overcome these limitations.

The Dual Nature of Temporal Insight

Dualformer utilizes a time-frequency dual-domain framework to improve time series forecasting accuracy by integrating both temporal and frequency-based analyses. Traditional time series models primarily operate within the time domain, while frequency-domain methods, such as the Fourier transform, can effectively identify and isolate periodic components. Dualformer aims to combine the strengths of both approaches; time-domain analysis captures sequential dependencies and trends, while frequency-domain analysis excels at recognizing recurring patterns, even those obscured within complex data. This integrated framework allows the model to leverage complementary information, leading to a more robust and accurate representation of the underlying time series dynamics and improved forecasting performance.

Where purely temporal models can miss periodic structure, Dualformer analyzes the series in both domains at once. Decomposing the series into its frequency constituents, that is, identifying the dominant frequencies and their amplitudes, lets the model represent seasonality and cyclical patterns explicitly. The frequency view exposes dependencies that can be obscured in the time-based representation, which helps most on data with strong periodic behavior or several interacting seasonalities.
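To make the frequency decomposition concrete, here is a minimal NumPy sketch that finds the dominant frequencies of a toy series and their amplitudes. It illustrates the general idea only and is not Dualformer's code; the function name `dominant_frequencies` and the toy signal are assumptions made for this example.

```python
import numpy as np

def dominant_frequencies(x, k=3, sample_spacing=1.0):
    """Return the k strongest non-DC frequencies and their amplitudes."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    spectrum = np.fft.rfft(x - x.mean())       # remove the mean so the DC bin is ~0
    freqs = np.fft.rfftfreq(n, d=sample_spacing)
    amps = 2.0 * np.abs(spectrum) / n          # one-sided amplitude scaling
    top = np.argsort(amps)[::-1][:k]           # indices of the k largest amplitudes
    return freqs[top], amps[top]

# Toy series (hypothetical): a period-24 cycle plus a weaker period-12 cycle.
t = np.arange(240)
series = 2.0 * np.sin(2 * np.pi * t / 24) + 0.5 * np.sin(2 * np.pi * t / 12)

freqs, amps = dominant_frequencies(series, k=2)
periods = 1.0 / freqs                          # convert frequency to period length
print(periods, amps)
```

Both cycles fall exactly on FFT bins here, so the recovered periods and amplitudes match the ones used to build the signal. Real data rarely aligns this neatly, and spectral leakage will spread energy across neighboring bins.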

Dualformer’s dual-branch architecture utilizes hierarchical frequency sampling to distribute frequency components across layers based on their importance. The process begins with a comprehensive frequency analysis of the input time series. These frequency components are then strategically assigned to different layers within the network; lower layers typically process high-frequency components responsible for capturing short-term fluctuations, while higher layers handle low-frequency components representing long-term trends and periodicities. This intelligent allocation minimizes redundancy and optimizes computational efficiency by ensuring each layer focuses on a specific frequency band relevant to its processing level. The hierarchical structure also facilitates the capture of multi-scale patterns by allowing interactions between frequency components at different levels of abstraction.
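The allocation of frequency bands to layers can be sketched as follows. This is a simplified illustration under stated assumptions: equal-width bands with hard masks, and the highest band assigned to the first layer as the paragraph above describes. The paper's actual sampling rule may differ, and `split_into_layer_bands` is a hypothetical helper.

```python
import numpy as np

def split_into_layer_bands(x, num_layers):
    """Partition the spectrum of x into num_layers contiguous bands.

    Layer 0 receives the highest band and the last layer the lowest.
    Returns one time-domain component per layer.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    spectrum = np.fft.rfft(x)
    # Equal-width band edges over the FFT bins (an assumption for this sketch).
    edges = np.linspace(0, len(spectrum), num_layers + 1).astype(int)
    components = []
    for layer in range(num_layers):
        band = num_layers - 1 - layer                  # layer 0 -> highest band
        lo, hi = edges[band], edges[band + 1]
        masked = np.zeros_like(spectrum)
        masked[lo:hi] = spectrum[lo:hi]                # keep only this band
        components.append(np.fft.irfft(masked, n=n))   # back to the time domain
    return components

# Toy input (hypothetical): a slow period-48 trend plus a fast period-4 oscillation.
t = np.arange(96)
slow = np.sin(2 * np.pi * t / 48)
fast = 0.3 * np.sin(2 * np.pi * t / 4)
parts = split_into_layer_bands(slow + fast, num_layers=2)
```

Because the bands partition the spectrum, the per-layer components sum back to the original series. In this toy case the first layer sees only the fast oscillation and the second only the slow trend.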

Adapting to the Rhythm of the Data

Dualformer employs a Periodicity-Aware Weighting mechanism that modulates the contribution of its time and frequency domain processing branches. This dynamic adjustment is achieved by calculating a weighting factor based on the input signal’s characteristics; signals exhibiting strong periodicity receive a higher weighting for the frequency branch, while aperiodic signals prioritize the time branch. This allows the model to adapt its processing strategy, effectively leveraging the strengths of each branch based on the specific properties of the input data and optimizing the overall fusion process. The weighting is not static; it is computed for each input segment, enabling granular adaptation to varying signal characteristics within a single data stream.

Dualformer uses the Harmonic Energy Ratio (HER) to compute these weights. HER is the ratio of the energy contained in the harmonic components of the input signal to the total signal energy; a higher value indicates stronger periodicity. Signals with high HER give more weight to the frequency branch, which is suited to analyzing periodic components, while signals with low HER give more weight to the time branch. The fusion therefore follows the periodicity of each input, which improves the model's ability to represent diverse time series characteristics.
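One way to compute such a ratio and use it for fusion is sketched below. The details are assumptions, not the paper's definition: the fundamental is taken as the strongest non-DC FFT bin, harmonics are its integer multiples, and the HER value is used directly as the frequency-branch weight. The names `harmonic_energy_ratio` and `fuse` are hypothetical.

```python
import numpy as np

def harmonic_energy_ratio(x, max_harmonics=5):
    """Energy at the fundamental and its harmonics divided by total energy."""
    x = np.asarray(x, dtype=float)
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    power[0] = 0.0                                # ignore the DC bin
    total = power.sum()
    if total <= 1e-12:                            # (near-)constant series
        return 0.0
    fundamental = int(np.argmax(power))           # strongest bin as fundamental
    harmonic_bins = [fundamental * h
                     for h in range(1, max_harmonics + 1)
                     if fundamental * h < len(power)]
    return float(power[harmonic_bins].sum() / total)

def fuse(time_out, freq_out, her):
    """Convex combination: high HER favors the frequency branch."""
    return her * freq_out + (1.0 - her) * time_out

t = np.arange(128)
periodic = np.sin(2 * np.pi * t / 16)             # strongly periodic input
noise = np.random.default_rng(0).standard_normal(128)  # aperiodic input

her_periodic = harmonic_energy_ratio(periodic)
her_noise = harmonic_energy_ratio(noise)
```

A pure sinusoid concentrates nearly all its energy at one bin, so its ratio is close to 1, while white noise spreads energy across the spectrum and scores much lower. Computing the ratio per input window gives the per-segment adaptation described in the text.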

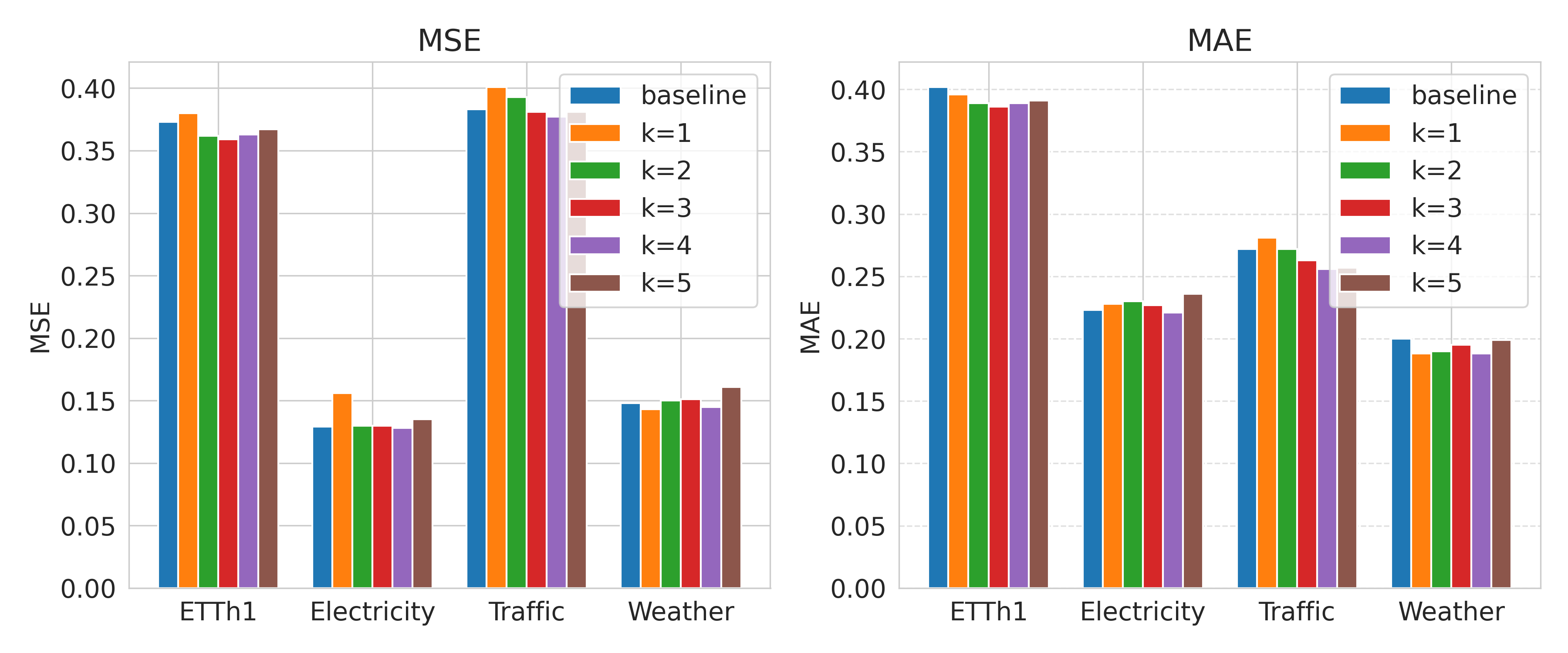

Performance evaluations show that Dualformer achieves lower Mean Squared Error (MSE) than current state-of-the-art models in most tested scenarios. Across eight benchmark datasets, it outperformed these models in 13 of 16 average cases. This consistent improvement, measured by MSE, supports the effectiveness of the architecture for long-term forecasting.

To limit information loss from normalization, the Dualformer architecture incorporates Reversible Instance Normalization (RIN). RIN normalizes each input window by its own statistics and restores those statistics on the model's output, so what normalization removes can be recovered. The design also targets the Low-Pass Filtering Effect, the tendency of stacked Transformer layers to attenuate high-frequency signal components. Hierarchical frequency sampling counteracts this effect, preserving fine signal detail and contributing to improved performance across datasets.
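Reversible instance normalization is commonly implemented by normalizing each input window with its own mean and standard deviation and then restoring those statistics on the model output. The sketch below follows that common pattern without learnable affine parameters. It is an assumption about the general technique, not Dualformer's exact layer, and the class name is hypothetical.

```python
import numpy as np

class ReversibleInstanceNorm:
    """Per-instance normalization whose statistics can be restored later."""

    def __init__(self, eps=1e-5):
        self.eps = eps
        self.mean = None
        self.std = None

    def normalize(self, x):
        # x has shape (batch, time, channels); statistics are computed
        # over the time axis, separately for each instance and channel.
        self.mean = x.mean(axis=1, keepdims=True)
        self.std = np.sqrt(x.var(axis=1, keepdims=True) + self.eps)
        return (x - self.mean) / self.std

    def denormalize(self, y):
        # Put the stored scale and offset back onto the model output.
        return y * self.std + self.mean

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(2, 32, 4))

revin = ReversibleInstanceNorm()
z = revin.normalize(x)              # roughly zero mean, unit variance per instance
restored = revin.denormalize(z)     # identity "model" in between, for illustration
```

In a forecasting model the network would sit between `normalize` and `denormalize`, so predictions come back in the original scale of each input window.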

The Inevitable Proliferation of Models

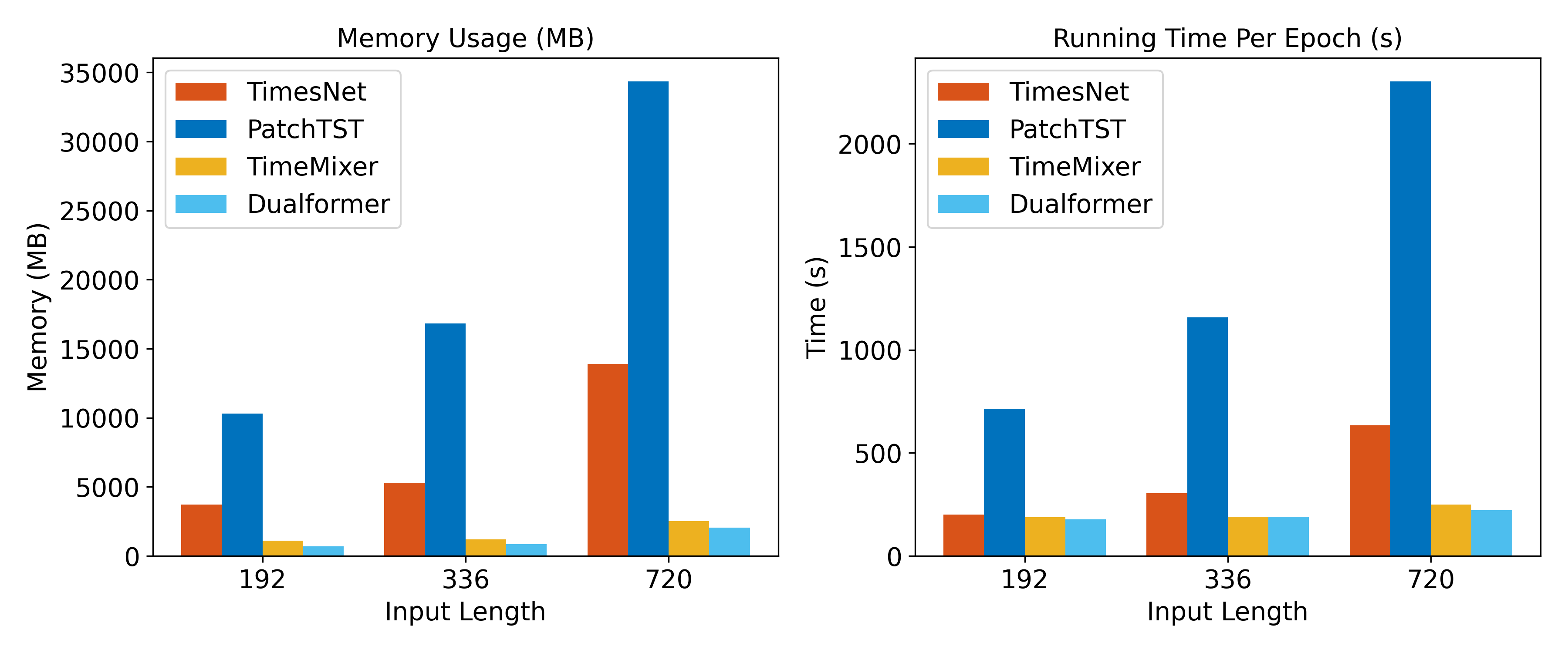

Although Dualformer represents a notable step forward in long-term forecasting, the field continues to benefit from diverse approaches, each with unique capabilities. Models like TimeMixer and PatchTST, for example, offer complementary strengths by utilizing different architectural designs and focusing on specific aspects of temporal data processing. TimeMixer leverages a simplified structure to efficiently capture long-range dependencies, while PatchTST employs a patching strategy inspired by image recognition to improve performance and scalability. These alternative models aren’t intended to replace Dualformer, but rather to provide researchers and practitioners with a broader toolkit, allowing them to select the most appropriate technique – or even combine multiple approaches – based on the specific characteristics of the time series data and the forecasting task at hand. This ongoing innovation underscores the dynamic nature of the field and the continued pursuit of more accurate and efficient long-term forecasting methods.

The field of time series analysis continues to rapidly evolve, with researchers consistently developing novel approaches to improve forecasting accuracy and efficiency. Beyond architectures like Dualformer, models such as TimeMixer and PatchTST offer distinct advantages in capturing temporal dependencies, while Frequency-Improved Legendre Memory Models represent a unique pathway by leveraging spectral characteristics and memory-augmented techniques. This ongoing innovation isn’t merely about achieving incremental gains; it signals a fundamental shift towards more nuanced and adaptable models capable of handling the complexities inherent in real-world time series data, promising breakthroughs in areas ranging from financial forecasting to climate modeling and beyond.

Dualformer distinguishes itself through demonstrable scalability, a crucial factor when dealing with extensive time series datasets. Evaluations reveal a memory footprint ranging from 712MB to 2046MB, indicating efficient resource utilization even with complex models. Critically, the model maintains a swift processing speed, completing a single epoch of training within 177 to 222 seconds. These performance metrics suggest Dualformer offers a practical balance between accuracy and computational demands, allowing researchers and practitioners to tackle long-term forecasting problems without prohibitive resource constraints. This efficiency is vital for real-world applications where timely predictions are paramount and computational resources are often limited.

The decomposition of time series into their constituent frequencies, a process fundamentally enabled by the Fast Fourier Transform (FFT), continues to be a cornerstone of effective analysis and preprocessing. This spectral analysis doesn’t merely reveal periodic patterns hidden within the data; it allows for targeted filtering of noise and the isolation of dominant trends. By transforming the time-domain signal into the frequency domain, researchers can identify and remove unwanted frequencies, effectively smoothing the data and improving the accuracy of forecasting models. Furthermore, understanding the frequency components can inform feature engineering, allowing for the creation of more robust and informative inputs for machine learning algorithms. The FFT’s efficiency and widespread availability ensure its continued relevance, even as more complex models emerge, solidifying its role as an essential tool for extracting meaningful insights from time-dependent data.
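The frequency-domain filtering described above can be sketched with a hard low-pass filter: transform, zero the bins above a cutoff, and transform back. The function name `fft_lowpass`, the cutoff, and the toy signal are assumptions for illustration; practical pipelines often prefer smoother filters to avoid ringing.

```python
import numpy as np

def fft_lowpass(x, keep_bins):
    """Zero every FFT bin at index >= keep_bins and return the filtered series."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x)
    spectrum[keep_bins:] = 0.0                    # discard high-frequency content
    return np.fft.irfft(spectrum, n=len(x))

# Toy example (hypothetical): a slow period-50 cycle with a fast period-4 disturbance.
t = np.arange(200)
clean = np.sin(2 * np.pi * t / 50)
disturbance = 0.4 * np.sin(2 * np.pi * t / 4)
smoothed = fft_lowpass(clean + disturbance, keep_bins=10)
```

Here the slow cycle sits in bin 4 and the disturbance in bin 50, so keeping the first 10 bins recovers the slow cycle. The same operation also shows why unintended low-pass behavior inside a model is costly: whatever sits above the cutoff is lost entirely.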

The pursuit of accurate long-term time series forecasting, as demonstrated by Dualformer, isn’t about constructing a perfect predictive engine, but rather cultivating a system capable of revealing inherent limitations. This work acknowledges the low-pass filtering bias within traditional Transformer architectures (a revelation, not a bug) and addresses it through frequency domain analysis. As Paul Erdős once stated, “A mathematician knows a lot of formulas, but a good one knows where to apply them.” Dualformer doesn’t simply apply attention mechanisms; it strategically samples frequencies, recognizing that the true power lies not in brute-force computation, but in understanding the landscape of possible failures and building resilience against them. The model’s architecture, therefore, isn’t a solution, but a prophecy of potential shortcomings, consciously feared and meticulously addressed.

The Horizon Recedes

Dualformer’s exploration of time and frequency domains offers a valuable, if predictable, lesson. The pursuit of forecasting accuracy often leads to increasingly elaborate feature engineering – a temporary reprieve from the fundamental uncertainty inherent in complex systems. This work, while demonstrating immediate gains, merely reshapes the landscape of error, not eliminates it. The low-pass filtering bias, addressed through hierarchical sampling, will inevitably give way to new, unforeseen spectral artifacts as the model encounters data distributions not represented in current benchmarks. A system isn’t a machine to be perfected, it’s a garden – and gardens always require tending.

The true challenge lies not in squeezing incremental improvements from existing architectures, but in embracing the inherent limitations of prediction. Resilience lies not in isolation, but in forgiveness between components. Future work might consider explicitly modeling uncertainty, moving beyond point forecasts to probabilistic distributions that reflect the true scope of the unknown. Perhaps the focus should shift from maximizing accuracy to minimizing the cost of inaccurate predictions – a pragmatic approach acknowledging that all forecasts are, ultimately, educated guesses.

The seductive allure of state-of-the-art performance should be tempered with a healthy skepticism. Every architectural choice is a prophecy of future failure. The field progresses not by building ever-taller towers of complexity, but by cultivating a deeper understanding of the underlying dynamics – and accepting that some patterns, by their very nature, resist prediction.

Original article: https://arxiv.org/pdf/2601.15669.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 15:20