Author: Denis Avetisyan

A new approach uses continuous normalizing flows on spectral manifolds to identify safety-critical anomalies in autonomous driving systems, offering improved robustness and interpretability.

This paper introduces Deep-Flow, an unsupervised framework for continuous anomaly detection in autonomous vehicles leveraging manifold-aware spectral spaces.

Current safety validation for autonomous vehicles struggles to scale detection of rare, high-risk scenarios using traditional rule-based systems. To address this, we present a novel framework, ‘Conditional Flow Matching for Continuous Anomaly Detection in Autonomous Driving on a Manifold-Aware Spectral Space’, which leverages continuous normalizing flows on low-rank spectral manifolds to characterize and detect out-of-distribution driving behaviors. This approach, termed Deep-Flow, achieves an AUC-ROC of 0.766 on the Waymo Open Motion Dataset and reveals a critical predictability gap beyond simple kinematic danger, identifying semantic non-compliance such as lane violations. Could this mathematically rigorous foundation for statistical safety gates enable truly objective, data-driven validation for the safe deployment of autonomous fleets?

The Inevitable Uncertainty of Prediction

The reliable forecasting of other road users’ movements is paramount to the safe operation of autonomous vehicles, yet current predictive techniques frequently falter when faced with unusual or dangerous situations. While standard methods excel in common traffic conditions, their performance degrades significantly when encountering rare events – a pedestrian darting into the street, a sudden lane change, or an unexpected obstacle. This vulnerability arises because these systems are often trained on datasets that underrepresent such critical scenarios, leading to inaccurate predictions and potentially hazardous responses. Consequently, a vehicle’s ability to anticipate and react to these low-probability, high-impact events remains a substantial challenge in achieving full autonomy, demanding more robust and adaptable predictive models.

Conventional trajectory prediction methods, particularly those relying on autoregressive models, often exhibit limitations when confronted with the unpredictable nature of real-world driving. These models, designed to predict future states based on past observations, can suffer from accumulated error – a phenomenon known as drift – leading to increasingly inaccurate forecasts over time. Moreover, they typically focus on predicting a single, most likely trajectory, failing to adequately represent the full spectrum of plausible behaviors an agent might undertake. This inability to capture the inherent uncertainty in driving scenarios – such as a pedestrian suddenly stepping into the road or a vehicle executing an unexpected maneuver – poses a significant safety risk, as autonomous systems may be unprepared for deviations from the predicted path and struggle to react effectively to unforeseen events.

Predicting the future movements of agents – vehicles, pedestrians, cyclists – demands more than simply extrapolating from past behavior; it requires acknowledging the fundamental uncertainty woven into real-world driving. A generative approach addresses this by learning the underlying distribution of possible trajectories, rather than a single, most-likely path. This allows the system to produce a range of plausible futures, reflecting the myriad ways a situation could unfold, and crucially, to assess the probability of rare, yet critical, events. By modeling this inherent stochasticity, these methods can better prepare autonomous vehicles for unexpected maneuvers or ambiguous intentions, improving safety and reliability in complex and unpredictable environments. The strength of a generative model lies in its capacity to not just forecast what might happen, but to quantify the likelihood of various outcomes, informing more robust decision-making.

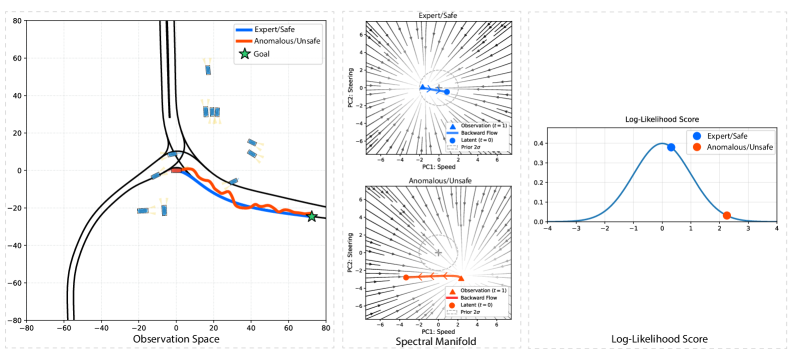

Flows of Probability: Modeling the Expected and the Anomalous

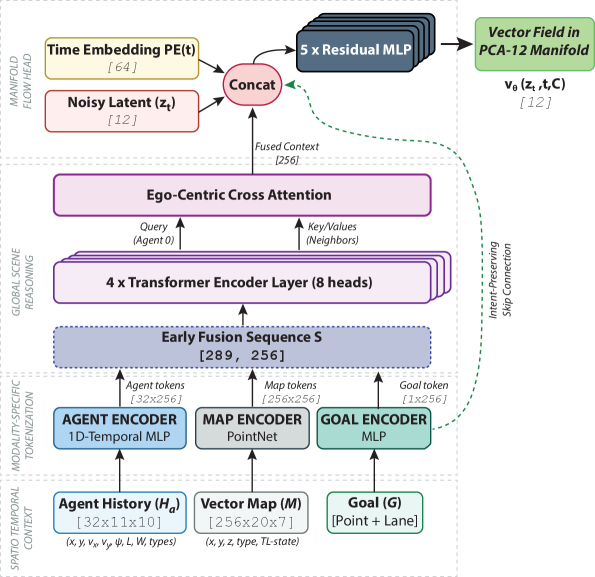

Deep-Flow employs Continuous Normalizing Flows (CNF) as its core generative modeling technique. A CNF represents the probability distribution of typical driving behavior by transporting samples from a simple base distribution, usually a standard Gaussian, along a learned time-dependent velocity field. Integrating this ordinary differential equation (ODE) yields a smooth, invertible map from base samples to data. As the paper's title indicates, the velocity field is trained with conditional flow matching. Because the map can be highly nonlinear, the model can capture the complex, multi-modal distributions inherent in driving data, which a regressor that outputs a single most-likely trajectory cannot represent. Once this distribution is learned, Deep-Flow can assess the likelihood of any given trajectory; trajectories with low probability are flagged as anomalous. The same model supports both density evaluation and sampling, each of which requires numerically integrating the ODE.
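The paper's title indicates that the CNF is trained with conditional flow matching. Below is a minimal NumPy sketch of that objective. It assumes the common straight-line path between a Gaussian sample x0 and a data point x1; the article does not state which probability path Deep-Flow uses. The velocity model is regressed onto the path's velocity x1 - x0 at a random time t. `velocity_fn` and `cfm_loss` are hypothetical names standing in for the conditioned network and its training loss.

```python
import numpy as np

def cfm_loss(velocity_fn, x1, cond, rng):
    """Conditional flow matching loss with a straight-line interpolation path (assumed).

    velocity_fn(x_t, t, cond) -> predicted velocity with the same shape as x_t
        (hypothetical stand-in for the conditioned neural network).
    x1:   batch of data points, e.g. spectral coefficients, shape (B, D).
    cond: conditioning features, e.g. an encoded goal lane, shape (B, C).
    """
    b, d = x1.shape
    x0 = rng.standard_normal((b, d))      # samples from the Gaussian base distribution
    t = rng.uniform(size=(b, 1))          # one random time per sample in [0, 1)
    x_t = (1.0 - t) * x0 + t * x1         # point on the straight path from x0 to x1
    target = x1 - x0                      # velocity of that path (constant in t)
    pred = velocity_fn(x_t, t, cond)
    # Mean squared error between predicted and target velocities.
    return np.mean(np.sum((pred - target) ** 2, axis=1))
```

For a single fixed data point c, the exact velocity along these paths is (c - x) / (1 - t), so that oracle drives the loss to zero; a real model would be a network taking the spectral coefficients, the time t and conditioning features. No ODE is solved during training, which is what makes flow matching simulation-free.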

The Deep-Flow framework employs a Spectral Manifold Bottleneck that reduces the dimensionality of the trajectory representation using Principal Component Analysis (PCA). This reduction is not purely for computational efficiency. The leading principal components capture the dominant, smooth modes of variation in driving behavior, while jittery, high-frequency detail typically ends up in the discarded components. Representing each trajectory by its coordinates on this low-rank manifold therefore filters noise and enforces kinematic smoothness. Because the generative model learns only within this reduced space, its distribution concentrates on plausible, kinematically sound maneuvers, and density estimation becomes cheaper.
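Here is a minimal NumPy sketch of such a bottleneck. It assumes fixed-length trajectories of (x, y) positions that are flattened and projected onto the top k principal directions obtained by singular value decomposition. The function names, the use of positions as input and the value of k are all assumptions; the article does not state them.

```python
import numpy as np

def fit_spectral_basis(trajs, k):
    """Fit a rank-k PCA basis to flattened trajectories.

    trajs: array (N, T, 2) of x/y positions; k: number of components kept.
    Returns the mean vector (2T,) and a (k, 2T) matrix of principal directions.
    """
    flat = trajs.reshape(len(trajs), -1)
    mean = flat.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(flat - mean, full_matrices=False)
    return mean, vt[:k]

def encode(trajs, mean, basis):
    """Project trajectories onto the basis: (N, T, 2) -> (N, k) coefficients."""
    return (trajs.reshape(len(trajs), -1) - mean) @ basis.T

def decode(coeffs, mean, basis, horizon):
    """Map (N, k) coefficients back to (N, horizon, 2) trajectories."""
    return (coeffs @ basis + mean).reshape(len(coeffs), horizon, 2)
```

The CNF would then model the k coefficients returned by `encode` instead of the 2T raw coordinates, and `decode` maps generated coefficients back to trajectories. For instance, trajectories whose positions are quadratic in time lie exactly in a 6-dimensional subspace (three terms per coordinate), so k = 6 reconstructs them without loss.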

Goal-Lane Conditioning and Kinematic Complexity Weighting are integrated into the Deep-Flow framework to improve generative modeling, particularly in challenging driving scenarios. Goal-Lane Conditioning supplies the generative model with the vehicle's intended lane, so future trajectories are predicted conditioned on a specific driving goal. Kinematic Complexity Weighting gives higher training weight to trajectories with greater kinematic complexity, measured by quantities such as acceleration and steering angle, so that safety-critical maneuvers like emergency braking or lane changes are not drowned out by routine driving. This weighting resolves ambiguity at decision points by favoring trajectories that show deliberate, proactive control, which leads to more realistic likelihoods and more reliable anomaly detection.
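The article names both mechanisms but gives no formulas. The following minimal sketch rests on explicit assumptions:

- Complexity is mean acceleration magnitude plus mean absolute heading rate. Heading rate stands in for steering angle, which cannot be observed from positions alone.
- Weights are normalised to mean 1 and multiply each sample's loss.
- The goal lane is one-hot encoded and appended to the conditioning features.

All function names and the `alpha` and `beta` parameters are hypothetical.

```python
import numpy as np

def kinematic_complexity_weights(trajs, dt, alpha=1.0, beta=1.0):
    """Hypothetical per-trajectory weights that grow with kinematic complexity.

    trajs: (N, T, 2) positions sampled every dt seconds.
    complexity = mean |acceleration| + beta * mean |heading rate|; weights have mean 1.
    """
    vel = np.diff(trajs, axis=1) / dt                   # (N, T-1, 2)
    acc = np.diff(vel, axis=1) / dt                     # (N, T-2, 2)
    heading = np.arctan2(vel[..., 1], vel[..., 0])      # (N, T-1)
    # Wrap heading differences into (-pi, pi] so a crossing of +/-pi is not a huge jump.
    dh = np.angle(np.exp(1j * np.diff(heading, axis=1)))
    complexity = (np.linalg.norm(acc, axis=-1).mean(axis=1)
                  + beta * np.abs(dh / dt).mean(axis=1))
    w = 1.0 + alpha * complexity
    return w / w.mean()

def weighted_mean(per_sample_loss, weights):
    """Weighted average of per-trajectory losses (e.g. each sample's flow-matching loss)."""
    return np.sum(weights * per_sample_loss) / np.sum(weights)

def goal_lane_condition(context, goal_lane, num_lanes):
    """Append a one-hot encoding of an integer goal-lane index to context features."""
    onehot = np.eye(num_lanes)[goal_lane]               # (N, num_lanes)
    return np.concatenate([context, onehot], axis=-1)
```

With these definitions, a constant-velocity straight trajectory gets the minimum weight, while a turning trajectory (nonzero centripetal acceleration and heading rate) is upweighted.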

The Cost of Knowing: Efficient Likelihood Estimation

Calculating the log-likelihood under a Continuous Normalizing Flow (CNF) requires solving an ordinary differential equation (ODE). The trajectory is integrated backward through the flow to the base distribution, and the divergence of the velocity field is accumulated along the way. This ODE integration is fundamental to the likelihood computation, but it is computationally expensive, particularly for high-dimensional data or complex velocity networks. The cost scales with the number of function evaluations the solver needs to integrate each trajectory accurately. Because accurate anomaly scoring relies on precise log-likelihood values, minimizing the cost of ODE integration is critical for practical applications of CNFs.
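The following sketch shows what that backward integration involves. It assumes a fixed-step fourth-order Runge-Kutta solver and a standard Gaussian base at t = 0 with data at t = 1; the article names neither the solver nor the time convention. The log-density follows the instantaneous change-of-variables formula, log p1(x1) = log p0(x0) - ∫ tr(∂v/∂x) dt over t from 0 to 1. `cnf_log_likelihood`, `velocity` and `jacobian` are hypothetical names; in a real model the Jacobian would come from automatic differentiation of the velocity network.

```python
import numpy as np

def cnf_log_likelihood(x1, velocity, jacobian, n_steps=100):
    """Log-density of x1 under a CNF via the instantaneous change of variables.

    velocity(x, t) -> dx/dt; jacobian(x, t) -> (D, D) matrix d velocity / d x.
    Integrates x backward from t=1 (data) to t=0 (base) with fixed-step RK4,
    accumulating the integral of the exact divergence tr(J) along the path.
    """
    def aug(state, t):
        x = state[:-1]
        dx = velocity(x, t)
        div = np.trace(jacobian(x, t))     # exact divergence, no stochastic estimate
        return np.append(dx, div)

    h = -1.0 / n_steps                      # negative step: integrate from t=1 down to t=0
    state = np.append(np.asarray(x1, dtype=float), 0.0)
    t = 1.0
    for _ in range(n_steps):
        k1 = aug(state, t)
        k2 = aug(state + 0.5 * h * k1, t + 0.5 * h)
        k3 = aug(state + 0.5 * h * k2, t + 0.5 * h)
        k4 = aug(state + h * k3, t + h)
        state = state + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    x0, acc = state[:-1], state[-1]
    # Standard Gaussian base density at the recovered point x0.
    log_p0 = -0.5 * (x0 @ x0) - 0.5 * len(x0) * np.log(2 * np.pi)
    # acc = integral from 1 to 0 of tr(J) dt = -(integral from 0 to 1), so adding it subtracts the divergence.
    return log_p0 + acc
```

Each of the `n_steps` RK4 steps evaluates the velocity and its Jacobian four times; this is the function-evaluation cost described above. With a linear field v(x, t) = A x the result can be checked against the closed form log p0(exp(-A) x1) - tr(A).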

Deep-Flow forgoes Hutchinson's Trace Estimator, a stochastic method commonly used in CNFs to approximate the trace of the Jacobian of the velocity field. That trace is the quantity the instantaneous change-of-variables formula integrates over time; in a CNF it takes the place of the Jacobian determinant used by discrete normalizing flows. Hutchinson's estimator is unbiased, but it adds variance to every likelihood estimate. Computing the trace exactly removes that variance, and its cost, which grows with the state dimension, stays modest once trajectories are projected onto the low-rank spectral manifold. Because the model is trained with conditional flow matching, which regresses the velocity field directly and needs no ODE solves during training, the ODE and trace cost is paid only when trajectories are scored. By computing the likelihood accurately and efficiently, Deep-Flow provides a reliable basis for anomaly scores that identify deviations from the expected data distribution.
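To make the trade-off concrete, here is a sketch contrasting the two ways of obtaining tr(J). It assumes the Jacobian J of the velocity field is accessible only through Jacobian-vector products, as in most automatic-differentiation frameworks. The function names are hypothetical, and an explicit matrix stands in for a network's Jacobian.

```python
import numpy as np

def exact_trace(jvp, dim):
    """Exact trace from Jacobian-vector products: one product per dimension, zero variance."""
    eye = np.eye(dim)
    # e_i^T (J e_i) picks out the diagonal entry J_ii.
    return sum(eye[i] @ jvp(eye[i]) for i in range(dim))

def hutchinson_trace(jvp, dim, n_probes, rng):
    """Hutchinson's unbiased estimate with Rademacher probes: one product per probe, nonzero variance."""
    probes = rng.choice([-1.0, 1.0], size=(n_probes, dim))
    return np.mean([eps @ jvp(eps) for eps in probes])

# Example with an explicit 8x8 matrix standing in for the Jacobian.
rng = np.random.default_rng(0)
J = rng.standard_normal((8, 8))
jvp = lambda v: J @ v
```

The exact version needs `dim` products and returns the same answer every time. A single Hutchinson probe, by contrast, can be far from the true trace, and many probes are needed to average the noise away. In a low-dimensional spectral space the exact version is cheap, which is presumably what makes dropping the estimator practical.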

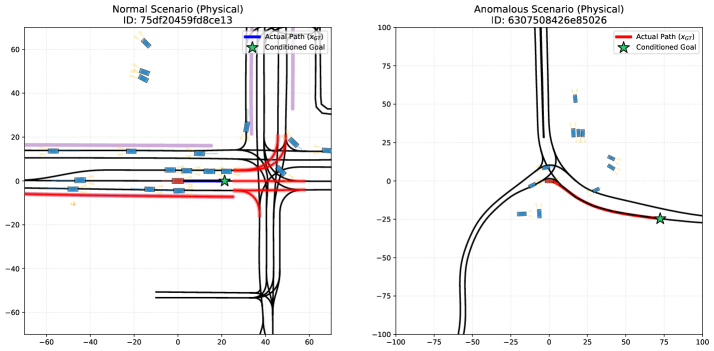

The Anomaly Score generated by the Deep-Flow model quantifies how far a trajectory diverges from the learned distribution of normal driving. It is computed from the log-likelihood of the observed trajectory under that distribution: lower scores indicate greater deviation and, consequently, a higher probability of anomaly. Because the score is a likelihood, it places all trajectories and time periods on one comparable scale, and decision thresholds can be chosen from the score distribution on normal data instead of hand-written rules. This direct link to the underlying probability distribution makes detected anomalies statistically interpretable, although the false-positive rate still depends on where the threshold is set.
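The article does not specify how Deep-Flow turns scores into decisions. One simple statistical gate, sketched here under that caveat, sets the threshold at a low quantile of log-likelihoods on held-out normal trajectories, which fixes the false-alarm rate on normal driving in advance. `calibrate_gate`, `flag_anomalies` and `false_alarm_rate` are illustrative names, not the paper's API.

```python
import numpy as np

def calibrate_gate(normal_loglik, false_alarm_rate=0.01):
    """Threshold below which only `false_alarm_rate` of held-out normal trajectories fall."""
    return np.quantile(normal_loglik, false_alarm_rate)

def flag_anomalies(loglik, threshold):
    """Lower log-likelihood means further from the learned normal distribution."""
    return np.asarray(loglik) < threshold
```

The gate is calibrated once on normal data. At test time a trajectory is flagged when its log-likelihood falls below the threshold, so roughly `false_alarm_rate` of normal trajectories will still be flagged by construction.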

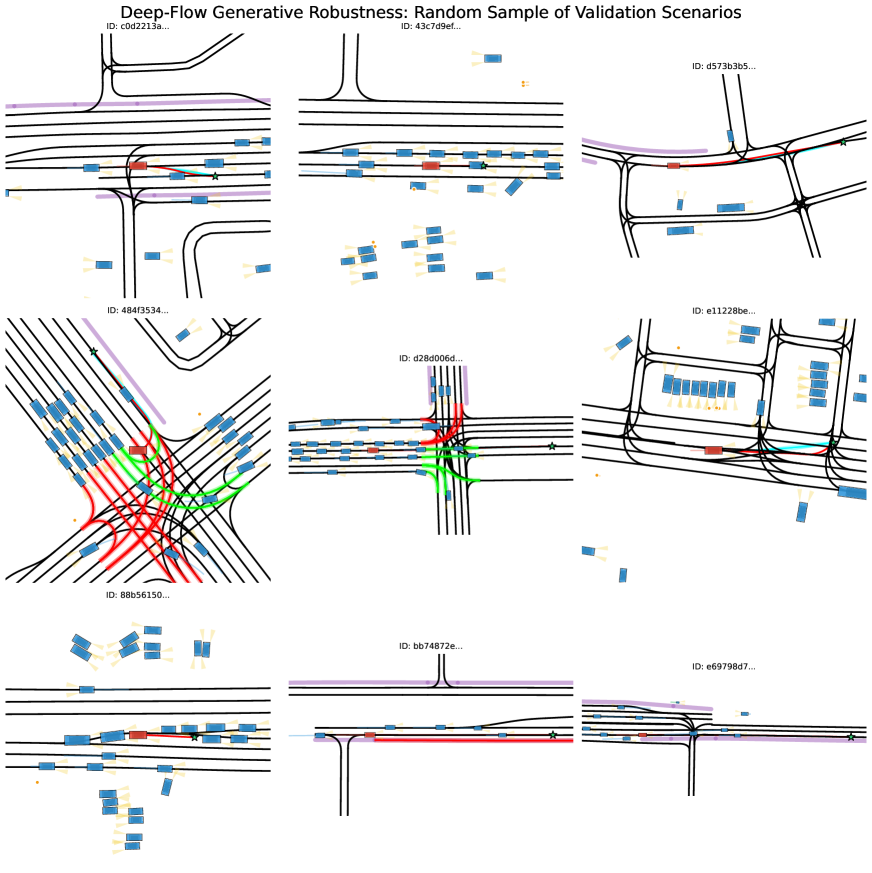

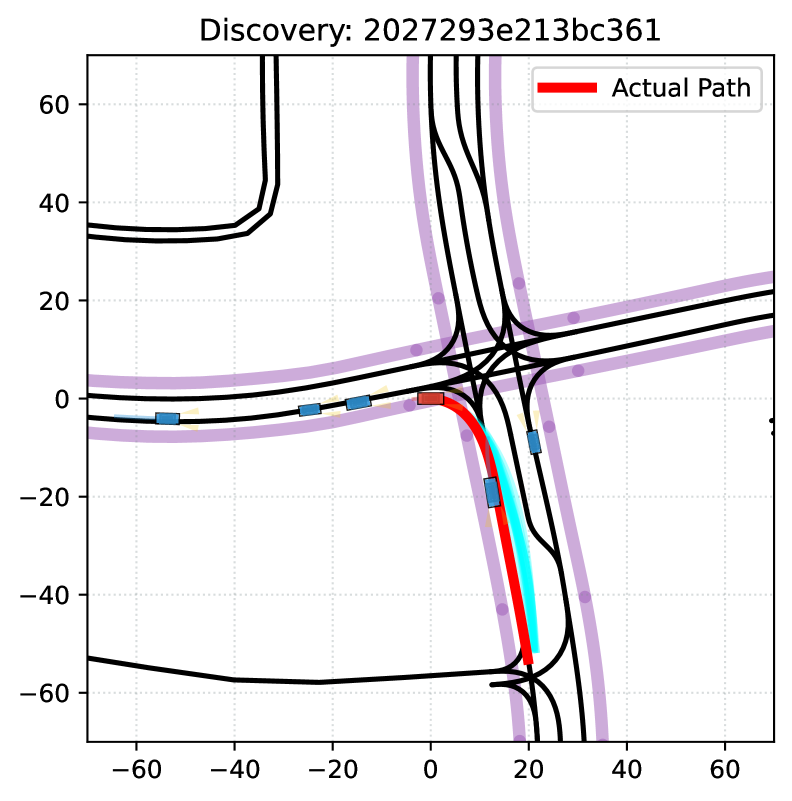

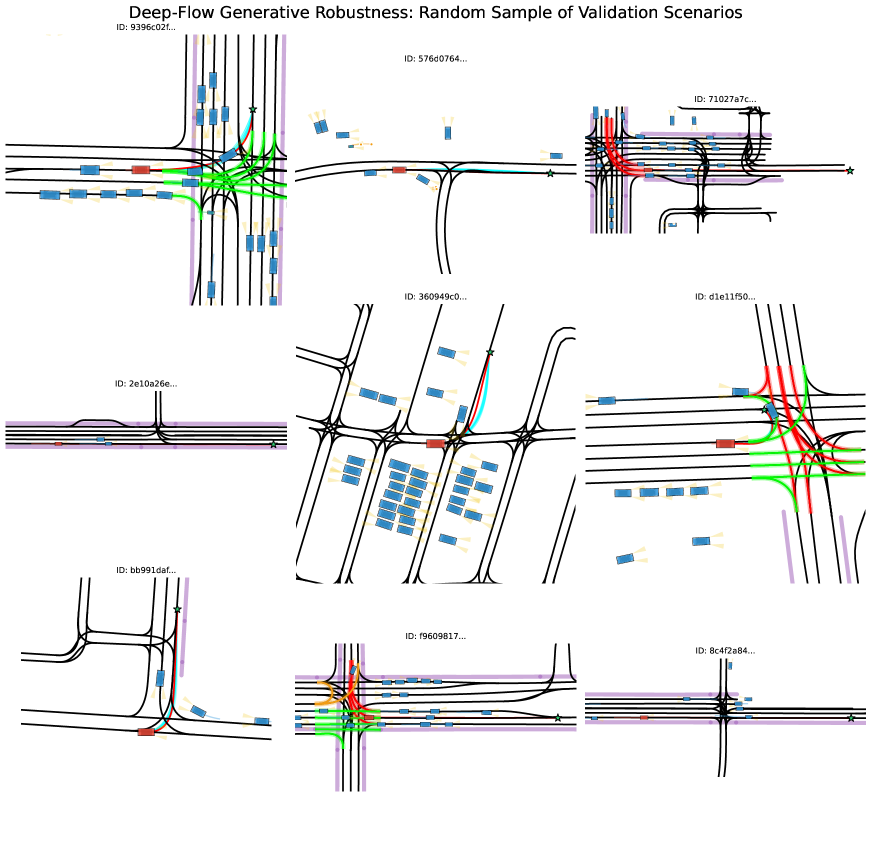

Validation on the Open Road: Performance on the Waymo Dataset

Deep-Flow was evaluated on the Waymo Open Motion Dataset (WOMD), a large-scale benchmark of complex urban driving. The evaluation measured how well the model separates anomalous trajectories, those deviating from expected, safe behavior, from normal ones. The test scenes are dense and dynamic, with interactions among vehicles, pedestrians, and other road users. The results are a step toward reliable anomaly detection for autonomous systems operating in such environments.

Performance was quantified with the Area Under the Receiver Operating Characteristic curve (AUC-ROC) for identifying safety-critical events in WOMD. Deep-Flow achieved an AUC-ROC of 0.766. In other words, given a randomly chosen safety-critical trajectory and a randomly chosen normal one, the model ranks the safety-critical one as more anomalous in about 77% of such pairs; 0.5 would be chance. The article presents this as a substantial advance over existing methods for anomalous trajectory detection. It takes the score as evidence that the model can distinguish safe from potentially hazardous movements in complex urban scenes, supporting proactive risk assessment in autonomous systems.
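That pairwise interpretation is also a way to compute the metric. A direct sketch of the definition, counting ties as one half (the function name is hypothetical):

```python
import numpy as np

def auc_roc(scores, labels):
    """AUC-ROC as the probability that a random positive outscores a random negative.

    scores: higher = more anomalous; labels: 1 for safety-critical, 0 for normal.
    Ties between a positive and a negative count as one half.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))
```

Because Deep-Flow's score is a log-likelihood where lower means more anomalous, negated log-likelihoods would be passed as `scores`. The pairwise comparison uses memory proportional to the number of positive-negative pairs; for large evaluations, a rank-based formula or scikit-learn's `roc_auc_score` scales better.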

The WOMD results suggest that Deep-Flow can strengthen the safety and dependability of autonomous vehicles. It flags anomalous trajectories that may indicate hazards, including semantic non-compliance such as lane violations that measures of kinematic danger alone miss. This adds a predictive layer that traditional rule-based validation lacks. The aim is not only to detect errors after they occur but to anticipate them before they escalate, leaving room for preemptive adjustments that could avert collisions. If this performance carries over to deployment, Deep-Flow could help reduce accident rates and build public trust in autonomous driving, contributing to a safer and more efficient transportation ecosystem.

Beyond Individual Trajectories: Towards Holistic Scene Understanding

Current trajectory anomaly detection, as demonstrated by Deep-Flow, primarily focuses on individual agent behavior. However, real-world driving scenarios are inherently social, requiring consideration of interactions between multiple agents. Future research aims to bridge this gap by integrating Deep-Flow with established models of agent interaction, notably Social Force Fields. These fields simulate the forces – both attractive and repulsive – that influence an agent’s movement based on the presence of others. By combining Deep-Flow’s ability to identify unusual individual paths with the contextual awareness of Social Force Fields, systems can better predict and interpret potentially dangerous situations arising from complex multi-agent dynamics. This synergistic approach promises a more nuanced understanding of scene behavior, moving beyond simply detecting what is unusual to understanding why it is happening within the broader context of the environment.
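The article does not define Social Force Fields precisely. As an illustration only, the sketch below follows the classic social force model of Helbing and Molnár for pedestrians. An attractive term relaxes the agent's velocity toward a desired speed in the direction of its goal, and each neighbour adds a repulsive term that decays exponentially with distance. All parameter values are placeholders, and the article leaves open how such forces would be combined with Deep-Flow.

```python
import numpy as np

def social_force(pos, vel, goal, neighbours, v_desired=1.3, tau=0.5, A=2.0, B=0.3, radius=0.6):
    """Net force on one agent in a simplified social force model (illustrative parameters).

    Attractive term: relax the current velocity toward v_desired along the direction to the
    goal within tau seconds. Repulsive term: each neighbour pushes the agent directly away
    with magnitude A * exp((radius - d) / B), where d is their distance.
    """
    to_goal = goal - pos
    e = to_goal / np.linalg.norm(to_goal)       # unit vector toward the goal
    force = (v_desired * e - vel) / tau
    for other in neighbours:
        diff = pos - other                      # points from the neighbour to the agent
        d = np.linalg.norm(diff)
        force += A * np.exp((radius - d) / B) * diff / d
    return force
```

For an agent heading along the x axis with a neighbour just to its left, the net force gains a component pushing it to the right. That push fades quickly as the neighbour moves away.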

A truly comprehensive interpretation of driving scenarios demands moving beyond the tracking of individual vehicles to encompass the intricate web of interactions between all road users. Future systems aim to model not just where a vehicle is going, but why, by inferring the intentions driving its behavior and anticipating how those intentions will manifest in its trajectory. This requires accounting for social norms, implicit communication – a pedestrian making eye contact, a car signaling a lane change – and the predicted responses of other agents. By integrating trajectory modeling with frameworks that simulate agent interactions, autonomous systems can move beyond reactive responses to proactive anticipation, creating a more nuanced and safer navigation experience even within dense, unpredictable urban environments.

The convergence of trajectory anomaly detection with comprehensive scene understanding points toward autonomous vehicles better suited to complex urban settings. By anticipating potential hazards and adjusting proactively to surrounding agents, including pedestrians, cyclists, and other vehicles, these systems move beyond reactive responses. Proactive navigation is not only about avoiding collisions. It also means merging smoothly into dynamic traffic, predicting other agents' maneuvers, and making informed decisions amid unpredictable events. Systems built this way should be more reliable and safer in dense, congested traffic, a prerequisite for realizing the potential of self-driving technology.

The pursuit of anomaly detection, as demonstrated by Deep-Flow, echoes a fundamental truth about complex systems. It isn't about building a perfect guardian, but rather cultivating a responsive ecosystem capable of adapting to the unforeseen. The framework's reliance on spectral manifolds, a clever abstraction of driving data, isn't a solution but a prophecy: a commitment to a certain kind of failure, the graceful degradation of performance as conditions shift. As Edsger W. Dijkstra observed, "It's always possible to do things wrong." This work accepts that inevitability, and seeks not to eliminate error, but to contain and interpret it, acknowledging that scalability is simply the word used to justify complexity, and that the pursuit of absolute safety is, ultimately, an exercise in managing acceptable risk.

The Horizon Beckons

The pursuit of anomaly detection, particularly within the brittle context of autonomous systems, inevitably reveals a deeper truth: the map is not the territory, nor is the manifold a refuge. This work, by attempting to constrain the search for ‘normal’ to a spectral space, merely postpones the inevitable confrontation with the genuinely novel – the event that cannot be represented as a slight deviation from past experience. Each elegantly crafted normalizing flow is, at its core, a formalized expression of hope that the future will resemble the past, a prophecy destined for revision.

The emphasis on unsupervised learning, while pragmatic, hints at a fundamental difficulty. To truly anticipate failure, one must model not just what is normal, but why it might break. The next iteration will not be about refining the flow, but about incorporating causal models, however imperfect, into the spectral representation. Expect to see systems that explicitly reason about the limitations of their own knowledge, acknowledging the inherent incompleteness of any ‘normal’ model.

Ultimately, this line of inquiry will either yield a system capable of graceful degradation – one that understands how to be surprised – or will reinforce the suspicion that true autonomy requires something beyond pattern recognition. The spectral manifold, like all abstractions, is a boundary; the interesting events will always lie just beyond it, whispering of the chaos that underpins all order.

Original article: https://arxiv.org/pdf/2602.17586.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-22 04:07