Author: Denis Avetisyan

A new hybrid system blends technical analysis, machine learning, and financial sentiment to dynamically adapt to market conditions and generate consistent alpha.

This review details a regime-adaptive equity strategy leveraging AI to improve risk-adjusted returns compared to traditional methods.

Traditional financial strategies often struggle to adapt to evolving market dynamics and capitalize on complex, multi-faceted data. This is addressed in ‘Generating Alpha: A Hybrid AI-Driven Trading System Integrating Technical Analysis, Machine Learning and Financial Sentiment for Regime-Adaptive Equity Strategies’, which proposes a novel algorithmic trading system combining technical indicators, machine learning, and sentiment analysis to achieve robust, regime-adaptive performance. In backtests, the resulting hybrid model outperformed major benchmarks, delivering a 135.49% return over 24 months, which suggests the potential of multi-modal AI in financial markets. Could this approach herald a new era of intelligent, self-adjusting trading strategies capable of navigating increasingly volatile conditions?

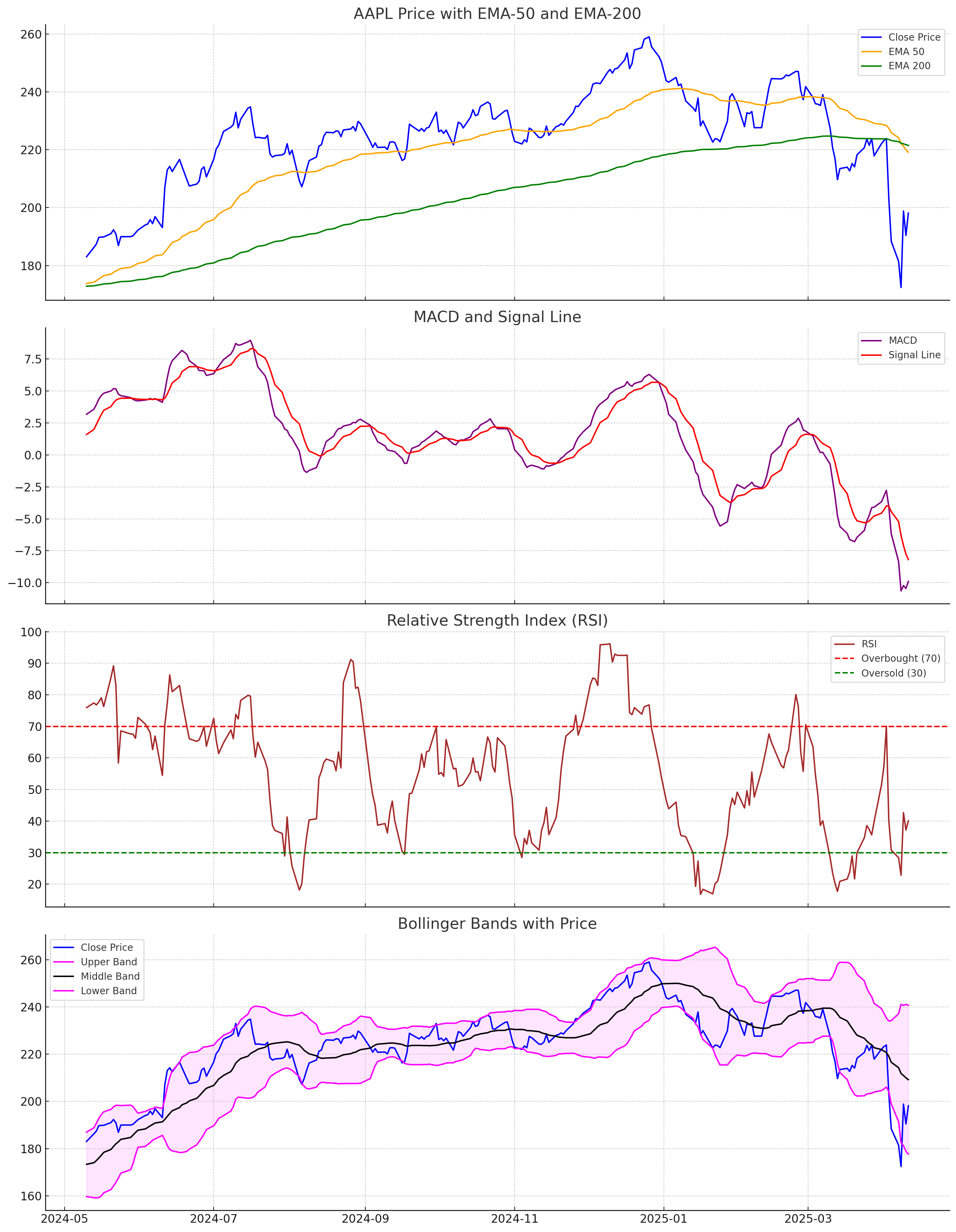

The Illusion of Control: Why Traditional Indicators Fail

Despite their continued popularity, conventional technical indicators frequently produce misleading signals when markets experience heightened volatility. These indicators, often based on historical price data and simple mathematical calculations, struggle to differentiate between genuine trend reversals and temporary fluctuations common in turbulent conditions. Consequently, traders relying solely on these signals may initiate premature or incorrect trades, leading to suboptimal decision-making and potential financial losses. The issue isn’t the indicators themselves being inherently flawed, but rather their limited ability to adapt to rapidly changing market dynamics and accurately reflect underlying risk. This susceptibility to false positives is particularly pronounced during periods of unexpected news, economic shifts, or widespread investor uncertainty, highlighting the need for more robust and context-aware analytical approaches.

Financial markets are rarely driven by a single force; instead, a complex interplay between investor sentiment, actual price movements, and inherent market volatility dictates outcomes. Focusing on isolated technical indicators – such as moving averages or relative strength indices – often provides an incomplete, and therefore misleading, picture. These indicators treat market signals in a vacuum, failing to account for how shifts in investor psychology can amplify or suppress price trends, or how sudden increases in volatility can invalidate established patterns. A comprehensive understanding requires recognizing that price action is the manifestation of sentiment and volatility, and that these elements are not independent variables but rather interconnected components of a dynamic system. Consequently, strategies based solely on isolated indicators frequently generate false signals, particularly during periods of heightened uncertainty or rapid change, and may ultimately hinder effective decision-making.
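To make the false-signal problem concrete, here is a minimal Python sketch (not taken from the paper) that counts moving-average crossovers on two toy price series. The function names, window lengths, and price data are illustrative assumptions.

```python
def sma(prices, window):
    """Simple moving average; None until `window` observations exist."""
    out = []
    for i in range(len(prices)):
        if i + 1 < window:
            out.append(None)
        else:
            out.append(sum(prices[i + 1 - window:i + 1]) / window)
    return out


def crossover_signals(prices, fast=2, slow=4):
    """Indices where the fast SMA moves from one side of the slow SMA to the other."""
    fast_ma, slow_ma = sma(prices, fast), sma(prices, slow)
    signals = []
    prev_side = None
    for i in range(len(prices)):
        if fast_ma[i] is None or slow_ma[i] is None:
            continue
        diff = fast_ma[i] - slow_ma[i]
        if diff == 0:
            continue  # averages coincide: no side taken, keep the previous one
        side = 1 if diff > 0 else -1
        if prev_side is not None and side != prev_side:
            signals.append(i)
        prev_side = side
    return signals


# Hypothetical data: a steady trend versus a range-bound, choppy market.
trending = list(range(1, 13))
choppy = [10, 11, 10, 9, 10, 11, 10, 9, 10, 11, 10, 9]

print(crossover_signals(trending))  # no crossovers in a clean trend
print(crossover_signals(choppy))    # repeated flips with no net price change
```

On the choppy series the indicator flips direction four times while the price ends up going nowhere, which is the kind of whipsaw the paragraph above describes.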

The proliferation of financial data, stemming from high-frequency trading, algorithmic strategies, and diverse information sources, has fundamentally altered the landscape for traders and analysts. Traditional methods, designed for simpler market conditions, now struggle to filter signal from noise, leading to increased instances of misinterpretation and flawed decision-making. Consequently, a shift towards more sophisticated analytical tools – encompassing machine learning, artificial intelligence, and advanced statistical modeling – is becoming essential. These tools can process vast datasets, identify subtle patterns, and adapt to changing market dynamics with a precision previously unattainable, ultimately offering the potential to uncover genuine trading opportunities obscured by the sheer volume and complexity of modern financial data.

Decoding the Noise: Sentiment Analysis and Predictive Models

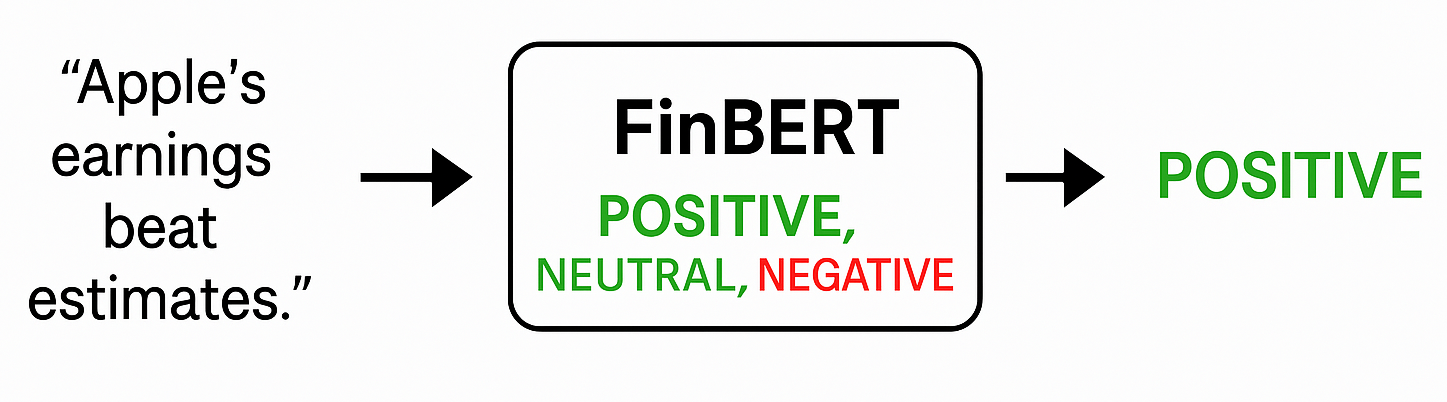

The system incorporates sentiment analysis of financial news articles to gauge overall market sentiment. This analysis processes text data to determine the emotional tone – positive, negative, or neutral – expressed regarding specific assets or the market generally. The resulting sentiment score is then used as an input feature in predictive models, functioning as a leading indicator because shifts in public perception, as reflected in news coverage, often precede actual price movements. This allows the system to potentially identify emerging trends and anticipate market reactions before they are fully reflected in trading data.
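The article does not say how per-article sentiment is turned into a model feature. One plausible approach, sketched below under that assumption, maps each article's class probabilities to a scalar and averages the scalars per trading day. The scoring rule (positive minus negative probability), the function names, and the sample data are all hypothetical.

```python
from collections import defaultdict


def article_score(probs):
    """Map class probabilities to a scalar in [-1, 1]: P(positive) - P(negative)."""
    return probs["positive"] - probs["negative"]


def daily_sentiment(articles):
    """Average article scores per date.

    `articles` is a list of (date_string, probs) pairs, where `probs` holds
    the classifier's positive / negative / neutral probabilities.
    """
    buckets = defaultdict(list)
    for date, probs in articles:
        buckets[date].append(article_score(probs))
    return {date: sum(scores) / len(scores) for date, scores in sorted(buckets.items())}


# Hypothetical classifier output for three news articles.
articles = [
    ("2024-01-02", {"positive": 0.75, "negative": 0.25, "neutral": 0.0}),
    ("2024-01-02", {"positive": 0.25, "negative": 0.25, "neutral": 0.5}),
    ("2024-01-03", {"positive": 0.0, "negative": 0.5, "neutral": 0.5}),
]
print(daily_sentiment(articles))
```

The resulting daily value could then sit alongside price-based features as one more column in the predictive model's input.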

Deep learning models demonstrate efficacy in financial text analysis due to their capacity to understand context and nuance. The Transformer architecture, a neural network design, enables parallel processing of text, significantly improving efficiency compared to recurrent models. FinBERT, a BERT model specifically pre-trained on financial text, further enhances performance by incorporating financial vocabulary and understanding domain-specific language. This pre-training allows FinBERT to accurately identify sentiment, extract key entities, and classify financial news with a higher degree of precision than general-purpose language models, ultimately facilitating the extraction of actionable insights from large volumes of textual data.

XGBoost, a gradient boosting machine learning algorithm, is utilized for predictive modeling by analyzing historical financial data to identify non-linear relationships and complex patterns. This approach allows the system to move beyond simple linear regression and capture nuanced influences on price movements. Evaluation on a held-out test dataset (out-of-sample data) showed an accuracy of 63% in predicting directional price changes. This metric is the percentage of periods in which the model correctly predicted whether the price would increase or decrease, providing a quantifiable measure of its predictive capability.
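The directional-accuracy metric described above can be sketched in a few lines of Python. This is an illustration of the metric only, not the paper's evaluation code. The function names, the choice to drop flat (zero-return) periods, and the sample data are assumptions. The second function gives the hit rate of always predicting the more common direction, a useful reference point because an accuracy figure means little without a baseline.

```python
def directional_accuracy(predicted_up, actual_returns):
    """Fraction of periods where the predicted direction matched the realized one.

    `predicted_up` holds booleans (True = model predicted a rise);
    periods with exactly zero return are dropped (an assumption).
    """
    if len(predicted_up) != len(actual_returns):
        raise ValueError("inputs must have the same length")
    pairs = [(p, r) for p, r in zip(predicted_up, actual_returns) if r != 0]
    if not pairs:
        raise ValueError("no non-zero returns to score")
    hits = sum(1 for p, r in pairs if p == (r > 0))
    return hits / len(pairs)


def majority_baseline(actual_returns):
    """Accuracy of always predicting whichever direction occurred more often."""
    moves = [r > 0 for r in actual_returns if r != 0]
    if not moves:
        raise ValueError("no non-zero returns to score")
    ups = sum(moves)
    return max(ups, len(moves) - ups) / len(moves)


# Hypothetical out-of-sample predictions and realized returns.
predicted = [True, True, False, False]
realized = [0.01, -0.02, -0.01, 0.03]
print(directional_accuracy(predicted, realized))
print(majority_baseline(realized))
```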

The Crucible of Backtesting: Validation and Live Execution

Backtrader provides a comprehensive environment for evaluating algorithmic trading strategies using historical data. The framework allows for the simulation of trade executions based on defined parameters and rules, facilitating the identification of performance bottlenecks and potential areas for improvement. Users can systematically test the strategy’s responsiveness to various market conditions and optimize key variables – such as entry and exit points, position sizing, and risk management parameters – through iterative testing and analysis of resulting performance metrics. This process enables developers to refine the strategy before deployment, minimizing the risk associated with live trading and maximizing potential profitability.
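The core loop that a backtesting framework automates can be illustrated with a short, dependency-free Python sketch. This is not Backtrader's API. The function name, the long-or-flat position convention, and the proportional cost model are simplifying assumptions chosen for illustration.

```python
def backtest(prices, positions, cost_per_trade=0.0, initial_equity=1.0):
    """Simulate a long/flat strategy and return the equity curve.

    positions[t] (0 or 1) is the exposure held from bar t to bar t+1 and
    must be decided using only information available at bar t.
    cost_per_trade is a proportional fee charged whenever exposure changes.
    """
    if len(positions) != len(prices) - 1:
        raise ValueError("need exactly one position per price interval")
    equity = [initial_equity]
    prev_pos = 0
    for t, pos in enumerate(positions):
        value = equity[-1]
        if pos != prev_pos:
            value *= 1.0 - cost_per_trade  # pay the fee on entry or exit
        bar_return = prices[t + 1] / prices[t] - 1.0
        value *= 1.0 + pos * bar_return
        equity.append(value)
        prev_pos = pos
    return equity


# Hypothetical prices: hold for the first bar, stay flat for the second.
print(backtest([100, 110, 99], [1, 0]))
```

Even in a toy like this, the two details that matter most in practice are visible: positions must not use future prices, and transaction costs are deducted on every change of exposure.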

Deployment of the automated trading strategy utilizes Amazon Web Services (AWS) EC2 instances to provide on-demand, scalable computing resources for live execution. This cloud-based infrastructure allows for dynamic adjustment of processing power and memory allocation based on market conditions and trading volume. EC2 instances are configured to continuously monitor market data, generate trading signals according to the model’s predictions, and execute trades via the integrated Alpaca API. The scalability of AWS EC2 ensures consistent performance even during periods of high market volatility and facilitates the potential for expanding the strategy’s capacity without significant hardware investments.

The system integrates with brokerage accounts via the Alpaca API, which handles order placement, position management, and real-time market data access, so that trades can be executed automatically from model predictions. Backtesting over a two-year period produced a total return of 135.49%, which corresponds to a Compound Annual Growth Rate (CAGR) of 53.46%. Both figures describe performance on historical data.
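The two headline figures are consistent with each other: CAGR is the constant yearly growth rate that compounds to the same total return, that is, (1 + total return)^(1 / years) - 1. A short Python check, with a function name chosen here for illustration:

```python
def cagr(total_return, years):
    """Compound annual growth rate from a cumulative return.

    total_return is a fraction (1.3549 means +135.49%).
    """
    if years <= 0:
        raise ValueError("years must be positive")
    return (1.0 + total_return) ** (1.0 / years) - 1.0


# Figures quoted in the article: 135.49% over two years.
annualized = cagr(1.3549, 2)
print(f"{annualized:.2%}")  # 53.46%
```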

The Adaptive Algorithm: Reinforcement Learning and Dynamic Markets

The trading strategy leverages reinforcement learning algorithms, prominently including Proximal Policy Optimization (PPO) and Advantage Actor-Critic (A2C), to navigate the complexities of financial markets with an unprecedented level of agility. These algorithms don’t rely on pre-programmed rules; instead, they allow the strategy to learn directly from market interactions, constantly refining its approach based on observed outcomes. Through a process of trial and error, the system dynamically adjusts its trading parameters in response to shifting conditions, effectively enabling it to capitalize on emerging opportunities and mitigate risks as they arise. This real-time adaptation is crucial, as market dynamics are rarely static, and a strategy’s ability to evolve is often the determining factor in sustained success.

The core of the trading agent’s intelligence lies in its continuous optimization through reward maximization. Each trade is assessed on its outcome, and future decisions are adjusted accordingly. This process is driven by an engineered reward signal – a numerical value assigned to each trade that reflects its profitability and risk. The agent explores different trading policies and incrementally refines its approach to increase the cumulative reward. This iterative improvement allows the system to adapt to shifting market conditions and identify subtle patterns over time – a key differentiator from traditional, inflexible trading systems.
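The article does not specify the reward function. As one hedged illustration of a signal that reflects both profitability and risk, the Python sketch below rewards each step's return and subtracts a penalty whenever the drawdown deepens. The function name, the penalty form, and the `drawdown_penalty` weight are hypothetical choices, not the paper's design.

```python
def step_rewards(equity_curve, drawdown_penalty=0.5):
    """Per-step reward: simple return minus a penalty on any increase in drawdown.

    equity_curve is a sequence of portfolio values; one reward is produced
    for each transition between consecutive values.
    """
    rewards = []
    peak = equity_curve[0]
    prev_drawdown = 0.0
    for prev, cur in zip(equity_curve, equity_curve[1:]):
        step_return = cur / prev - 1.0
        peak = max(peak, cur)
        drawdown = 1.0 - cur / peak  # 0 at a new high, positive below it
        deepening = max(0.0, drawdown - prev_drawdown)
        rewards.append(step_return - drawdown_penalty * deepening)
        prev_drawdown = drawdown
    return rewards


# Hypothetical equity path: gain, loss below the peak, recovery.
print(step_rewards([100, 110, 99, 110]))
```

With this shaping, a loss that pushes the portfolio further below its peak is punished more than its raw return alone, which nudges a learning agent toward smoother equity curves.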

The trading strategy, leveraging reinforcement learning, demonstrates a marked improvement in resilience and sustained financial gain, particularly when faced with the complexities of shifting market conditions. Unlike static, rule-based approaches, this adaptive system continuously refines its actions based on real-time performance, allowing it to navigate volatility and uncertainty with greater efficacy. Rigorous testing reveals a Sharpe Ratio reaching 1.68 – a substantial 250% increase over baseline systems – indicating not only higher returns but also a superior risk-adjusted performance, suggesting a potentially transformative approach to automated trading.
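For readers unfamiliar with the metric, the Sharpe ratio is the mean excess return divided by the standard deviation of returns, usually annualized. The Python sketch below shows the standard calculation. The function name, the default of 252 trading periods per year, and the sample returns are assumptions, and the article does not state which conventions the paper used.

```python
import math
import statistics


def sharpe_ratio(returns, risk_free_rate=0.0, periods_per_year=252):
    """Annualized Sharpe ratio from per-period returns.

    risk_free_rate is annual and is spread evenly across periods.
    Uses the sample standard deviation.
    """
    excess = [r - risk_free_rate / periods_per_year for r in returns]
    mean_excess = statistics.fmean(excess)
    volatility = statistics.stdev(excess)
    if volatility == 0:
        raise ValueError("returns have zero variance")
    return mean_excess / volatility * math.sqrt(periods_per_year)


# Hypothetical daily returns.
daily = [0.01, -0.01, 0.02, 0.0]
print(round(sharpe_ratio(daily), 2))
```

Because the ratio scales return by volatility, a strategy can raise it either by earning more or by fluctuating less, so a higher Sharpe ratio does not by itself imply higher raw returns.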

Beyond the Black Box: Explainable AI and Transparent Decisions

To address the opacity often associated with complex machine learning models, researchers are increasingly utilizing techniques like SHAP Values and LIME to illuminate the decision-making process. These methods don’t simply offer predictions; they provide insights into why a model arrived at a specific conclusion. SHAP (SHapley Additive exPlanations) leverages concepts from game theory to assign each input feature an importance value for a particular prediction, while LIME (Local Interpretable Model-agnostic Explanations) approximates the model locally with a simpler, interpretable model. By revealing which factors most strongly influenced a prediction, these tools foster transparency and build trust in AI systems, particularly crucial in sensitive applications where understanding the rationale behind decisions is paramount. This interpretability isn’t merely academic; it allows for the identification of potential biases, validation of model logic, and ultimately, more informed and reliable AI-driven outcomes.
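The game-theoretic idea behind SHAP can be shown exactly on a tiny model. The Python sketch below computes Shapley values by averaging each feature's marginal contribution over every ordering in which features can be switched from a baseline value to their actual value. It is a brute-force illustration, not the `shap` library's API, and it is only feasible for a handful of features. The toy model and feature names are hypothetical.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.

    "Absent" features are represented by their baseline value.
    """
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        prev_output = model(current)
        for i in order:
            current[i] = x[i]  # switch feature i on
            output = model(current)
            totals[i] += output - prev_output
            prev_output = output
    return [t / len(orderings) for t in totals]


def toy_model(features):
    """Hypothetical score: 2 * momentum + sentiment * volatility."""
    momentum, sentiment, volatility = features
    return 2.0 * momentum + sentiment * volatility


phi = shapley_values(toy_model, x=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
print(phi)  # attributions for momentum, sentiment, volatility
```

The attributions sum to the difference between the prediction and the baseline output, and the interaction term is split evenly between the two features that jointly produce it.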

The ability to dissect the rationale behind each prediction generated by an AI model is fundamentally reshaping risk management protocols. Instead of treating algorithmic outputs as opaque directives, analysts can now pinpoint the precise variables influencing each decision, enabling proactive identification and mitigation of potential vulnerabilities. This granular understanding facilitates strategic adjustments to trading parameters, allowing for optimized portfolio construction and improved resilience against unforeseen market fluctuations. Furthermore, by revealing the underlying logic, organizations can validate model behavior, ensure alignment with regulatory requirements, and build confidence in the long-term sustainability of AI-driven financial strategies.

The pursuit of explainable artificial intelligence is not merely an academic exercise, but a catalyst for advancement in financial applications. By illuminating the reasoning behind AI-driven trading decisions, developers can refine strategies and build confidence in automated systems. Recent backtesting demonstrates a tangible benefit of this approach; models incorporating explainability features exhibited a maximum drawdown of -15.6%, representing a significant 28% reduction in potential losses compared to similar, less transparent systems. This enhanced risk management, coupled with the ability to understand and validate AI’s logic, is fostering wider adoption throughout the financial industry, suggesting a future where sophisticated algorithms and human oversight can work in concert to optimize investment outcomes.
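Maximum drawdown, the risk figure quoted above, is the largest peak-to-trough decline of the equity curve expressed as a fraction of the peak. A minimal Python sketch of the standard calculation follows. The function name and sample data are illustrative.

```python
def max_drawdown(equity_curve):
    """Largest peak-to-trough decline as a negative fraction (0.0 if none)."""
    peak = equity_curve[0]
    worst = 0.0
    for value in equity_curve:
        peak = max(peak, value)
        worst = min(worst, value / peak - 1.0)
    return worst


# Hypothetical equity path: the drop from 120 to 90 is the deepest decline.
print(max_drawdown([100, 120, 90, 130, 117]))  # -0.25
```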

The pursuit of consistently generating alpha, as detailed in this study, reveals a familiar human tendency: the belief in a controllable narrative. This system, blending technical analysis with the unpredictable currents of machine learning and sentiment, is a fascinating attempt to map the market’s chaos. Yet, it implicitly acknowledges the limits of prediction. As Mary Wollstonecraft observed, “The mind, when once enlightened, will never be content to remain in darkness.” This echoes the study’s core: a constant refinement of models, a perpetual striving for clarity even when faced with the inherent uncertainties of financial markets. The system isn’t about mastering the market, but illuminating its complexities, recognizing that even the most sophisticated theory can be swallowed by unforeseen events.

What’s Next?

The pursuit of profitable signals, encoded here in layers of technical indicators, machine learning, and parsed emotionality, resembles nothing so much as attempting to chart the currents of a boundless ocean with a handful of reeds. Each successful backtest, each period of outperformance, is merely a temporary reprieve – a calm surface concealing the inevitable chaos beneath. The system may adapt to past regimes, but the market, with its infinite capacity for novelty, will undoubtedly invent new ones, rendering even the most sophisticated algorithms obsolete.

The integration of sentiment analysis introduces a particularly fragile element. Human language is notoriously ambiguous, riddled with irony, sarcasm, and subtle shifts in meaning. To believe one can distill actionable signals from this morass is to mistake correlation for causation, to build a cathedral on sand. The system’s efficacy is, therefore, tethered to the continued relevance of the training data – a precarious foundation, given the accelerating pace of cultural and economic change.

Future iterations will undoubtedly focus on increasing complexity, incorporating more data, and refining the algorithms. But each calculation is an attempt to hold light in one’s hands, and it slips away. The true challenge lies not in finding the perfect trading strategy, but in acknowledging the inherent unknowability of the market – and the limits of any model, no matter how ingenious.

Original article: https://arxiv.org/pdf/2601.19504.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-28 06:42