Author: Denis Avetisyan

A new framework, EdgeMask-DG*, enhances the ability of graph neural networks to generalize to unseen environments by focusing on core structural features.

EdgeMask-DG* learns domain-invariant graph structures through adversarial edge masking, achieving state-of-the-art performance on several benchmarks.

Graph neural networks are vulnerable to performance degradation when faced with structural shifts across different datasets, as graph topology often acts as a confounding covariate. To address this, we introduce ‘EdgeMask-DG: Learning Domain-Invariant Graph Structures via Adversarial Edge Masking’, a novel framework that learns domain-invariant graph structures by adaptively masking edges through an adversarial process, effectively identifying robust, shared features. This approach, which extends to feature-enriched graphs, achieves state-of-the-art results on diverse benchmarks, notably improving worst-case domain accuracy on the Cora dataset to 78.0%. Could this paradigm of adversarial edge masking unlock more generalizable and robust graph neural networks capable of thriving in unseen environments?

Deconstructing the Graph: The Illusion of Static Data

Machine learning models applied to real-world graph data frequently suffer performance drops under distribution shift, meaning discrepancies between the data seen during training and the data encountered in deployment. These shifts are not minor variations but fundamental changes in the underlying data. They are also not limited to node features or edge attributes, because the structure of the graph itself can change. For example, a social network model trained on 2022 user activity may struggle to predict behavior or relationships in 2024 because of new users, evolving connections, newly formed communities, new platform features, and demographic shifts. The same problem affects fraud detection, knowledge graphs, and biological networks. Traditional machine learning approaches often assume stationary data, so they fall short here without specific adaptations. Models must instead stay accurate on previously unseen graph topologies and feature distributions.

Cross-graph node classification is a demanding benchmark for a model’s ability to generalize beyond its training data. The task is to predict node labels on a previously unseen graph that differs in size and structure from the graphs seen during training. In standard node classification, training and test nodes share a single graph and its properties. Cross-graph scenarios have no such shared graph, so they require representations that transfer across diverse topologies. Success depends on learning node representations that are not tied to the idiosyncrasies of the training graph, so that the model stays accurate under novel connectivity patterns. The difficulty of this task exposes the limitations of models trained on a single static graph and motivates research into more robust, adaptable graph representation learning.
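Cross-graph results such as the worst-case domain accuracy on Cora quoted earlier are typically reported per held-out target graph. The short sketch below shows one way to aggregate per-domain predictions into a mean accuracy and a worst-case accuracy. The domain names and predictions are made-up placeholders, not data from the paper, and the paper’s exact evaluation protocol may differ.

```python
from typing import Dict, List, Tuple

def domain_accuracies(results: Dict[str, Tuple[List[int], List[int]]]) -> Dict[str, float]:
    """Accuracy on each held-out target domain; results maps domain -> (y_true, y_pred)."""
    accs = {}
    for domain, (y_true, y_pred) in results.items():
        if not y_true or len(y_true) != len(y_pred):
            raise ValueError(f"invalid predictions for domain {domain!r}")
        correct = sum(t == p for t, p in zip(y_true, y_pred))
        accs[domain] = correct / len(y_true)
    return accs

def summarize(accs: Dict[str, float]) -> Tuple[float, str, float]:
    """Mean accuracy over domains, plus the worst domain and its accuracy."""
    mean_acc = sum(accs.values()) / len(accs)
    worst = min(accs, key=accs.get)
    return mean_acc, worst, accs[worst]

# Hypothetical predictions on three held-out graphs (placeholders, not paper results).
results = {
    "domain_A": ([0, 1, 1, 2], [0, 1, 1, 2]),  # 4/4 correct
    "domain_B": ([0, 0, 1, 2], [0, 1, 1, 2]),  # 3/4 correct
    "domain_C": ([2, 2, 1, 0], [2, 0, 0, 0]),  # 2/4 correct
}
accs = domain_accuracies(results)
mean_acc, worst, worst_acc = summarize(accs)
print(accs)                        # {'domain_A': 1.0, 'domain_B': 0.75, 'domain_C': 0.5}
print(mean_acc, worst, worst_acc)  # 0.75 domain_C 0.5
```

Reporting the minimum alongside the mean matters because an average can hide a single domain where the model fails badly.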

EdgeMask-DG: Forcing the System to Reveal Its Assumptions

EdgeMask-DG frames domain generalization as a min-max adversarial game between two neural networks, a TaskNet and a MaskNet. The TaskNet performs the primary task on the input graph, which is node classification in the benchmarks discussed here. The MaskNet learns a mask over the graph’s edges and is trained to maximize the TaskNet’s loss. It therefore seeks out the edges whose removal hurts the TaskNet most, and these include the domain-specific edges the TaskNet has learned to rely on. At the same time, the TaskNet is trained to minimize its loss on the masked graph. Iterating this game refines the MaskNet’s masks and forces the TaskNet to learn features that do not depend on any small set of domain-specific edges. The result is edge masks that promote domain invariance.

The EdgeMask-DG framework promotes domain generalization by intentionally degrading the input with masked edges and then training the TaskNet to remain accurate despite this degradation. This adversarial process compels the TaskNet to prioritize and utilize features that are not reliant on the masked, domain-specific edges. Consequently, the model learns to identify and focus on edges and features that are consistently predictive across multiple domains, enhancing its ability to generalize to unseen target domains where those specific edges may not be present or reliable. The TaskNet’s sustained performance under masking serves as a signal that it has effectively learned domain-invariant representations.
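The min-max game described above can be sketched in NumPy. This is a minimal illustration built on several assumptions that do not come from the paper:

- The TaskNet is a single feature-aggregation step followed by a linear softmax classifier.
- The MaskNet is replaced by one free logit per edge (hypothetical array `S`).
- The masker’s sparsity term is a hypothetical penalty `lam` on the fraction of edge mass it drops, which stops it from simply deleting the whole graph.

The masker takes gradient-ascent steps on the task loss, and the TaskNet takes gradient-descent steps.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def forward(A, X, W, S):
    """TaskNet stand-in: one aggregation over the soft-masked graph, then a linear classifier."""
    M = sigmoid(S) * A                     # keep-probability for each existing edge
    H = (M + np.eye(len(A))) @ X           # self-loops are never masked
    return M, H, softmax(H @ W)

def task_loss(P, y):
    return -np.mean(np.log(P[np.arange(len(y)), y] + 1e-12))

def train_minmax(A, X, y, n_classes, steps=300, lr_task=0.5, lr_mask=0.5, lam=0.5, seed=0):
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = 0.01 * rng.standard_normal((d, n_classes))  # TaskNet weights
    S = np.full((n, n), 3.0)                        # hypothetical per-edge mask logits; sigmoid(3) ~ 0.95
    Y = np.eye(n_classes)[y]
    n_edges = A.sum()
    for _ in range(steps):
        # TaskNet step: gradient descent on the task loss under the current mask.
        _, H, P = forward(A, X, W, S)
        W -= lr_task * H.T @ ((P - Y) / n)
        # Mask step: gradient ascent on task_loss - lam * (fraction of edge mass dropped).
        _, H, P = forward(A, X, W, S)
        dH = ((P - Y) / n) @ W.T           # d loss / d H
        dM = (dH @ X.T) * A                # d loss / d M, restricted to existing edges
        d_obj = dM + lam * A / n_edges     # the penalty pushes the masker to keep edges
        s = sigmoid(S)
        S += lr_mask * d_obj * s * (1 - s)  # chain rule through the sigmoid
    return W, S

# Toy graph: two 3-node groups plus one cross-group edge (2, 3).
A = np.zeros((6, 6))
for i, j in [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]:
    A[i, j] = A[j, i] = 1.0
X = np.random.default_rng(1).standard_normal((6, 4))
y = np.array([0, 0, 0, 1, 1, 1])
W, S = train_minmax(A, X, y, n_classes=2)
mask = sigmoid(S) * A
print(np.round(mask, 2))
print("task loss under learned mask:", task_loss(forward(A, X, W, S)[2], y))
```

Alternating one gradient step per player is the simplest way to play the game. A practical version would more likely use automatic differentiation and a MaskNet that predicts masks from node or edge features, so that it can be applied to graphs it has not seen.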

Amplifying the Signal: Enriched Graphs and Adversarial Refinement

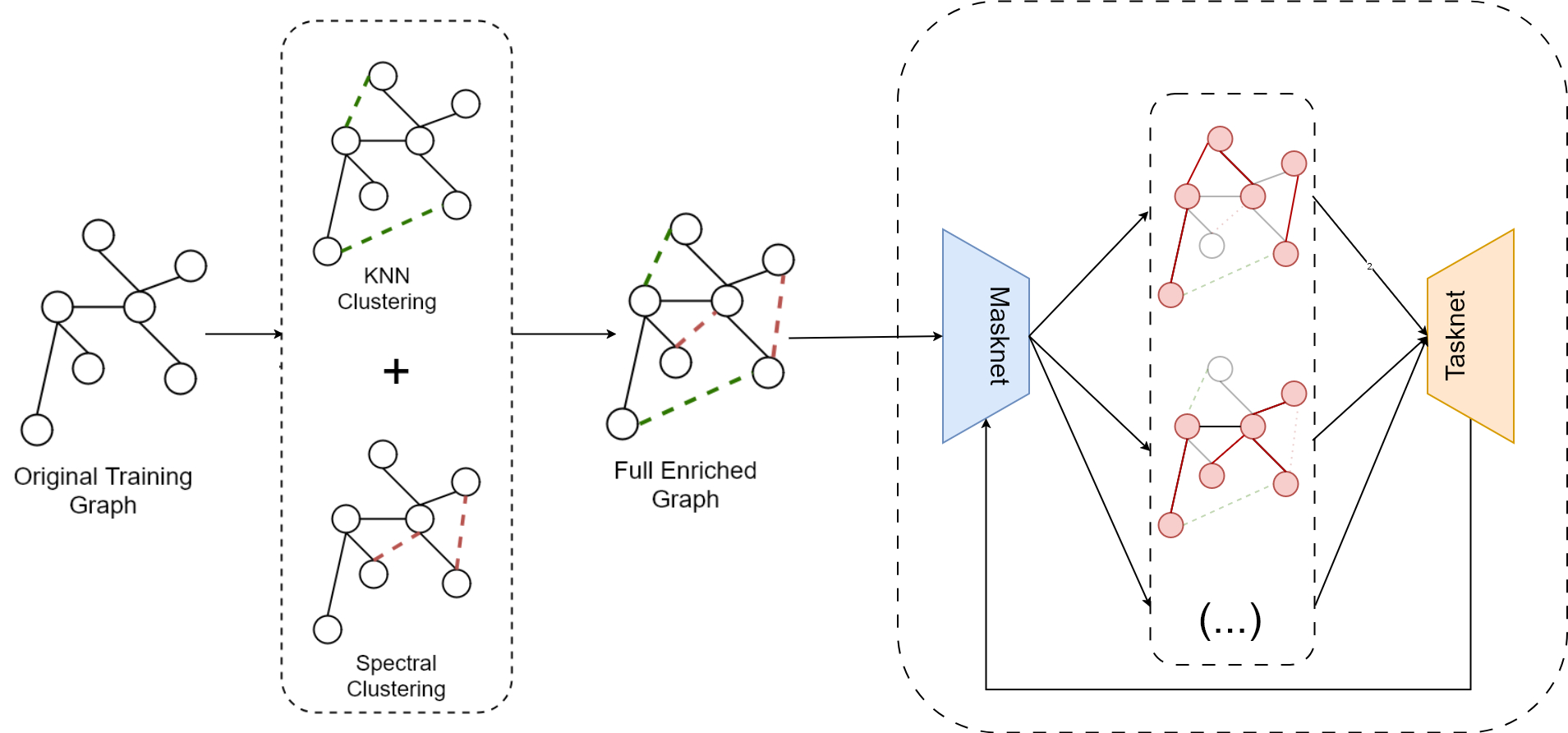

EdgeMask-DG* enriches the graph representation by adding edges between nodes whose feature vectors are similar, on top of the edges already present in the original structure. With k-Nearest Neighbors (k-NN), each node is linked to the k nodes most similar to it under a chosen distance metric. The added edges increase the density of the graph and give the TaskNet access to relationships beyond the original adjacency matrix. This expanded graph is the input to adversarial training, which supports better generalization to unseen graph domains.
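The sketch below illustrates k-NN enrichment in NumPy. It assumes cosine similarity as the metric and takes a symmetric union with the original adjacency matrix. The paper’s exact metric, value of k, and symmetrization may differ, and `knn_enrich` is a hypothetical helper name.

```python
import numpy as np

def knn_enrich(A: np.ndarray, X: np.ndarray, k: int = 1) -> np.ndarray:
    """Hypothetical helper: union of A with a symmetric k-NN graph built from node features."""
    norms = np.maximum(np.linalg.norm(X, axis=1, keepdims=True), 1e-12)
    Xn = X / norms
    sim = Xn @ Xn.T                          # cosine similarity between all node pairs
    np.fill_diagonal(sim, -np.inf)           # a node is never its own neighbour
    nbrs = np.argsort(-sim, axis=1)[:, :k]   # k most similar nodes per row
    K = np.zeros_like(A)
    K[np.repeat(np.arange(len(A)), k), nbrs.ravel()] = 1.0
    K = np.maximum(K, K.T)                   # make the k-NN relation symmetric
    return np.maximum(A, K)                  # keep every original edge, add the new ones

# Two feature groups; the original graph has a single edge 0-2 across them.
X = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
A = np.zeros((4, 4))
A[0, 2] = A[2, 0] = 1.0
A_enr = knn_enrich(A, X, k=1)
print(np.argwhere(np.triu(A_enr)).tolist())  # [[0, 1], [0, 2], [2, 3]]
```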

Spectral Clustering provides a second enrichment route, which works in four steps:

- It builds a similarity matrix from node features.
- It embeds the nodes using the eigenvectors of the corresponding graph Laplacian that have the smallest eigenvalues.
- It partitions this embedding into clusters.
- It adds edges between nodes in the same cluster.

With either method, the added edges highlight implicit relationships between nodes that are not directly connected in the original graph. This gives downstream tasks a more comprehensive view of node relationships.
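The spectral route can be sketched in NumPy. The sketch assumes an RBF similarity with bandwidth `sigma`, the symmetric normalized Laplacian with row-normalized eigenvectors (the Ng-Jordan-Weiss variant), and plain k-means with farthest-point initialization. These choices, and the helper names `spectral_labels` and `spectral_enrich`, are illustrative assumptions rather than the paper’s implementation. In practice, a library implementation such as scikit-learn’s `SpectralClustering` would normally replace the hand-written clustering.

```python
import numpy as np

def spectral_labels(X: np.ndarray, n_clusters: int, sigma: float = 1.0, iters: int = 50) -> np.ndarray:
    """Cluster nodes by features: RBF affinity -> normalized Laplacian eigenvectors -> k-means."""
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)     # pairwise squared distances
    W = np.exp(-sq / (2 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(W.sum(1), 1e-12))
    L = np.eye(len(X)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(L)                              # eigenvalues in ascending order
    U = vecs[:, :n_clusters]                                 # eigenvectors of the smallest eigenvalues
    U = U / np.maximum(np.linalg.norm(U, axis=1, keepdims=True), 1e-12)
    # k-means on the rows of U, with deterministic farthest-point initialization.
    centers = [U[0]]
    for _ in range(1, n_clusters):
        dist = np.min([((U - c) ** 2).sum(1) for c in centers], axis=0)
        centers.append(U[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(iters):
        labels = np.argmin(((U[:, None, :] - centers[None, :, :]) ** 2).sum(-1), axis=1)
        for c in range(n_clusters):
            if np.any(labels == c):
                centers[c] = U[labels == c].mean(0)
    return labels

def spectral_enrich(A: np.ndarray, X: np.ndarray, n_clusters: int, sigma: float = 1.0):
    """Hypothetical helper: add an edge between every pair of nodes in the same cluster."""
    labels = spectral_labels(X, n_clusters, sigma)
    same = (labels[:, None] == labels[None, :]).astype(float)
    np.fill_diagonal(same, 0.0)
    return np.maximum(A, same), labels

X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [3.0, 3.0], [3.1, 3.0], [3.0, 3.1]])
A = np.zeros((6, 6))
A[2, 3] = A[3, 2] = 1.0                                      # one original cross-group edge
A_enr, labels = spectral_enrich(A, X, n_clusters=2)
print(labels.tolist())              # [0, 0, 0, 1, 1, 1]
print(int(np.triu(A_enr).sum()))    # 7: two 3-node cliques plus the original edge
```

Connecting every pair inside a cluster can add many edges on large graphs. That density is one reason the adversarial masker, which can later suppress unhelpful edges, pairs naturally with this enrichment.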

Combining the enriched graph with adversarial masking yields measurable gains on unseen graph domains. On the citation networks ACM, DBLP, and Citation, the method reaches an average Micro-F1 score of 73.81, outperforming existing methods. Ablation studies show improvements of 0.5 to 2.3 percentage points over Empirical Risk Minimization (ERM). The lift is attributed to two things acting together: data augmentation through enriched graph construction, and targeted edge masking during adversarial training.
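Micro-F1 pools true positives, false positives, and false negatives over all classes before computing precision and recall. A small pure-Python sketch follows. In single-label multi-class node classification, each wrong prediction counts as exactly one false positive and one false negative, so micro-F1 coincides with plain accuracy.

```python
def micro_f1(y_true, y_pred, labels):
    """Micro-averaged F1: pool TP/FP/FN over all classes, then compute precision and recall."""
    tp = fp = fn = 0
    for c in labels:
        for t, p in zip(y_true, y_pred):
            if p == c and t == c:
                tp += 1
            elif p == c:
                fp += 1          # predicted c, but the true label differs
            elif t == c:
                fn += 1          # true label c, but the prediction differs
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

y_true = [0, 1, 2, 2, 1]
y_pred = [0, 2, 2, 2, 1]
print(round(micro_f1(y_true, y_pred, labels=[0, 1, 2]), 4))  # 0.8, same as accuracy (4/5)
```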

Beyond Static Models: Implications for a Dynamic Reality

Graph machine learning frequently suffers performance degradation when applied to data that differs from the training environment, a phenomenon known as domain shift. Data distributions in real-world applications are rarely static, and each shift can otherwise demand costly, time-consuming retraining. The proposed framework addresses this by learning domain-invariant representations. Rather than memorizing patterns unique to one dataset, its masking strategy pushes the network toward the core, domain-agnostic relational information that persists across diverse graph structures and node features. Models trained this way can maintain robust performance on previously unseen data without extensive fine-tuning. This moves graph machine learning away from brittle, dataset-specific solutions and toward more flexible, broadly applicable systems.

Evaluations demonstrate the efficacy of EdgeMask-DG across diverse graph datasets. On the Photo benchmark, the framework reaches 94.8% accuracy, more than two percentage points above current state-of-the-art methods. On the Twitch benchmark, it attains an ROC-AUC of 59.3%, an improvement over existing approaches on a metric where 50% corresponds to chance. Adding sparsity regularization to the MaskNet encourages it to mask only a small subset of edges. This improves computational efficiency and makes the learned masks easier to interpret, which eases practical deployment in real-world graph machine learning applications.
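ROC-AUC, the metric reported for Twitch, equals the probability that a randomly chosen positive node receives a higher score than a randomly chosen negative node, with ties counting half. That is why 0.5 marks chance level. The quadratic-time sketch below implements this pairwise definition directly. It is fine for illustration but too slow for large graphs.

```python
def roc_auc(y_true, scores):
    """Pairwise ROC-AUC: fraction of (positive, negative) pairs ranked correctly, ties count 1/2."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs at least one positive and one negative example")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))

print(roc_auc([1, 0, 1, 0], [0.9, 0.8, 0.3, 0.1]))  # 0.75: 3 of 4 pairs ordered correctly
print(roc_auc([1, 0], [0.5, 0.5]))                  # 0.5: a tie is chance level
```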

The pursuit of domain generalization, as demonstrated by EdgeMask-DG*, isn’t about eliminating variance, but about discerning the signal within it. The framework’s adaptive edge masking strategy mirrors a process of controlled demolition: dismantling superficial connections to reveal the core structural integrity. This resonates with Blaise Pascal’s observation: “The eloquence of youth is that it knows nothing.” The system, initially naive to domain specifics, learns by ‘breaking’ connections (masking edges) to discover the underlying, domain-invariant graph structures that truly matter. It’s a reminder that true understanding often emerges from deconstruction, and that robust knowledge is built not on rigid adherence but on adaptable foundations.

What Breaks Down Next?

The assertion that a bug is the system confessing its design sins holds particular resonance when considering EdgeMask-DG. This work rightly identifies the fragility inherent in graph neural networks – their tendency to latch onto spurious correlations specific to a training domain. The adaptive edge masking is a clever intervention, a controlled demolition of the easily fooled parts of the network. But it merely addresses symptoms. The underlying problem remains: graph structure, despite its intuitive appeal, is often a messy, overparameterized signal. Future work must confront the question of what constitutes genuinely meaningful structural information, and how to distill it rather than merely mask the noise.

The current paradigm largely treats graphs as fixed entities, but what if the ‘domain-invariant’ features aren’t static? Dynamic graphs, constantly rewiring themselves, expose the limitations of approaches built on the assumption of structural stability. A truly robust system will need to anticipate, even embrace, structural change. Perhaps the next step isn’t better masking, but a network capable of learning the rules governing graph evolution, treating structure not as a constraint but as a variable to be modeled.

Finally, the benchmarks themselves deserve scrutiny. State-of-the-art results are, by definition, transient. The true test of generalization isn’t performance on existing datasets, but graceful degradation on entirely novel graph topologies: structures that actively resist the biases encoded in current training regimes. The goal shouldn’t be to achieve higher scores, but to build systems that fail interestingly, revealing through their failures the fundamental limits of graph-based representation.

Original article: https://arxiv.org/pdf/2602.05571.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-08 08:31