Author: Denis Avetisyan

The ARC Prize 2025 technical report details significant progress toward artificial general intelligence, revealing how systems are learning to improve themselves through iterative refinement.

This paper analyzes the emergence of refinement loops within the ARC-AGI benchmark and proposes adaptive evaluation methods for increasingly capable AI systems.

Despite advances in artificial intelligence, achieving robust few-shot generalization remains a central challenge in the pursuit of artificial general intelligence (AGI). This ‘ARC Prize 2025: Technical Report’ details the results of the latest ARC-AGI competition, revealing a growing trend toward ‘refinement loops’-iterative program optimization strategies-as a key driver of progress. Our analysis of the competition, which attracted over 1,455 teams, demonstrates that current reasoning capabilities are increasingly constrained by knowledge coverage, raising concerns about benchmark contamination. With the introduction of ARC-AGI-3 featuring interactive reasoning challenges, can we develop benchmarks that truly assess adaptive intelligence and move beyond superficial performance?

The Illusion of Intelligence: Why Current Benchmarks Fall Short

Many contemporary artificial intelligence assessments inadvertently prioritize rote learning over genuine cognitive flexibility. Existing benchmarks frequently reward systems capable of memorizing vast datasets and recognizing patterns within them, rather than those demonstrating an ability to apply knowledge to unfamiliar situations or to reason through complex, multi-step problems. This emphasis on memorization creates a skewed perception of progress, as AI can achieve high scores on these tests without possessing the adaptable, compositional reasoning characteristic of human intelligence. Consequently, these benchmarks often fail to distinguish between systems that truly understand concepts and those merely adept at statistical pattern matching, hindering the development of AI capable of generalized problem-solving and innovative thought.

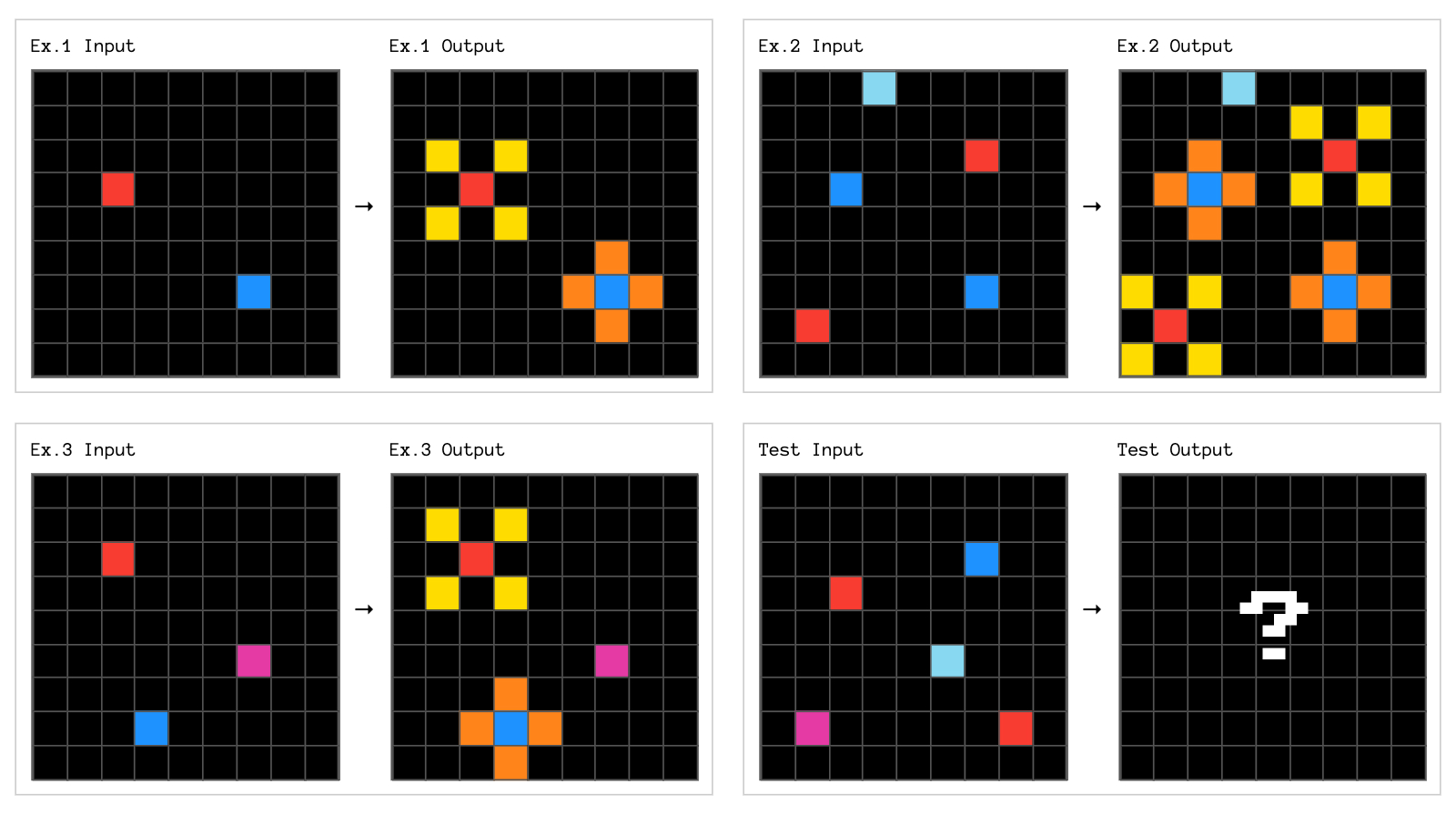

The ARC-AGI benchmark represents a significant departure from conventional AI assessments by prioritizing compositional reasoning and the ability to solve entirely new problems. Unlike many existing tests that reward pattern recognition or memorization of training data, ARC-AGI presents tasks requiring a system to combine existing knowledge in novel ways – essentially, to ‘think’ rather than ‘recall’. This is achieved through a series of questions designed to evaluate an AI’s capacity to understand causal relationships, apply abstract principles, and generalize learned information to previously unseen scenarios. The benchmark deliberately avoids relying on statistical correlations present in large datasets, instead focusing on whether a system possesses a genuine understanding of the underlying concepts, pushing the boundaries of what constitutes true artificial general intelligence.

The ARC-AGI benchmark reveals that simply increasing the size of an artificial intelligence model is insufficient for achieving genuine general intelligence. Success on these tasks-which require combining existing knowledge to solve entirely new problems-demands a foundation of core knowledge and the capacity for adaptable learning, mirroring human cognitive abilities. Notably, human testers consistently achieve perfect scores on the more challenging ARC-AGI-2 tasks, demonstrating that these benchmarks aren’t impossibly difficult, but rather highlight the crucial need for AI systems to move beyond pattern recognition and embrace robust, compositional reasoning – a feat well within the realm of possibility, as proven by human performance.

Stripping it Bare: Learning From Scratch, the Hard Way

Zero-pretraining, as applied in this research, denotes a deep learning methodology that eschews the conventional practice of initializing models with weights derived from large, publicly available datasets. Instead, models are constructed and trained entirely from random initialization, relying solely on the task-specific training data provided. This approach contrasts with transfer learning, where pre-trained weights are fine-tuned for a new task, and aims to reduce reliance on potentially biased or irrelevant information contained within those pre-trained datasets. The rationale is to foster greater adaptability and efficiency, particularly in scenarios where task-specific data is limited or where the pre-training data distribution diverges significantly from the target application.

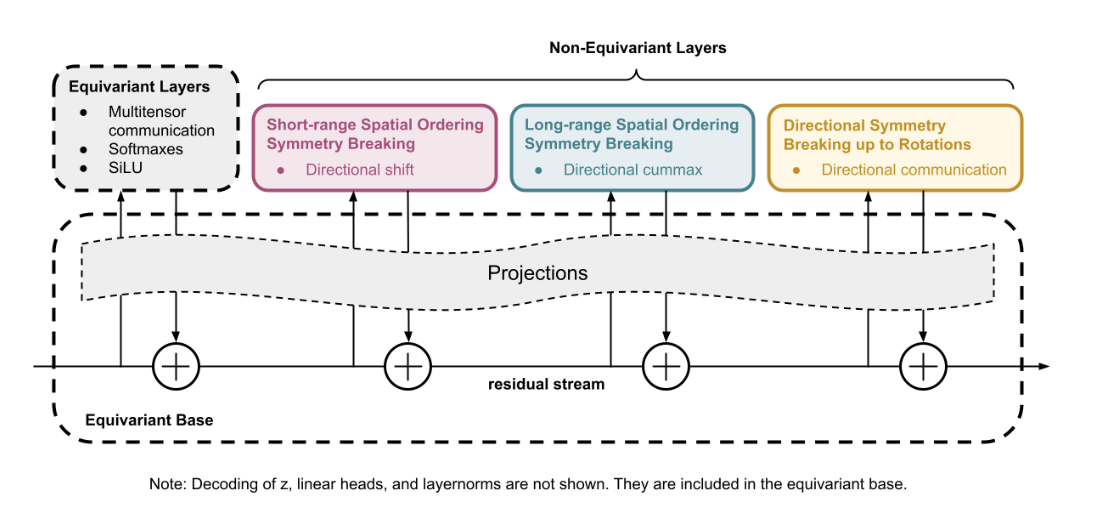

The training methodology employs a continuous refinement loop to enhance model performance through iterative feedback. This process doesn’t rely on a fixed training dataset; instead, the model is repeatedly evaluated, and its parameters are adjusted based on its performance on a stream of tasks or data points. Each iteration involves assessing the model’s outputs, identifying areas for improvement, and updating the model’s weights to minimize errors or maximize rewards. This cyclical approach allows the model to adapt to the specific characteristics of the task distribution and progressively refine its capabilities without the need for extensive pre-training or large datasets, promoting both efficiency and adaptability.

Model refinement is guided by the Minimum Description Length (MDL) principle, which prioritizes model simplicity to prevent overfitting and encourage generalization to unseen data. This approach favors models that can accurately represent the data with the fewest parameters, effectively balancing accuracy and complexity. Demonstrating this efficiency, the CompressARC model-parameterized with only 76,000 elements-achieved a score of 20% on the ARC-AGI-1 benchmark and 4% on the more challenging ARC-AGI-2, indicating a capacity for reasoning with limited resources.

Evidence, Not Hype: Performance on the AGI Frontlines

Evaluation on the ARC-AGI-2 dataset demonstrates state-of-the-art performance achieved through the implementation of test-time training, a method of adaptive learning performed during inference. This approach yielded a score of 24% on the ARC-AGI-2 private dataset, representing a current performance benchmark. Test-time training allows the model to refine its responses based on the specific challenges presented within the dataset itself, without requiring updates to model weights, and contributes to improved generalization capabilities.

Evaluation on ARC-AGI datasets indicates that a training paradigm focused on learning from scratch, combined with iterative refinement, yields performance improvements over approaches reliant on pre-trained models. This methodology addresses inherent limitations found in pre-trained models when applied to complex reasoning tasks. Specifically, the ability to adapt and refine understanding through repeated exposure and adjustment during training allows the model to overcome constraints imposed by initial parameter settings and potentially biased or incomplete pre-training data, leading to gains in areas requiring novel problem-solving capabilities.

Comparative performance analysis utilized Gemini 3 as a baseline to demonstrate the efficacy of the implemented training paradigm and compositional approach. Specifically, the Tiny Recursive Model (TRM), with a parameter count of only 7 million, achieved a 45% test accuracy on the ARC-AGI-1 dataset and 8% on the more challenging ARC-AGI-2 dataset, providing a quantifiable measure of improvement over existing models when considering parameter efficiency.

Beyond Static Problems: The Future of Interactive Reasoning

The advent of ARC-AGI-3 signifies a pivotal move in artificial intelligence assessment, moving beyond static problem-solving to emphasize interactive reasoning. This next-generation benchmark isn’t simply about providing an agent with a challenge and evaluating its initial response; instead, it probes the system’s capacity to learn through interaction with a dynamic environment. The focus is on adaptability – can the agent refine its strategies based on feedback, acquire new knowledge during the task, and ultimately improve its performance over time? This shift demands agents that aren’t merely programmed with solutions, but possess genuine learning capabilities, mirroring the way humans navigate complex, real-world scenarios where information is incomplete and strategies must evolve. By prioritizing interactive reasoning, ARC-AGI-3 aims to identify systems capable of true cognitive flexibility, a cornerstone of general intelligence.

The evolution of artificial general intelligence (AGI) is increasingly focused on dynamic, interactive scenarios, necessitating a departure from static problem-solving. Future agents must move beyond simply finding solutions to actively learning how to solve problems more efficiently through experience. This demands systems capable of building internal models of their environments, formulating hypotheses, and adapting strategies based on real-time feedback. Rather than relying solely on pre-programmed knowledge, these agents will acquire information during interactions, effectively refining their understanding and improving performance over time-a process mirroring human learning and adaptability. The ability to learn and refine strategies ‘on the fly’ represents a crucial step towards creating AGI systems that can navigate the complexities of the real world and generalize beyond the limitations of their initial training.

The pursuit of Artificial General Intelligence (AGI) is notably propelled by initiatives like the ARC Prize, which cultivates a collaborative environment for researchers and innovators. This competition doesn’t merely benchmark performance; it actively fosters shared learning and accelerates the development of increasingly sophisticated agents. The substantial increase in submissions for the ARC Prize 2025 – reaching 90 papers compared to 47 in the previous year – demonstrates a rapidly expanding and engaged community dedicated to tackling the challenges of interactive reasoning and, ultimately, achieving true AGI. This surge in participation highlights the Prize’s growing influence as a central hub for progress in the field, facilitating the exchange of ideas and driving innovation at an unprecedented rate.

The pursuit of AGI, as outlined in this report detailing the ARC-AGI benchmark, feels less like building a thinking machine and more like constructing an elaborate Rube Goldberg device. Each ‘refinement loop’-a clever attempt to nudge the system toward general reasoning-simply introduces another potential point of failure. It’s a predictable outcome, really. As Andrey Kolmogorov observed, “The most important thing in science is not to be afraid of making mistakes.” The researchers detail an adaptive benchmarking process, chasing a moving target of increasing model capabilities. One can’t help but suspect that any benchmark robust enough to truly measure ‘general’ intelligence will be quickly rendered obsolete, becoming another artifact for future digital archaeologists to puzzle over. It’s the same mess, just more expensive.

What’s Next?

The pursuit of artificial general intelligence, as evidenced by frameworks like ARC-AGI, continues to generate increasingly sophisticated systems. However, the observed ‘refinement loops’-models iteratively improving themselves-merely shift the problem. The true challenge isn’t creating intelligence, but anticipating its failure modes. Each solved benchmark becomes a new vector for unexpected exploits. Tests are, after all, a form of faith, not certainty.

Adaptive benchmarking, while necessary, feels less like scientific progress and more like an escalating arms race. As models gain capabilities, the metrics used to assess them become both more complex and more easily gamed. The focus on abstract reasoning, while laudable, risks prioritizing elegant solutions over robust performance in messy, real-world scenarios. The system that doesn’t crash on Monday mornings will ultimately prove more valuable than the one that achieves a perfect score in a controlled environment.

The field now faces a critical juncture. The question isn’t whether machines can think-they demonstrably can, within defined parameters-but whether those thoughts can be reliably aligned with human intent. The history of software development suggests that the most ingenious architectures inevitably succumb to edge cases. It’s a comforting thought, in a bleakly pragmatic way.

Original article: https://arxiv.org/pdf/2601.10904.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Top 15 Insanely Popular Android Games

- EUR UAH PREDICTION

- 4 Reasons to Buy Interactive Brokers Stock Like There’s No Tomorrow

- Did Alan Cumming Reveal Comic-Accurate Costume for AVENGERS: DOOMSDAY?

- Silver Rate Forecast

- DOT PREDICTION. DOT cryptocurrency

- Gold Rate Forecast

- ELESTRALS AWAKENED Blends Mythology and POKÉMON (Exclusive Look)

- New ‘Donkey Kong’ Movie Reportedly in the Works with Possible Release Date

- Core Scientific’s Merger Meltdown: A Gogolian Tale

2026-01-19 20:38