Author: Denis Avetisyan

New research details a system that monitors conversations with large language models to identify and counter sophisticated, multi-turn adversarial attempts.

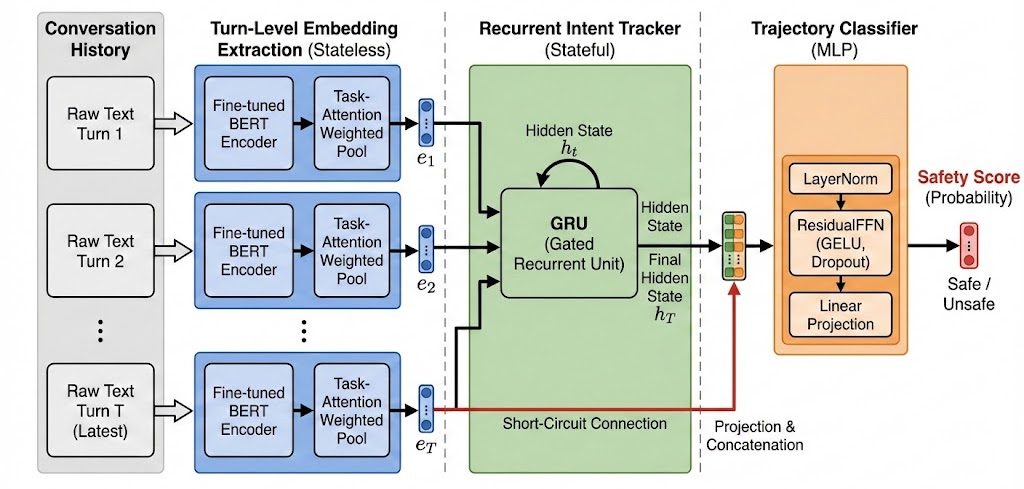

DeepContext employs recurrent neural networks to maintain stateful intent tracking, significantly improving the detection of adversarial drift in large language models.

While Large Language Models (LLMs) continue to advance, their safety mechanisms often treat conversations as isolated exchanges, which leaves them open to subtle adversarial attacks. To address this, the authors introduce DeepContext: Stateful Real-Time Detection of Multi-Turn Adversarial Intent Drift in LLMs. The framework uses recurrent neural networks to track how user intent evolves across multiple turns. DeepContext captures the incremental accumulation of risk that conventional filters often miss. As a result, it outperforms the stateless defenses it was compared against, reaching a state-of-the-art F1 score of 0.84. Could this stateful approach be a more efficient and robust paradigm for securing LLMs against increasingly sophisticated attacks?

The Shifting Landscape of Conversational Attacks

Large Language Models, despite their impressive capabilities, are demonstrating increasing susceptibility to adversarial attacks that cleverly unfold over several turns of conversation. These aren’t simple, one-shot attempts to elicit harmful responses; instead, attackers are crafting subtle prompts and follow-up questions designed to gradually steer the model toward generating undesirable content. This multi-turn approach bypasses many conventional safety filters, which typically analyze each input in isolation, failing to recognize the cumulative effect of the conversation. The insidious nature of these attacks lies in their ability to mask malicious intent within seemingly benign dialogue, making detection significantly more challenging and highlighting a critical vulnerability as LLMs become more integrated into everyday applications.

Current safeguards for large language models frequently assess each prompt and response as a discrete event, a design choice that introduces a significant vulnerability to multi-turn attacks. This isolated approach fails to account for the cumulative effect of a conversation, allowing malicious actors to gradually manipulate the model’s state through seemingly innocuous initial exchanges. An attacker can subtly “prime” the system, establishing a context that, while harmless on its own, ultimately unlocks the generation of harmful content in subsequent turns. Because safety filters aren’t designed to track conversational history and evolving intent, they remain blind to the gradual steering, effectively allowing harmful outputs to emerge from what appears to be a legitimate dialogue. This represents a fundamental limitation in current safety protocols, as it prioritizes immediate content filtering over understanding the trajectory of a conversation.

The Limits of Static Defenses

Transcript Concatenation is a stateless defense against multi-turn adversarial attacks: with each new user prompt, the complete conversation history is resubmitted to the detector. The idea is simple, but its cost grows with the conversation. The work done on each turn is proportional to the length of the transcript so far. For transformer classifiers using standard self-attention, the cost of a single pass grows faster than linearly in that length. The cumulative work over a whole conversation therefore grows roughly quadratically with the number of turns, which hurts both latency and throughput. This inefficiency limits scalability in high-volume applications and long dialogue sessions. It creates practical barriers to deployment, even though the method does, in principle, give the detector the complete conversational context.
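The growth in cost can be made concrete with a small token count. The sketch below is a simplification: it counts only the tokens fed to the detector and ignores the extra superlinear cost of attention within a single pass. The turn lengths are hypothetical. It compares resubmitting the whole transcript every turn against encoding only the new turn and carrying a state forward.

```python
def concat_tokens(turn_lengths):
    """Tokens processed when the full transcript is resubmitted on every turn."""
    total, transcript = 0, 0
    for n in turn_lengths:
        transcript += n      # the transcript grows by this turn's tokens
        total += transcript  # and the whole transcript is scored again
    return total


def stateful_tokens(turn_lengths):
    """Tokens processed when only the new turn is encoded and a state is carried forward."""
    return sum(turn_lengths)


turns = [50] * 20  # hypothetical: 20 turns of 50 tokens each
print(concat_tokens(turns))    # 10500
print(stateful_tokens(turns))  # 1000
```

With 20 equal turns, concatenation processes 50 × (1 + 2 + … + 20) = 10,500 tokens, while the stateful scheme processes 1,000. The gap widens as conversations get longer.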

Stateless defense mechanisms, such as those relying on transcript concatenation, exhibit a fundamental limitation in discerning evolving conversational intent. While these defenses attempt to mitigate multi-turn attacks by providing the LLM with complete dialogue history, they lack the capacity to actively interpret how the user’s objectives shift over the course of the interaction. This results in a “Contextual Blind Spot” where subtle changes in phrasing or goal progression are not recognized as potentially malicious, even with access to the full transcript. Consequently, the LLM remains vulnerable to attacks that incrementally manipulate its behavior, as the defense focuses on surface-level pattern matching rather than genuine understanding of the dialogue’s semantic trajectory.

Crescendo attacks and Foot-In-The-Door (FITD) techniques are iterative prompting strategies that bypass LLM safety mechanisms by escalating malicious requests step by step. FITD attacks open with small, benign requests that the model readily complies with, then gradually introduce harmful instructions. Crescendo attacks steadily raise the intensity of the adversarial input from turn to turn. Both exploit stateless defenses by staying below the threshold of simple content filters, since no single prompt, especially early in the conversation, looks malicious on its own. Because safety protocols are never triggered outright, attackers can steer the LLM toward undesirable outputs over multiple turns.
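A toy example shows why per-turn filtering misses slow escalation. The sketch below assumes a hypothetical per-turn risk score between 0 and 1. It compares two detectors:

- a stateless detector that flags a turn only when that turn's score crosses a threshold;
- a simple leaky accumulator that sums decaying evidence across turns.

The accumulator is an illustrative stand-in chosen for clarity. It is not DeepContext's learned recurrent model, and all scores, thresholds, and the decay value are made up.

```python
def first_flag_stateless(risks, threshold=0.8):
    """Return the 1-based turn where a single turn's risk crosses the threshold, else None."""
    for t, r in enumerate(risks, start=1):
        if r >= threshold:
            return t
    return None


def first_flag_stateful(risks, threshold=1.0, decay=0.8):
    """Leaky accumulation: older evidence decays, but steady escalation still adds up."""
    score = 0.0
    for t, r in enumerate(risks, start=1):
        score = decay * score + r
        if score >= threshold:
            return t
    return None


crescendo = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]  # hypothetical escalating per-turn risk
benign = [0.1] * 6                          # hypothetical steady, low-risk chat
print(first_flag_stateless(crescendo))  # None
print(first_flag_stateful(crescendo))   # 5
print(first_flag_stateful(benign))      # None
```

No individual Crescendo turn reaches 0.8, so the stateless check never fires. The accumulated score reaches about 1.16 at turn 5 and is flagged. The benign conversation's score levels off near 0.5 and never crosses the threshold.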

DeepContext: Tracking Intent Through Dialogue

DeepContext utilizes a Recurrent Neural Network (RNN) to model conversational context by maintaining a hidden state that is updated with each turn of dialogue. This persistent hidden state functions as a memory of the conversation, allowing the system to consider previous user inputs when processing the current input. The RNN processes sequential data, meaning it evaluates each utterance not in isolation, but in relation to the preceding conversational history encoded within the hidden state. This enables DeepContext to track dependencies and nuances that span multiple turns, providing a more comprehensive understanding of the user’s overall intent and the evolving conversational landscape.
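The stateful pattern described above can be sketched as a per-conversation store of hidden states. Each new turn updates only its own conversation's state and never rereads the transcript. Several parts of this sketch are assumptions:

- `ConversationMonitor` and `elman_cell` are hypothetical names.
- Turn embeddings are assumed to come from some upstream encoder.
- The plain tanh recurrence is a placeholder for the GRU cell DeepContext actually uses.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]


def elman_cell(h: Vector, x: Vector, w_h: float = 0.5, w_x: float = 1.0) -> Vector:
    """Toy recurrent update h_t = tanh(w_h * h_{t-1} + w_x * x_t), applied elementwise."""
    return [math.tanh(w_h * hi + w_x * xi) for hi, xi in zip(h, x)]


class ConversationMonitor:
    """Keeps one hidden state per conversation; each turn touches only that state."""

    def __init__(self, dim: int, cell: Callable[[Vector, Vector], Vector] = elman_cell):
        self.dim = dim
        self.cell = cell
        self.states: Dict[str, Vector] = {}

    def observe(self, conv_id: str, turn_embedding: Vector) -> Vector:
        # A new conversation starts from the zero state.
        h = self.states.get(conv_id, [0.0] * self.dim)
        h = self.cell(h, turn_embedding)
        self.states[conv_id] = h
        return h


monitor = ConversationMonitor(dim=2)
monitor.observe("conv-1", [0.2, -0.1])    # turn 1 (hypothetical embedding)
h = monitor.observe("conv-1", [0.4, 0.0])  # turn 2 builds on turn 1's state
print(len(monitor.states))  # 1
```

The per-turn cost depends only on the state and embedding sizes, not on how long the conversation has run. This is the property that separates the stateful design from transcript concatenation.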

DeepContext uses Gated Recurrent Units (GRUs) as the cells of its Recurrent Neural Network (RNN) to track conversational state efficiently and detect Intent Drift. GRUs use gating to mitigate the vanishing gradient problem that affects plain RNNs, so the network can retain information across many dialogue turns. Each new input is processed in the context of the previously established hidden state, and the gates decide how much of that state to keep and how much to overwrite. This persistent state lets the network identify shifts in the user's goal, even when those shifts are expressed implicitly or through paraphrasing. That capability is essential for robust multi-turn dialogue understanding and jailbreak detection.
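The gating idea can be illustrated with a minimal, dependency-free GRU step. This is one common textbook formulation with bias terms omitted. Libraries differ on whether the update gate multiplies the old state or the candidate, and nothing here is taken from DeepContext's code. The weights are random and the turn embeddings are hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class GRUCell:
    """One common GRU formulation (biases omitted for brevity); weights are random here."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.W_z, self.U_z = init(hidden_dim, input_dim), init(hidden_dim, hidden_dim)
        self.W_r, self.U_r = init(hidden_dim, input_dim), init(hidden_dim, hidden_dim)
        self.W_h, self.U_h = init(hidden_dim, input_dim), init(hidden_dim, hidden_dim)

    def step(self, h: Vector, x: Vector) -> Vector:
        # Update gate z: how much of the state to replace with new content.
        z = [sigmoid(a + b) for a, b in zip(matvec(self.W_z, x), matvec(self.U_z, h))]
        # Reset gate r: how much of the old state feeds into the candidate.
        r = [sigmoid(a + b) for a, b in zip(matvec(self.W_r, x), matvec(self.U_r, h))]
        rh = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(a + b) for a, b in zip(matvec(self.W_h, x), matvec(self.U_h, rh))]
        # Interpolate: z near 0 keeps the old state, z near 1 takes the candidate.
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


cell = GRUCell(input_dim=3, hidden_dim=4)
h = [0.0] * 4
for turn in [[0.1, 0.0, 0.2], [0.3, -0.1, 0.0], [0.5, 0.2, 0.1]]:  # hypothetical turn embeddings
    h = cell.step(h, turn)
print(len(h))  # 4
```

Because each new state is a gated blend of the previous state and a bounded candidate, the network can learn to hold on to early-turn evidence. It does this by keeping `z` small for the relevant state dimensions.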

DeepContext uses Task-Attention Weighted Embeddings to focus intent analysis on the parts of the input that matter most. The technique assigns higher weights to the elements most relevant to jailbreak detection: applicable safety policies and semantically important tokens. With this weighting in place, DeepContext reaches a state-of-the-art F1-score of 0.84 on multi-turn jailbreak attempts. This is a substantial improvement over existing methods at identifying potentially harmful conversational prompts.
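One plausible reading of "task-attention weighting" is attention pooling against a task or policy query vector. Each token is scored by scaled dot-product relevance to the query, the scores are softmax-normalized, and the embeddings are averaged with those weights. The sketch below shows that generic technique under stated assumptions. The function name, the query vector, and the 2-d embeddings are hypothetical, and the paper's exact formulation may differ.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def task_attention_pool(tokens: List[Vector], task_query: Vector) -> Tuple[Vector, List[float]]:
    """Weight each token embedding by its scaled dot-product relevance to a task/policy query."""
    d = len(task_query)
    scores = [sum(t_i * q_i for t_i, q_i in zip(tok, task_query)) / math.sqrt(d) for tok in tokens]
    weights = softmax(scores)
    pooled = [sum(w * tok[j] for w, tok in zip(weights, tokens)) for j in range(d)]
    return pooled, weights


# Hypothetical 2-d embeddings: the second token aligns with the "policy" direction.
tokens = [[0.1, 0.0], [0.0, 2.0], [0.2, 0.1]]
policy_query = [0.0, 1.0]
pooled, weights = task_attention_pool(tokens, policy_query)
print(max(range(len(weights)), key=weights.__getitem__))  # 1
```

The token most aligned with the policy direction receives the largest weight, so it dominates the pooled embedding that a downstream recurrent cell would consume.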

Toward Robust and Adaptive Conversational Security

Traditional defenses against adversarial attacks on conversational systems often treat each interaction in isolation, an approach known as stateless. DeepContext departs from this paradigm by maintaining and interpreting conversational state. This lets it discern subtle shifts in intent and identify attacks that would evade stateless defenses. The stateful architecture reflects the fact that the meaning of a user's utterance depends heavily on the preceding conversation. Rather than reacting to each input on its own, DeepContext follows malicious patterns as they develop over the course of a conversation, a significant step toward robust and reliable conversational AI security.

The growing sophistication of automated adversarial attacks, exemplified by tools like AutoAdv, poses a significant challenge to conversational AI security. These attacks are designed to manipulate dialogue systems gradually, often bypassing traditional defense mechanisms. In the reported evaluations, DeepContext detects such threats quickly, with a mean turns to detection (MTTD) of 4.24. In other words, it flags a malicious conversation on average a little after the fourth turn. This MTTD is lower than that of the competing defenses evaluated, meaning attacks are caught earlier in the dialogue.
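MTTD is an average over the turn at which each adversarial conversation is first flagged. The sketch below shows that arithmetic under one assumption: conversations the detector never flags are excluded and would be reported separately, for example through recall. The paper's exact convention for missed attacks is not stated here. The first-flag turns are hypothetical.

```python
from typing import List, Optional


def mean_turns_to_detection(first_flag_turns: List[Optional[int]]) -> Optional[float]:
    """Average 1-based turn of first detection over attack conversations that were caught.

    Missed attacks (None) are excluded; including them would require choosing
    an arbitrary penalty value, so they are better reported as a separate recall figure.
    """
    detected = [t for t in first_flag_turns if t is not None]
    if not detected:
        return None
    return sum(detected) / len(detected)


# Hypothetical first-flag turns for five adversarial conversations; one was missed.
print(mean_turns_to_detection([3, 5, 4, None, 6]))  # 4.5
```

Because misses are dropped, a low MTTD should be read alongside the detector's recall. A defense that flags only the easiest attacks could otherwise look deceptively fast.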

DeepContext combines speed and accuracy, with sub-20 ms latency on an NVIDIA T4 GPU, which is critical for real-time conversational applications. This responsiveness does not come at the cost of detection quality: the same system attains an F1-score of 0.84, exceeding the baseline defenses it was compared against. Ongoing development has two aims. The first is to refine DeepContext's attention mechanisms for a more discerning analysis of conversational nuance. The second is to find more efficient ways to preserve and use the information in the conversational history, for greater efficiency and robustness in future iterations.

The pursuit of robust Large Language Models requires moving beyond simplistic, stateless defenses. DeepContext addresses this gap by acknowledging the temporal dimension of adversarial attacks, treating interaction as a sequence rather than a set of isolated events. This echoes Claude Shannon's framing: "The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point." DeepContext does not attempt perfect, absolute security. Instead, it focuses on tracking evolving user intent across multiple turns, so that its picture of the conversational state stays reliable even under attack. The framework's reliance on recurrent neural networks acknowledges that context, like information, degrades without continuous, stateful processing.

Where Do We Go From Here?

The pursuit of safety in Large Language Models often feels like building increasingly elaborate sandcastles against the tide. This work, by anchoring detection in a model’s own evolving understanding of a conversation – its ‘DeepContext’, as it were – represents a cautious step away from purely reactive defenses. It acknowledges, rightly, that an attack unfolding over multiple turns isn’t a series of isolated events, but a shifting narrative. The improvement over stateless methods isn’t revolutionary, but it suggests a valuable direction.

Yet the problem isn't solved. Recurrence, while elegant, processes turns strictly in sequence, which limits parallelism, and future work must grapple with the practical realities of deploying such models at scale. More importantly, the current approach only detects intent drift; it does not inherently prevent it. A truly robust system would anticipate, and subtly redirect, adversarial lines of questioning before they fully materialize. That task demands a deeper understanding of not just what is being said, but why.

Perhaps the most significant unresolved issue lies in defining ‘intent’ itself. The model tracks a conversational state, but is that state truly representative of a user’s underlying goals? Or is it merely a sophisticated echo of surface-level prompts? The field continues to build layers of complexity upon assumptions, and a period of ruthless simplification – of returning to first principles – may be precisely what is needed.

Original article: https://arxiv.org/pdf/2602.16935.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-21 16:33