Author: Denis Avetisyan

Researchers are exploring symbolic reasoning as a powerful alternative to message passing in graph neural networks, offering improved expressiveness and interpretability.

This review introduces SymGraph, a framework utilizing discrete structural hashing and topological roles to generate self-explainable logical rules for graph learning.

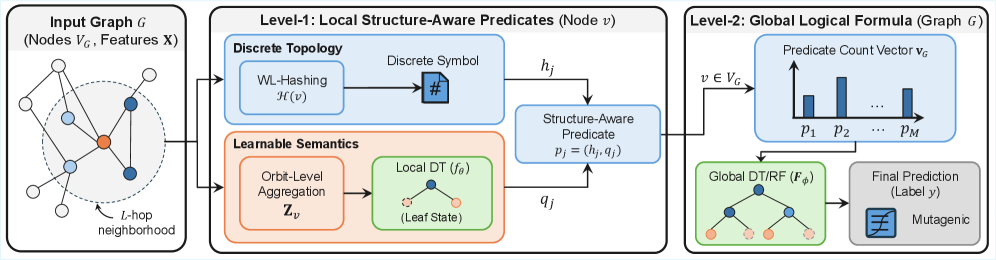

Despite the increasing adoption of Graph Neural Networks (GNNs) in critical domains, their inherent lack of transparency hinders trust and scientific insight. This paper, ‘Beyond Message Passing: A Symbolic Alternative for Expressive and Interpretable Graph Learning’, introduces SymGraph, a novel framework that moves beyond traditional message passing by utilizing discrete structural hashing and topological role-based aggregation to generate more expressive and interpretable logical rules. SymGraph theoretically surpasses the 1-WL expressivity barrier while achieving state-of-the-art performance and significant speedups (up to 100x on CPU) compared to existing self-explainable GNNs. Could this symbolic approach unlock a new era of truly explainable and scientifically useful graph learning?

The Illusion of Understanding: Why Most Graph Networks Remain Black Boxes

Graph Neural Networks (GNNs) have become increasingly proficient at analyzing and drawing conclusions from relational data – information structured as nodes and connections, such as social networks or molecular structures. However, this power often comes at the cost of transparency; GNNs frequently operate as “black boxes,” where the reasoning behind a prediction remains opaque. This lack of interpretability poses significant challenges, particularly in high-stakes applications like healthcare or finance, where understanding why a model arrived at a certain conclusion is crucial for building trust and ensuring accountability. Debugging also becomes markedly difficult, as identifying the source of errors within a complex, non-transparent network is a considerable undertaking, limiting the practical deployment of these otherwise powerful tools.

While techniques like post-hoc explanation methods attempt to illuminate the decisions of Graph Neural Networks, they often fall short of revealing genuine reasoning. These approaches typically highlight which nodes or edges most influenced a prediction – essentially pointing to correlations without explaining why those connections mattered. For instance, a saliency map might indicate a specific node was crucial, but it doesn’t detail the complex interplay of features and message passing that led to that determination. This limitation stems from the fact that post-hoc methods treat the GNN as a black box, analyzing outputs without access to the internal logic developed during training. Consequently, users are left with surface-level explanations that can be misleading or insufficient for debugging, hindering trust and responsible deployment of these increasingly complex models.

Graph Neural Networks, while powerful in their ability to analyze relationships within data, often operate as complex ‘black boxes’. This opacity stems from their fundamental architecture, which typically involves Message Passing Neural Networks that iteratively aggregate information from neighboring nodes. The process relies heavily on two key structures: the Adjacency Matrix, defining the connections between nodes, and the Node Feature Matrix, representing individual node characteristics. As information propagates and transforms through multiple layers of these interconnected matrices and non-linear activations, the initial influence of specific nodes or features becomes increasingly difficult to trace. Consequently, understanding why a GNN arrived at a particular prediction, which means disentangling the contributions of individual nodes and features, presents a significant challenge, hindering both trust in the model and effective debugging of its outputs.
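To make the tracing problem concrete, here is a minimal sketch of a single message passing round in plain Python. It assumes sum aggregation with a self-contribution and a ReLU, and it omits the learned weight matrices a real GNN layer would have; the function name and the toy path graph are illustrative only.

```python
def message_passing_layer(adjacency, features):
    """One round of sum aggregation: each node adds its neighbors' features to its own."""
    n = len(adjacency)
    dim = len(features[0])
    updated = []
    for v in range(n):
        agg = list(features[v])  # start from the node's own features
        for u in range(n):
            if adjacency[v][u]:  # u is a neighbor of v
                for d in range(dim):
                    agg[d] += features[u][d]
        # ReLU non-linearity (no learned weights in this simplified sketch)
        updated.append([max(0.0, x) for x in agg])
    return updated


# Path graph 0 - 1 - 2, described by an adjacency matrix and a node feature matrix.
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
X = [[1.0, 0.0],
     [0.0, 1.0],
     [0.0, 0.0]]

H1 = message_passing_layer(A, X)
H2 = message_passing_layer(A, H1)
print(H2[2])  # [1.0, 2.0]
```

Node 2 starts with all-zero features, yet after two rounds its first component is 1.0, inherited from node 0 two hops away. Even in this three-node toy, the output alone no longer says which node contributed what; with learned weights and many layers the attribution problem only gets harder.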

SymGraph: Rebuilding the Foundation with Logic

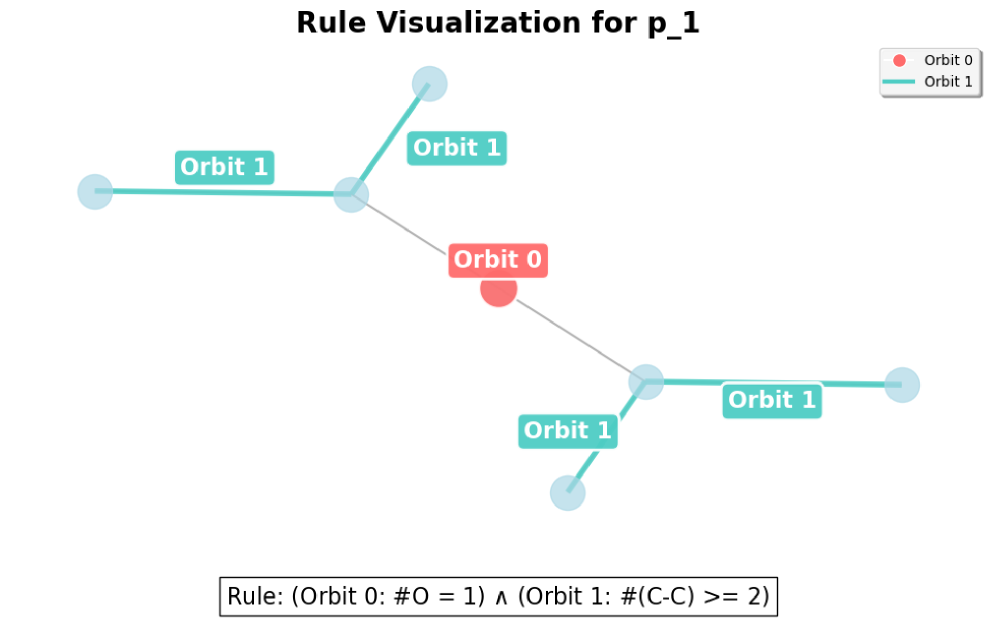

SymGraph distinguishes itself from conventional Graph Neural Networks (GNNs) by employing a Logical Rule-Based Model as its core architecture. Traditional GNNs output numerical predictions based on learned feature representations; in contrast, SymGraph generates predictions derived from explicit logical rules. This shift from numerical outputs to symbolic reasoning allows for direct interpretation of the model’s decision-making process. The framework represents knowledge and relationships within the graph as logical statements, enabling the model to justify its predictions with human-understandable rules rather than opaque numerical values. This approach fundamentally alters the nature of model output, prioritizing explainability and transparency over solely predictive accuracy.

SymGraph leverages Graded Modal Logic (GML) and Quantitative Disjunctive Normal Form (QDNF) to formally represent graph structures and decision boundaries. In its standard form, GML extends modal logic with counting modalities, so a formula can state that at least $k$ neighbors of a node satisfy some property of graph connectivity or node attributes. QDNF extends traditional disjunctive normal form by attaching weights to its clauses. Each clause is a conjunction of atomic formulas that describes a specific path or subgraph pattern, and its weight quantifies that pattern’s relevance to the decision. These formulas define decision boundaries as weighted combinations of graph features, giving a transparent and logically interpretable model representation. A QDNF formula takes the form $\sum_{i=1}^{n} w_i \cdot \bigwedge_{j=1}^{m} \phi_{ij}$, where the $w_i$ are weights and the $\phi_{ij}$ are atomic formulas.
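A small sketch may help show how the QDNF expression above is evaluated: each clause is a conjunction of atomic formulas, and the score is the sum of the weights of the clauses that hold. The atom names (`has_ring`, `has_nitro`, `has_hydroxyl`) and the weights are invented for illustration and are not taken from the paper.

```python
def evaluate_qdnf(clauses, facts):
    """Evaluate sum_i w_i * AND_j phi_ij.

    clauses: list of (weight, [atom names]) pairs, one per conjunction
    facts:   dict mapping atom name -> bool (missing atoms count as False)
    Returns the total score and the list of clauses that fired.
    """
    score = 0.0
    fired = []
    for weight, atoms in clauses:
        if all(facts.get(atom, False) for atom in atoms):
            score += weight
            fired.append(atoms)
    return score, fired


# Hypothetical rule set over hypothetical atoms.
clauses = [
    (2.0, ["has_ring", "has_nitro"]),
    (1.0, ["has_ring"]),
    (-1.5, ["has_hydroxyl"]),
]
facts = {"has_ring": True, "has_nitro": True, "has_hydroxyl": False}

score, fired = evaluate_qdnf(clauses, facts)
print(score)  # 3.0
print(fired)  # [['has_ring', 'has_nitro'], ['has_ring']]
```

The list of fired clauses doubles as the explanation: it names exactly which patterns contributed to the score and by how much.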

Traditional Graph Neural Networks (GNNs) typically output numerical predictions without inherent explanations of the reasoning process. In contrast, the SymGraph framework constructs models capable of generating human-readable explanations for their predictions. This is achieved by representing the decision-making process as a series of logical rules derived from the graph structure and node features. These rules, based on Graded Modal Logic and Quantitative Disjunctive Normal Form, articulate the conditions under which a specific prediction is made, providing a transparent and interpretable rationale. Consequently, users can directly inspect the logical basis for each prediction, facilitating trust and enabling debugging of the model’s behavior – a capability largely absent in conventional GNN architectures.

Encoding Graph Structure with Symbolic Precision: It’s About What You Represent

SymGraph encodes graph topology through the use of Structural Predicates, which define relationships between nodes based on their immediate neighborhood – for example, identifying nodes that are central to a densely connected subgraph or those positioned on graph boundaries. These predicates are then used to generate Discrete Structural Hashes, unique identifiers assigned to each node based on its structural role. The hashing process transforms the topological information into a symbolic representation, effectively converting graph features into logical rules suitable for machine learning. This allows the model to represent and reason about graph structure without relying on continuous vector embeddings, offering a more interpretable and precise encoding of topological characteristics.
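As a rough illustration of the idea, the sketch below turns a few neighborhood predicates into a discrete hash per node. The three predicates used here (degree, sorted neighbor degrees, triangles through the node) are assumptions chosen for simplicity, and the function names are hypothetical; the paper's actual predicates and hashing scheme may differ.

```python
import hashlib


def structural_signature(graph, node):
    """Describe a node's immediate neighborhood as a canonical string."""
    neighbors = graph[node]
    degree = len(neighbors)
    neighbor_degrees = sorted(len(graph[u]) for u in neighbors)
    # Each connected pair of neighbors closes one triangle through `node`.
    triangles = sum(
        1 for u in neighbors for w in neighbors if u < w and w in graph[u]
    )
    return f"deg={degree}|nbr={neighbor_degrees}|tri={triangles}"


def structural_hash(graph, node):
    """Map the signature to a short discrete identifier."""
    signature = structural_signature(graph, node)
    return hashlib.sha1(signature.encode("utf-8")).hexdigest()[:8]


# A triangle (0, 1, 2) with a tail node 3 attached to node 2.
graph = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}

signatures = {v: structural_signature(graph, v) for v in graph}
hashes = {v: structural_hash(graph, v) for v in graph}
for v in sorted(graph):
    print(v, signatures[v], hashes[v])
```

Nodes 0 and 1 play the same structural role, so they receive the same hash, while nodes 2 and 3 each get their own signature. Because the identifiers are discrete symbols rather than continuous vectors, they can be used directly as atoms in logical rules.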

Topological Role-Based Aggregation within the SymGraph framework operates by categorizing nodes based on their immediate topological environment – specifically, their degree, centrality, and immediate neighbors – to establish distinct ‘roles’ within the graph. Instead of treating all neighboring node features equally, aggregation functions are applied selectively, weighted by the structural similarity of the source node to each neighbor’s identified role. This process ensures that information is propagated more effectively along structurally relevant paths, prioritizing connections that reflect the overall graph topology and enabling the model to capture higher-order relational patterns beyond simple adjacency. The result is a feature representation for each node that is informed not just by its local connections, but by the broader structural context of its position within the graph.
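One way to picture role-based aggregation is to bucket a node's neighbors by role before summarizing them. The sketch below is a deliberate simplification: roles are assigned from degree alone, the role names (`leaf`, `chain`, `hub`) are hypothetical, and neighbors are counted per role rather than weighted by structural similarity as the description above suggests.

```python
from collections import Counter


def assign_role(graph, node):
    """Assign a coarse, degree-based role (a stand-in for richer role definitions)."""
    degree = len(graph[node])
    if degree <= 1:
        return "leaf"
    if degree >= 3:
        return "hub"
    return "chain"


def aggregate_by_role(graph, labels, node):
    """Count neighbor labels separately for each neighbor role."""
    summary = {}
    for u in sorted(graph[node]):
        role = assign_role(graph, u)
        summary.setdefault(role, Counter())[labels[u]] += 1
    return summary


# Node 0 has three neighbors; neighbor 3 continues on to node 4.
graph = {0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0, 4}, 4: {3}}
labels = {0: "C", 1: "H", 2: "H", 3: "O", 4: "H"}

result = aggregate_by_role(graph, labels, 0)
print(result)
```

For node 0 the summary says "two leaf neighbors labeled H, one chain neighbor labeled O". A plain neighbor sum would merge those into one bag of labels and lose the distinction between terminal and non-terminal neighbors.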

Training of the symbolic models within SymGraph is achieved through a two-stage process leveraging Decision Trees and Combinatorial Evolutionary Search. Initially, Decision Trees are utilized to establish a foundational set of logical rules based on the encoded graph structure. Subsequently, Combinatorial Evolutionary Search refines these rules by exploring different combinations of structural predicates and parameters, optimizing for both predictive accuracy and model interpretability. This search algorithm efficiently navigates the combinatorial space of possible rules, avoiding exhaustive evaluation through selective mutation and crossover operations. The resulting models exhibit high performance on graph-related tasks while maintaining a transparent and readily understandable rule-based structure, facilitating analysis and verification of the learned relationships.
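The two-stage idea can be sketched on toy data. In this illustration a depth-1 decision stump stands in for the decision tree stage, and a very small evolutionary loop with mutation and crossover refines the rule. The predicates, data, fitness function, and hyperparameters are all invented for the example and should not be read as the paper's procedure.

```python
import random

# Hypothetical structural predicates and toy labeled examples.
PREDICATES = ["in_ring", "has_nitro", "degree_ge_3", "has_halogen"]
DATA = [
    ({"in_ring", "has_nitro"}, 1),
    ({"in_ring", "has_nitro", "degree_ge_3"}, 1),
    ({"in_ring"}, 0),
    ({"has_nitro"}, 0),
    ({"degree_ge_3", "has_halogen"}, 0),
    ({"in_ring", "has_nitro", "has_halogen"}, 1),
]


def predict(rule, facts):
    """A rule is a conjunction: it fires only if every predicate holds."""
    return int(rule <= facts)


def fitness(rule):
    """Accuracy minus a small length penalty, to favor short readable rules."""
    correct = sum(predict(rule, facts) == label for facts, label in DATA)
    return correct / len(DATA) - 0.01 * len(rule)


def best_stump():
    """Stage 1 stand-in: pick the single most predictive predicate."""
    return max((frozenset([p]) for p in PREDICATES), key=fitness)


def mutate(rule, rng):
    """Toggle one randomly chosen predicate in or out of the rule."""
    return rule ^ {rng.choice(PREDICATES)}


def crossover(a, b, rng):
    """Keep each predicate from either parent with probability 0.5."""
    return frozenset(p for p in a | b if rng.random() < 0.5)


def evolve(generations=30, population_size=8, seed=0):
    """Stage 2: refine the stump; the top half survives each generation."""
    rng = random.Random(seed)
    start = best_stump()
    population = [start] + [mutate(start, rng) for _ in range(population_size - 1)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: population_size // 2]
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(sorted(best), round(fitness(best), 3))
```

On this toy data no single predicate classifies every example correctly, while the conjunction of `in_ring` and `has_nitro` does, so the search has something to find beyond the stump. Because the best rules always survive into the next generation, the final rule is never worse than the starting stump, and the search only evaluates a handful of candidates per generation instead of every subset of predicates.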

Beyond Interpretability: Extending and Enhancing GNN Capabilities

SymGraph distinguishes itself not merely as an interpretable framework, but as a powerful augmentation to existing Graph Neural Network (GNN) architectures. Rather than requiring a complete overhaul of established models, SymGraph seamlessly integrates, effectively boosting their performance and analytical capabilities. This approach allows researchers and practitioners to leverage their current GNN investments while unlocking deeper insights into graph data. By providing a readily adaptable layer of symbolic reasoning, SymGraph enhances a GNN’s ability to discern complex patterns and relationships, ultimately leading to more accurate predictions and a more comprehensive understanding of the underlying graph structure – a crucial advantage in fields demanding both predictive power and explainability.

SymGraph’s adaptability is significantly broadened through its support of SMARTS patterns, a powerful notation for representing substructures within molecules. This capability makes the framework particularly well-suited for applications in cheminformatics and drug discovery, where identifying specific chemical motifs is crucial. By enabling the incorporation of domain expertise directly into the graph representation, SymGraph can efficiently analyze and predict properties related to molecular structure and activity. The use of SMARTS patterns allows researchers to move beyond simple node and edge features, capturing more complex relationships and enhancing the model’s ability to generalize across diverse chemical datasets, ultimately accelerating the pace of materials and pharmaceutical innovation.

SymGraph represents a significant advancement in graph neural network efficiency and performance, achieving substantial speedups in training – from 10x to 100x – through the integration of LogiX-GIN principles, notably while utilizing CPU-only execution. This acceleration doesn’t come at the cost of accuracy; in graph classification tasks, the framework consistently surpasses the performance of current state-of-the-art self-explainable models. Critically, SymGraph addresses limitations inherent in traditional graph isomorphism tests, such as the Weisfeiler-Lehman test, demonstrating an ability to discern more subtle structural differences within graphs and, consequently, improve predictive power. This combination of speed and accuracy positions SymGraph as a powerful tool for analyzing complex datasets and tackling challenging problems in fields like drug discovery and materials science.
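The Weisfeiler-Lehman limitation mentioned above has a classic textbook illustration: 1-WL color refinement cannot tell two disjoint triangles from a single six-node cycle, because every node in both graphs has exactly two neighbors. The sketch below shows that failure and how one extra structural predicate (a triangle count, chosen here purely as an example and not claimed to be SymGraph's mechanism) separates the two graphs.

```python
from collections import Counter


def wl_color_histogram(graph, rounds=3):
    """Run 1-WL color refinement and return the multiset of final colors.

    Colors are kept as nested tuples so they are comparable across graphs.
    """
    colors = {v: 0 for v in graph}
    for _ in range(rounds):
        colors = {
            v: (colors[v], tuple(sorted(colors[u] for u in graph[v])))
            for v in graph
        }
    return Counter(colors.values())


def count_triangles(graph):
    """Count triangles; each one is seen once from each of its three corners."""
    corners = sum(
        1
        for v in graph
        for u in graph[v]
        for w in graph[v]
        if u < w and w in graph[u]
    )
    return corners // 3


two_triangles = {0: {1, 2}, 1: {0, 2}, 2: {0, 1}, 3: {4, 5}, 4: {3, 5}, 5: {3, 4}}
hexagon = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}

same_under_wl = wl_color_histogram(two_triangles) == wl_color_histogram(hexagon)
print(same_under_wl)                   # True
print(count_triangles(two_triangles))  # 2
print(count_triangles(hexagon))        # 0
```

Standard message passing GNNs are bounded by 1-WL in what they can distinguish, which is why adding explicit structural information is one route past that barrier.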

The pursuit of explainable AI, as demonstrated by SymGraph’s focus on logical rules derived from structural hashing, feels less like innovation and more like rediscovering the limitations of any system. It’s a predictable cycle; a framework arrives, promising clarity, then production inevitably exposes edge cases and unforeseen interactions. One suspects the ‘self-explainable’ label is optimistic marketing. Ada Lovelace observed, “The Analytical Engine has no pretensions whatever to originate anything.” This rings true; SymGraph doesn’t create understanding, it merely formalizes existing structural relationships: a sophisticated taxonomy, perhaps, but still just notes left for future digital archaeologists to decipher when the inevitable chaos descends. The ambition to move ‘Beyond Message Passing’ is admirable, but someone should remind them that all models are wrong, some are useful – and all eventually accrue tech debt.

The Road Ahead

SymGraph, like all attempts at ‘self-explainability,’ merely pushes the opacity elsewhere. The framework trades the inscrutability of weights for the complexity of logical rules: a lateral move, at best. Anyone who has managed a rule engine at scale understands that maintaining logical consistency is a Sisyphean task. Documentation, inevitably, becomes collective self-delusion, and the first adversarial attack will expose the brittle assumptions baked into these ‘interpretable’ structures. The claim of topological role-based aggregation being fundamentally more robust than, say, a carefully engineered feature set, feels… optimistic.

The reliance on structural hashing, while elegant, invites further scrutiny. If a bug is reproducible, then it confirms a stable system, which is not a desirable trait in a learning algorithm. The true test will be the framework’s performance on graphs exhibiting subtle, context-dependent relationships – those that don’t neatly decompose into discrete roles. The Weisfeiler-Lehman test, a cornerstone of this approach, is known to be insensitive to certain graph modifications. Expect production systems to discover precisely those blind spots.

Future work will undoubtedly focus on scaling these logical rules and, consequently, scaling the maintenance burden. A more interesting, if less marketable, direction would be a rigorous investigation of the inherent trade-offs between expressiveness, interpretability, and robustness in graph learning. Anything self-healing just hasn’t broken yet.

Original article: https://arxiv.org/pdf/2602.16947.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-21 02:55