Author: Denis Avetisyan

A new reinforcement learning framework is proving adept at challenging long-held beliefs in extremal graph theory and constructing counterexamples to established results.

This paper introduces RLGT, a modular reinforcement learning system for automated conjecture disproving and exploration in extremal graph theory.

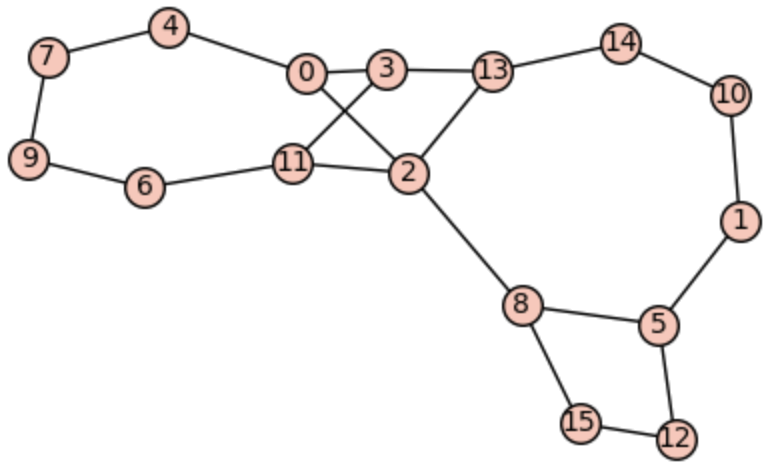

Extremal graph theory is full of long-standing open problems. Building on the pioneering framework introduced by Wagner, recent work has shown that reinforcement learning (RL) can make progress on problems that were previously out of reach. Here, we present ‘RLGT: A reinforcement learning framework for extremal graph theory’, a novel and modular RL environment for systematically exploring graph-theoretic conjectures and inequalities. It supports both undirected and directed graphs with customizable edge coloring and represents graphs efficiently. It has also been used to find counterexamples that refute existing conjectured bounds on graph invariants. Will RLGT accelerate progress in extremal graph theory and help resolve long-standing open problems?

Unveiling the Potential of Graph Structures

Graph theory has long served as a cornerstone for representing and analyzing relationships, from social networks to physical infrastructure. Its practical application to large-scale optimization problems, however, is often hampered by computational limits. The core strength of graph theory lies in modeling interconnectedness, which lets researchers visualize and understand complex systems. Yet finding an optimal solution within such a system requires exploring a solution space that grows exponentially with the size of the graph. Examples include the most efficient delivery route or the most robust communication network. Exact algorithms that work well on small instances fail to scale to the networks typical of modern applications, which motivates new approaches that can navigate these landscapes efficiently and unlock the full potential of graph-based modeling.

Fundamental characteristics of a graph, known as invariants, offer a powerful way to understand its structure and properties. Three examples are the LaplacianSpectralRadius, GraphEnergy, and MatchingNumber:

- The LaplacianSpectralRadius is the largest eigenvalue of the Laplacian matrix L = D - A, where D is the diagonal matrix of vertex degrees and A is the adjacency matrix.
- GraphEnergy is the sum of the absolute values of the eigenvalues of A.
- The MatchingNumber is the size of the largest set of edges no two of which share a vertex.

For a single graph, each of these three can be computed in polynomial time: eigenvalues by standard numerical linear algebra, and maximum matchings by Edmonds' blossom algorithm. Other invariants, such as the independence number and the chromatic number, are NP-hard to compute. The real bottleneck in extremal problems lies elsewhere. To test a conjectured inequality between invariants, one must in principle consider every graph on n vertices, and the number of such graphs grows faster than exponentially in n. This limits exhaustive approaches to small graphs and motivates research into efficient heuristics and learned search strategies.
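For concreteness, the sketch below computes the three invariants for a small graph with NumPy, using their standard definitions. The helper names are illustrative and are not RLGT's API. To keep the code short, the matching number is found by exhaustive branching. In practice, Edmonds' blossom algorithm computes it in polynomial time, for instance via `networkx.max_weight_matching(G, maxcardinality=True)`.

```python
import numpy as np


def adjacency_matrix(n, edges):
    """Symmetric 0/1 adjacency matrix of a simple undirected graph."""
    A = np.zeros((n, n))
    for u, v in edges:
        A[u, v] = A[v, u] = 1.0
    return A


def laplacian_spectral_radius(A):
    """Largest eigenvalue of the Laplacian L = D - A (eigvalsh sorts ascending)."""
    L = np.diag(A.sum(axis=1)) - A
    return float(np.linalg.eigvalsh(L)[-1])


def graph_energy(A):
    """Sum of the absolute values of the adjacency eigenvalues."""
    return float(np.abs(np.linalg.eigvalsh(A)).sum())


def matching_number(edges, used=frozenset()):
    """Size of a maximum matching by exhaustive branching (tiny graphs only)."""
    for i, (u, v) in enumerate(edges):
        if u in used or v in used:
            continue  # edge conflicts with the partial matching; it stays unusable
        rest = edges[i + 1:]
        return max(matching_number(rest, used),               # leave (u, v) out
                   1 + matching_number(rest, used | {u, v}))  # put (u, v) in
    return 0
```

For the path on four vertices, this gives a Laplacian spectral radius of 2 + √2, an energy of 2√5, and a matching number of 2.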

The inherent complexity of graph-based problems arises from the exponential growth of possible solutions as networks scale, presenting a significant hurdle for traditional algorithms. Consider network design; determining the optimal configuration for data transmission or resource allocation requires evaluating an immense number of potential connections and pathways. Current methods often get trapped in local optima or become computationally intractable when faced with even moderately sized graphs. This limitation impedes advancements in diverse fields, from optimizing logistics and supply chains to designing robust communication networks and efficiently managing energy grids. The challenge isn’t simply finding a solution, but discovering the best solution within a staggeringly large, often disconnected, solution space – a task demanding innovative approaches to efficiently navigate and assess the myriad possibilities.
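A back-of-the-envelope count shows how quickly the search space grows. Every pair of vertices either is or is not an edge, and relabeling the vertices can merge at most n! labeled graphs into one isomorphism class:

```latex
% Each of the \binom{n}{2} vertex pairs is either an edge or not:
N_{\text{labeled}}(n) = 2^{\binom{n}{2}}, \qquad
N_{\text{labeled}}(10) = 2^{45} \approx 3.5 \times 10^{13}, \qquad
N_{\text{labeled}}(20) = 2^{190} \approx 1.6 \times 10^{57}.

% An isomorphism class contains at most n! labeled graphs, so
N_{\text{unlabeled}}(n) \ge \frac{2^{\binom{n}{2}}}{n!}, \qquad
N_{\text{unlabeled}}(20) \ge \frac{2^{190}}{20!} \approx 6.5 \times 10^{38}.
```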

Reinforcement Learning: A Dynamic Architect for Graphs

Reinforcement Learning (RL) facilitates the training of agents to interact with and alter graph structures through a trial-and-error process. The agent receives feedback in the form of rewards or penalties based on the consequences of its actions – specifically, how modifications to the graph impact a defined objective. This allows the agent to learn a policy for navigating the graph and strategically modifying its connections, nodes, or attributes. Unlike traditional graph algorithms which operate on predefined logic, RL agents learn directly from experience, adapting their behavior based on the observed outcomes of their actions within the graph environment. This learning paradigm enables the discovery of optimal or near-optimal graph manipulations without explicit programming for each specific task.

A standardized GraphFramework is essential for the effective implementation and evaluation of reinforcement learning (RL) algorithms applied to graph-structured problems. This framework provides a consistent environment for algorithm testing, enabling reproducible results and fair comparisons between different RL approaches. Key components of such a framework include a defined graph representation, a set of permissible graph modification actions, and a mechanism for evaluating the resulting graph structure based on a specified reward function. The framework’s robustness is determined by its ability to handle diverse graph types, scales, and complexities, as well as its efficiency in executing actions and calculating rewards, thereby facilitating rigorous experimentation and accelerating progress in the field of graph-based reinforcement learning.
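One compact graph representation, used in Wagner's original cross-entropy experiments, lists the n(n-1)/2 vertex pairs in a fixed order and stores one bit per pair. The following is a minimal sketch of that encoding, stored here as a Python integer bitmask. The function names are hypothetical, and this is not necessarily how RLGT stores graphs internally.

```python
from itertools import combinations


def pair_index(n):
    """Map each unordered vertex pair (i, j), i < j, to a position 0 .. n(n-1)/2 - 1."""
    return {pair: k for k, pair in enumerate(combinations(range(n), 2))}


def encode(n, edges):
    """Pack an undirected graph into an integer bitmask with one bit per vertex pair."""
    index = pair_index(n)
    mask = 0
    for u, v in edges:
        mask |= 1 << index[(min(u, v), max(u, v))]
    return mask


def decode(n, mask):
    """Recover the edge list, ordered by pair position, from a bitmask."""
    return [pair for k, pair in enumerate(combinations(range(n), 2)) if mask >> k & 1]
```

The same scheme extends naturally to other settings. Directed graphs would need one slot per ordered pair (n(n-1) slots), and an edge coloring with k colors could replace each bit with a base-k digit. Both extensions are assumptions, not a description of RLGT's encoding.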

Using reinforcement learning as a graph architect has produced constructions that conventional search heuristics had not found. Specifically, the approach disproved 25 previously conjectured inequalities in graph theory, replicating results originally reported by Ghebleh et al. and confirming that the RL-driven approach can challenge and settle such conjectures. A conjectured inequality is refuted by a single graph that violates it. Because the agent explores the search space without hand-crafted heuristics, it found counterexamples that had eluded traditional methods, and each one is a definitive disproof that advances the field's understanding of graph properties.

Diverse Environments for Rigorous Graph Intelligence

The LinearBuildEnvironment supports reinforcement learning research into graph construction by presenting agents with a sequential task: building a graph incrementally. Agents begin with an empty graph and, at each step, can add a single edge between two vertices. The environment is defined by an objective function that serves as the reward signal, for example the amount by which the constructed graph violates a conjectured inequality. Agents can therefore learn which edge additions contribute most to the objective. Because the graph grows one edge at a time, exploration is simple and learning can focus on the effect of each incremental connection. This distinguishes it from environments in which the agent manipulates the whole graph at once.
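A minimal sketch of a linear build environment, assuming it follows Wagner's setup:

- Vertex pairs are visited in a fixed order.
- The action at each step decides whether the current pair becomes an edge.
- The reward is paid once the last pair has been decided.

The class name `LinearBuildSketch` and its `reset`/`step` methods are hypothetical and are not RLGT's interface. The `objective` can be any function from an edge list to a number.

```python
from itertools import combinations


class LinearBuildSketch:
    """Hypothetical linear build environment: decide each vertex pair in a fixed order."""

    def __init__(self, n, objective):
        self.pairs = list(combinations(range(n), 2))  # fixed linear order of vertex pairs
        self.objective = objective                     # maps an edge list to a number
        self.reset()

    def reset(self):
        """Start from the empty graph at the first pair."""
        self.position = 0
        self.edges = []
        return (tuple(self.edges), self.position)

    def step(self, action):
        """Action 1 adds the current pair as an edge; action 0 skips it."""
        if action == 1:
            self.edges.append(self.pairs[self.position])
        self.position += 1
        done = self.position == len(self.pairs)
        reward = self.objective(self.edges) if done else 0.0  # terminal reward only
        return (tuple(self.edges), self.position), reward, done
```

With `objective=len` on three vertices, the actions 1, 0, 1 build the path (0, 1), (1, 2) and receive a final reward of 2.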

The GlobalFlipEnvironment instead gives the agent an existing graph and tasks it with improving that graph by selectively flipping edges, that is, toggling whether a pair of vertices is adjacent. Each flip changes the graph's topology and therefore its value under the defined objective. The agent is rewarded according to the performance of the resulting graph, which encourages it to discover high-scoring configurations through repeated edge manipulation.
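The sketch below makes two illustrative assumptions: a flip toggles one vertex pair chosen anywhere in the graph, and the per-step reward is the change in the objective. `GlobalFlipSketch` is a hypothetical name, not RLGT's class.

```python
from itertools import combinations


class GlobalFlipSketch:
    """Hypothetical flip environment: any vertex pair may be toggled at any step."""

    def __init__(self, n, objective, start_edges=()):
        self.pairs = list(combinations(range(n), 2))
        self.objective = objective            # maps a set of edges (i < j) to a number
        self.start = frozenset(start_edges)   # starting graph, pairs given as (i, j), i < j
        self.reset()

    def reset(self):
        """Restore the starting graph and its objective value."""
        self.edges = set(self.start)
        self.score = self.objective(self.edges)
        return frozenset(self.edges)

    def step(self, action):
        """Toggle the pair at index `action`: remove the edge if present, add it otherwise."""
        pair = self.pairs[action]
        self.edges ^= {pair}                  # symmetric difference toggles the pair
        new_score = self.objective(self.edges)
        reward = new_score - self.score       # reward the improvement in the objective
        self.score = new_score
        return frozenset(self.edges), reward, False
```

The episode never ends on its own here (`done` is always `False`), so a caller would cap the number of flips. With the change in objective as the reward, the total reward over an episode equals the final objective minus the initial one.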

The LocalSetEnvironment evaluates an agent's ability to make efficient, localized modifications to a graph. At each time step, the agent may alter only a limited subset of edges rather than the whole graph, a setting reminiscent of networks in which only a few links can change at once. It is one of nine distinct reinforcement learning environments in the framework, which differ in how the agent interacts with the graph and how rewards are assigned. This variety makes it possible to test how well trained agents adapt and generalize across different formulations of a problem.

Validating Policies and Disproving Conjecture

Proximal Policy Optimization (PPO) and REINFORCE are both policy gradient algorithms that train agents through trial and error. Each iteratively refines an agent's policy, the strategy it uses to choose actions, by estimating the gradient of the expected reward with respect to the policy's parameters.

- REINFORCE is a Monte Carlo method. It estimates the gradient from complete sampled episodes, weighting the log-probability of each action by the return that followed it, so it can update the policy only after an episode ends.
- PPO improves on standard policy gradient methods with a clipped surrogate objective. This objective limits how far the probability ratio between the new and old policies can move in a single update, preventing excessively large changes that could destabilize learning.

In this work, both algorithms were used to maximize the cumulative reward obtained from interacting with the defined environments, steering agent behavior toward effective strategies for graph manipulation and conjecture disproof.
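The quantities behind the two algorithms are short to write down. The NumPy sketch below computes two of them:

- The REINFORCE surrogate loss, from per-step log-probabilities and rewards.
- The PPO clipped surrogate, from probability ratios and advantage estimates.

It only evaluates these objectives. An actual training loop would compute them inside an automatic-differentiation framework so that gradients flow back into the policy network. The function names and the default clip range eps = 0.2 are illustrative choices.

```python
import numpy as np


def reward_to_go(rewards, gamma=1.0):
    """G_t = r_t + gamma * G_{t+1}: the (discounted) return from step t to the episode end."""
    returns = np.zeros(len(rewards))
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def reinforce_loss(log_probs, rewards, gamma=1.0):
    """Monte Carlo policy-gradient surrogate; minimizing it ascends the expected return."""
    return -float(np.sum(np.asarray(log_probs) * reward_to_go(rewards, gamma)))


def ppo_clip_objective(ratio, advantage, eps=0.2):
    """PPO clipped surrogate: mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A), maximized."""
    ratio = np.asarray(ratio, dtype=float)
    advantage = np.asarray(advantage, dtype=float)
    clipped = np.clip(ratio, 1 - eps, 1 + eps) * advantage
    return float(np.mean(np.minimum(ratio * advantage, clipped)))
```

For example, with advantage 1, a ratio of 1.5 contributes only 1.2 to the PPO objective. The update therefore gains nothing from pushing that action's probability further in a single step.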

DeepCrossEntropy, the deep cross-entropy method used in Wagner's original work, trains a neural-network policy in a simple loop. The agent generates many graphs and keeps only the top-scoring fraction, the elite sessions. It then updates the network by minimizing the cross-entropy loss between its action probabilities and the actions taken in those elite sessions. A deep network can represent more complex construction strategies than a fixed table of probabilities, so the agent can explore a wider range of graph manipulations. In the reported comparisons, agents using DeepCrossEntropy consistently outperformed those using the alternative methods at disproving conjectures. This indicates a significant advantage in learning effective graph optimization strategies and generating valid counterexamples.
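The loop below is a minimal sketch of the cross-entropy method in its simplest, non-deep form. Each bit of a 0/1 vector (for instance, one bit per vertex pair) is sampled independently, the top-scoring samples are kept, and the probabilities are refit to them. Refitting a vector of independent Bernoulli probabilities to the elites by minimizing cross-entropy gives exactly their per-bit mean, which is what the update uses, with smoothing. The deep variant replaces the probability vector with a neural network that reads the partial construction and predicts the next bit. That substitution, and all names and default parameters here, are assumptions for illustration.

```python
import numpy as np


def cross_entropy_search(score, n_bits, iterations=30, samples=200,
                         elite_frac=0.1, smoothing=0.7, seed=0):
    """Plain cross-entropy method over 0/1 vectors such as edge indicators."""
    rng = np.random.default_rng(seed)
    p = np.full(n_bits, 0.5)                       # independent Bernoulli probability per bit
    n_elite = max(1, int(samples * elite_frac))
    best_x, best_score = None, -np.inf
    for _ in range(iterations):
        X = (rng.random((samples, n_bits)) < p).astype(int)
        scores = np.array([score(x) for x in X])
        elite = X[np.argsort(scores)[-n_elite:]]   # highest-scoring samples
        # The per-bit elite mean is the cross-entropy (maximum-likelihood) fit to the elites.
        p = smoothing * elite.mean(axis=0) + (1 - smoothing) * p
        p = np.clip(p, 0.01, 0.99)                 # keep some exploration on every bit
        top = int(np.argmax(scores))
        if scores[top] > best_score:
            best_x, best_score = X[top].copy(), float(scores[top])
    return best_x, best_score
```

On a trivial objective such as the number of ones (edges), the search quickly finds the all-ones vector. In conjecture disproving, the score would instead measure how strongly a graph violates the inequality.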

The policy gradient methods PPO and REINFORCE, alongside DeepCrossEntropy, produced agents capable of autonomously manipulating graph structures to disprove mathematical conjectures. These agents learned to construct new graphs, modify existing ones, and optimize their configurations based on reward signals. The result was automated generation of counterexamples, specific graphs that violate a given conjecture, which were then validated to confirm their correctness. Driven by the learned graph manipulation strategies, this automated generation and validation process led to the disproof of multiple previously open conjectures.
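The core of counterexample validation is simple: a conjectured inequality lhs(G) ≤ rhs(G) is refuted by any single graph with lhs(G) > rhs(G). The sketch below checks every graph on n vertices against a deliberately false toy inequality, μ(G) ≤ Δ(G) + 1, comparing the largest Laplacian eigenvalue with the maximum degree plus one. This toy inequality is not one of the conjectures from the paper. It is known that μ(G) ≥ Δ(G) + 1 whenever G has an edge, so the toy inequality holds only in cases of equality. The `tol` margin matters here. Equality cases such as stars and triangles can evaluate to tiny positive gaps in floating point, and without a margin they would be reported as spurious counterexamples. Exhaustive search is feasible only for tiny n, which is why learned search is needed at larger sizes. All names are hypothetical.

```python
from itertools import combinations

import numpy as np


def laplacian_radius_of(n, edges):
    """Largest eigenvalue of L = D - A for a simple undirected graph."""
    A = np.zeros((n, n))
    for u, v in edges:
        A[u, v] = A[v, u] = 1.0
    return float(np.linalg.eigvalsh(np.diag(A.sum(axis=1)) - A)[-1])


def max_degree_plus_one(n, edges):
    """Right-hand side of the toy inequality: maximum degree plus one."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return max(deg) + 1


def find_counterexample(n, lhs, rhs, tol=1e-9):
    """Check every labeled graph on n vertices; return the first with lhs > rhs + tol."""
    pairs = list(combinations(range(n), 2))
    for mask in range(1 << len(pairs)):
        edges = [p for k, p in enumerate(pairs) if mask >> k & 1]
        gap = lhs(n, edges) - rhs(n, edges)
        if gap > tol:  # the margin keeps round-off at equality cases from counting
            return edges, gap
    return None
```

On four vertices, the first violation found is the path 3-0-1-2, where μ = 2 + √2 exceeds Δ + 1 = 3 by √2 - 1. On three vertices there is none.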

Towards a Future Shaped by Graph-Based Intelligence

The reliability of graph-based intelligence systems hinges on rigorously verifying the structures they produce, and computer algebra systems such as SageMath are essential in this process. A counterexample found with floating-point rewards can be rechecked in SageMath using exact arithmetic, for example by working with characteristic polynomials and algebraic numbers instead of approximate eigenvalues. This turns an empirical observation into a verified computation and catches spurious violations caused by round-off or implementation errors. SageMath also supports graph isomorphism testing, which determines whether two graphs are structurally identical and so allows duplicate counterexamples to be discarded. It can also compute topological invariants such as Betti numbers over \mathbb{Z}_2 and \mathbb{Z}_3, giving further insight into the structure of the graphs found and making the overall system more trustworthy.
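Isomorphism testing is what lets duplicate counterexamples be recognized. SageMath provides this directly through `Graph.is_isomorphic` and `Graph.canonical_label`. The dependency-free sketch below instead uses a brute-force canonical form, the lexicographically smallest edge list over all relabelings, which is practical only for very small graphs. It also computes the Betti numbers of a graph regarded as a one-dimensional complex. Here b0 is the number of connected components, and b1 = m - n + b0 is the number of independent cycles. Because the homology of a graph has no torsion, these values are the same over \mathbb{Z}_2, \mathbb{Z}_3, or any other field. Function names are hypothetical.

```python
from itertools import permutations


def canonical_form(n, edges):
    """Lexicographically smallest sorted edge list over all n! relabelings."""
    best = None
    for perm in permutations(range(n)):
        relabeled = sorted(tuple(sorted((perm[u], perm[v]))) for u, v in edges)
        if best is None or relabeled < best:
            best = relabeled
    return tuple(best)


def is_isomorphic(n, edges_a, edges_b):
    """Two graphs on n vertices are isomorphic iff their canonical forms agree."""
    return canonical_form(n, edges_a) == canonical_form(n, edges_b)


def betti_numbers(n, edges):
    """(b0, b1) of a graph: components and independent cycles (same over every field)."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the union-find trees shallow
            x = parent[x]
        return x

    components = n
    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            components -= 1
    return components, len(edges) - n + components
```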

Evaluating graph-based intelligent agents and the graphs they generate requires robust, quantifiable metrics, and graph invariants offer a powerful solution. Invariants are properties preserved under isomorphism, so they do not depend on how the vertices are labeled, which makes them a standardized means of assessment. One such invariant is the MostarIndex, defined as Mo(G) = \sum_{uv \in E(G)} |n_u - n_v|. Here, for an edge uv, n_u is the number of vertices strictly closer to u than to v, and n_v is the number strictly closer to v than to u. The index measures how far a graph is from being distance-balanced. Mo(G) = 0 exactly when n_u = n_v for every edge, as in complete graphs and cycles, and it grows as the graph becomes less balanced; the star on four vertices, for example, has Mo = 6. Invariants of this kind define the quantities in the conjectures being tested. Tracking them during graph construction or modification therefore shows how an agent's search is progressing and supports comparisons between agents and environments.
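A minimal sketch of the standard Mostar index, assuming a connected simple graph given as an edge list. Breadth-first search from every vertex gives all distances, and for each edge uv the code counts the vertices strictly closer to u and those strictly closer to v. The function names are hypothetical. The cost is O(n(n + m)) for the distances plus O(mn) for the counts, which is fine for the graph sizes typical in extremal search.

```python
from collections import deque


def bfs_distances(adj, source):
    """Shortest-path distances from `source` in an unweighted graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        x = queue.popleft()
        for y in adj[x]:
            if y not in dist:
                dist[y] = dist[x] + 1
                queue.append(y)
    return dist


def mostar_index(n, edges):
    """Mo(G) = sum over edges uv of |n_u - n_v|; assumes G is connected."""
    adj = {x: [] for x in range(n)}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    dist = [bfs_distances(adj, x) for x in range(n)]
    total = 0
    for u, v in edges:
        n_u = sum(1 for w in range(n) if dist[u][w] < dist[v][w])  # strictly closer to u
        n_v = sum(1 for w in range(n) if dist[v][w] < dist[u][w])  # strictly closer to v
        total += abs(n_u - n_v)
    return total
```

The path on four vertices gives 4 and the star on four vertices gives 6. The 4-cycle and the triangle are distance-balanced and give 0.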

The principles of graph-based intelligence may also extend beyond theoretical mathematics. Network design could benefit from tools that model and optimize connections more efficiently, leading to more resilient and adaptable infrastructure. Resource allocation, often a complex logistical challenge, could gain a new way to determine good distributions based on network topology and relationships. More broadly, learned search over graph structures offers a possible approach to understanding and optimizing complex systems, from biological networks and social structures to supply chains and financial markets. It could do so by exposing patterns and dependencies that sheer scale and intricacy otherwise obscure. Such capabilities could support predictive modeling and informed intervention on pressing practical problems.

The presented reinforcement learning framework, RLGT, takes a systemic approach to problem-solving in extremal graph theory. It is not built around a single conjecture. Instead, it provides environments and agents that can be pointed at many different inequalities, learning through search which kinds of graphs push a given combination of invariants to its extremes. This perspective echoes Marvin Minsky’s assertion: “The more we understand about how the brain works, the more we realize how much it doesn’t work the way we thought it did.” By iteratively probing the search space and adapting its strategies, RLGT shows that even long-standing conjectured bounds can fail. The framework’s modularity, a key design principle, allows targeted changes to individual components and makes it clearer how such changes propagate through the system. This reveals how graph invariants interact and what that implies for whether a conjecture holds.

Beyond the Edge: Future Directions

RLGT's demonstrated ability to navigate the landscape of extremal graph theory, and to disprove long-standing conjectures, signals a fundamental shift. The main limitation is not computational power but the clarity of the questions posed. Current approaches still rely largely on brute-force exploration guided by heuristics; true scalability lies in distilling the essence of graph structure into learnable invariants. The framework’s modularity offers a path toward this refinement, allowing targeted improvements to reward functions and state representations without disrupting the core architecture.

A pressing challenge is expanding the range of graph invariants the framework can use. A relatively small set of features is effective, but it limits the framework's ability to address more nuanced conjectures. Drawing further on algebraic graph theory and topology, for example richer spectral information or cohomology, could give the agent a richer vocabulary. That would help it discern subtle patterns and construct more sophisticated counterexamples. This is not merely a technical hurdle but a philosophical one: can mathematical intuition be encoded into a reinforcement learning agent?

Ultimately, the success of RLGT, or systems like it, will be measured not by the conjectures it disproves, but by the new questions it enables. The pursuit of extremal graph theory is, at its core, a search for underlying order. A truly scalable system will not simply find exceptions to the rule, but illuminate the rule itself, revealing the elegant simplicity that governs even the most complex networks.

Original article: https://arxiv.org/pdf/2602.17276.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-20 13:27