Author: Denis Avetisyan

New research reveals a disconnect between the development of automated tools to identify deceptive online designs and the practical requirements of regulatory enforcement.

This review examines the perspectives of regulatory practitioners on the challenges and opportunities of using AI-based tools to detect dark patterns and ensure compliance with legal standards.

Despite growing regulatory scrutiny of manipulative user interface designs – often termed ‘dark patterns’ – enforcement struggles to keep pace with their proliferation. This research, framed by the evocative title ‘”What I’m Interested in is Something that Violates the Law”: Regulatory Practitioner Views on Automated Detection of Deceptive Design Patterns’, investigates the chasm between academic development of automated detection tools and the practical needs of those tasked with upholding digital consumer protection. Interviews with regulatory practitioners reveal that while computing technologies hold promise, current tools fall short by failing to provide the transparency, accountability, and legal grounding required for effective enforcement. How can research better align with the evidentiary standards and workflow demands of regulatory bodies to translate detection capabilities into demonstrable legal outcomes?

The Erosion of Autonomy: Dark Patterns in the Digital Realm

The digital landscape is now heavily populated with ‘dark patterns’ – user interface designs intentionally crafted to trick individuals into making choices they likely wouldn’t otherwise. These aren’t simple usability issues; rather, they represent a deliberate exploitation of human psychology and cognitive biases. Tactics range from disguised advertisements and forced continuity – where free trials automatically convert to paid subscriptions – to confirmshaming, which guilt-trips users into opting into unwanted services. While seemingly minor individually, the cumulative effect of these manipulative designs erodes user autonomy and trust, raising significant ethical concerns about the increasingly persuasive nature of online interactions. The prevalence of dark patterns demonstrates a shift in design philosophy, prioritizing business goals over genuine user experience and informed consent.

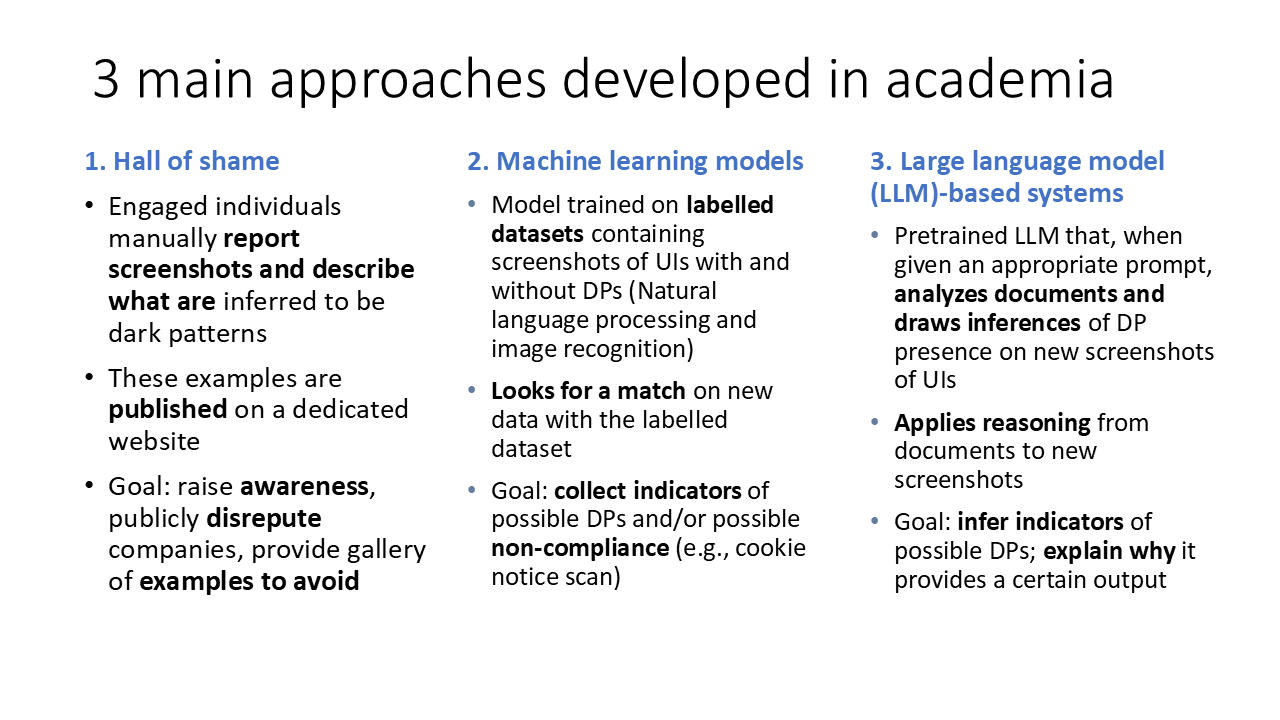

The evaluation of online interfaces for manipulative designs has historically relied on human experts painstakingly reviewing websites and applications. However, this manual approach suffers from inherent limitations; the process is exceptionally time-consuming, making it impossible to keep up with the constant emergence of new dark patterns. Moreover, subjective interpretation introduces inconsistency – what one reviewer flags as deceptive, another might not – and the sheer volume of interfaces launching daily overwhelms the capacity of even large teams. This reactive strategy perpetually lags behind those employing these tactics, creating a significant vulnerability for users as interfaces are routinely deployed before potentially harmful designs can be identified and addressed.

The sheer volume of online interfaces and the accelerating development of manipulative design tactics render traditional, manual detection of dark patterns impractical. Protecting users effectively demands automated solutions capable of analyzing interfaces at scale. These systems leverage techniques like machine learning to identify deceptive elements – from disguised advertisements to forced continuity subscriptions – far faster and more consistently than human review allows. Such automated detection isn’t simply about flagging problematic designs; it’s about proactively safeguarding potentially millions of users from exploitation and fostering a more trustworthy online environment. The development of robust and adaptable detection methods represents a crucial step in shifting the balance of power between platform designers and those they seek to influence.

Automated Interface Analysis: A Systematic Approach

Automated Detection of Design Pattern (DP) violations utilizes computational tools – specifically algorithms and scripts – to perform systematic analysis of user interface (UI) elements. This process involves parsing the HTML, CSS, and JavaScript code of a given interface to identify instances of elements such as buttons, forms, links, and input fields. These elements are then assessed against predefined rules representing established design patterns and accessibility guidelines. The automation extends to analyzing the relationships between UI components – their hierarchy, attributes, and behaviors – enabling large-scale identification of potential violations that would be impractical to achieve through manual inspection. The output of this automated process is a report detailing the location and nature of detected violations, facilitating targeted remediation efforts.

Interface Analysis, as applied to automated detection, involves the systematic examination of user interface (UI) elements and their arrangement to identify deviations from established design patterns and accessibility guidelines. This scrutiny focuses on attributes such as element types, hierarchical relationships, and attribute values – including color contrast, text alternatives, and keyboard navigation support – to determine if violations exist. The process relies on a pre-defined catalog of expected patterns and rules, against which observed UI characteristics are compared; discrepancies flag potential accessibility or usability issues. Automated tools utilize algorithms to perform this analysis at scale, processing numerous UI components and their properties to pinpoint violations that might otherwise require manual review.

Automated detection of design pattern (DP) violations requires comprehensive data acquisition from target websites and user interfaces. This data collection involves extracting information regarding element attributes – including size, position, color, and labeling – as well as the hierarchical relationships between these elements. The resulting datasets are then processed to identify instances of specific DP implementations and deviations from established guidelines. Data sources commonly include DOM structures, CSS stylesheets, and visual renderings, often obtained through web scraping or API access. The volume and variety of collected data directly influence the accuracy and scalability of the automated analysis process, necessitating robust data handling and preprocessing techniques.

Decoding Deception: Machine Learning and Linguistic Analysis

Machine Learning (ML) algorithms demonstrate efficacy in identifying dark patterns through the analysis of user interface (UI) elements and user behavior. These algorithms are trained on datasets of known dark patterns – manipulative interface designs that trick users into making choices they wouldn’t otherwise make – and learn to recognize associated features such as visual prominence of certain buttons, the use of deceptive language, and timing mechanisms designed to create a sense of urgency. The algorithms identify these patterns by quantifying characteristics like color contrast, font size, placement on the screen, and the frequency of specific wording. Effectiveness is measured by precision and recall rates, indicating the algorithm’s ability to correctly identify dark patterns while minimizing false positives. Current research focuses on improving the ability of these algorithms to generalize across different website designs and to detect novel dark patterns not present in the training data.

Large Language Models (LLMs) enhance the automated detection of dark patterns by processing the textual elements of user interfaces. These models analyze phrasing, sentence structure, and word choice to identify manipulative language commonly used in deceptive designs. Unlike rule-based systems that rely on predefined keywords, LLMs utilize contextual understanding to recognize subtle cues, such as ambiguous wording, emotionally charged language, or the strategic omission of information. This analysis extends beyond simple keyword spotting to assess the overall persuasive intent of the text, enabling the identification of dark patterns that might otherwise evade detection. LLMs are trained on vast datasets of text and user interface examples, allowing them to learn and generalize patterns associated with deceptive practices.

Cookie consent scanning represents a specialized application of automated dark pattern detection, specifically analyzing the design and language used within cookie consent interfaces. These scans evaluate elements such as pre-checked boxes, the prominence of “accept all” versus “reject all” options, and the complexity of language used to discourage opt-out choices. The process involves algorithms trained to identify manipulative UI/UX patterns common in these interactions, quantifying the degree to which a consent banner steers users towards accepting all cookies rather than exercising informed consent. Data collected from these scans can be used to assess compliance with privacy regulations like GDPR and the ePrivacy Directive, and to highlight instances of potentially deceptive design practices.

The Imperative of Oversight: Enforcement Technologies and Regulatory Action

Effective regulatory oversight is paramount in curtailing the proliferation of dark patterns and the subsequent legal violations they engender. These manipulative interface designs, which subtly coerce users into unintended actions, frequently breach existing consumer protection laws and data privacy regulations. A robust legal framework, encompassing statutes addressing unfair competition, deceptive practices, and data security, provides the necessary foundation for enforcement. However, the mere existence of laws is insufficient; diligent oversight, including proactive monitoring, investigation of complaints, and imposition of penalties, is essential to deter bad actors and ensure meaningful accountability. Consequently, the capacity of regulatory bodies to effectively identify, analyze, and prosecute dark pattern-driven violations is critical for maintaining a fair and trustworthy digital environment, safeguarding consumer rights, and fostering innovation built on ethical design principles.

Enforcement Technologies, or EnfTech, are rapidly becoming indispensable to modern regulatory oversight. These tools extend beyond simple monitoring, actively supporting compliance efforts through automated detection of deceptive design patterns and the collection of crucial evidence. EnfTech encompasses a diverse range of capabilities, from web crawlers that identify potentially manipulative interfaces to machine learning algorithms that flag suspicious user journeys. This technological support allows regulatory bodies to efficiently investigate complaints, build robust cases against organizations employing dark patterns, and ultimately ensure fairer digital interactions for consumers. By automating time-consuming processes and providing verifiable data, EnfTech is reshaping the landscape of regulatory enforcement, moving beyond reactive penalties toward proactive prevention and increased accountability.

Open source software is fundamentally reshaping the landscape of enforcement technologies (EnfTech), offering a pathway to increased transparency and collaborative development crucial for addressing complex digital violations. However, recent research reveals a significant gap between the tools currently being developed within academic circles and the pragmatic requirements of regulatory practitioners. While innovation abounds, many existing solutions lack the specific functionalities needed for robust legal evidence collection – a cornerstone of successful enforcement actions. This disconnect suggests a need for greater collaboration between researchers and regulators, focusing development efforts on creating EnfTech tools that are not only technically advanced, but also legally sound and directly applicable to real-world investigations of dark patterns and other deceptive online practices. Prioritizing these practical considerations will be vital to ensuring that open source EnfTech effectively supports regulatory oversight and fosters a more accountable digital environment.

The pursuit of automated detection of deceptive design patterns, as detailed in the research, inevitably introduces a complex interplay between technological capability and evidentiary standards. This echoes Linus Torvalds’ sentiment: “Most good programmers do programming as a hobby, and very few of them actually need to get paid for it.” The article reveals a similar dynamic; academic enthusiasm for building detection tools often outpaces the pragmatic concerns of regulators who require legally defensible, demonstrably accurate evidence. Any simplification in the detection process – automating away nuanced judgment, for example – carries a future cost in terms of legal challenge or inaccurate enforcement, demonstrating how technical debt accumulates even in the best-intentioned systems. The research emphasizes that the value isn’t simply having a tool, but ensuring its functionality aligns with the established framework of legal requirements.

What Lies Ahead?

The pursuit of automated detection for deceptive design – these ‘dark patterns’ – reveals a predictable tension. Each commit in the annals of this research is a record, and every version a chapter, yet the core problem persists: the translation of technical capability into legally admissible evidence. The tools themselves are not the destination, but rather artifacts shaped by the legal landscape – a landscape that demands more than simply identifying a pattern; it demands establishing intent, harm, and a clear violation of established precedent. Delaying alignment with these requirements is, effectively, a tax on ambition.

Future work must acknowledge this asymmetry. The focus should shift from solely improving detection rates – a metric divorced from practical application – toward establishing robust methodologies for contextualizing findings within a legal framework. The challenge isn’t merely ‘finding’ a dark pattern, but demonstrating its illegality with the requisite degree of certainty. This necessitates a deeper engagement with regulatory practitioners, not as end-users, but as co-creators of the evidentiary standards these tools must meet.

Ultimately, the longevity of this field will not be measured by the sophistication of the algorithms, but by their graceful decay – their ability to adapt and remain relevant as legal interpretations evolve and new forms of manipulation emerge. Time is not a metric to be optimized, but the medium in which these systems exist, and their true test lies in their capacity to weather its inevitable passage.

Original article: https://arxiv.org/pdf/2602.16302.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- 2025 Crypto Wallets: Secure, Smart, and Surprisingly Simple!

- Wuchang Fallen Feathers Save File Location on PC

- Gold Rate Forecast

- Brown Dust 2 Mirror Wars (PvP) Tier List – July 2025

- HSR 3.7 breaks Hidden Passages, so here’s a workaround

- Crypto Chaos: Is Your Portfolio Doomed? 😱

- 17 Black Actresses Who Forced Studios to Rewrite “Sassy Best Friend” Lines

- HSR Fate/stay night — best team comps and bond synergies

- Anime Series Hiding Clues in Background Graffiti

- MicroStrategy’s $1.44B Cash Wall: Panic Room or Party Fund? 🎉💰

2026-02-19 10:34