Author: Denis Avetisyan

A new wave of artificial intelligence is fundamentally altering search, with profound implications for the information we access and how we make decisions.

This review examines the increasing prevalence of AI-driven search, its effects on information exposure, and the potential for algorithmic bias and misinformation at scale.

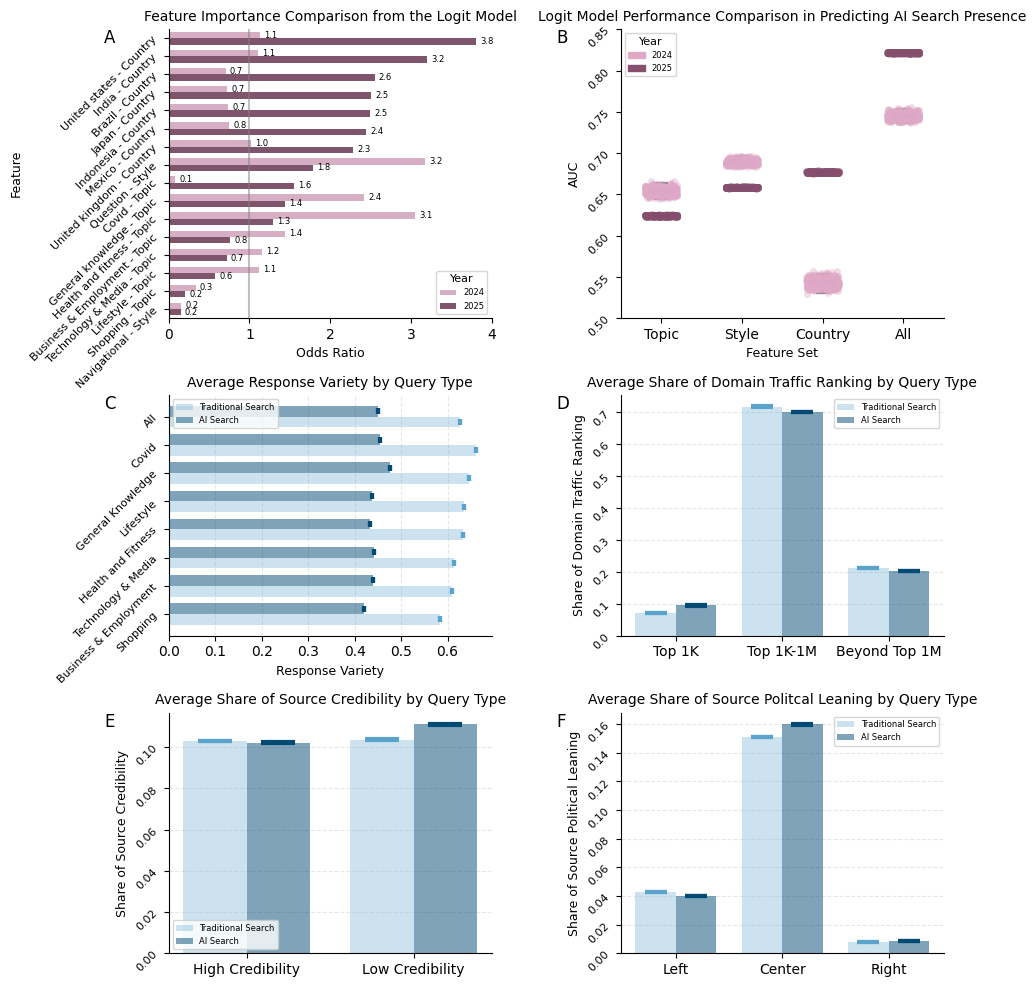

Despite increasing reliance on search engines, our understanding of the rapidly evolving landscape of AI-driven information access remains limited. This research, ‘The Rise of AI Search: Implications for Information Markets and Human Judgement at Scale’, analyzes 2.8 million search results from 243 countries to reveal a dramatic expansion of AI search accompanied by concerning trends in information exposure and source diversity. Our findings demonstrate significant policy-driven exclusions, such as the absence of AI results in France, Turkey, and China, and a 5600% increase in AI-answered Covid queries between 2024 and 2025, alongside a surfacing of less credible and ideologically skewed information. How will these shifts in information ecosystems impact public discourse, economic incentives, and ultimately, human decision-making at scale?

From Keywords to Understanding: The Evolution of Search

For decades, information retrieval relied heavily on matching keywords – a system that, while remarkably effective at locating documents containing specific terms, often falters when confronted with the subtleties of human language. A search for “best running shoes” might return shoe retailers and articles about running shoes, but a keyword engine struggles to discern whether the user wants recommendations for trail running, road racing, or simply comfortable everyday wear. This limitation becomes particularly acute with complex inquiries, ambiguous phrasing, or questions requiring synthesis of information from multiple sources. Keyword matching excels at identifying what is said but frequently misses what is meant. Users are left to sift through numerous results to find genuinely relevant information, which highlights the need for technologies capable of understanding intent, context, and the nuanced relationships between concepts.

The emergence of Large Language Models (LLMs) signals a potential revolution in how search functions, moving beyond simple keyword matching towards genuine semantic understanding of user intent. These models, trained on massive datasets, can decipher the meaning and context within a query, promising more relevant and nuanced results, even for complex or ambiguously phrased requests. However, this shift isn’t without its hurdles. LLMs are prone to “hallucinations,” generating plausible but factually incorrect information, and can be susceptible to biases present in their training data. Furthermore, ensuring the transparency and explainability of these models (that is, understanding why a particular result was generated) remains a significant challenge, raising concerns about trust and accountability as LLMs become increasingly integrated into search technologies.

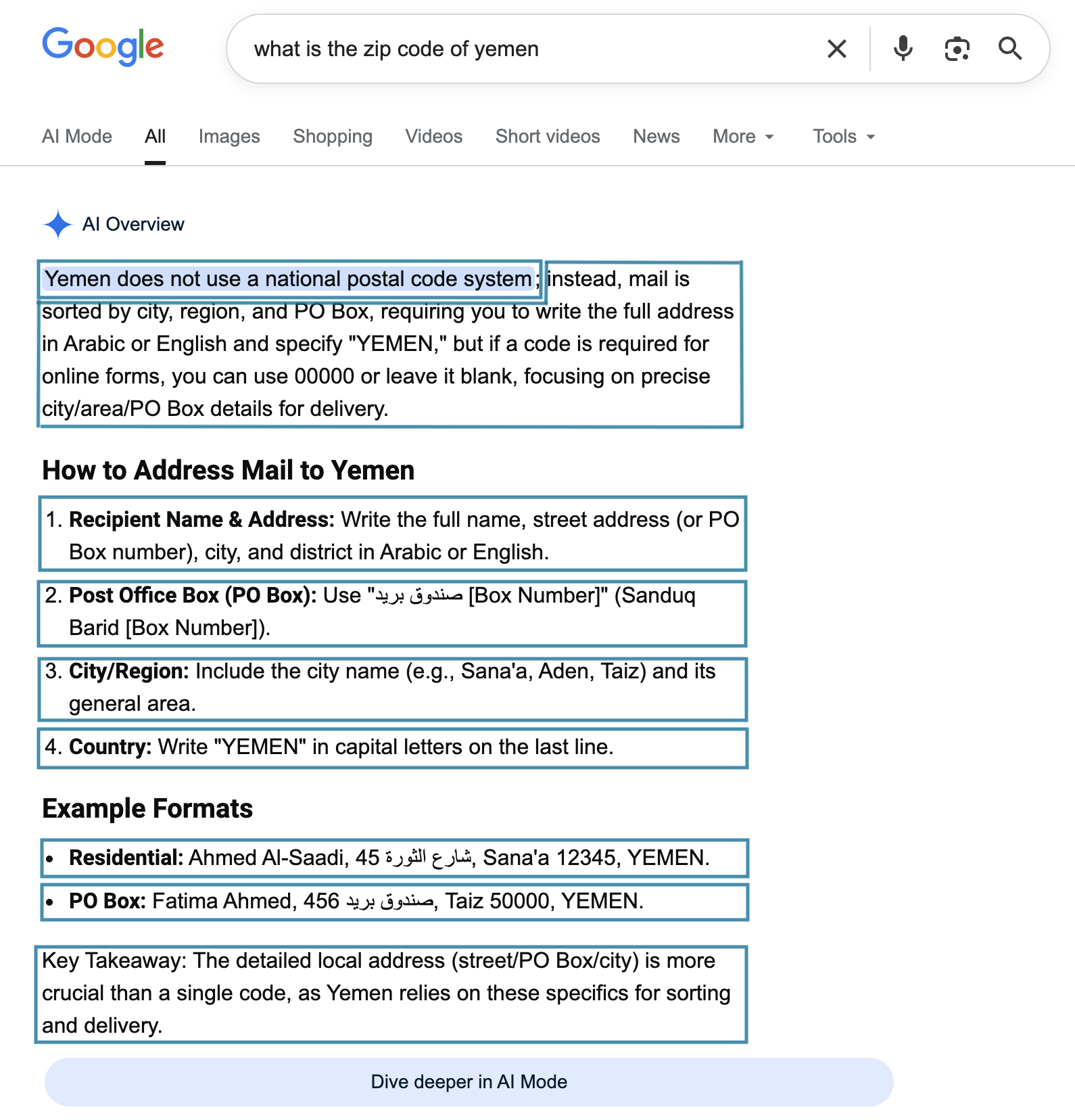

The evolution of search technology is now pivoting towards AI-driven synthesis, a process where information isn’t simply retrieved but actively constructed in response to a query. This represents a significant departure from traditional methods, promising dramatically faster answers by bypassing lengthy lists of links. However, this streamlined experience introduces a new layer of vulnerability: instead of evaluating sources directly, users rely on the AI’s interpretation and summarization. The potential for inaccuracies, biases embedded within the model’s training data, or even fabricated information (the ‘hallucinations’ noted above) becomes a central concern. While offering convenience, this shift necessitates a critical approach to AI-generated results, demanding that users assess the underlying logic and potential limitations of synthesized knowledge.

Evaluating Accuracy and Source Credibility in AI Search

AI search systems, particularly those leveraging large language models, exhibit a propensity for generating “hallucinations” – outputs that present information as factual despite lacking supporting evidence in their training data or available knowledge sources. These inaccuracies are not simply errors of omission, but rather the confident assertion of fabricated details, potentially including nonexistent citations, data, or events. The underlying mechanisms driving these hallucinations are complex, stemming from the probabilistic nature of language models which prioritize coherent text generation over strict factual correctness. While developers are actively working on mitigation strategies, such as reinforcement learning from human feedback and retrieval-augmented generation, the risk of encountering hallucinatory outputs remains a significant limitation of current AI search technologies.

Assessing source credibility in the context of AI-driven search is significantly complicated by the velocity and volume of contemporary online information. Traditional methods of evaluation, such as examining author expertise or publication reputation, are challenged by the proliferation of user-generated content, rapidly evolving websites, and the ease with which information can be manipulated or presented out of context. The dynamic nature of the web means that a source considered reliable today may become outdated or compromised tomorrow, necessitating continuous re-evaluation. Furthermore, the increasing use of AI-generated content itself introduces a layer of complexity, as determining the original source and validating the information’s accuracy becomes more difficult. This necessitates a multi-faceted approach to source evaluation, incorporating automated tools alongside critical human assessment.

Several tools attempt to assess source reliability for use in evaluating AI search results, though each has limitations. Media Bias/Fact Check (MBFC) provides ratings based on factual reporting and bias, utilizing a methodology that considers multiple factors but is subject to human evaluation and potential subjective interpretations. Cisco Umbrella, a cybersecurity service, categorizes domains based on threat intelligence and content, offering insights into a source’s overall security profile and potential for malicious activity; however, categorization doesn’t directly equate to factual accuracy or journalistic integrity. Both resources require critical assessment of their methodologies and should be used in conjunction with other verification methods, as automated assessments are not infallible and the online information landscape is constantly evolving.

Measuring the Impact of AI on User Search Behavior

Monitoring AI exposure, defined as the frequency with which users are presented with AI-generated answers within search results, is a critical metric for assessing the technology’s impact on user behavior. Data indicates a substantial increase in AI search exposure, expanding from availability in 7 countries during 2024 to 229 countries by 2025. This rapid proliferation suggests a widespread integration of AI-driven responses into the global search landscape and necessitates ongoing measurement to understand corresponding shifts in user engagement and information-seeking patterns.

Analysis of Search Engine Results Pages (SERPs) uses a SERP API to collect data on organic rankings, paid advertisements, and featured snippets. To quantify the diversity of responses presented to users, techniques such as Sentence-BERT (SBERT) are applied. SBERT generates vector embeddings of search results, enabling the calculation of semantic similarity between different results. Researchers can then assess ‘Response Variety’ from the cosine distance (one minus the cosine similarity) between these embeddings: a higher average pairwise distance indicates a greater diversity of information presented, while tightly clustered vectors suggest redundancy. This methodology allows for the identification of patterns in how search engines present information, and how the introduction of AI-generated summaries impacts the breadth of results available to users.
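One way to read the ‘Response Variety’ measure described above is as the mean pairwise cosine distance between result embeddings. The sketch below illustrates that reading only; it is not the study's implementation. The function names are hypothetical, and the two-dimensional toy vectors stand in for real SBERT embeddings, which would have hundreds of dimensions and come from an embedding model.

```python
import math
from itertools import combinations


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def response_variety(embeddings):
    """Mean pairwise cosine distance (1 - similarity) over all result pairs.

    Hypothetical helper: returns 0.0 when fewer than two results exist,
    since there is no pair to compare.
    """
    pairs = list(combinations(embeddings, 2))
    if not pairs:
        return 0.0
    return sum(1.0 - cosine_similarity(u, v) for u, v in pairs) / len(pairs)


# Toy stand-ins for SBERT embeddings (assumption: real vectors are much longer).
redundant_results = [[1.0, 0.0], [1.0, 0.0], [2.0, 0.0]]  # all point the same way
diverse_results = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]   # spread across directions

print(response_variety(redundant_results))
print(response_variety(diverse_results))
```

Results that all point in the same direction score a variety of zero, while results spread across different directions score higher, which matches the interpretation in the text that clustering signals redundancy.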

Analysis leveraging datasets such as the Natural Questions Dataset and Google Trends indicates a correlation between AI-generated summaries and user search behavior. Specifically, users presented with AI summaries demonstrate an 80% ‘Zero-Click Rate’ – meaning they do not click on any further search results – compared to a 60% Zero-Click Rate for users who are not exposed to AI summaries. This data suggests that AI-generated responses are satisfying user information needs directly within the search results page, reducing the necessity for users to navigate to external websites.
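The Zero-Click Rate comparison above can be sketched as a simple ratio computed separately for sessions with and without an AI summary. This is a minimal illustration under stated assumptions: the session record fields (`ai_summary`, `clicks`) and the function names are hypothetical, and the toy sessions are constructed only to mirror the 80% and 60% figures quoted in the text, not drawn from the study's data.

```python
def zero_click_rate(sessions):
    """Share of sessions in which the user clicked no search result."""
    if not sessions:
        raise ValueError("need at least one session")
    zero_click = sum(1 for s in sessions if s["clicks"] == 0)
    return zero_click / len(sessions)


def rates_by_exposure(sessions):
    """Zero-click rate for sessions with and without an AI summary."""
    with_ai = [s for s in sessions if s["ai_summary"]]
    without_ai = [s for s in sessions if not s["ai_summary"]]
    return zero_click_rate(with_ai), zero_click_rate(without_ai)


# Toy data (assumption): 5 sessions per group, built to mirror the quoted rates.
toy_sessions = (
    [{"ai_summary": True, "clicks": 0}] * 4
    + [{"ai_summary": True, "clicks": 2}]
    + [{"ai_summary": False, "clicks": 0}] * 3
    + [{"ai_summary": False, "clicks": 1}] * 2
)

rate_with_ai, rate_without_ai = rates_by_exposure(toy_sessions)
print(f"with AI summary: {rate_with_ai:.0%}, without: {rate_without_ai:.0%}")
```

A difference between the two rates shows association only; attributing it to the summaries themselves would also require controlling for query type, since AI summaries may appear more often on queries that rarely lead to clicks anyway.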

Analysis of user search behavior revealed significant increases in AI-generated response exposure across multiple key global markets. Specifically, the United States experienced a 67% rise in AI exposure, India a 60% increase, and the United Kingdom a 54% increase. Mexico, Indonesia, Japan, and Brazil showed larger gains of 73%, 76%, 78%, and 82%, respectively. These figures represent observed changes in the frequency with which users are presented with AI-generated answers within search results during the measured period.

The Future of Search: Balancing Innovation and Maintaining Trust

The increasing prevalence of ‘Zero-Click Searches’, fueled by advancements in artificial intelligence, marks a notable shift in how users interact with information and may reduce website traffic. Recent analysis indicates that when users encounter AI-generated summaries within search results, they click through to external websites only about 8% of the time, compared with roughly 15% when no AI summary is shown, suggesting that these summaries often satisfy user queries directly within the search engine results page. This trend implies a fundamental change in the search paradigm, where AI aims to synthesize information and deliver concise answers, potentially diminishing the need for users to navigate to and explore external sources.

Growing anxieties surrounding the concentration of information control within AI-driven search engines have spurred regulatory discussions, notably the proposal for a ‘Link-Out Floor’. This potential regulation aims to ensure search results consistently direct users to original source material, countering the tendency of some AI systems to synthesize information and present it directly without attribution. Advocates believe a Link-Out Floor would mitigate the risk of biased or incomplete information dominating search results, fostering greater transparency and accountability. The core principle involves establishing a minimum percentage of search results that must link to external websites, thereby safeguarding the diversity of perspectives and empowering users to independently verify information presented by AI systems. This approach seeks to balance the convenience of AI-powered summaries with the critical need for open access to the broader web and the sources that underpin knowledge.
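The core principle of a Link-Out Floor, a minimum share of results that link to external websites, can be expressed as a simple compliance check. The sketch below is illustrative only: the proposal as described here does not specify a threshold or a data format, so the 0.5 floor, the result record fields, and the function names are all hypothetical.

```python
def link_out_share(results):
    """Fraction of results on a page that carry at least one external link."""
    if not results:
        raise ValueError("need at least one result")
    linked = sum(1 for r in results if r["external_links"])
    return linked / len(results)


def meets_link_out_floor(results, floor):
    """True when the page's link-out share is at or above the floor."""
    if not 0.0 <= floor <= 1.0:
        raise ValueError("floor must be a fraction between 0 and 1")
    return link_out_share(results) >= floor


# Hypothetical threshold; the text does not state an actual value.
HYPOTHETICAL_FLOOR = 0.5

# Toy results page (assumption): two of four items link out.
page = [
    {"kind": "ai_summary", "external_links": []},
    {"kind": "organic", "external_links": ["https://example.org/a"]},
    {"kind": "organic", "external_links": ["https://example.org/b"]},
    {"kind": "featured_snippet", "external_links": []},
]

print(link_out_share(page), meets_link_out_floor(page, HYPOTHETICAL_FLOOR))
```

How a "result" is counted (per page element, per screen area, or per query) would materially change the outcome of such a check, and the description above leaves that open.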

The contemporary search environment is undergoing a rapid transformation, driven by the proliferation of artificial intelligence-powered engines such as Google Search, ChatGPT Search, Perplexity AI, Microsoft Bing, and Arc Search. These platforms are not merely indexing the web, but actively synthesizing information and presenting direct answers, reshaping user behavior and information access. Notably, Executive Order 14149 (‘Restoring Freedom of Speech and Ending Federal Censorship’) coincided with a dramatic 5600% increase in AI-generated responses to queries concerning Covid-19 between 2024 and 2025, an indication of both the technology’s potential for rapid information dissemination and its growing role in answering questions on critical public health topics. This shift towards direct answers, while offering convenience, is prompting debate about the future of website traffic and the importance of maintaining a diverse and open web ecosystem.

The study illustrates how increasingly complex systems, such as AI-driven search, introduce hidden costs. A line attributed to Claude Shannon holds that “The most important thing in communication is to convey the meaning, not just the information.” This principle resonates with the findings regarding algorithmic bias and misinformation. The research demonstrates that while AI search excels at delivering information, ensuring meaningful and unbiased information exposure remains a critical challenge. Every new dependency – each layer of the algorithm, each data source – introduces potential distortions, demanding a holistic understanding of the information ecosystem to maintain integrity and foster genuine understanding.

Where Do We Go From Here?

The observed acceleration in AI-driven search isn’t merely a technical shift; it’s a restructuring of the information ecosystem itself. The study highlights vulnerabilities – amplification of bias, susceptibility to misinformation – but these aren’t bugs in the system, they’re features of any complex adaptive system striving for efficiency. A clever algorithm, optimized for engagement, will predictably discover, and exploit, the shortcuts to attention. If a design feels clever, it’s probably fragile.

Future work must move beyond symptom-checking, that is, cataloging the biases present, to understanding the structural forces that generate them. The focus shouldn’t be on ‘fixing’ algorithms, but on recognizing that information exposure is always a constructed reality. The challenge lies not in eliminating bias, which is an impossible task, but in designing systems that make those biases transparent and auditable.

Ultimately, the question isn’t whether AI search is ‘good’ or ‘bad,’ but whether its inherent tendencies towards simplification and efficiency align with a more nuanced understanding of knowledge itself. The elegance of a system is not measured by its complexity, but by its ability to maintain stability in the face of inevitable perturbation. The simple solutions are, invariably, the enduring ones.

Original article: https://arxiv.org/pdf/2602.13415.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-17 16:26