Author: Denis Avetisyan

As autonomous vehicles navigate increasingly complex environments, maintaining reliable object detection in challenging weather conditions is paramount for safety and performance.

This review assesses methods to evaluate and enhance the robustness of object detection systems in adverse weather, focusing on performance limits and data augmentation strategies.

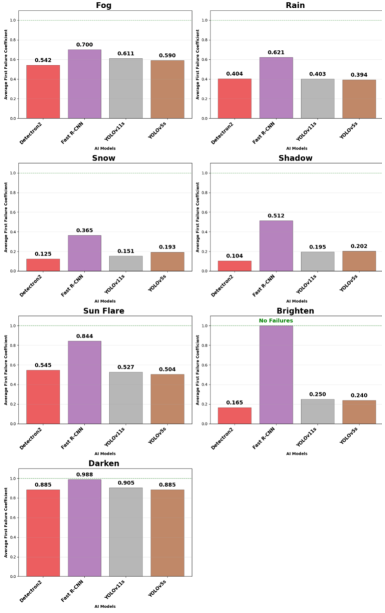

Ensuring the safe and reliable operation of autonomous vehicles requires robust performance beyond ideal conditions. This is addressed in ‘Robustness of Object Detection of Autonomous Vehicles in Adverse Weather Conditions’, which proposes a method for evaluating and enhancing the resilience of object detection models to challenging environmental factors. The study quantifies robustness with the average first failure coefficient (AFFC), computed across synthetically generated adverse weather scenarios including fog, rain, and snow, and reports significant variations in robustness between models such as Faster R-CNN and YOLO variants. Can this approach not only identify operational limits but also guide the development of more consistently reliable perception systems for all-weather autonomous driving?

The Imperative of Reliable Perception in Autonomous Systems

The functionality of autonomous vehicles is deeply intertwined with the precision of object detection systems, which serve as the ‘eyes’ of the vehicle, identifying and classifying surrounding elements like pedestrians, other vehicles, and traffic signals. However, these systems, often reliant on cameras and lidar, are demonstrably susceptible to environmental interference. Variations in lighting, such as glare or shadows, and the presence of obstructions like heavy rain or dense fog, can significantly reduce the accuracy and reliability of object detection. This vulnerability isn’t merely a matter of inconvenience; it directly impacts the vehicle’s ability to make safe navigational decisions, potentially leading to collisions or other hazardous situations. Consequently, improving the resilience of object detection in real-world conditions is paramount to the widespread and safe adoption of self-driving technology.

Object detection systems, pivotal for applications like autonomous driving and advanced driver-assistance systems, experience substantial performance declines when confronted with inclement weather. Rain, fog, and snow introduce visual obstructions and distort sensor data, creating ‘noise’ that confuses algorithms trained on clear-weather datasets. This degradation manifests as reduced detection accuracy, increased false positives, and difficulty in estimating object distances, all critical failures when a vehicle must react to pedestrians, cyclists, or other vehicles. Rain in particular can shorten the effective range of lidar and impair camera-based systems, so timely and accurate object recognition is compromised and the risk of collision rises.

Current object detection systems, while increasingly sophisticated, have inherent limitations that call for a shift toward operational robustness. These systems, often trained on datasets reflecting ideal conditions, struggle to maintain accuracy and reliability when confronted with the unpredictable variables of the real world. A focus on robustness isn’t simply about improving performance in adverse weather; it requires rethinking system design, incorporating techniques like sensor fusion, domain adaptation, and algorithms less susceptible to noise and occlusion. Consistent and dependable functionality across all operational conditions, from blinding snowstorms to dense fog and torrential rain, is essential for deploying autonomous technologies and other applications that depend on accurate environmental perception.

Data Augmentation: Expanding the Perceptual Horizon

Synthetic data generation and data augmentation are complementary techniques used to enhance the robustness of machine learning models. Synthetic data involves creating entirely new data instances programmatically, while data augmentation modifies existing data through transformations like rotations, scaling, or noise injection. Combining these approaches allows for a substantial expansion of the training dataset with diverse examples, particularly valuable when real-world data is scarce or biased. This expanded dataset exposes the model to a wider range of scenarios, improving its ability to generalize to unseen data and maintain performance under varying conditions. The process doesn’t require additional real-world data collection, reducing costs and time associated with acquiring and labeling large datasets.

Artificially expanding training datasets with variations that simulate adverse weather improves model generalization and sustained accuracy. This is achieved by creating modified versions of existing data (adding rain, snow, fog, or varying lighting conditions) to expose the model to a wider range of possible inputs. The model then learns to identify core features despite these variations, reducing its reliance on the specific, ideal conditions present in the original dataset. This technique addresses the shortage of real-world data captured during challenging weather events, thereby enhancing the model’s performance and reliability in diverse operational environments.
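As an illustration of weather-style augmentation, the sketch below applies uniform synthetic fog to a grayscale image using the standard atmospheric scattering model, `I_fog = I * t + A * (1 - t)` with transmission `t = exp(-beta)`. It is a minimal sketch, not the pipeline used in the paper: the function names, the `max_beta` and `airlight` defaults, and the assumption of constant scene depth are all hypothetical choices made for the example.

```python
import math
from typing import List

# Grayscale image as rows of pixel intensities in [0.0, 1.0] (assumed layout).
Image = List[List[float]]


def add_uniform_fog(image: Image, severity: float,
                    max_beta: float = 3.0, airlight: float = 0.9) -> Image:
    """Blend every pixel toward a bright airlight value.

    severity is in [0, 1]; 0 leaves the image unchanged and 1 applies the
    densest fog. Scene depth is assumed constant, so one transmission
    value t is shared by all pixels (a simplification).
    """
    if not 0.0 <= severity <= 1.0:
        raise ValueError("severity must be in [0, 1]")
    t = math.exp(-severity * max_beta)  # transmission: 1 = clear, near 0 = opaque
    return [[p * t + airlight * (1.0 - t) for p in row] for row in image]


def fog_sweep(image: Image, levels: int = 5) -> List[Image]:
    """Return the image at evenly spaced severities 0, 1/levels, ..., 1."""
    return [add_uniform_fog(image, k / levels) for k in range(levels + 1)]
```

A sweep like `fog_sweep(image, levels=5)` yields one copy of the image per severity step, which can serve both as extra training data and as the graded test set for a stress-based robustness metric.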

Autonomous vehicle development frequently encounters limitations due to the scarcity of real-world data captured during adverse conditions – such as heavy rain, snow, fog, or nighttime driving. Collecting sufficient data under these challenging scenarios is both expensive and potentially unsafe. This data limitation directly impacts the performance and reliability of perception systems, particularly those relying on machine learning. Consequently, models trained primarily on clear-weather datasets exhibit reduced accuracy and increased error rates when deployed in real-world conditions involving poor visibility or inclement weather. The inability to adequately train on edge cases creates a significant obstacle to achieving Level 4 or Level 5 autonomy, necessitating alternative data acquisition strategies.

Beyond mAP: Quantifying True Operational Resilience

Mean Average Precision (mAP), while a standard evaluation metric in object detection, primarily assesses performance under ideal conditions and fails to adequately capture performance degradation when faced with real-world disturbances. These disturbances include factors such as changes in lighting, weather events like rain or fog, or the introduction of sensor noise. A model achieving high mAP on a clean dataset may experience significant performance drops when deployed in a less controlled environment, a limitation not reflected in the mAP score. Consequently, reliance solely on mAP can lead to an overestimation of a model’s true operational capability and an inaccurate assessment of its robustness to common environmental stressors.

The First Failure Coefficient (FFC) is a metric designed to assess the operational robustness of object detection models under increasing levels of environmental stress. It sets a threshold for acceptable performance, defined as a minimum mAP of 50%, and records the stress level at which the model’s mAP first falls below that threshold, normalized against the maximum possible stress level. The result is a single value representing the model’s resilience: a higher FFC indicates greater robustness, because the model maintains acceptable performance under more severe conditions. The metric is calculated per stress type, and the per-type values are averaged into a single robustness score, the Average First Failure Coefficient (AFFC).
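The calculation described above can be sketched in a few lines. This is one reading of the prose definition, not the paper's reference implementation: the function names are hypothetical, and returning 1.0 for a model that never drops below the threshold is an assumed convention.

```python
from statistics import fmean
from typing import Dict, Sequence


def first_failure_coefficient(stress_levels: Sequence[float],
                              map_scores: Sequence[float],
                              max_stress: float,
                              threshold: float = 0.5) -> float:
    """Stress level of the first mAP drop below threshold, divided by max_stress."""
    if len(stress_levels) != len(map_scores):
        raise ValueError("stress_levels and map_scores must have equal length")
    if max_stress <= 0:
        raise ValueError("max_stress must be positive")
    # Walk the measurements from mildest to most severe stress.
    for stress, score in sorted(zip(stress_levels, map_scores)):
        if score < threshold:
            return stress / max_stress
    # Assumption: a model that never fails gets the maximum coefficient.
    return 1.0


def average_ffc(ffc_by_stress_type: Dict[str, float]) -> float:
    """Average the per-stress-type coefficients into one robustness score."""
    return fmean(ffc_by_stress_type.values())
```

For example, with stress levels `[20, 40, 60, 80, 100]` and mAP values `[0.8, 0.7, 0.6, 0.45, 0.3]`, the first score below 0.5 occurs at level 80, so the coefficient is 80 / 100 = 0.8. Note that under this convention a model failing only at the maximum level scores the same as one that never fails; a real implementation would need to decide how to separate those cases.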

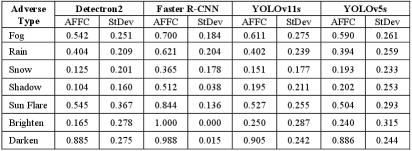

Evaluation of YOLOv5 and Faster R-CNN object detection models using the Corruption Benchmarks dataset, in conjunction with the Average First Failure Coefficient (AFFC), indicates superior robustness for Faster R-CNN. Specifically, Faster R-CNN achieved an AFFC of 71.9% when subjected to various weather-related corruptions, including rain, snow, fog, and haze, that simulate real-world operational stress. The metric captures the point at which performance degrades significantly, and the result shows that Faster R-CNN maintains acceptable performance at higher corruption intensities than the tested YOLOv5 configurations. The AFFC was calculated as the percentage of corruption severity levels at which the model still achieves a baseline level of performance, defined by a minimum mAP threshold.

Architectural Refinement: Forging Models Impervious to Adverse Conditions

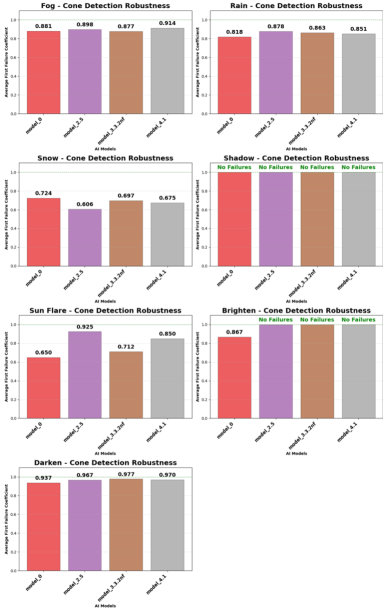

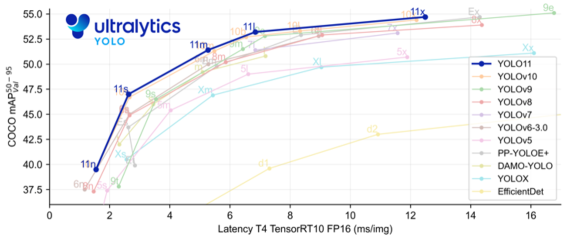

The Oxford Brookes Racing Autonomous (OBRA) team developed YOLOv11 as an iterative improvement upon the YOLOv5 object detection model. This new model incorporates architectural modifications and training strategies specifically intended to increase robustness in challenging environmental conditions. The core design goal was to maintain high detection accuracy while mitigating performance degradation caused by factors such as poor visibility, varying lighting, and inclement weather. Implementation involved integrating YOLOv11 into the team’s autonomous vehicle perception pipeline and conducting rigorous testing to validate its enhanced capabilities compared to the baseline YOLOv5 model.

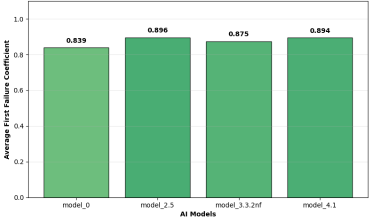

Performance of the YOLOv11 model was evaluated by simulating adverse weather conditions, with the Average First Failure Coefficient (AFFC) as the key metric. The AFFC quantifies robustness as the share of the simulated severity range a model withstands before its performance degrades below an acceptable threshold. Testing protocols included scenarios designed to mimic conditions such as sun flares and snowfall, allowing comparison against a baseline model (M0). Results indicate that YOLOv11 maintains accurate performance better under these simulated disturbances, as evidenced by higher AFFC values across multiple test conditions.

Model M2.5 demonstrated a 6% increase in the Average First Failure Coefficient (AFFC) compared to the baseline model M0, achieving an overall AFFC of 89.6%. Performance was further evaluated under specific adverse conditions: the model attained an AFFC of 92.5% under simulated sun flare interference and 72.4% under simulated snow interference. These results indicate a measurable improvement in robustness across various challenging operational scenarios.

Towards Truly Autonomous and Reliable Systems: A Call for Resilient Perception

Object detection models intended for real-world deployment, particularly in autonomous systems, often falter when faced with conditions that differ from their training data: a sudden downpour, dense fog, or glaring sunlight can drastically reduce accuracy. Researchers are now emphasizing operational robustness, the ability of a model to maintain performance across a wide spectrum of environmental challenges. This isn’t simply about achieving high average accuracy; it demands evaluating models with metrics like the First Failure Coefficient, which records how much environmental stress a model tolerates before its mAP first falls below an acceptable threshold. By prioritizing this coefficient alongside traditional metrics, developers can proactively identify and address vulnerabilities, building object detection systems that are demonstrably resilient to adverse weather and perform more safely and reliably in complex, uncontrolled environments.

The development of robust object detection models isn’t merely a technological refinement, but a foundational requirement for realizing the full potential of autonomous vehicles. Successful navigation in real-world conditions demands consistent and accurate performance, not just in ideal scenarios, but also when confronted with the unpredictable complexities of adverse weather, varied lighting, and dynamic environments. This consistent performance is paramount for ensuring passenger safety, reducing accident rates, and fostering public trust in self-driving technology. Consequently, prioritizing operational robustness translates directly into enabling the safe and widespread deployment of autonomous systems, paving the way for innovations in transportation, logistics, and accessibility across diverse geographical locations and challenging operational domains.

Continued progress in autonomous systems requires tackling catastrophic forgetting, a phenomenon where newly learned information overwrites previously acquired knowledge and undermines long-term reliability. Researchers are actively investigating ways to mitigate this, including techniques like replay buffers and elastic weight consolidation, which aim to preserve past learning while accommodating new data. Complementing these approaches, novel data augmentation strategies that go beyond simple rotations or flips promise to create more robust models. These advanced augmentations could simulate rare but critical edge cases, such as unusual lighting conditions or sensor noise, exposing the system to a wider range of scenarios during training and bolstering its resilience in unpredictable real-world environments.
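To make the replay-buffer idea concrete, here is a minimal sketch of a fixed-size memory that keeps a uniform random subset of everything seen so far (reservoir sampling) and mixes stored samples into each new batch. It is an illustration only; the class, the `mixed_batch` helper, and the sample format are hypothetical and not taken from the paper.

```python
import random
from typing import Any, List, Optional, Sequence


class ReplayBuffer:
    """Fixed-size memory of past training samples, filled by reservoir sampling."""

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.items: List[Any] = []
        self.seen = 0  # total number of samples offered so far
        self._rng = random.Random(seed)

    def add(self, item: Any) -> None:
        """Offer one sample; every sample seen so far is equally likely to be kept."""
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
            return
        slot = self._rng.randrange(self.seen)
        if slot < self.capacity:
            self.items[slot] = item  # overwrite a stored sample

    def sample(self, k: int) -> List[Any]:
        """Draw up to k stored samples without replacement."""
        return self._rng.sample(self.items, min(k, len(self.items)))


def mixed_batch(new_samples: Sequence[Any], buffer: ReplayBuffer,
                replay_count: int) -> List[Any]:
    """Combine new samples with replayed old ones, then store the new ones."""
    batch = list(new_samples) + buffer.sample(replay_count)
    for sample in new_samples:
        buffer.add(sample)
    return batch
```

When a detector is fine-tuned on, say, snow images, each batch built with `mixed_batch` still contains some clear-weather samples, which is the mechanism by which replay is meant to limit forgetting of the earlier conditions.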

The pursuit of reliable autonomous systems demands a focus extending beyond mere performance metrics. This paper’s emphasis on identifying operational limits under adverse weather conditions aligns perfectly with a mathematically grounded approach to machine learning. As Andrew Ng aptly stated, “Machine learning is essentially about learning a mapping from inputs to outputs.” However, a mapping’s validity isn’t determined solely by successful predictions on clean datasets; it requires rigorous testing against edge cases, such as those explored in this study. Establishing these limits isn’t about accepting imperfection, but rather about acknowledging the boundaries within which the system can provably operate safely, offering a level of certainty exceeding that of purely empirical evaluation. The work serves as a potent reminder that in the chaos of data, only mathematical discipline endures.

Beyond the Horizon

The pursuit of robustness in object detection, as illuminated by this work, frequently resembles an exercise in applied pragmatism rather than rigorous science. While identifying performance degradation under adverse weather is valuable – and belatedly so – it merely addresses symptoms. The fundamental question of what constitutes ‘detectable’ under genuinely challenging conditions remains largely untouched. One suspects that current metrics, often derived from idealized datasets, offer a dangerously optimistic view of real-world performance.

Future efforts must shift from empirical testing – endlessly augmenting datasets with synthetic rain and fog – toward a more formal analysis of sensor limitations and algorithmic invariants. To declare an object ‘detected’ implies a quantifiable degree of certainty, yet this certainty is rarely, if ever, explicitly linked to the underlying physics of the sensing process. The field needs a theoretical framework that can predict, rather than merely measure, the boundaries of reliable operation.

Optimization without analysis is, of course, self-deception. This work serves as a necessary, if incremental, step. However, the true challenge lies not in building models that appear to function in difficult conditions, but in understanding why they fail, and establishing mathematically sound operational limits beyond which any detection can be considered inherently unreliable.

Original article: https://arxiv.org/pdf/2602.12902.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 20:15