Author: Denis Avetisyan

New research reveals that combining the power of artificial intelligence with established operations research and human insight yields significant improvements in managing supply and demand.

This review demonstrates the complementary benefits of integrating Large Language Models with traditional optimization techniques and human expertise for enhanced inventory control systems.

Effective inventory control often relies on rigid modeling assumptions that struggle with dynamic demand and incomplete information. This research, ‘AI Agents for Inventory Control: Human-LLM-OR Complementarity’, investigates how to integrate the strengths of traditional operations research (OR), large language models (LLMs), and human expertise in a multi-period inventory setting. Results demonstrate that augmenting OR algorithms with LLMs and incorporating human oversight leads to improved performance, revealing a complementary, rather than substitutive, relationship between these approaches. Given these findings, what is the optimal architecture for human-AI collaboration in complex supply chain decision-making?

Deconstructing the Inventory Illusion

Supply chain efficiency fundamentally hinges on adept inventory management, a delicate balancing act between minimizing operational costs and consistently meeting customer demand. Insufficient inventory risks lost sales and diminished customer satisfaction, while excessive stock ties up capital, incurs storage expenses, and increases the potential for obsolescence. This interplay isn’t simply about finding a midpoint; it requires a nuanced understanding of demand patterns, lead times, and the associated costs of holding and ordering goods. Consequently, businesses strive to optimize inventory levels to ensure product availability without creating a financial burden, directly impacting profitability and long-term sustainability. A well-managed inventory isn’t merely a list of items; it’s a dynamic system that responds to market fluctuations and strengthens a company’s competitive position.

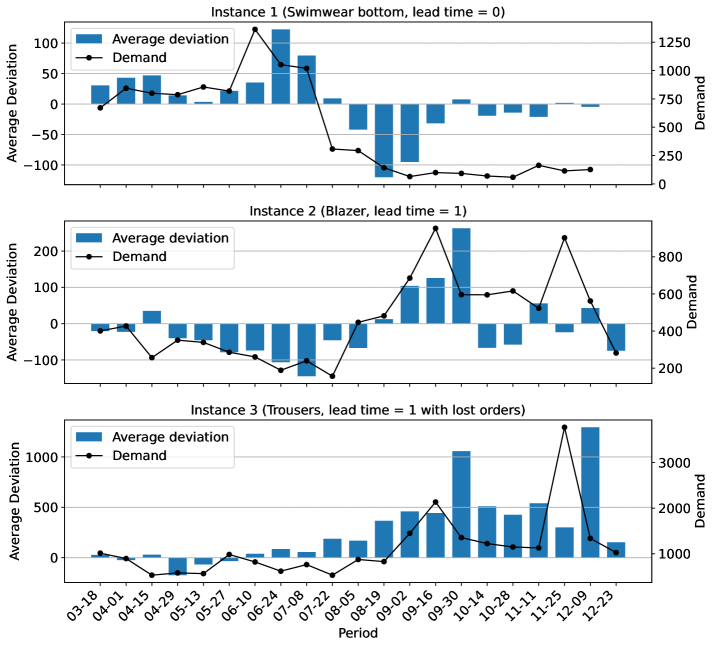

Conventional inventory control strategies often falter when confronted with the dynamism of actual supply chains. Historically, many systems relied on static forecasts and assumed predictable delivery schedules, but real-world demand rarely remains consistent and lead times are frequently subject to unforeseen disruptions. This disconnect results in a precarious balancing act: overestimation of need leads to costly excess inventory – tying up capital and risking obsolescence – while underestimation quickly manifests as stockouts, frustrating customers and potentially losing sales. The inherent difficulty lies in accurately modeling these uncertainties; even minor deviations from projected demand or lead times can cascade through the system, amplifying errors and creating significant inefficiencies. Consequently, businesses are increasingly seeking more robust and adaptive approaches to navigate these inherent complexities and maintain optimal inventory levels.

The core of effective inventory control extends beyond simply tracking stock levels; it’s fundamentally challenged by inherent uncertainties and financial considerations. The `Inventory_Control_Problem` isn’t a static calculation, but a dynamic one heavily influenced by `Stochastic_Lead_Time` – the unpredictable nature of how long it takes to replenish supplies. This variability forces businesses to hold safety stock, adding to costs. Simultaneously, optimizing the `Cost_Structure` – encompassing holding costs, ordering costs, and potential penalties for stockouts – becomes paramount. A robust solution must therefore balance the risk of insufficient inventory against the financial burden of maintaining large reserves, often requiring sophisticated modeling to navigate these competing pressures and achieve optimal efficiency. The total cost decomposes as:

$$\text{Cost} = \text{HoldingCost} + \text{OrderingCost} + \text{StockoutCost}$$
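The Python sketch below computes the per-period cost to make this decomposition concrete. It assumes three things: a fixed cost for each order placed, a holding cost for each unit left on hand at the end of the period, and a penalty for each unit of unmet demand. The names `CostParams` and `period_cost` are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class CostParams:
    holding: float   # cost per unit held at the end of a period
    ordering: float  # assumed fixed cost charged whenever an order is placed
    stockout: float  # penalty per unit of demand that could not be served


def period_cost(on_hand_end: float, order_qty: float, unmet: float, p: CostParams) -> float:
    """Cost = HoldingCost + OrderingCost + StockoutCost for one period."""
    holding_cost = p.holding * max(on_hand_end, 0.0)
    ordering_cost = p.ordering if order_qty > 0 else 0.0
    stockout_cost = p.stockout * unmet
    return holding_cost + ordering_cost + stockout_cost


if __name__ == "__main__":
    params = CostParams(holding=1.0, ordering=20.0, stockout=5.0)
    # 12 units held, one order placed, no lost sales: 12 + 20 + 0
    print(period_cost(on_hand_end=12, order_qty=30, unmet=0, p=params))  # 32.0
```

Summing `period_cost` over the planning horizon gives the total cost that a policy tries to minimize. Many models also include a per-unit purchase cost, which is omitted here for brevity.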

Baseline Versus the Algorithm: A Test of Systems

The `OR_Baseline` serves as a quantitative benchmark for evaluating more complex decision-making strategies. It uses a `Capped_Base_Stock` policy. Each period, the policy orders enough to raise the inventory position (stock on hand plus outstanding orders) up to a target base-stock level, but never more than a fixed cap per order. The policy parameters are determined from historical demand data, with the aim of satisfying anticipated demand while limiting holding costs. This approach is purely data-driven and does not incorporate external factors or subjective assessments. Its performance, measured by metrics such as total cost and fill rate, establishes the standard against which the `Human_Decision_Maker` and `LLM_Agent` are compared.
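A capped base-stock rule can be sketched in a few lines. This minimal version rests on two stated assumptions. First, the base-stock level is set from the mean and standard deviation of historical demand over the lead time plus one review period. That is a common textbook heuristic, not necessarily how the paper calibrates it. Second, `cap` is a hypothetical per-order limit supplied by the caller.

```python
import math
import statistics
from typing import List


def estimate_base_stock(history: List[float], lead_time: int, z: float = 1.645) -> float:
    """Textbook estimate: mean demand over lead_time + 1 periods plus z standard deviations.

    Assumes demand is i.i.d. across periods; needs at least two observations.
    """
    periods = lead_time + 1
    mu = statistics.mean(history)
    sigma = statistics.stdev(history)
    return periods * mu + z * sigma * math.sqrt(periods)


def capped_base_stock_order(net_inventory: float, pipeline: List[float],
                            base_stock: float, cap: float) -> float:
    """Order up to `base_stock` on inventory position, never exceeding `cap` units."""
    # Inventory position: on hand (minus any backorders) plus orders still in transit.
    inventory_position = net_inventory + sum(pipeline)
    return min(max(base_stock - inventory_position, 0.0), cap)


if __name__ == "__main__":
    history = [10, 12, 8, 11, 9]  # hypothetical demand history
    s = estimate_base_stock(history, lead_time=2)
    print(round(s, 1))
    print(capped_base_stock_order(net_inventory=5, pipeline=[10, 0], base_stock=s, cap=15))
```

Clipping the order at `cap` keeps a sudden demand spike from triggering an unreasonably large order. The cost is a slower recovery when inventory falls far below target.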

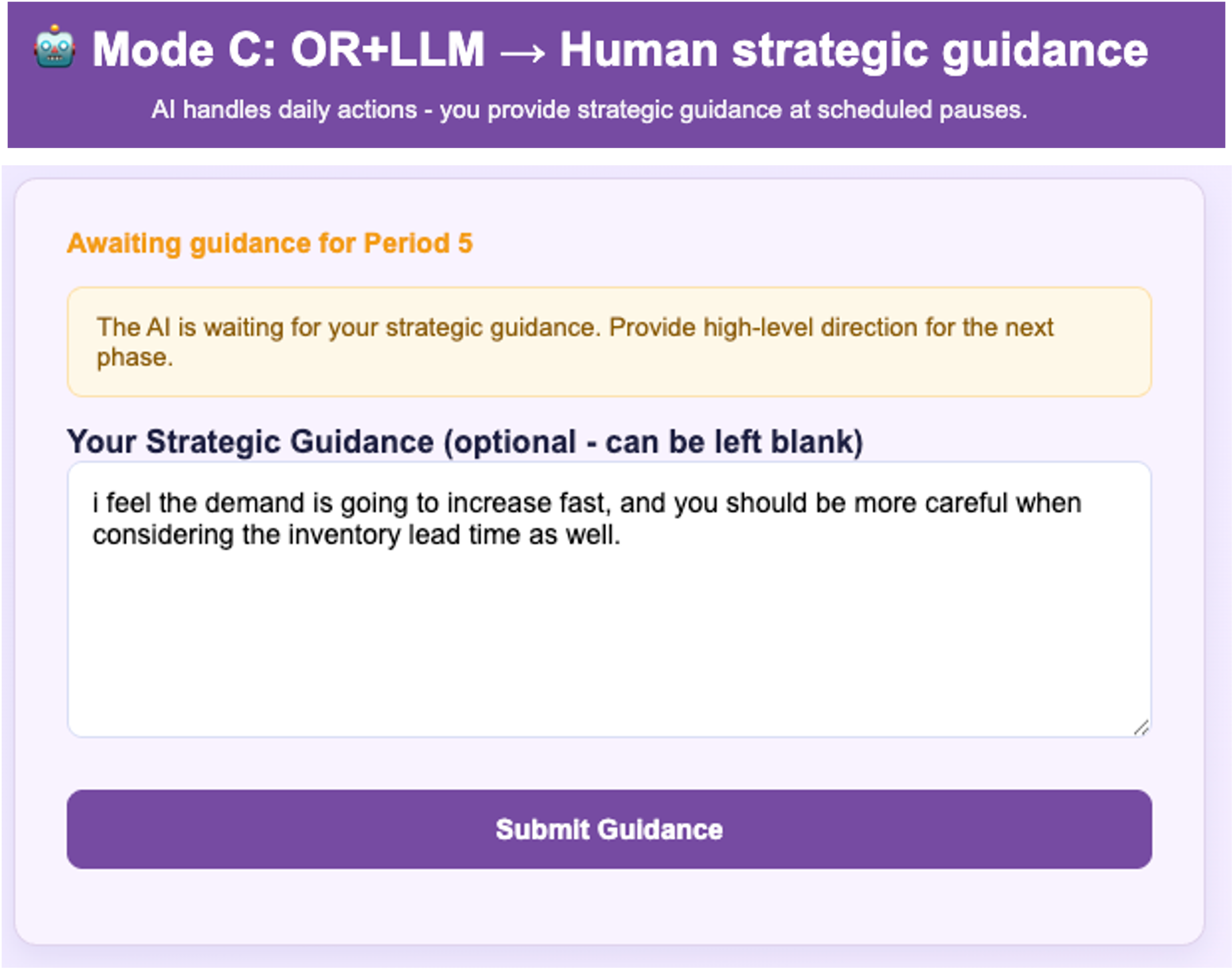

Two alternative decision-makers were compared against this baseline: a human decision-maker and a Large Language Model (LLM) agent. Both use demand forecasts as a key input to their replenishment decisions. This lets them anticipate future demand and adjust inventory levels proactively, rather than relying solely on historical data or static rules. The integration of demand forecasting aims to improve the responsiveness and efficiency of inventory management.

Both the Human Decision-Maker and the LLM Agent enhance their situational assessments by incorporating contextual reasoning and world knowledge. Contextual reasoning involves analyzing current conditions – such as promotional periods, seasonality, or supply chain disruptions – to adjust inventory decisions beyond simple demand predictions. World knowledge refers to broader, generally accepted information about products, customer behavior, and market dynamics. Together, these allow both agents to account for factors not explicitly captured in historical data or demand forecasts, potentially leading to more nuanced and more accurate inventory control than the baseline method.
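One simple way to picture how a forecast and a contextual judgment might feed into a replenishment decision is to scale the forecast over the protection period by a demand multiplier, then add a safety buffer. In this hypothetical sketch, `ContextSignal` stands in for what a human or an LLM might infer from cues such as an upcoming promotion. It is an illustration only, not the decision procedure used in the study.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class ContextSignal:
    """Hypothetical contextual judgment, e.g. 'promotion next week', as a demand multiplier."""
    description: str
    demand_multiplier: float


def forecast_order_up_to(forecast: Sequence[float], lead_time: int, safety_stock: float,
                         context: Optional[ContextSignal] = None) -> float:
    """Target inventory position: forecast demand over lead_time + 1 periods,
    optionally scaled by a contextual multiplier, plus a safety buffer."""
    periods = lead_time + 1
    if len(forecast) < periods:
        raise ValueError("forecast must cover at least lead_time + 1 periods")
    expected = sum(forecast[:periods])
    if context is not None:
        expected *= context.demand_multiplier
    return expected + safety_stock


if __name__ == "__main__":
    forecast = [10, 10, 10, 10]  # hypothetical per-period forecast
    promo = ContextSignal("promotion starts next period", demand_multiplier=1.2)
    print(forecast_order_up_to(forecast, lead_time=2, safety_stock=5))
    print(forecast_order_up_to(forecast, lead_time=2, safety_stock=5, context=promo))
```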

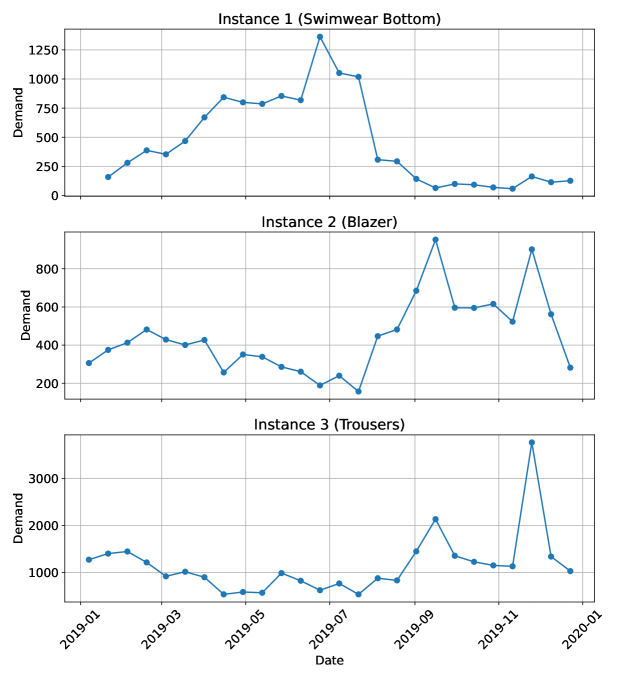

Stress-Testing Intelligence: The InventoryBench Dataset

The evaluation used the InventoryBench dataset, which comprises 1320 instances derived from real-world inventory management scenarios. The instances span a diverse range of problem sizes, demand patterns, lead times, and cost structures, so results are not biased toward any single instance type. Each instance specifies historical demand over a defined planning horizon, the associated costs (holding, ordering, and shortage), and the lead time distribution, which together form a complete simulation environment. Performance is measured consistently across all instances using metrics such as total cost, fill rate, and order quantities. This gives a standardized, objective, and reproducible basis for comparing approaches across a broad spectrum of realistic inventory control challenges.
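The sketch below shows how an instance of this kind can serve as a simulation environment. It replays a demand sequence against an arbitrary ordering policy and reports total cost and fill rate. It simplifies the setting under three assumptions: a fixed lead time (the benchmark uses lead time distributions), unmet demand treated as lost sales, and a fixed cost per order. The `simulate` function and its parameters are hypothetical and are not the InventoryBench interface.

```python
from typing import Callable, Dict, List, Sequence

# A policy sees on-hand stock and outstanding orders and returns an order quantity.
Policy = Callable[[float, List[float]], float]


def simulate(demand: Sequence[float], lead_time: int, policy: Policy,
             holding: float, ordering: float, shortage: float,
             initial_on_hand: float = 0.0) -> Dict[str, float]:
    on_hand = initial_on_hand
    pipeline: List[float] = [0.0] * lead_time  # pipeline[0] is the next order to arrive
    total_cost = 0.0
    served = 0.0
    for d in demand:
        order = max(policy(on_hand, list(pipeline)), 0.0)
        pipeline.append(order)
        on_hand += pipeline.pop(0)  # receive the order placed lead_time periods ago
        sales = min(on_hand, d)
        unmet = d - sales           # lost sales: unmet demand is not backlogged
        on_hand -= sales
        served += sales
        total_cost += holding * on_hand + (ordering if order > 0 else 0.0) + shortage * unmet
    total_demand = sum(demand)
    fill_rate = served / total_demand if total_demand > 0 else 1.0
    return {"total_cost": total_cost, "fill_rate": fill_rate}


if __name__ == "__main__":
    # Usage: an order-up-to-20 rule on a short, hypothetical demand trace.
    order_up_to_20 = lambda on_hand, pipe: max(20 - on_hand - sum(pipe), 0)
    print(simulate([8, 12, 5, 15], lead_time=1, policy=order_up_to_20,
                   holding=1.0, ordering=10.0, shortage=5.0))
```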

Quantitative analysis on the InventoryBench dataset shows that the LLM-augmented approach improves Normalized Reward by 21% relative to the `OR_Baseline`. This metric, calculated across all 1320 instances, is the total accumulated reward adjusted for instance-specific scale and complexity. The improvement is reported as statistically significant and is attributed to the integration of the Large Language Model, which indicates that the LLM can improve on the replenishment decisions of the baseline policy. Details of the Normalized Reward calculation and the significance testing are given in the methodology section of the original paper.
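The article does not spell out the normalization. The following is therefore only a hypothetical sketch of how a normalized, relative comparison like the reported 21% could be computed. Each instance's reward is divided by a positive, instance-specific reference scale so that instances of different sizes are comparable, and the candidate's mean is then compared with the baseline's. The reference scale, the sample numbers, and the function names are all assumptions, not the paper's definition.

```python
import statistics
from typing import List, Sequence


def normalize(rewards: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Hypothetical per-instance normalization by a positive reference scale."""
    if len(rewards) != len(reference):
        raise ValueError("rewards and reference must have the same length")
    return [r / ref for r, ref in zip(rewards, reference)]


def relative_improvement(candidate: Sequence[float], baseline: Sequence[float]) -> float:
    """(mean candidate - mean baseline) / |mean baseline|, e.g. 0.21 for a 21% gain."""
    base = statistics.mean(baseline)
    return (statistics.mean(candidate) - base) / abs(base)


if __name__ == "__main__":
    reference = [100.0, 400.0, 50.0]  # hypothetical instance scales
    or_rewards = [60.0, 240.0, 30.0]  # hypothetical baseline rewards
    llm_rewards = [72.0, 288.0, 36.0]  # hypothetical augmented rewards
    gain = relative_improvement(normalize(llm_rewards, reference),
                                normalize(or_rewards, reference))
    print(f"{gain:.1%}")
```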

Analysis of participants’ interactions with the LLM-augmented system showed that at least 20.3% of individuals achieved better performance as a result of human-AI collaboration. This result was statistically significant (p < 0.05), establishing complementarity between human problem-solving and the LLM’s capabilities. The benefit appeared as improved efficiency and/or accuracy on InventoryBench tasks, suggesting that the LLM meaningfully augmented human decision-making for a substantial share of participants.
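One common way to operationalize complementarity is to count a participant as benefiting when the collaborative score beats both that participant's unaided score and the AI's unaided score on the same instances. The sketch below computes that share. The per-participant score arrays and this specific criterion are assumptions for illustration and may differ from the study's statistical procedure.

```python
from typing import Sequence


def complementarity_share(human_alone: Sequence[float], ai_alone: Sequence[float],
                          collaborative: Sequence[float]) -> float:
    """Fraction of participants whose joint score exceeds both solo scores."""
    n = len(collaborative)
    if n == 0 or len(human_alone) != n or len(ai_alone) != n:
        raise ValueError("need equal-length, non-empty score sequences")
    wins = sum(1 for h, a, c in zip(human_alone, ai_alone, collaborative) if c > max(h, a))
    return wins / n


if __name__ == "__main__":
    # Three hypothetical participants (higher score is better).
    print(complementarity_share([1.0, 2.0, 3.0], [2.0, 2.0, 2.0], [3.0, 1.0, 4.0]))
```

A share computed this way is a point estimate. Reporting a lower bound such as "at least 20.3%" would also require a confidence interval or a hypothesis test.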

The Synergy of Mind and Machine: Beyond Automation

Recent research demonstrates a notable phenomenon termed the ‘Complementarity Effect,’ revealing that the synergistic combination of human expertise and large language model (LLM)-driven insights consistently yields superior outcomes compared to either approach functioning independently. This isn’t simply an additive benefit; rather, the interplay between human qualitative judgment and LLM-powered data analysis creates a more robust and effective decision-making process. The findings suggest that humans excel at incorporating nuanced contextual factors and exercising critical assessment, while LLMs rapidly process vast datasets and identify patterns often missed by traditional methods. This combined intelligence unlocks a capacity for problem-solving that transcends the limitations of individual capabilities, offering a pathway to enhanced performance across various complex domains.

The true power of combined intelligence lies in the complementary strengths of human expertise and large language models. While LLMs excel at processing vast datasets and generating data-driven recommendations with remarkable speed, they often lack the nuanced understanding of qualitative factors – considerations like geopolitical risk, supplier relationships, or evolving market sentiment. Humans, conversely, possess the critical thinking skills and contextual awareness to integrate these intangible elements into decision-making processes. This synergy allows for a more holistic and adaptive approach; the LLM provides a robust analytical foundation, and the human refines it with judgment and experience, ultimately leading to solutions that are both efficient and strategically sound.

The integration of large language models into supply chain management offers a pathway toward markedly improved operational efficiency and robustness. By combining human expertise with the analytical power of LLMs, organizations can anticipate disruptions, optimize resource allocation, and minimize costs with greater precision. This synergistic approach doesn’t simply automate existing processes; it empowers stakeholders to consider a broader range of variables – from geopolitical risks to nuanced market trends – fostering proactive decision-making. The result is a supply chain less vulnerable to unforeseen events, capable of adapting quickly to changing conditions, and ultimately, operating with greater sustainability through reduced waste and optimized resource utilization. This shift promises not only economic benefits, but also a more responsible and environmentally conscious approach to global commerce.

A recent study rigorously demonstrates the substantial benefits of integrating Large Language Models (LLMs) with traditional Operations Research (OR) heuristics. Results indicate a noteworthy 21% performance improvement when these approaches are combined, exceeding the capabilities of OR methodologies employed in isolation. This enhancement isn’t merely incremental; it suggests a synergistic effect where the LLM’s capacity for rapid data analysis and pattern identification complements the established rigor of OR techniques. The findings validate the potential of this integrated approach to optimize complex systems and unlock efficiencies previously unattainable, offering a pathway towards more robust and adaptable operational strategies.
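The following is a minimal sketch of one possible LLM-augmented OR loop. The OR policy proposes an order. An advisor, standing in for an LLM that reads contextual information, returns a multiplicative adjustment that is clipped to a safe range. A human may then override the result. `stub_advisor` is a hypothetical keyword rule used in place of a real model call, and the bounds are illustrative. This is not the architecture evaluated in the paper.

```python
from typing import Callable, Optional

# Hypothetical advisor: maps a free-text context description to a demand multiplier.
Advisor = Callable[[str], float]


def stub_advisor(context: str) -> float:
    """Stand-in for an LLM call; a real agent would prompt a model with the context."""
    return 1.3 if "promotion" in context.lower() else 1.0


def augmented_order(or_order: float, context: str, advisor: Advisor,
                    lower: float = 0.5, upper: float = 1.5,
                    human_override: Optional[float] = None) -> float:
    """Combine an OR recommendation, a bounded advisor adjustment, and optional human oversight."""
    if human_override is not None:
        return max(human_override, 0.0)  # human judgment has the final say
    factor = min(max(advisor(context), lower), upper)  # guardrail on the adjustment
    return max(or_order, 0.0) * factor


if __name__ == "__main__":
    base = 100.0  # e.g. the quantity proposed by a capped base-stock rule
    print(augmented_order(base, "Promotion planned next week", stub_advisor))
    print(augmented_order(base, "Normal week", stub_advisor, human_override=80.0))
```

Bounding the advisor's adjustment keeps the OR policy as the structural backbone. A poor LLM suggestion can then shift orders only within a controlled range.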

The pursuit of optimized inventory control, as detailed in this research, echoes a fundamental principle of system understanding: true mastery lies in deconstruction. This work showcases how Large Language Models don’t simply replace established operations research methods, but rather, illuminate their limitations and potential for synergistic improvement. As Ken Thompson famously stated, “Sometimes it’s the people who can’t read that are the most informed.” Similarly, LLMs, while not possessing human intuition, can expose hidden patterns and augment human decision-making, fostering a complementarity that surpasses either approach alone. The research validates this, demonstrating LLMs’ ability to refine OR outputs and offer novel insights – a process akin to reverse-engineering the complexities of supply chain management.

Beyond the Stockroom: Future Vectors

The demonstrated complementarity of Large Language Models, operations research, and human intuition is, predictably, not an ending but a reconfiguration. The current work illuminates a functional synergy, but sidesteps the thornier question of why this combination thrives. Is the LLM merely smoothing the edges of OR’s inherent rigidity, or is it identifying previously unquantifiable variables? The answer, one suspects, lies in the messy realm of tacit knowledge: the things humans ‘just know’ that algorithms struggle to articulate, and which the LLM, through sheer scale, begins to approximate. Future research must dissect this ‘black box’, not to eliminate the mystery, but to rigorously define the boundaries of LLM competence.

A critical limitation remains the reliance on existing inventory data. True innovation will necessitate LLMs capable of proactive, not reactive, control: predicting disruptions, anticipating demand shifts, and dynamically reconfiguring supply chains before they falter. This demands a move beyond correlative analysis towards genuine causal modeling, a far more ambitious undertaking.

Ultimately, the best hack is understanding why it worked. Every patch, every refinement of this human-LLM-OR system, is a philosophical confession of imperfection. The goal isn’t flawless inventory control, but a continually evolving understanding of the complex systems it attempts to manage. And that, after all, is where the real value resides.

Original article: https://arxiv.org/pdf/2602.12631.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-16 16:57