Author: Denis Avetisyan

A new deep learning framework analyzes evolving patterns in earthquake data to pinpoint subtle anomalies that could indicate changing seismic risk.

This review details a progressive deep learning architecture for detecting spatiotemporal b-value anomalies as a potential indicator of evolving earthquake activity.

Predicting large earthquakes remains a persistent challenge despite decades of research into precursory signals. This is addressed in ‘Detecting Spatiotemporal b-Value Anomalies with a Progressive Deep Learning Architecture’, which introduces a novel methodological framework for identifying subtle changes in seismicity patterns using gridded b-values. The authors demonstrate a hybrid deep-learning architecture, trained with a progressive, time-forward strategy, capable of classifying spatiotemporal blocks of b-value data and potentially flagging anomalous seismic states. Could this approach, focused on dynamic pattern recognition, offer a pathway towards improved earthquake early warning systems and a deeper understanding of earthquake physics?

Unearthing the Whispers Before the Rupture

Earthquake prediction has historically relied on established statistical relationships such as the Gutenberg-Richter law, which describes the frequency-magnitude distribution of earthquakes and implicitly treats seismicity as following stable, predictable patterns. However, growing evidence suggests that significant earthquakes do not always fit neatly within these norms: subtle anomalies, such as deviations in seismic activity, unusual foreshock patterns, or even changes in groundwater levels, can sometimes precede major ruptures. These precursors, though often faint and complex, are a critical area of study, because recognizing them could improve forecasting. The challenge lies in separating such signals from the constant ‘noise’ of natural tectonic processes and determining whether a given anomaly truly indicates an impending earthquake or is simply a random fluctuation within the Earth’s dynamic system.
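
For reference, the Gutenberg-Richter law is usually written as follows, where $N(\geq M)$ is the number of events with magnitude at least $M$, $a$ reflects overall seismic productivity, and $b$ (the b-value) sets how quickly event counts fall off with magnitude:

$$
\log_{10} N(\geq M) = a - bM
$$

As a worked example with $b = 1$ (a typical regional value) and a hypothetical $a = 5$: $N(\geq 4) = 10^{5-4} = 10$ events and $N(\geq 5) = 10^{5-5} = 1$ event, so each unit increase in magnitude cuts the count tenfold. A lower b-value means relatively more large events compared with small ones.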

The search for earthquake precursors requires analyzing immense spatiotemporal datasets spanning years of seismic records, ground deformation measurements, and even subtle shifts in electromagnetic fields. The volume and complexity of these data quickly overwhelm traditional analytical techniques. Processing them effectively requires not only substantial computing power but also algorithms capable of extracting meaningful signals from background geological variability. Researchers are increasingly turning to machine learning and advanced statistical modeling to handle this data deluge, seeking patterns that might otherwise remain hidden in the complex interplay of forces beneath the Earth’s surface. Making real-time anomaly detection practical at this scale will also require distributed computing and efficient data storage.

Separating genuine warning signs from background tectonic activity remains the central obstacle to earthquake prediction. Current anomaly detection methods often flag fluctuations in seismic waves, ground deformation, or electromagnetic fields, but many of these signals turn out to be false alarms, because random variations in the Earth’s complex systems can mimic the patterns expected before a major rupture. A high false-positive rate overwhelms researchers and erodes public trust in earthquake early warning systems, so more sophisticated techniques are needed that filter out misleading indicators and reliably identify true precursors to seismic events.

Deconstructing the Signal: A Deep Learning Framework

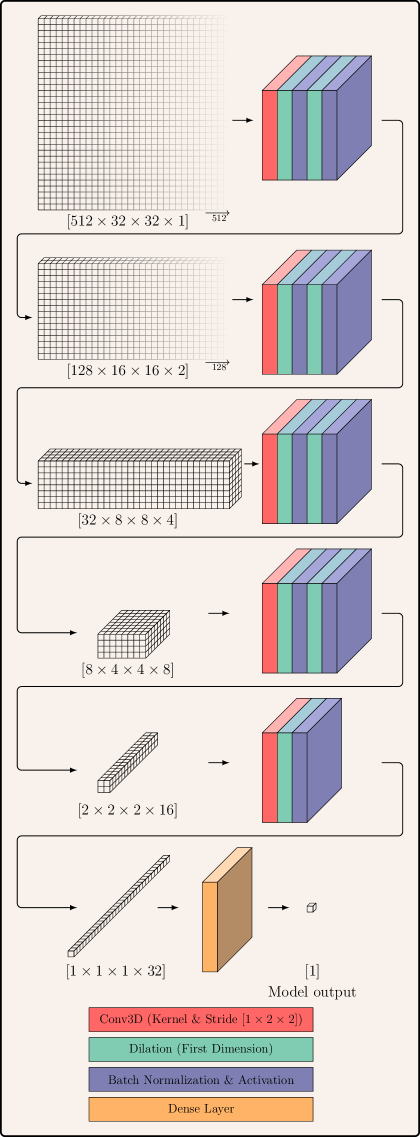

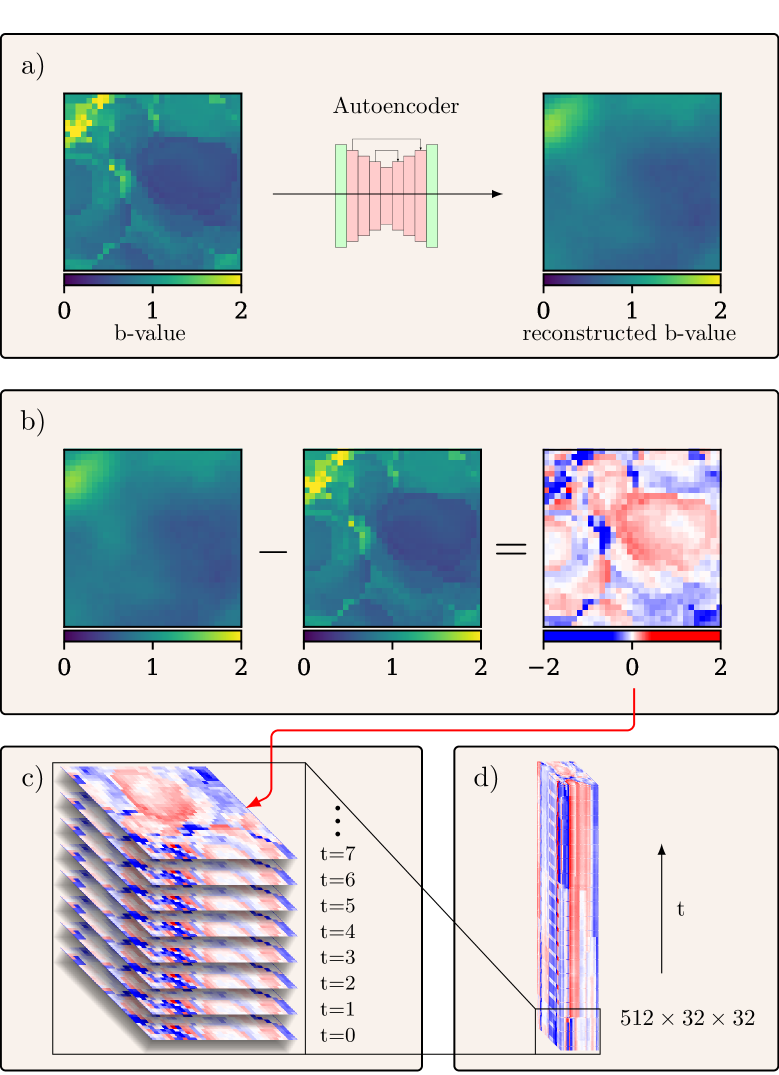

The proposed deep learning framework analyzes spatiotemporal data derived from earthquake catalogs, focusing on variations in the b-value, the Gutenberg-Richter parameter that describes the relative number of small versus large earthquakes. The framework ingests earthquake locations, times, and magnitudes, treats the catalog as a spatiotemporal field, and discretizes it into spatial grid cells and time windows. A b-value is calculated for each spatiotemporal window, and these values, together with their coordinates and timestamps, serve as the primary input features for the deep learning model. The system is intended to identify deviations in the b-value that may indicate changes in stress conditions or precursory activity, enabling automated monitoring of seismically active regions.
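
The summary does not say how the b-values are estimated. A common choice is Aki's (1965) maximum-likelihood estimator, $\hat{b} = \log_{10} e / (\bar{M} - M_c)$, where $\bar{M}$ is the mean magnitude of events at or above the completeness magnitude $M_c$ (with $M_c - \Delta M/2$ in place of $M_c$ when magnitudes are binned to width $\Delta M$). The minimal sketch below assumes that estimator, rectangular longitude/latitude cells, fixed time windows, and a minimum event count per block; the function names, `mc`, and `min_events` are illustrative choices, not the authors' settings.

```python
import numpy as np


def aki_b_value(mags, mc, bin_width=0.0):
    """Maximum-likelihood b-value (Aki, 1965) for events with magnitude >= mc.

    bin_width > 0 applies Utsu's correction for magnitudes rounded to that bin size.
    Returns NaN when fewer than two events remain or the estimate is undefined.
    """
    m = np.asarray(mags, dtype=float)
    m = m[m >= mc]
    if m.size < 2:
        return float("nan")
    denom = m.mean() - (mc - bin_width / 2.0)
    if denom <= 0:
        return float("nan")
    return float(np.log10(np.e) / denom)


def gridded_b_values(lon, lat, t, mag, lon_edges, lat_edges, t_edges, mc, min_events=50):
    """Return an array of shape (n_time, n_lat, n_lon) with one b-value per block.

    Blocks with fewer than `min_events` events at or above `mc` are left as NaN.
    """
    lon, lat, t, mag = (np.asarray(a, dtype=float) for a in (lon, lat, t, mag))
    # np.digitize gives 1..len(edges)-1 for in-range values; shift to 0-based cell indices.
    # Out-of-range events get -1 or len(edges)-1 and never match a valid cell below.
    ix = np.digitize(lon, lon_edges) - 1
    iy = np.digitize(lat, lat_edges) - 1
    it = np.digitize(t, t_edges) - 1
    shape = (len(t_edges) - 1, len(lat_edges) - 1, len(lon_edges) - 1)
    b = np.full(shape, np.nan)
    complete = mag >= mc  # keep only events above the completeness magnitude
    for k in range(shape[0]):
        for j in range(shape[1]):
            for i in range(shape[2]):
                sel = complete & (it == k) & (iy == j) & (ix == i)
                if sel.sum() >= min_events:
                    b[k, j, i] = aki_b_value(mag[sel], mc)
    return b
```

Blocks with too few events stay NaN, which keeps noisy estimates out of the model input; a sensible threshold depends on the catalog.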

The Autoencoder component within the deep learning framework utilizes a neural network architecture to reduce the high dimensionality of spatiotemporal seismic data. This dimensionality reduction is achieved by learning a compressed, latent-space representation of the input data, effectively distilling key features while discarding noise and redundancy. The Autoencoder consists of an encoder network, which maps the input data to a lower-dimensional code, and a decoder network, which reconstructs the original data from this code. The difference between the input and reconstructed data is minimized during training, forcing the Autoencoder to learn the most salient features for accurate representation and subsequent analysis of seismic patterns.
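
To make the encoder/decoder idea concrete, here is a minimal linear autoencoder trained by full-batch gradient descent on the mean squared reconstruction error, using only NumPy. It is a deliberately simplified stand-in: the paper's network is a hybrid deep architecture whose layers are not described here, and using per-sample reconstruction error as an anomaly score is a common convention assumed for illustration. The class name and hyperparameters are hypothetical.

```python
import numpy as np


class LinearAutoencoder:
    """Linear autoencoder: z = (x - mu) @ W_enc, x_hat = z @ W_dec + mu."""

    def __init__(self, n_features, n_latent, seed=0):
        rng = np.random.default_rng(seed)
        # Small random weights; training grows them along high-variance directions.
        self.W_enc = rng.normal(scale=0.1, size=(n_features, n_latent))
        self.W_dec = rng.normal(scale=0.1, size=(n_latent, n_features))
        self.mu = np.zeros(n_features)

    def encode(self, X):
        return (np.asarray(X, dtype=float) - self.mu) @ self.W_enc

    def decode(self, Z):
        return Z @ self.W_dec + self.mu

    def fit(self, X, lr=0.01, epochs=2000):
        """Full-batch gradient descent on the mean squared reconstruction error."""
        X = np.asarray(X, dtype=float)
        self.mu = X.mean(axis=0)
        Xc = X - self.mu
        n = Xc.shape[0]
        for _ in range(epochs):
            Z = Xc @ self.W_enc
            R = Z @ self.W_dec - Xc  # reconstruction residual
            # Gradients of (1/n) * sum of squared residuals w.r.t. each weight matrix.
            g_dec = (2.0 / n) * Z.T @ R
            g_enc = (2.0 / n) * Xc.T @ (R @ self.W_dec.T)
            self.W_dec -= lr * g_dec
            self.W_enc -= lr * g_enc
        return self

    def reconstruction_error(self, X):
        """Per-sample mean squared error between X and its reconstruction."""
        X = np.asarray(X, dtype=float)
        return np.mean((self.decode(self.encode(X)) - X) ** 2, axis=1)
```

Inputs should be standardized before training so that a fixed learning rate stays stable. With a purely linear encoder the learned code spans the same subspace as the leading principal components, which is why deep, nonlinear layers are used to capture richer structure.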

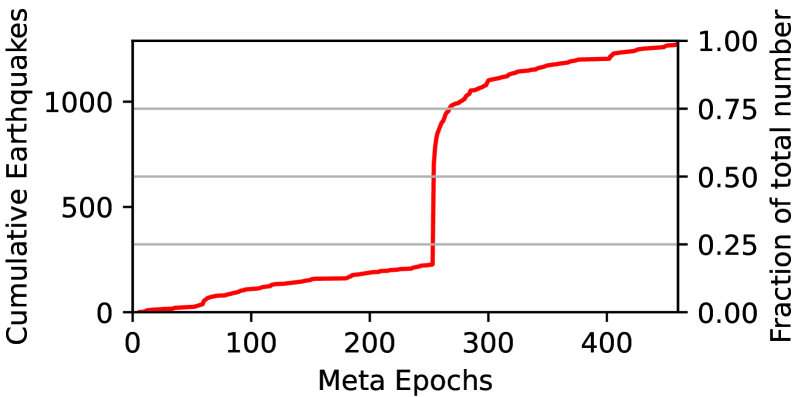

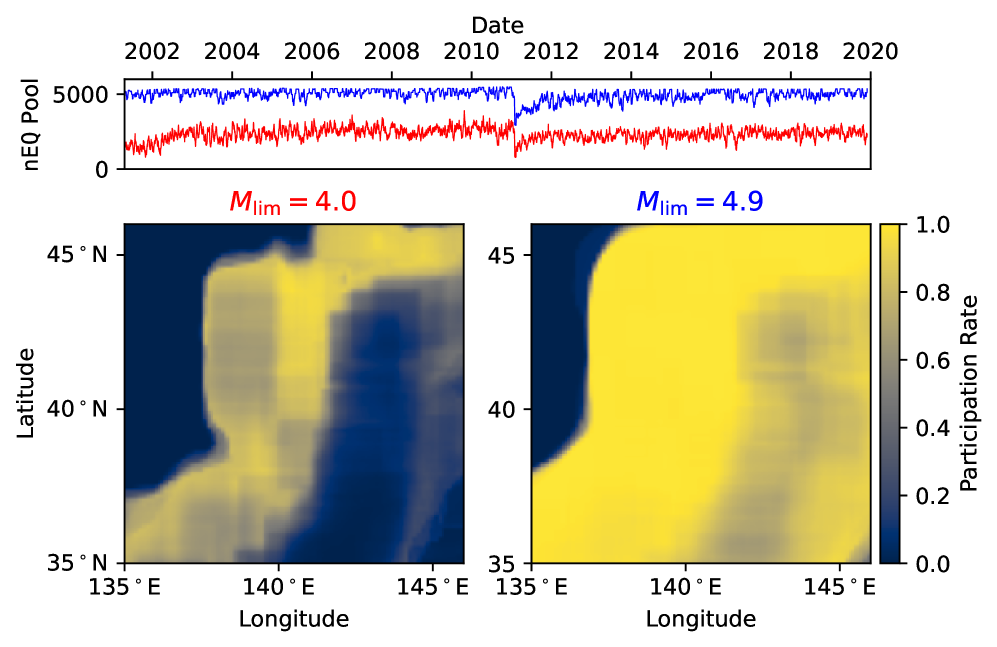

Progressive training was incorporated into the framework to address the non-stationary behavior of seismic data: the model is exposed to the data incrementally, in time order, so that it encounters evolving seismic conditions as they unfold. This approach lets the autoencoder continually refine its feature extraction and is intended to improve anomaly detection performance over time. The framework reached a training accuracy of 70.52% on the provided dataset, which the authors take as evidence that it adapts to the dynamic nature of seismic activity.
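
The time-forward idea can be expressed as an expanding-window schedule over chronologically ordered blocks: train on everything up to a cutoff, evaluate on the blocks immediately after it, then move the cutoff forward. This sketch only generates the index schedule; `progressive_splits`, `initial`, and `step` are hypothetical names, and the paper's exact schedule (window sizes, whether each stage warm-starts from the previous one) is not given in the summary.

```python
from typing import Iterator, List, Tuple


def progressive_splits(
    n_blocks: int, initial: int = 3, step: int = 1
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, eval_idx) pairs for a time-forward, expanding-window schedule.

    Blocks are assumed to be indexed in chronological order. Each stage trains on every
    block seen so far and evaluates on the next `step` blocks, so the model never
    trains on data from later than the blocks it is evaluated on.
    """
    if initial < 1 or step < 1:
        raise ValueError("initial and step must be positive")
    end = initial
    while end < n_blocks:
        yield list(range(end)), list(range(end, min(end + step, n_blocks)))
        end += step


if __name__ == "__main__":
    for stage, (train_idx, eval_idx) in enumerate(progressive_splits(6, initial=3)):
        # A real pipeline would continue training the same model (warm start) here,
        # then score the held-out future blocks before moving the cutoff forward.
        print(f"stage {stage}: train on {train_idx}, evaluate on {eval_idx}")
```

An expanding window keeps all past data in view; a sliding window (dropping the oldest blocks) would be the alternative if older seismicity is judged no longer representative.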

Quantifying the Predictive Edge: Performance Metrics

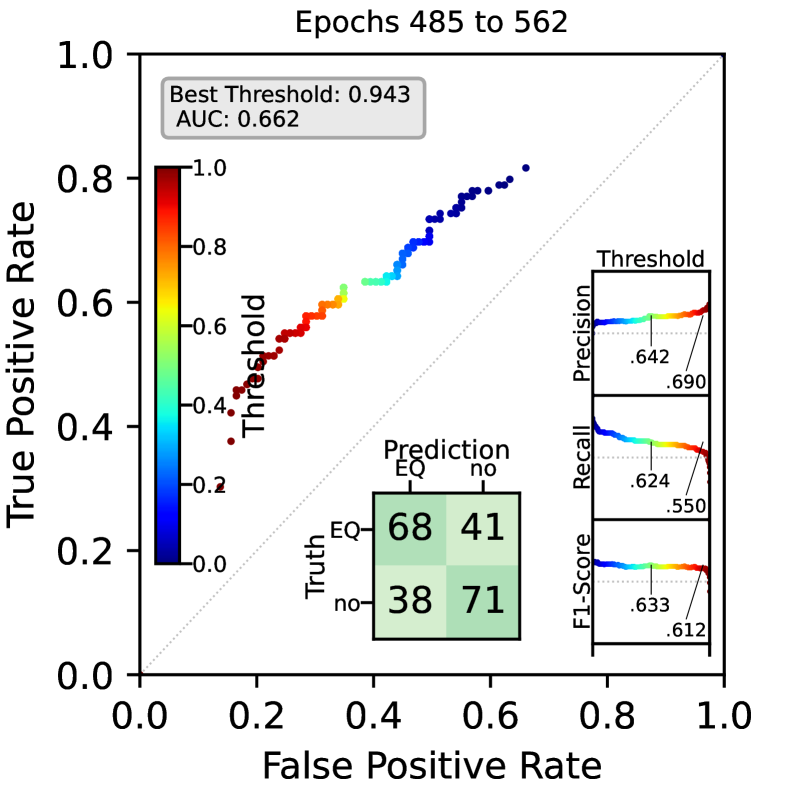

Anomaly detection performance is evaluated quantitatively using standard metrics: precision, recall, and F1-score, where $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives. Precision, $\frac{TP}{TP + FP}$, is the proportion of instances flagged as anomalous that are truly anomalous. Recall, $\frac{TP}{TP + FN}$, is the proportion of actual anomalies that the model detects. The F1-score, the harmonic mean of precision and recall, $2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$, condenses both into a single balanced measure, which is particularly useful for imbalanced datasets in which anomalies are rare and plain accuracy can be misleading.
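
A minimal sketch of these metrics in plain Python, assuming binary labels with 1 marking an anomalous block. Returning 0.0 when a denominator is zero is a convention chosen here, not something the summary specifies; the function names are illustrative.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels (1 = anomalous, 0 = normal)."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t and p:
            tp += 1
        elif p:  # predicted anomalous, actually normal
            fp += 1
        elif t:  # actually anomalous, missed
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    """Precision, recall, F1, and accuracy; 0/0 is reported as 0.0."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}
```

For example, with 5 anomalies among 100 blocks, a model that never flags anything scores 0.95 accuracy but 0.0 recall and F1, which is why accuracy alone says little about anomaly detection.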

A Receiver Operating Characteristic (ROC) curve shows the performance of a binary classifier across all decision thresholds. It plots the True Positive Rate (TPR, also called sensitivity or recall) against the False Positive Rate (FPR, equal to 1 - specificity). The TPR is the proportion of actual positive cases correctly identified, while the FPR is the proportion of actual negative cases incorrectly flagged as positive. An ideal model's curve passes through the top-left corner of the plot (100% TPR at 0% FPR), which is rarely achieved in practice. The Area Under the Curve (AUC) summarizes the curve as a single number: 1.0 indicates perfect separation, 0.5 corresponds to random guessing, and a higher AUC indicates better discriminatory ability.
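
The ROC curve and its AUC can be computed directly from per-sample anomaly scores by sweeping the threshold over every distinct score. A minimal NumPy sketch follows; the function names are illustrative, and libraries such as scikit-learn provide equivalent, more complete functions.

```python
import numpy as np


def roc_curve_points(y_true, scores):
    """Return (fpr, tpr) arrays, sweeping the threshold over every distinct score.

    A sample is predicted anomalous when its score >= threshold.
    Both classes must be present in y_true.
    """
    y = np.asarray(y_true, dtype=bool)
    s = np.asarray(scores, dtype=float)
    n_pos, n_neg = y.sum(), (~y).sum()
    if n_pos == 0 or n_neg == 0:
        raise ValueError("y_true must contain both classes")
    fpr, tpr = [0.0], [0.0]  # threshold above every score: nothing flagged
    for threshold in np.unique(s)[::-1]:  # strictest to most permissive
        pred = s >= threshold
        tpr.append((pred & y).sum() / n_pos)
        fpr.append((pred & ~y).sum() / n_neg)
    return np.array(fpr), np.array(tpr)


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))
```

Tied scores produce diagonal segments, which the trapezoidal rule credits with half a correct ranking, matching the usual AUC definition as the probability that a random positive outscores a random negative.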

Testing the anomaly detection framework on a designated test dataset yielded accuracies of 81.41% and 90.38%, indicating that the framework correctly classifies most of the test data. Comparative analysis shows better performance than Model 4.0, which the authors interpret as improved anomaly detection with fewer false positives and false negatives during testing.

Beyond Prediction: Mapping the Shifting Earth

The framework was applied to data from the Japan study region, a seismically active zone with an extensive geological and seismological record. This application exercised the system’s ability to ingest, process, and interpret complex data streams (including historical seismic activity, geological formations, and real-time sensor readings), demonstrating its functionality beyond theoretical simulations. Successful processing of data from this region supports the framework’s robustness and scalability and indicates its potential for operational settings where continuous monitoring and analysis of geophysical data are critical for hazard assessment and mitigation.

Continuous monitoring of b-value variations offers a promising avenue for proactive seismic risk assessment. The system does not predict earthquakes; rather, it identifies changes in the b-value that may reflect increasing stress in the crust (lower b-values are commonly associated with higher differential stress), potentially signaling areas where the likelihood of seismic activity is elevated. By establishing a baseline of b-values and tracking deviations from it, researchers can pinpoint locations that may be accumulating strain, effectively creating a dynamic map of relative seismic hazard. While not a standalone predictor, this information, when integrated with other geophysical data and historical seismicity, provides useful context for refining risk models and could help improve earthquake early warning systems, contributing to better-informed preparedness and mitigation strategies.
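
The baseline-and-deviation idea can be illustrated with a simple statistical rule: compare each cell's current b-value with the mean and standard deviation of its own history and flag large drops. This is not the paper's deep learning classifier but a hypothetical baseline (`b_value_drop_flags`, `k`, and `min_baseline` are illustrative), and flagging only drops is an assumption that reflects the common association of low b-values with high stress.

```python
import warnings

import numpy as np


def b_value_drop_flags(b_history, b_current, k=2.0, min_baseline=5):
    """Flag grid cells whose current b-value falls well below their own baseline.

    b_history: array (n_windows, ny, nx) of past gridded b-values, NaN where undefined.
    b_current: array (ny, nx) for the window being monitored.
    Returns (z, flags): z-scores relative to each cell's baseline, and a boolean mask of
    cells with at least `min_baseline` valid baseline values and z < -k.
    """
    hist = np.asarray(b_history, dtype=float)
    cur = np.asarray(b_current, dtype=float)
    n_valid = np.sum(~np.isnan(hist), axis=0)
    with warnings.catch_warnings():
        # Cells with zero or one valid baseline value yield NaN here; they are masked below.
        warnings.simplefilter("ignore", category=RuntimeWarning)
        mean = np.nanmean(hist, axis=0)
        std = np.nanstd(hist, axis=0, ddof=1)
        z = (cur - mean) / std
    # Non-finite z (undefined baseline or zero spread) is never flagged.
    flags = (n_valid >= min_baseline) & np.isfinite(z) & (z < -k)
    return z, flags
```

Because each cell is judged against its own history, regions with naturally low b-values are not flagged merely for being low; only a departure from their usual level counts.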

The researchers anticipate that the framework could become a valuable component of earthquake early warning systems, potentially improving their accuracy and speed by incorporating real-time b-value monitoring. Beyond seismology, the approach may transfer to other geophysical fields: investigations are planned to assess its efficacy in tracking volcanic activity, monitoring ground deformation related to landslides, and characterizing subtle changes in geomagnetic fields, which would demonstrate broader utility for understanding dynamic Earth processes and improving hazard assessment across disciplines.

The pursuit of subtle shifts within complex systems finds a compelling echo in this work. By focusing on spatiotemporal anomalies in earthquake occurrence through b-value variations, the research embodies a willingness to challenge established norms in data analysis. As Sergey Sobolev put it, “The most interesting discoveries often lie at the boundaries of what is considered possible.” The sentiment fits the approach detailed in the article: a progressive deep learning architecture designed to detect what was previously undetectable. By adapting to evolving seismic data, the framework does not simply observe the system; it probes its limits, seeking patterns at the periphery of current understanding.

Pushing the Boundaries

The presented architecture, while able to discern anomalies in b-value fluctuations, only scratches the surface of what constitutes ‘normal’ seismic behavior. The progressive learning strategy, intended to cope with evolving data distributions, implicitly acknowledges the inherent non-stationarity of the Earth’s crust, a concession that demands further scrutiny. One must ask: is the system truly learning to identify anomalies, or merely becoming adept at predicting the next expected variation within a chaotic system? The distinction is critical, and currently blurred.

Future work should not focus solely on refining predictive accuracy, but on actively stressing the system. Introduce synthetic, deliberately misleading data (injected ‘false positives’ and ‘false negatives’) to rigorously test the decision boundaries. Explore the integration of physics-based constraints, such as fault mechanics and stress transfer functions, not as inputs but as adversarial components designed to challenge the network’s assumptions. If the model cannot be fooled, that suggests genuine understanding rather than mere pattern recognition.
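
One simple way to start on the first suggestion is an injection test: plant a synthetic b-value drop in a known cell, then check whether a detector flags it and how many other cells it flags. This is an illustrative sketch only; `injection_test`, the `drop` size, and the detector interface (a callable from a b-value grid to a boolean mask) are hypothetical and not taken from the paper.

```python
import numpy as np


def injection_test(detector, base_grid, n_trials=100, drop=0.3, seed=0):
    """Plant a synthetic b-value drop in one random cell per trial and score a detector.

    detector: callable mapping a (ny, nx) b-value grid to a boolean (ny, nx) mask.
    Returns (hit_rate, false_alarms_per_trial).
    """
    rng = np.random.default_rng(seed)
    base = np.asarray(base_grid, dtype=float)
    hits, false_alarms = 0, 0
    for _ in range(n_trials):
        grid = base.copy()
        j = rng.integers(base.shape[0])
        i = rng.integers(base.shape[1])
        grid[j, i] -= drop  # the planted anomaly
        mask = np.asarray(detector(grid), dtype=bool)
        hit = bool(mask[j, i])
        hits += int(hit)
        false_alarms += int(mask.sum()) - int(hit)  # flags anywhere else in the grid
    return hits / n_trials, false_alarms / n_trials
```

Sweeping `drop` from small to large traces how sensitive a detector is; repeating the test with decoys (changes that should not be flagged) would probe false alarms more directly.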

Ultimately, the true test lies in its failure modes. A robust system doesn’t simply succeed in identifying known anomalies; it anticipates unforeseen ones, and flags its own uncertainty. The goal isn’t to build a perfect predictor of earthquakes, but a system that illuminates the limits of predictability itself. Only then can one claim a degree of understanding, however incomplete, of the forces at play beneath our feet.

Original article: https://arxiv.org/pdf/2602.12408.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 06:54