Author: Denis Avetisyan

A comprehensive review reveals how incorporating Riemannian geometry is reshaping graph neural networks and unlocking more powerful graph representation learning.

This article surveys the theoretical foundations, architectures, and applications of Riemannian graph learning, highlighting its potential for building more robust and expressive foundation models.

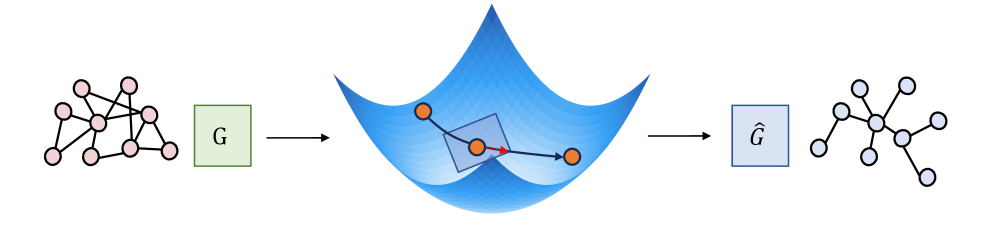

Despite the growing success of graph neural networks, capturing the intrinsic geometric properties of complex graph structures remains a significant challenge. The work ‘RiemannGL: Riemannian Geometry Changes Graph Deep Learning’ argues for a principled foundation for graph representation learning through Riemannian geometry, positioning it not as a niche technique, but as a unifying paradigm. This paper provides a comprehensive survey of this emerging field, outlining key architectural approaches, learning paradigms, and theoretical underpinnings for leveraging manifold structure in graph data. Can a deeper integration of geometric principles unlock more expressive and robust graph representations, ultimately driving the next generation of graph learning models?

Beyond Flatland: The Limits of Euclidean Thinking

Many established graph analysis techniques depend on embedding nodes as vectors in Euclidean space, a familiar, flat geometric representation. However, real-world networks are rarely so simple. Complex systems, from social networks to biological pathways, often have hierarchical, tree-like, or cyclic structure whose geometry is lost when flattened into this format. In a tree, for example, the number of nodes within k hops of the root grows exponentially with k, while the volume of a Euclidean ball grows only polynomially with its radius, so a flat embedding must crowd distant branches together. This process, akin to projecting a globe onto a map, inevitably introduces distortions and discards information about the relationships between nodes. Consequently, standard methods can struggle to represent and analyze these networks accurately, particularly for phenomena like community structure, cascading failures, or the influence of distant connections. These limitations of Euclidean embeddings highlight the need for approaches that preserve the inherent geometry of networks.
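The sketch below makes the crowding argument concrete. It is our illustration, not code from the paper. It compares how fast the number of nodes at depth r in a complete tree grows against the circumference of a circle of radius r in two spaces: the Euclidean plane (2πr) and the hyperbolic plane of curvature -1 (2π sinh(r)). The branching factor of 3 is an arbitrary choice.

```python
import math


def tree_level_size(branching: int, depth: int) -> int:
    """Number of nodes at a given depth of a complete tree."""
    return branching ** depth


def euclidean_circumference(r: float) -> float:
    """Circumference of a circle of radius r in the flat plane."""
    return 2 * math.pi * r


def hyperbolic_circumference(r: float) -> float:
    """Circumference of a circle of radius r in the hyperbolic plane (curvature -1)."""
    return 2 * math.pi * math.sinh(r)


# Room available at radius r versus the number of tree nodes at depth r.
for r in range(1, 9):
    print(
        f"r={r}  tree(b=3)={tree_level_size(3, r):>5}  "
        f"euclid={euclidean_circumference(r):7.1f}  "
        f"hyperbolic={hyperbolic_circumference(r):9.1f}"
    )
```

The tree levels and the hyperbolic circumference both grow exponentially (sinh(r) is roughly e^r / 2 for large r), while the Euclidean circumference grows only linearly. This is the intuition for why hierarchies can be embedded with less distortion in hyperbolic space.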

The practical consequences of relying on Euclidean embeddings extend to applications that depend on the underlying network geometry. Anomaly detection, for instance, often requires identifying nodes that deviate from established patterns in a complex, potentially curved space. A flat representation can obscure these differences, leading to false negatives or false positives. Similarly, recommendation systems frequently use geometric proximity to suggest relevant items. When distortion misrepresents those relationships, recommendations can become less relevant. The inability to discern these geometric structures directly limits the precision of such algorithms, underscoring the need for methods that faithfully represent the intrinsic geometry of complex networks.

Representing complex networks in a flat, Euclidean space therefore often loses relational information. By forcing rich, many-sided connections into a flat vector space, traditional methods can fail to capture the geometry needed for accurate analysis. This simplification reduces the ability to discern subtle patterns and dependencies, leading to suboptimal performance in tasks like predictive modeling and community detection. Interpretations derived from these flattened representations may also lack fidelity, obscuring the true structure of the network and the relationships between its elements. The lost information affects not only the accuracy of analytical outcomes but also their interpretability, making it harder to draw confident conclusions from the data.

Curvature and Connection: A Riemannian Foundation for Graph Learning

Riemannian manifolds provide a geometric framework for analyzing graph data by embedding nodes as points in a curved space rather than in flat Euclidean space. This lets the representation reflect the non-Euclidean relationships inherent in many graphs, where shortest paths need not correspond to straight lines in an ambient space. The core ingredient is a Riemannian metric. This is a smoothly varying inner product, given by a metric tensor at every point of the manifold, that determines the local geometry and from which lengths, distances, and curvature are computed. By working with the intrinsic geometry defined by this metric, graph representations can better capture the structural properties of the underlying data. This can improve performance in tasks such as node classification, link prediction, and graph clustering. It is particularly useful for graphs with hierarchical or tree-like structure, where Euclidean embeddings often fail to preserve crucial topological information.
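As a concrete instance of a metric tensor determining distance, the sketch below uses the Poincaré disk model of the hyperbolic plane. Its metric tensor at a point x is G(x) = λ(x)² I, with conformal factor λ(x) = 2 / (1 - ‖x‖²). The code approximates the length of a curve by summing √(dxᵀ G(x) dx) over small segments. The helper names are our own, and the example is illustrative rather than taken from the paper.

```python
import numpy as np


def poincare_metric(x: np.ndarray) -> np.ndarray:
    # Metric tensor of the Poincare disk (curvature -1):
    # G(x) = lambda(x)^2 * I, with conformal factor lambda(x) = 2 / (1 - |x|^2).
    lam = 2.0 / (1.0 - np.dot(x, x))
    return lam ** 2 * np.eye(len(x))


def euclidean_metric(x: np.ndarray) -> np.ndarray:
    # The flat metric is the identity everywhere.
    return np.eye(len(x))


def curve_length(curve, metric, n_steps: int = 10_000) -> float:
    # Sample the curve c(t) for t in [0, 1] and sum sqrt(dx^T G(mid) dx)
    # over consecutive segments, evaluating G at each segment midpoint.
    ts = np.linspace(0.0, 1.0, n_steps + 1)
    pts = np.array([curve(t) for t in ts])
    total = 0.0
    for a, b in zip(pts[:-1], pts[1:]):
        dx = b - a
        total += np.sqrt(dx @ metric(0.5 * (a + b)) @ dx)
    return float(total)


def radial(t: float) -> np.ndarray:
    # Straight segment from the origin to (0.9, 0); a geodesic of the disk.
    return np.array([0.9 * t, 0.0])


print(curve_length(radial, euclidean_metric))  # close to 0.9
print(curve_length(radial, poincare_metric))   # close to 2.944
```

The same segment has Euclidean length 0.9. Because radial lines are geodesics of the disk, its hyperbolic length matches the closed-form distance 2·artanh(0.9) = ln 19 ≈ 2.944.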

Choosing a geometric space for graph data amounts to choosing a Riemannian metric, which dictates how distances are measured. Different manifolds suit different data characteristics:

- Hyperbolic space (constant negative curvature) excels at representing hierarchical structures and capturing long-range dependencies.
- Spherical space (constant positive curvature) is effective for data with cyclic structure or rotational symmetries.
- The Grassmann manifold, the space of linear subspaces of a fixed dimension, suits data where relationships are defined by the spans of vectors, such as sets of feature vectors or principal components.

The choice of manifold directly influences which intrinsic data properties survive embedding and subsequent analysis. For instance, hyperbolic space may be preferable to Euclidean space for social networks with hierarchical community structure.
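The sketch below puts the three most common model spaces side by side, using their standard closed-form distances:

- Euclidean distance.
- Great-circle distance on the unit sphere (curvature +1).
- Geodesic distance in the Poincaré ball (curvature -1).

It is a minimal illustration with hypothetical helper names. The Grassmann manifold is omitted for brevity.

```python
import numpy as np


def euclidean_distance(x: np.ndarray, y: np.ndarray) -> float:
    return float(np.linalg.norm(x - y))


def spherical_distance(x: np.ndarray, y: np.ndarray) -> float:
    # Great-circle distance on the unit sphere; x and y must be unit vectors.
    return float(np.arccos(np.clip(np.dot(x, y), -1.0, 1.0)))


def poincare_distance(x: np.ndarray, y: np.ndarray) -> float:
    # Geodesic distance in the Poincare ball of curvature -1; requires |x|, |y| < 1.
    sq_diff = np.dot(x - y, x - y)
    denom = (1.0 - np.dot(x, x)) * (1.0 - np.dot(y, y))
    return float(np.arccosh(1.0 + 2.0 * sq_diff / denom))


origin = np.zeros(2)
a, b = np.array([0.9, 0.0]), np.array([0.0, 0.9])  # two "leaves" near the boundary
print(euclidean_distance(a, b))                                      # about 1.27
print(poincare_distance(a, b))                                       # about 5.20
print(poincare_distance(a, origin) + poincare_distance(origin, b))  # about 5.89
```

In the plane, the two points are only about 1.27 apart. In the ball, the geodesic between them bends toward the origin, and its length comes close to that of the path through the "root". This mirrors how the tree distance between two leaves runs through their common ancestor.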

Euclidean embeddings, while computationally efficient, can distort the intrinsic geometry of graph data, losing structural information such as shortest-path distances and neighborhood relationships. Representing graph data on Riemannian manifolds, specifically ones chosen to match the data's characteristics, allows algorithms to retain these structural properties. For instance, geodesic distances on a suitable manifold can reflect relationships between nodes more accurately than Euclidean distances, which can improve node classification, link prediction, and graph clustering. Preserving this geometric information is particularly beneficial for non-Euclidean data, enabling more robust and accurate graph analysis than methods restricted to Euclidean space.

From VAEs to ODEs: Tools for Mapping Curved Networks

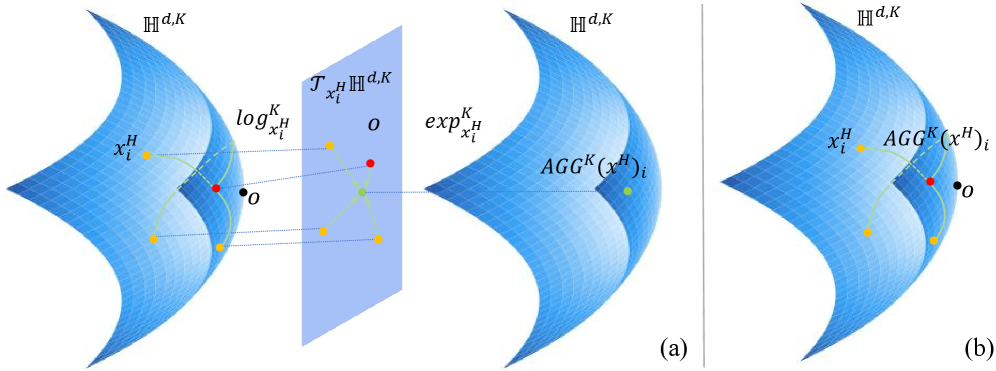

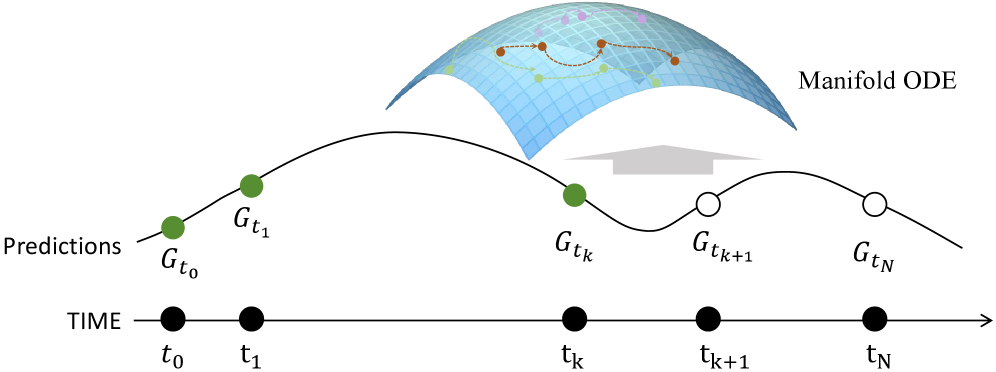

Established deep learning architectures are being adapted to graph data represented on Riemannian manifolds:

- RiemannianVAE modifies the variational autoencoder to operate on Riemannian data, allowing non-Euclidean latent spaces.
- RiemannianGCN extends the graph convolutional network by incorporating Riemannian geometry into its convolution operations, so it can process graphs with intrinsic curvature.
- RiemannianODE uses ordinary differential equations to model how graph representations evolve on a Riemannian manifold. This supports continuous graph embeddings for tasks that require a geometric understanding of graph structure.

These adaptations aim to capture the underlying geometry of graph data more effectively than Euclidean methods, and thereby improve representation learning.
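One common way to build a hyperbolic graph convolution follows the tangent-space recipe used by models such as HGCN. Node embeddings are mapped from the Poincaré ball to the tangent space at the origin with the logarithmic map, an ordinary GCN step is applied there, and the result is mapped back with the exponential map. The NumPy sketch below shows that recipe under simplifying assumptions: curvature fixed at -1, a single layer, no bias, and aggregation at the origin only. It illustrates the general idea and is not the specific RiemannianGCN formulation surveyed in the paper. All names are hypothetical.

```python
import numpy as np

EPS = 1e-9


def expmap0(v: np.ndarray) -> np.ndarray:
    # Exponential map at the origin of the Poincare ball (curvature -1):
    # exp_0(v) = tanh(|v|) * v / |v|, applied row-wise.
    norm = np.linalg.norm(v, axis=-1, keepdims=True)
    return np.tanh(norm) * v / np.maximum(norm, EPS)


def logmap0(x: np.ndarray) -> np.ndarray:
    # Logarithmic map at the origin (inverse of expmap0):
    # log_0(x) = artanh(|x|) * x / |x|, with |x| clipped just below 1.
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    return np.arctanh(np.minimum(norm, 1.0 - 1e-7)) * x / np.maximum(norm, EPS)


def hyperbolic_gcn_layer(x_ball: np.ndarray, adj: np.ndarray, weight: np.ndarray) -> np.ndarray:
    # Symmetrically normalised adjacency with self-loops, as in a Euclidean GCN.
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]
    h = logmap0(x_ball)          # ball -> tangent space at the origin
    h = a_norm @ (h @ weight)    # linear transform + neighbourhood aggregation
    h = np.tanh(h)               # pointwise nonlinearity in the tangent space
    return expmap0(h)            # tangent space -> ball


rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)   # path graph on 4 nodes
x = expmap0(0.5 * rng.normal(size=(4, 3)))   # random points inside the ball
out = hyperbolic_gcn_layer(x, adj, rng.normal(size=(3, 2)))
print(out.shape, np.linalg.norm(out, axis=1).max() < 1.0)
```

Doing the linear algebra in the tangent space keeps the layer familiar, at the cost of measuring everything relative to a single base point. More faithful designs aggregate in the tangent space of each node.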

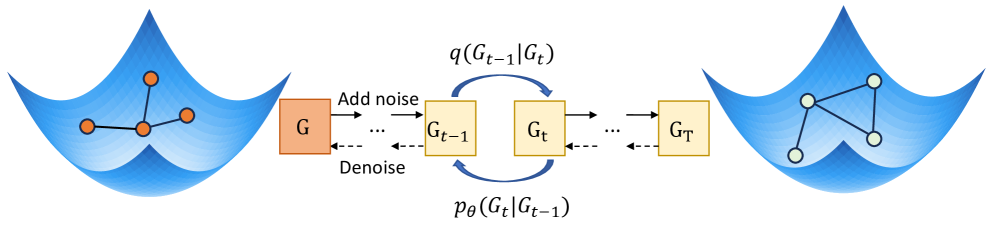

Riemannian stochastic differential equations (SDEs) provide a probabilistic approach to graph generation and analysis by defining a diffusion process on a Riemannian manifold. Unlike deterministic methods, RiemannianSDE models graph structure as a stochastic process governed by an SDE. This allows the creation of graphs with intricate geometric features and explicit uncertainty. The framework enables sampling from a probability distribution defined on a Riemannian manifold and supports the analysis of graph properties through diffusion theory. Applications include generating realistic network topologies, modeling dynamic graphs, and quantifying uncertainty in graph-based machine learning tasks.
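The simplest Riemannian SDE is Brownian motion on a manifold. The sketch below approximates it on the unit sphere with a geodesic random walk. At each step it draws Gaussian noise, projects it onto the tangent plane at the current point, and moves along the corresponding great circle via the sphere's exponential map. It only illustrates the kind of diffusion process such models build on. It deliberately leaves out the drift terms, learned score functions, and graph decoding a generative RiemannianSDE would need, and the function names are hypothetical.

```python
import numpy as np


def sphere_expmap(x: np.ndarray, v: np.ndarray) -> np.ndarray:
    # Exponential map on the unit sphere: follow the great circle from x
    # in tangent direction v for an arc length of |v|.
    norm = np.linalg.norm(v)
    if norm < 1e-12:
        return x
    return np.cos(norm) * x + np.sin(norm) * v / norm


def geodesic_random_walk(x0: np.ndarray, n_steps: int, dt: float, rng) -> np.ndarray:
    # Approximates Brownian motion on the sphere: sample Gaussian noise in the
    # ambient space, keep its tangent component at x, then step along the geodesic.
    x = x0 / np.linalg.norm(x0)
    path = [x]
    for _ in range(n_steps):
        noise = rng.normal(size=x.shape) * np.sqrt(dt)
        tangent = noise - np.dot(noise, x) * x
        x = sphere_expmap(x, tangent)
        x = x / np.linalg.norm(x)   # guard against floating-point drift
        path.append(x)
    return np.array(path)


rng = np.random.default_rng(42)
path = geodesic_random_walk(np.array([0.0, 0.0, 1.0]), n_steps=1000, dt=1e-3, rng=rng)
print(path.shape)  # (1001, 3)
```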

Riemannian graph learning exploits intrinsic geometric properties, such as curvature and geodesics, to improve performance across several graph tasks:

- In node classification, Riemannian methods yield more nuanced feature representations by accounting for relationships between nodes in the curved embedding space.
- In link prediction, they improve the accuracy of predicting missing edges by modeling edge probabilities as functions of geodesic distance and other geometric relationships.
- In graph generation, they allow the synthesis of complex, realistic networks with desired geometric characteristics. Generative models are defined on Riemannian manifolds, which gives control over the geometry of the generated graphs.
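For link prediction, one widely used way to turn geodesic distance into an edge probability is the Fermi-Dirac decoder, p(u, v) = 1 / (exp((d(u, v)² - r) / t) + 1). Hyperbolic GNNs such as HGCN pair it with Poincaré-ball distances. The sketch below assumes embeddings already live in the Poincaré ball. Here r and t are hyperparameters whose values are arbitrary, and the point coordinates are toy values.

```python
import numpy as np


def poincare_distance(x: np.ndarray, y: np.ndarray) -> float:
    # Geodesic distance in the Poincare ball of curvature -1.
    sq_diff = np.dot(x - y, x - y)
    denom = (1.0 - np.dot(x, x)) * (1.0 - np.dot(y, y))
    return float(np.arccosh(1.0 + 2.0 * sq_diff / denom))


def edge_probability(x: np.ndarray, y: np.ndarray, r: float = 2.0, t: float = 1.0) -> float:
    # Fermi-Dirac decoder: nodes closer than sqrt(r) get p > 0.5,
    # and t controls how sharply the probability falls off with distance.
    d = poincare_distance(x, y)
    return float(1.0 / (np.exp((d ** 2 - r) / t) + 1.0))


u = np.array([0.10, 0.00])
v = np.array([0.15, 0.05])
w = np.array([-0.80, 0.30])
print(edge_probability(u, v), edge_probability(u, w))
```

The nearby pair (u, v) gets a probability well above 0.5, while the distant pair (u, w) gets a probability close to zero.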

Beyond Supervision: Unlocking the Potential of Riemannian Graphs

The complexity of graph data often challenges machine learning algorithms, particularly when labeled data is scarce. Recent work suggests that integrating Riemannian geometry with unsupervised and semi-supervised learning is a promising response. By embedding graphs in Riemannian manifolds, algorithms can exploit geometric structure, such as curvature, distance, and angles, to uncover meaningful patterns without explicit labels. This moves beyond methods that rely mainly on node features, enabling the discovery of intrinsic graph properties and relationships. Algorithms can then cluster nodes, identify communities, or predict link formation from the graph's connectivity and geometric characteristics alone. This expands the potential for learning from the large amounts of unlabeled graph data in domains like social networks, biological systems, and knowledge graphs.
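Curvature can be computed directly from connectivity, with no labels at all. One simple discrete notion is the augmented Forman-Ricci curvature of an edge in an unweighted graph, F(u, v) = 4 - deg(u) - deg(v) + 3·t(u, v), where t(u, v) counts the triangles containing the edge. Edges that bridge communities tend to be strongly negative, a property that curvature-based community detection methods exploit. The sketch below is our illustration of that idea, not a method from the paper. It assumes a simple graph with no self-loops or duplicate edges.

```python
def forman_curvature(edges):
    """Augmented Forman-Ricci curvature for each edge of an undirected, unweighted graph."""
    neighbours = {}
    for u, v in edges:
        neighbours.setdefault(u, set()).add(v)
        neighbours.setdefault(v, set()).add(u)
    curvature = {}
    for u, v in edges:
        triangles = len(neighbours[u] & neighbours[v])  # common neighbours close a triangle
        curvature[(u, v)] = 4 - len(neighbours[u]) - len(neighbours[v]) + 3 * triangles
    return curvature


# Two triangles {0, 1, 2} and {3, 4, 5} joined by the bridge (2, 3).
edges = [(0, 1), (1, 2), (0, 2), (2, 3), (3, 4), (4, 5), (3, 5)]
curv = forman_curvature(edges)
print(min(curv, key=curv.get), curv)
```

The bridge (2, 3) receives curvature -2, while the triangle edges receive 2 or 3. Removing the most negatively curved edge therefore splits this graph into its two communities.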

Transfer learning in graph neural networks is enabling graph foundation models, analogous to those in natural language processing and computer vision. These models are first pre-trained on large, readily available graph datasets, such as social networks, knowledge graphs, and molecular structures, to capture general graph properties and relational patterns. The pre-trained knowledge can then be transferred and adapted to downstream tasks with limited labeled data, reducing the need for extensive task-specific training. This approach can accelerate learning and improve generalization, particularly when labeled data is scarce or expensive to obtain. The goal is models that extrapolate learned representations to novel graphs and tasks, a step toward versatile and adaptable graph learning systems.

Knowledge graph embeddings map the entities and relations of a knowledge graph into a continuous space, and they can benefit substantially from Riemannian geometry. Traditional methods embed every relation in the same flat Euclidean space, so they often fail to capture the inherent geometric structure of knowledge graphs, such as deep hierarchies. Embedding the graph in a Riemannian manifold, where distances are measured along geodesics, lets the embeddings better reflect nuanced relationships between entities. This richer geometric representation allows more accurate distance calculations and improved performance in tasks like link prediction and entity classification. The geometric structure can also aid interpretability. The learned embeddings are not merely abstract vectors but are grounded in the graph's underlying geometry, which helps researchers understand why certain predictions are made and offers insight into the knowledge itself. These geometrically informed embeddings thus offer a more powerful and transparent approach to knowledge representation and reasoning.
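The sketch below makes "distances measured along geodesics" concrete for knowledge graphs. It scores a triple (head, relation, tail) in two steps. First, it translates the head embedding by a relation vector using Möbius addition, the Poincaré-ball counterpart of vector addition. Second, it takes the negative hyperbolic distance to the tail. This is a simplified hyperbolic analogue of TransE-style translation. Published hyperbolic KG models (for example MuRP) add relation-specific transformations and biases. The embeddings here are hypothetical toy values rather than trained ones.

```python
import numpy as np


def mobius_add(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    # Mobius addition in the Poincare ball of curvature -1.
    xy, x2, y2 = np.dot(x, y), np.dot(x, x), np.dot(y, y)
    num = (1.0 + 2.0 * xy + y2) * x + (1.0 - x2) * y
    return num / (1.0 + 2.0 * xy + x2 * y2)


def poincare_distance(x: np.ndarray, y: np.ndarray) -> float:
    # Geodesic distance in the Poincare ball of curvature -1.
    sq_diff = np.dot(x - y, x - y)
    denom = (1.0 - np.dot(x, x)) * (1.0 - np.dot(y, y))
    return float(np.arccosh(1.0 + 2.0 * sq_diff / denom))


def triple_score(head: np.ndarray, relation: np.ndarray, tail: np.ndarray) -> float:
    # Higher is better: negative distance between the translated head and the tail.
    return -poincare_distance(mobius_add(head, relation), tail)


head = np.array([0.1, 0.2])
relation = np.array([0.3, -0.1])
true_tail = mobius_add(head, relation)   # a tail that fits the relation exactly
other_tail = np.array([-0.5, 0.4])
print(triple_score(head, relation, true_tail), triple_score(head, relation, other_tail))
```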

Towards a Geometric Future: Expanding the Horizon of Graph Understanding

Current Riemannian graph learning techniques, while powerful, often operate on simple geometric spaces of constant curvature. Extending these methods to richer manifolds, such as pseudo-Riemannian manifolds and product manifolds, promises a fuller understanding of interconnected data. Pseudo-Riemannian geometries, characterized by indefinite inner products, are suited to modeling systems with directional dependencies or constraints. Product manifolds combine several factor spaces, so data can live across multiple interacting geometries. By extending deep learning frameworks to these geometries, researchers can move beyond Euclidean representations and capture subtle relationships in complex systems. This could reveal hidden patterns and improve predictive accuracy in fields like network science and materials discovery.
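Two ingredients behind these richer geometries can be shown in a few lines. On a product manifold such as hyperbolic × spherical × Euclidean, a common choice of distance combines the per-factor geodesic distances in an ℓ2 fashion. A pseudo-Riemannian inner product, here the Minkowski one with signature (-, +, ..., +), is indefinite, so a nonzero vector can have a negative or zero "squared norm". The factor choices and function names below are assumptions made for illustration.

```python
import numpy as np


def poincare_distance(x: np.ndarray, y: np.ndarray) -> float:
    # Geodesic distance in the Poincare ball of curvature -1.
    sq_diff = np.dot(x - y, x - y)
    denom = (1.0 - np.dot(x, x)) * (1.0 - np.dot(y, y))
    return float(np.arccosh(1.0 + 2.0 * sq_diff / denom))


def spherical_distance(x: np.ndarray, y: np.ndarray) -> float:
    # Great-circle distance on the unit sphere.
    return float(np.arccos(np.clip(np.dot(x, y), -1.0, 1.0)))


def product_distance(p, q) -> float:
    # p and q are (hyperbolic, spherical, euclidean) component tuples; the
    # product distance is the l2 norm of the per-factor geodesic distances.
    d_h = poincare_distance(p[0], q[0])
    d_s = spherical_distance(p[1], q[1])
    d_e = float(np.linalg.norm(p[2] - q[2]))
    return float(np.sqrt(d_h ** 2 + d_s ** 2 + d_e ** 2))


def minkowski_inner(x: np.ndarray, y: np.ndarray) -> float:
    # Indefinite inner product with signature (-, +, ..., +).
    return float(-x[0] * y[0] + np.dot(x[1:], y[1:]))


p = (np.zeros(2), np.array([1.0, 0.0, 0.0]), np.zeros(2))
q = (np.zeros(2), np.array([0.0, 1.0, 0.0]), np.array([3.0, 4.0]))
print(product_distance(p, q))                                        # about 5.24
print(minkowski_inner(np.array([1.0, 0.0]), np.array([1.0, 0.0])))  # -1.0
print(minkowski_inner(np.array([1.0, 1.0]), np.array([1.0, 1.0])))  # 0.0
```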

The convergence of graph signal processing (GSP) with Riemannian geometry offers a framework for discerning intricate patterns within complex networks. GSP analyzes signals defined on graph nodes, using the graph's connectivity to define operations like convolution and filtering. Coupled with Riemannian geometry, which studies curved spaces, this approach goes beyond the Euclidean assumptions common in standard graph analysis. The integration enables extracting features that capture not only the network's topology but also its intrinsic geometric properties, such as curvature and geodesics. Researchers can then move beyond simple node-level analysis to understand how signals propagate across curved network landscapes. This supports community detection, anomaly identification, and predictive modeling, and ultimately a more nuanced understanding of complex systems.
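The GSP side of this combination rests on the graph Fourier transform. The eigenvectors of the graph Laplacian act as a frequency basis, and filtering means reweighting a signal's coefficients in that basis. The sketch below implements a plain low-pass filter using the Euclidean, combinatorial Laplacian. It does not include any Riemannian component, and the function name is hypothetical. The full eigendecomposition costs O(n³), one reason exact spectral methods become expensive on large graphs.

```python
import numpy as np


def graph_lowpass(adj: np.ndarray, signal: np.ndarray, k: int) -> np.ndarray:
    # Combinatorial Laplacian L = D - A of an undirected graph.
    lap = np.diag(adj.sum(axis=1)) - adj
    # eigh returns eigenvalues in ascending order, so the columns of U run from
    # the smoothest (lowest graph frequency) to the most oscillatory.
    _, U = np.linalg.eigh(lap)
    coeffs = U.T @ signal      # forward graph Fourier transform
    coeffs[k:] = 0.0           # keep only the k lowest-frequency components
    return U @ coeffs          # inverse transform back to the nodes


n = 5
adj = np.zeros((n, n))
for i in range(n - 1):         # path graph 0-1-2-3-4
    adj[i, i + 1] = adj[i + 1, i] = 1.0
alternating = np.array([1.0, -1.0, 1.0, -1.0, 1.0])
print(graph_lowpass(adj, alternating, k=1))  # every entry close to the mean, 0.2
```

With k = 1, only the constant eigenvector of the connected graph survives, so the filter returns the signal's mean at every node. With k = n, the signal is reconstructed unchanged.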

The emerging field of geometric deep learning offers a new lens for understanding and modeling complex systems, with potentially large impact across scientific disciplines. In social network analysis, these techniques go beyond traditional node-based approaches to capture the geometry of relationships. This could reveal hidden communities and predict information diffusion more accurately. Drug discovery may benefit from better molecular property prediction and the design of novel compounds, since geometric deep learning can represent and analyze the 3D structures of molecules. Materials science likewise anticipates advances in predicting material properties and discovering materials with tailored characteristics, by modeling the geometric relationships within crystalline structures and complex composites. The promise is not merely incremental improvement but a fundamental rethinking of how data is represented and analyzed in these fields.

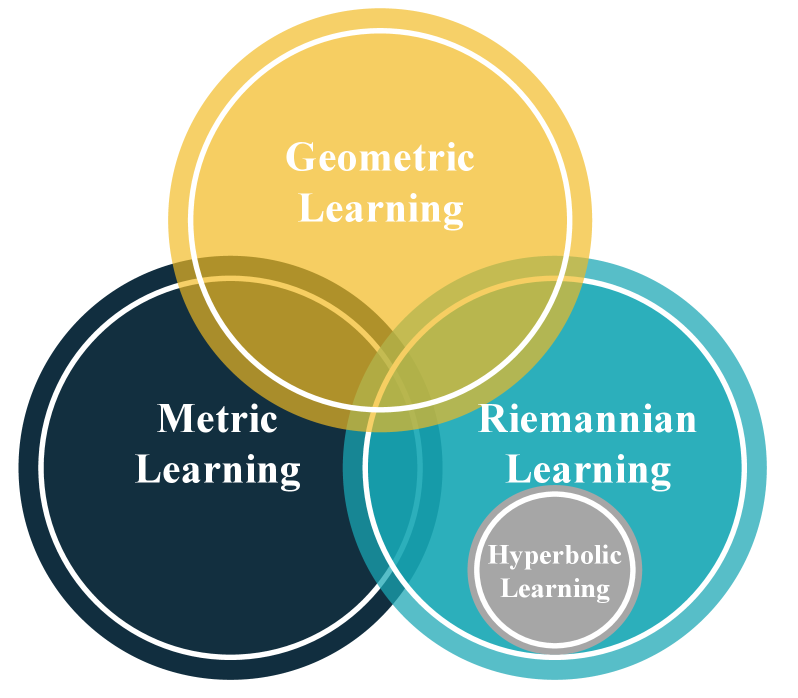

![Different learning paradigms, including $\mathbb{E}[R]$ maximization, optimal control, and inverse reinforcement learning, can be unified under the framework of policy optimization, each distinguished by its objective function and assumptions about the environment.](https://arxiv.org/html/2602.10982v1/figure/LearningParadigm.png)

The exploration within RiemannGL inherently embodies a spirit of challenging established norms. It isn’t simply about applying Riemannian geometry to graph neural networks; it’s a systematic dismantling of traditional Euclidean assumptions in graph representation learning. As Ken Thompson famously stated, “Sometimes it’s the people who can’t read the manual that make the most interesting discoveries.” This sentiment rings true as the paper actively investigates how departing from conventional methods – specifically, by embracing the complexities of curved spaces like hyperbolic space – can unlock superior performance and more robust models. The survey demonstrates that by deliberately ‘breaking the rule’ of Euclidean geometry, researchers can forge pathways to more expressive and powerful graph neural networks, moving beyond limitations previously accepted as inherent to the field.

Beyond Euclidean Nets

The exploration of Riemannian geometry within graph neural networks, as detailed in this work, isn’t merely a mathematical exercise. It’s a tacit admission that the standard tools – Euclidean space, linear algebra – are insufficient for truly representing the complexities inherent in relational data. The field now faces the uncomfortable question of where these manifolds actually lie. Simply embedding graphs into hyperbolic space, or applying curvature as a regularization term, feels like fitting a model to a symptom, not a cause. The next phase demands rigorous methods for discovering the intrinsic geometry of a graph, not imposing one.

A true test will be the development of architectures that aren’t simply Euclidean networks with a geometric veneer. The current reliance on spectral methods, while theoretically elegant, often translates to computational bottlenecks. The path forward likely involves exploring discrete, mesh-based representations that more closely mirror the underlying graph structure and allow for efficient, localized computations. Furthermore, the theoretical benefits of Riemannian learning must translate to demonstrable gains in generalization – a challenge often obscured by benchmark datasets designed for Euclidean thinking.

Ultimately, this isn’t about building ‘better’ graph neural networks; it’s about understanding the fundamental limitations of current approaches. The very notion of a ‘universal’ graph neural network, a ‘foundation model’ for relational data, feels premature. Perhaps the true innovation will lie not in scaling up existing architectures, but in abandoning the assumption that all graphs can be neatly represented within a single, fixed geometric framework.

Original article: https://arxiv.org/pdf/2602.10982.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-12 18:34