Author: Denis Avetisyan

A new study examines how generative AI can realistically be adopted within an energy company, revealing what employees expect from it and the key challenges to adoption.

Qualitative research identifies practical use cases for generative AI in areas such as reporting, forecasting, and data handling within a complex organizational context.

Despite growing interest in using artificial intelligence to improve operational efficiency, practical implementation within established industries remains complex. The study ‘Generative AI Adoption in an Energy Company: Exploring Challenges and Use Cases’ investigates how employees of an energy organization view the integration of generative AI, and finds that they expect support with routine tasks such as reporting, forecasting, and data handling. Its qualitative analysis of sixteen interviews across nine departments points to a preference for incremental adoption that fits existing workflows. How can these insights inform broader strategies for responsible AI implementation across critical infrastructure sectors?

The Weight of Manual Effort in Energy Systems

The energy sector, despite technological advances, remains heavily reliant on manual processes across its value chain. From field data collection and equipment inspection to paperwork-intensive administration and dispatch coordination, much of the work is performed by people rather than automated systems. This dependence introduces substantial inefficiencies: human error is a constant risk, data processing is slow and error-prone, and responses to critical events are often delayed. As a result, operational costs are inflated by labor expenses, rework caused by mistakes, and opportunities lost to slow decision-making, all of which limit the sector’s capacity for optimization and innovation.

The energy sector grapples with a unique level of operational complexity, where data streams from diverse sources – aging infrastructure, geographically dispersed assets, and fluctuating market demands – often overwhelm traditional management systems. These systems, typically reliant on manual data entry, siloed databases, and reactive maintenance schedules, struggle to integrate and interpret this information effectively. Consequently, identifying patterns, predicting failures, and optimizing performance becomes significantly challenging. This inability to efficiently process and utilize data not only increases operational costs and risks but also stifles the development and implementation of innovative solutions, such as predictive maintenance algorithms or smart grid technologies, ultimately hindering progress toward a more sustainable and efficient energy future.

The energy sector’s persistent difficulties, stemming from extensive manual work and complex data management, are driving strong demand for automated and intelligent systems. Such solutions are not simply about replacing human effort; they represent a shift toward optimized workflows and data-driven insight. By applying machine learning and predictive analytics, energy companies can move from reactive to proactive maintenance, reducing downtime and operational costs. The same shift opens room for innovation in grid optimization, resource allocation, and renewable energy integration.

Strategic AI Adoption: Beyond Technological Implementation

Effective Artificial Intelligence (AI) adoption within the energy sector necessitates a combined focus on technological deployment and organizational transformation. Simply implementing AI tools without addressing internal structures and capabilities will likely yield limited results. Successful strategies require parallel investment in data infrastructure, algorithm development, and the cultivation of a data-driven culture. This includes upskilling the workforce to utilize AI-powered insights, adapting workflows to integrate AI outputs, and establishing governance frameworks for responsible AI implementation. Ignoring the organizational component hinders the ability to effectively leverage technological advancements and realize the full potential of AI initiatives.

Successful integration of Artificial Intelligence requires significant attention to organizational readiness. This necessitates the development of data literacy across all relevant departments, enabling personnel to understand, interpret, and utilize data-driven insights generated by AI systems. Beyond technical skills, fostering a willingness to adopt new workflows and processes is critical; AI implementation frequently demands changes to existing operational structures and roles. Organizations must prioritize training initiatives, promote cross-functional collaboration, and establish clear communication channels to facilitate this cultural shift and ensure broad acceptance of AI-driven solutions.

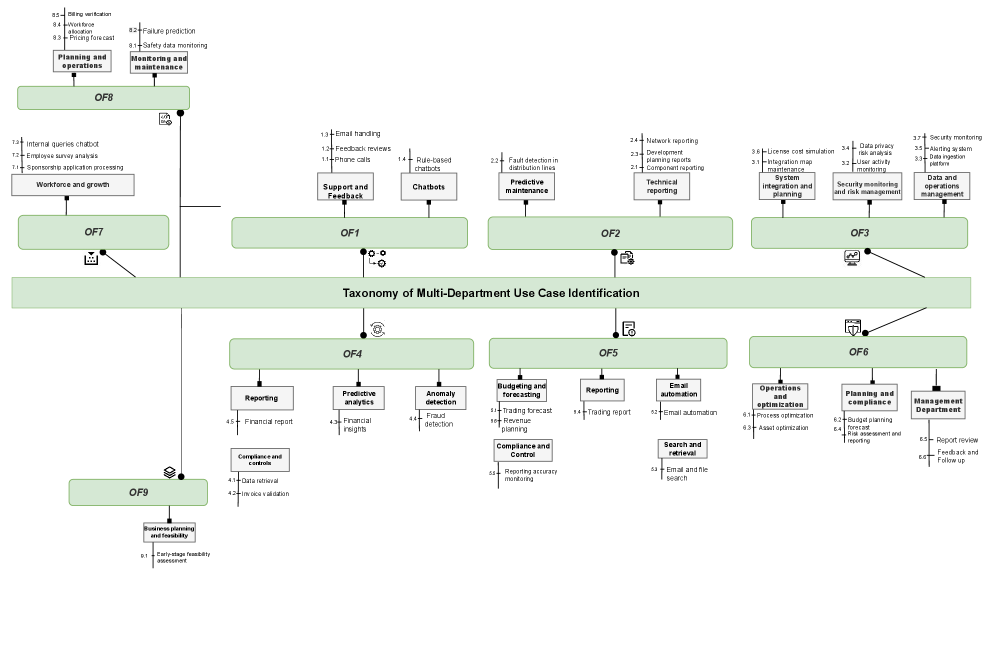

A comprehensive assessment within a large energy organization identified 41 distinct use cases suitable for AI. These candidates were then prioritized on three criteria: business importance, ease of implementation, and expected organizational value. The prioritization highlighted applications such as Automated Reporting, which streamlines data processing and reduces manual effort; Predictive Maintenance, which anticipates equipment failures to minimize downtime; and Forecasting, which supports more accurate predictions of energy demand and resource allocation. Implementing these prioritized use cases is expected to improve operational efficiency across the organization.
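The article does not say how the three criteria were combined into a ranking. As a minimal sketch, assuming a simple weighted sum on a 1 to 5 scale, the prioritization step could look like the following. The weights, the scale, and the scores for the placeholder use cases A to C are all invented for illustration and are not taken from the study.

```python
from dataclasses import dataclass

# Hypothetical integer weights (out of 10) for the three criteria named in the article.
# The study does not publish a weighting scheme; these values are illustrative only.
WEIGHTS = {"importance": 4, "ease": 3, "value": 3}


@dataclass
class UseCase:
    name: str
    importance: int  # business importance, assumed 1-5 scale
    ease: int        # ease of implementation, assumed 1-5 scale
    value: int       # organizational value, assumed 1-5 scale


def score(uc: UseCase) -> int:
    """Weighted sum of the three prioritization criteria."""
    return (WEIGHTS["importance"] * uc.importance
            + WEIGHTS["ease"] * uc.ease
            + WEIGHTS["value"] * uc.value)


def prioritize(cases: list[UseCase]) -> list[UseCase]:
    """Return use cases from highest to lowest score (ties keep input order)."""
    return sorted(cases, key=score, reverse=True)


# Placeholder use cases with invented scores.
cases = [
    UseCase("A", importance=4, ease=5, value=4),
    UseCase("B", importance=5, ease=2, value=5),
    UseCase("C", importance=5, ease=3, value=5),
]

for uc in prioritize(cases):
    print(uc.name, score(uc))  # prints C 44, then A 43, then B 41
```

A weighted sum keeps the trade-off explicit: raising the weight on ease, for instance, favors quick wins over high-value applications that are harder to build.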

Generative AI: Unlocking Intelligence Through Contextual Understanding

Generative AI, built on Large Language Models (LLMs), represents a significant advance in automated knowledge processing. These models are trained on extensive datasets, which lets them identify patterns, interpret context, and generate new outputs (text, code, and other data formats) in response to prompts. This makes it possible to extract insights from unstructured data and to tackle complex problems without task-specific programming. LLMs work by modeling language probabilistically: given the input and their learned parameters, they estimate a probability distribution over the next token and produce output one token at a time. This mechanism underlies tasks such as content creation, summarization, translation, and question answering.
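To make the next-token idea concrete, here is a deliberately tiny sketch. It replaces the neural network with a hypothetical lookup table of scores (logits) that depends only on the previous token, converts those scores into probabilities with a softmax, and decodes greedily by always picking the most probable token. A real LLM conditions on the whole context and often samples instead of decoding greedily; the table and vocabulary here are invented for illustration.

```python
import math

# Hypothetical toy "model": next-token logits conditioned only on the previous token.
TOY_LOGITS = {
    "<s>": {"monthly": 2.0, "daily": 1.0},
    "monthly": {"report": 3.0, "forecast": 2.5},
    "daily": {"report": 1.0, "forecast": 2.0},
    "report": {"attached": 2.0, "</s>": 1.5},
    "forecast": {"</s>": 3.0},
    "attached": {"</s>": 4.0},
}


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into a probability distribution over tokens."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def generate(max_tokens: int = 10) -> list[str]:
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        probs = softmax(TOY_LOGITS[tokens[-1]])
        nxt = max(probs, key=probs.get)
        if nxt == "</s>":  # end-of-sequence token stops generation
            break
        tokens.append(nxt)
    return tokens[1:]


print(generate())  # ['monthly', 'report', 'attached']
```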

Retrieval-Augmented Generation (RAG) improves the factual grounding and relevance of generative AI outputs. Rather than relying solely on parameters learned during training, RAG adds an information retrieval step: before generating a response, the system finds relevant documents or data snippets in a knowledge source and passes them to the model as context. The model can then base its answer on that source material, which reduces (but does not eliminate) hallucinations and inaccurate statements. The approach is particularly valuable in operational settings where decisions depend on current, specific information.
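The retrieve-then-generate flow can be sketched in plain Python. As an assumption for illustration, retrieval here uses bag-of-words cosine similarity rather than the learned embeddings a production system would more likely use, and the generation step is left out: the sketch stops at the augmented prompt that would be passed to whichever model the organization uses. The documents are invented examples.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Place the retrieved passages in the prompt so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If it is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Invented knowledge-base snippets for illustration.
DOCS = [
    "Transformer T-12 was inspected on 3 March and showed elevated oil temperature.",
    "The quarterly emissions report is due at the end of each quarter.",
    "The wind farm output forecast uses hourly weather data.",
]

print(build_prompt("When was transformer T-12 inspected?", DOCS, k=1))
```

Instructing the model to answer only from the supplied context, and to say when that context is insufficient, is what ties the output to the retrieved material; it lowers the risk of hallucination without removing it.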

Data integration is a critical enabler for generative AI applications: research indicates that access to comprehensive, reliable information sources directly affects performance. For example, an email response generation system using integrated data achieved an accuracy of 89%, measured with BERTScore, a metric that scores the semantic similarity of generated text to reference texts (here, a dataset of established email responses). The result suggests a link between robust data integration and accurate, contextually relevant generative AI output.
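BERTScore compares texts token by token rather than by exact word overlap. Each token of the candidate and the reference is embedded with a contextual model such as BERT; every reference token is greedily matched to its most similar candidate token (recall), every candidate token to its most similar reference token (precision), and the two are combined into an F1 score. The sketch below implements only that matching step on small hand-written vectors standing in for real model embeddings. It omits the metric's optional IDF weighting and baseline rescaling, and it is not the evaluation code used in the study.

```python
import math

Vector = list[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def bertscore_f1(candidate: list[Vector], reference: list[Vector]) -> float:
    """Greedy-matching F1 over token embeddings (no IDF weighting, no rescaling)."""
    cand = [normalize(v) for v in candidate]
    ref = [normalize(v) for v in reference]
    # Recall: each reference token matched to its most similar candidate token.
    recall = sum(max(dot(r, c) for c in cand) for r in ref) / len(ref)
    # Precision: each candidate token matched to its most similar reference token.
    precision = sum(max(dot(c, r) for r in ref) for c in cand) / len(cand)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy 2-D "embeddings": the candidate covers only one of the two reference tokens.
reference = [[1.0, 0.0], [0.0, 1.0]]
candidate = [[1.0, 0.0]]
print(round(bertscore_f1(candidate, reference), 4))  # 0.6667
```

In practice the embeddings come from a pretrained model (the `bert-score` Python package wraps this), so a score such as the 89% reported here reflects semantic similarity to the reference emails rather than a count of correct answers.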

Collaboration and Compliance: The Human Dimension of AI Impact

Research indicates that realizing the full potential of artificial intelligence within an organization demands a deliberate dismantling of traditional departmental boundaries. Semi-structured interviews consistently revealed that successful AI integration isn’t solely a technological undertaking, but a profoundly collaborative one. Departments – such as data science, IT, legal, and business operations – must actively share knowledge, resources, and perspectives throughout the entire AI lifecycle, from initial planning and data acquisition to model deployment and ongoing monitoring. This cross-functional synergy fosters a shared understanding of both the opportunities and challenges presented by AI, enabling more effective problem-solving, streamlined workflows, and ultimately, a greater return on investment. Without this level of interconnectedness, organizations risk creating AI solutions that are misaligned with business needs, ethically questionable, or simply fail to gain widespread adoption.

Analysis of qualitative data consistently demonstrated that robust compliance frameworks are not merely a procedural requirement, but a fundamental pillar of responsible AI deployment. Interviewees emphasized that proactively addressing ethical considerations – including data privacy, algorithmic bias, and transparency – fosters trust and mitigates potential harms. These frameworks, encompassing clearly defined guidelines and ongoing monitoring, enable organizations to navigate the complex landscape of AI governance and ensure alignment with both regulatory expectations and societal values. The study revealed that a commitment to compliance isn’t simply about avoiding penalties; it’s integral to building sustainable AI solutions that are both innovative and ethically sound, ultimately bolstering public confidence and driving long-term adoption.

The emergence of agentic AI – systems capable of autonomous action and decision-making – presents both substantial opportunity and considerable risk, necessitating proactive governance and control frameworks. Research indicates that while these AI systems promise increased efficiency and innovation, their independent operation demands careful consideration of potential unintended consequences. Establishing clear parameters for agentic AI, including defined objectives, ethical boundaries, and robust monitoring protocols, is crucial to preventing unforeseen errors or harmful outcomes. Furthermore, mechanisms for human oversight and intervention remain essential, ensuring accountability and the ability to recalibrate agentic systems when necessary, thereby maximizing benefits while minimizing potential hazards associated with increasingly autonomous artificial intelligence.
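One common way to keep a human in the loop is an approval gate: the agent proposes actions, low-risk actions proceed automatically, and anything at or above a risk threshold waits for a reviewer, with every decision logged for accountability. The sketch below assumes such a design; the `Action` type, the risk scores, the 0.5 threshold, and the `approve` callback are hypothetical and do not come from the study.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy: actions with risk >= this value need human sign-off.
RISK_THRESHOLD = 0.5


@dataclass
class Action:
    description: str
    risk: float  # assumed risk estimate in [0, 1], e.g. from a rules engine


def run_with_oversight(actions: list[Action],
                       approve: Callable[[Action], bool]) -> list[str]:
    """Execute low-risk actions and route high-risk ones to a human reviewer.

    Returns an audit log so every decision can be traced afterwards.
    """
    audit_log = []
    for action in actions:
        needs_review = action.risk >= RISK_THRESHOLD
        if needs_review and not approve(action):
            audit_log.append(f"BLOCKED (reviewer): {action.description}")
            continue
        status = "EXECUTED (approved)" if needs_review else "EXECUTED (auto)"
        audit_log.append(f"{status}: {action.description}")
    return audit_log


proposed = [
    Action("Draft weekly outage summary", risk=0.1),
    Action("Reschedule field crew dispatch", risk=0.7),
]

# Stand-in for a human reviewer who rejects every escalated action.
for line in run_with_oversight(proposed, approve=lambda a: False):
    print(line)
```

Replacing the lambda with a real review queue, and tuning the threshold, are the two controls that set how autonomous the agent is in practice.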

This exploration of generative AI adoption shows that a pragmatic approach is key. Employees expect help with routine tasks (reporting, forecasting, and data handling), which suggests a desire to augment existing workflows rather than replace them. This resonates with Vinton Cerf’s observation: “The internet is a powerful tool for communication and collaboration, but it’s also a tool for distraction and misinformation.” The energy sector, like any other, must navigate this duality. Abstractions age, principles don’t. Incremental adoption that aligns AI with established processes delivers value without overwhelming systems or personnel. Every complexity needs an alibi; simplicity in implementation is paramount.

The Road Ahead

The pursuit of generative artificial intelligence within established organizations reveals less a technological hurdle and more a fundamental problem of expectation. This work demonstrates a preference not for revolutionary transformation, but for the automation of existing tedium. The observed desire for AI to handle reporting, forecasting, and data wrangling isn’t a vision of the future; it’s a tacit admission of present inefficiencies. A system that requires grand pronouncements of its potential has already failed to justify its cost.

Further research should resist the temptation to showcase what AI can do, and should instead examine rigorously what existing processes reveal about organizational friction. The true measure of success will not be the novelty of an application, but the degree to which it becomes invisible, integrating seamlessly into established workflows and ultimately simplifying them. The focus should shift from building ‘intelligent agents’ to dismantling unnecessary complexity.

Ultimately, the challenge isn’t teaching machines to think like humans, but convincing humans to tolerate less thinking. Clarity, after all, is courtesy, and a truly effective system requires no instruction manual. The most profound innovation may well be the one that simply vanishes, leaving only a quieter, more efficient operation in its wake.

Original article: https://arxiv.org/pdf/2602.09846.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-12 03:30